1duo / Awesome Ai Infrastructures


📙 List of real-world AI infrastructures (a.k.a. machine learning systems, pipelines, workflows, and platforms) for machine/deep learning training and/or inference in production 🔌. This usually includes the technology stack necessary to enable machine learning algorithms to run in production environments in a stable, scalable and reliable way. The list is for my own learning purposes, but feel free to contribute / star / fork / pull request. Any recommendations and suggestions are welcome 🎉.

Introduction

This list contains some popular actively-maintained AI infrastructures that focus on one or more of the following topics:

- Architecture of end-to-end machine learning training pipelines.

- Inference at scale in production on Cloud ☁️ or on end devices 📱.

- Compiler and optimization stacks for deployments on a variety of devices.

- Novel ideas of efficient large-scale distributed training.

The entries are in no specific order. This list cares more about the overall architectures of AI solutions in production than about individual machine/deep learning training or inference frameworks. My learning goal is to understand the workflows and principles of building (large-scale) systems that enable machine learning in production.

Platforms

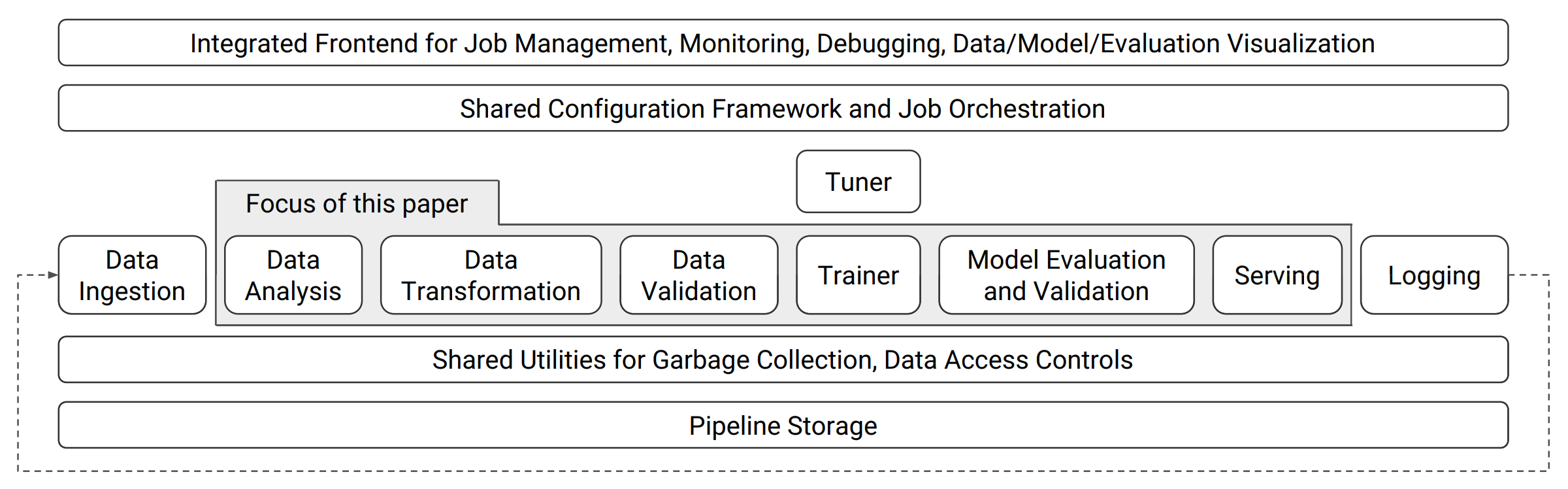

TFX - TensorFlow Extended (Google)

TensorFlow Extended (TFX) is a TensorFlow-based general-purpose machine learning platform implemented at Google.

Architecture:

Components:

- TensorFlow Data Validation: a library for exploring and validating machine learning data.

- TensorFlow Transform: performs full-pass analyze phases over data to create transformation graphs that are consistently applied during training and serving.

- TensorFlow Model Analysis: libraries and visualization components to compute full-pass and sliced model metrics over large datasets, and analyze them in a notebook.

- TensorFlow Serving: a flexible, high-performance serving system for machine learning models, designed for production environments.
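The "full-pass analyze, then apply consistently" idea behind the transformation component can be sketched in plain Python. This is only an illustration under stated assumptions, not the TFX API: the names `analyze` and `make_transform` are hypothetical, and a closure stands in for a transformation graph.

```python
import math

def analyze(values):
    """Full pass over the training data: compute the statistics the transform needs."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    return {"mean": mean, "std": math.sqrt(variance)}

def make_transform(stats):
    """Freeze the analyzed statistics into a reusable function (a stand-in for a transform graph)."""
    mean, std = stats["mean"], stats["std"]

    def transform(value):
        # Standardize with the frozen training statistics; guard against zero variance.
        return (value - mean) / std if std > 0 else 0.0

    return transform

training_values = [2.0, 4.0, 6.0, 8.0]
scale = make_transform(analyze(training_values))

train_features = [scale(v) for v in training_values]  # applied at training time
serving_feature = scale(5.0)                          # the same function applied at serving time
```

Because the serving path reuses the function built from the training statistics, the two code paths cannot drift apart, which is the consistency property the component description refers to.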

Kubeflow - The Machine Learning Toolkit for Kubernetes (Google)

The Kubeflow project is dedicated to making deployments of machine learning (ML) workflows on Kubernetes simple, portable and scalable. Our goal is not to recreate other services, but to provide a straightforward way to deploy best-of-breed open-source systems for ML to diverse infrastructures. Anywhere you are running Kubernetes, you should be able to run Kubeflow.

Kubeflow started as an open sourcing of the way Google ran TensorFlow internally, based on a pipeline called TensorFlow Extended.

| homepage | github | documentation | blog | talk | slides |

Components:

- Notebooks: a JupyterHub to create and manage interactive Jupyter notebooks.

- TensorFlow Model Training: a TensorFlow Training Controller that can be configured to use either CPUs or GPUs and be dynamically adjusted to the size of a cluster with a single setting.

- Model Serving: a TensorFlow Serving container to export trained TensorFlow models to Kubernetes. Integrated with Seldon Core, an open source platform for deploying machine learning models on Kubernetes, and NVIDIA TensorRT Inference Server for maximized GPU utilization when deploying ML/DL models at scale.

- Multi-Framework: includes TensorFlow, PyTorch, MXNet, Chainer, and more.

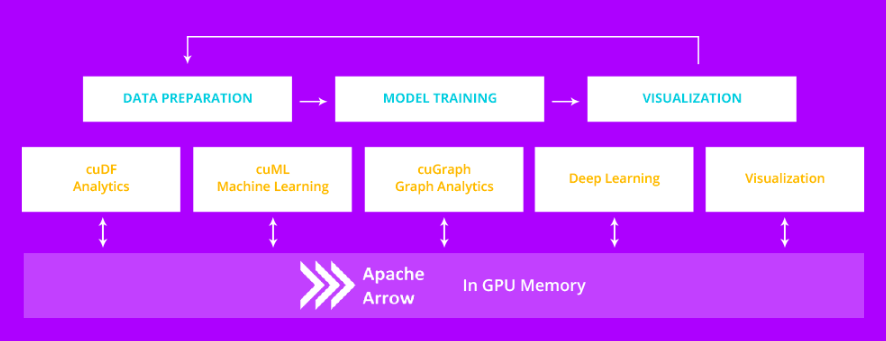

RAPIDS - Open GPU Data Science (NVIDIA)

The RAPIDS suite of open source software libraries gives you the freedom to execute end-to-end data science and analytics pipelines entirely on GPUs. It relies on NVIDIA® CUDA® primitives for low-level compute optimization, but exposes that GPU parallelism and high-bandwidth memory speed through user-friendly Python interfaces.

RAPIDS is the result of contributions from the machine learning community and GPU Open Analytics Initiative (GOAI) partners, such as Anaconda, BlazingDB, Gunrock, etc.

Architecture:

Components:

- Apache Arrow: a columnar, in-memory data structure that delivers efficient and fast data interchange with flexibility to support complex data models.

- cuDF: a DataFrame manipulation library based on Apache Arrow that accelerates loading, filtering, and manipulation of data for model training data preparation. The Python bindings of the core-accelerated CUDA DataFrame manipulation primitives mirror the pandas interface for seamless onboarding of pandas users.

- cuML: a collection of GPU-accelerated machine learning libraries that will provide GPU versions of all machine learning algorithms available in scikit-learn.

- cuGRAPH: a framework and collection of graph analytics libraries that seamlessly integrate into the RAPIDS data science platform.

- Deep Learning Libraries: data stored in Apache Arrow can be seamlessly pushed to deep learning frameworks that accept array_interface such as PyTorch and Chainer.

- Visualization Libraries: RAPIDS will include tightly integrated data visualization libraries based on Apache Arrow. Native GPU in-memory data format provides high-performance, high-FPS data visualization, even with very large datasets.

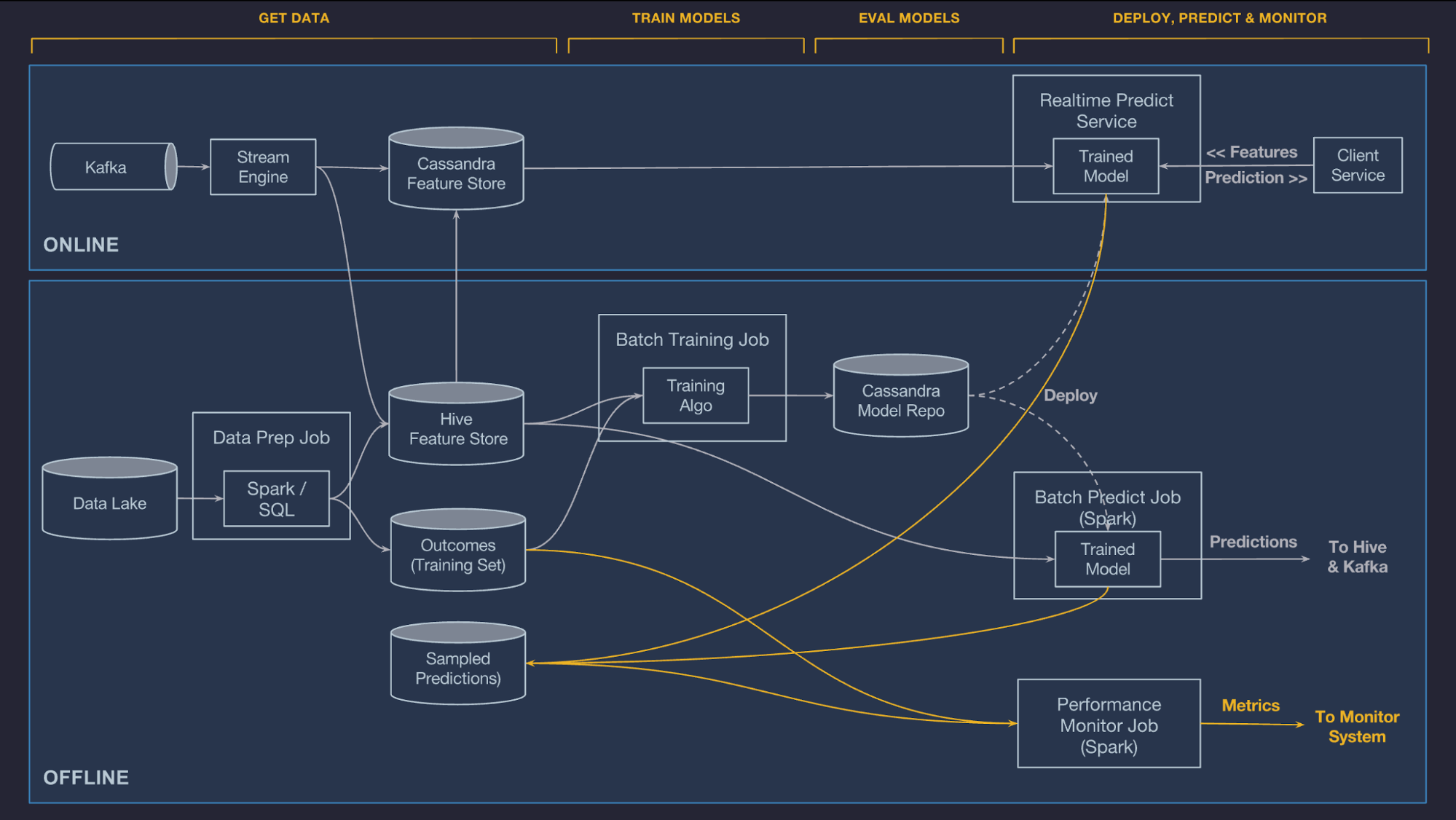

Michelangelo - Uber's Machine Learning Platform (Uber)

Michelangelo is an internal ML-as-a-service platform that democratizes machine learning and makes scaling AI to meet the needs of business as easy as requesting a ride.

Michelangelo consists of a mix of open source systems and components built in-house. The primary open source components used are HDFS, Spark, Samza, Cassandra, MLlib, XGBoost, and TensorFlow.

Architecture:

Components:

- Manage data

- Train models

- Evaluate models

- Deploy models

- Make predictions

- Monitor predictions

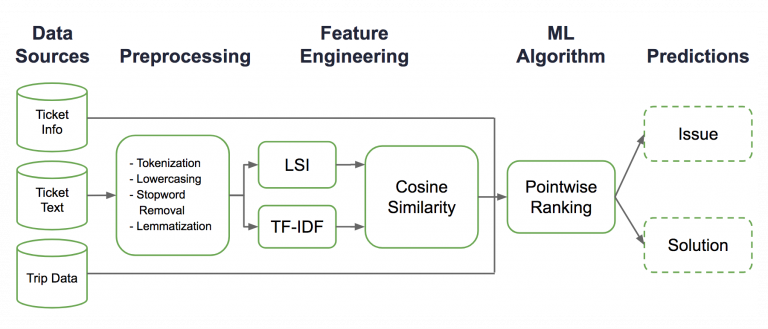

COTA: Improving Uber Customer Care with NLP & Machine Learning (Uber)

COTA, our Customer Obsession Ticket Assistant, is a tool that uses machine learning and natural language processing (NLP) techniques to help agents deliver better customer support. It leverages our Michelangelo machine learning-as-a-service platform on top of our customer support platform.

| blog |

Architecture:

Components:

- Preprocessing

- Topic modeling

- Feature engineering

- Pointwise ranking algorithm

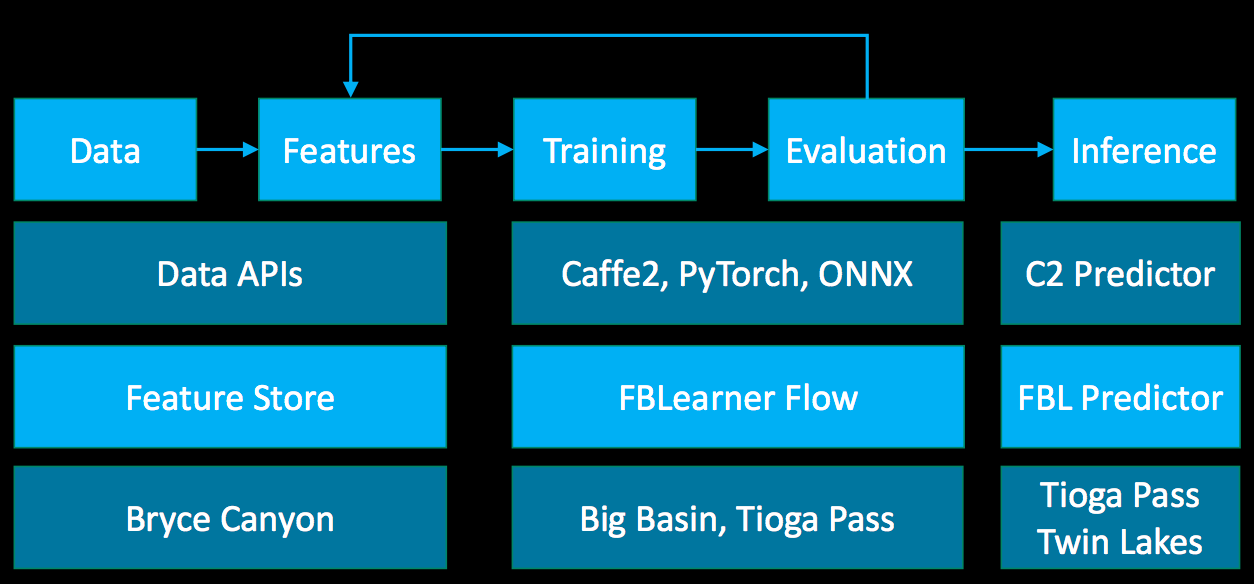

FBLearner (Facebook)

FBLearner Flow is capable of easily reusing algorithms in different products, scaling to run thousands of simultaneous custom experiments, and managing experiments with ease.

Architecture:

Components:

- Experimentation Management UI

- Launching Workflows

- Visualizing and Comparing Outputs

- Managing Experiments

- Machine Learning Library

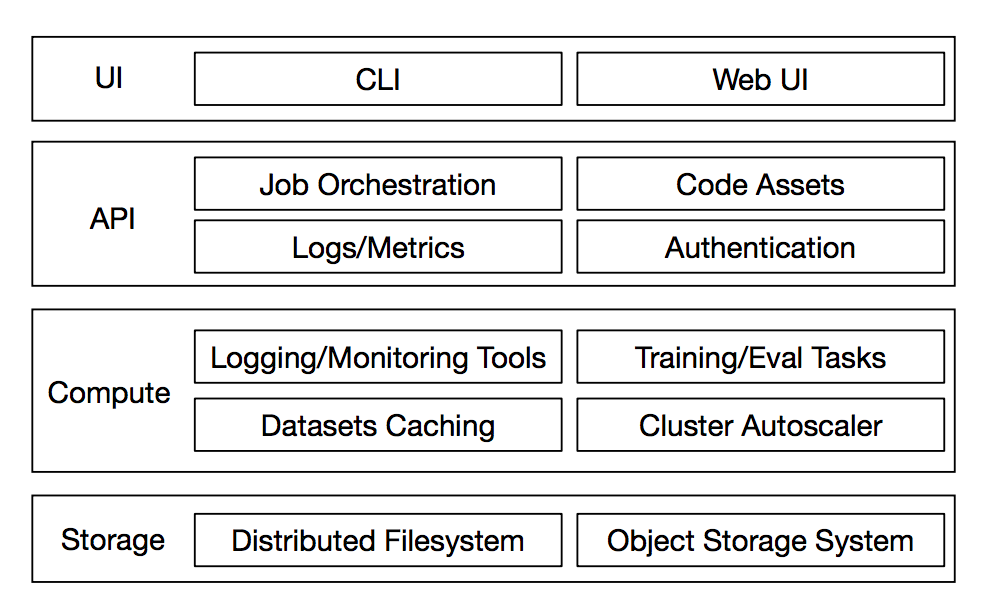

Alchemist (Apple)

Alchemist is an internal service built at Apple from the ground up for easy, fast, and scalable distributed training.

| paper |

Architecture:

Components:

- UI Layer: command line interface (CLI) and a web UI.

- API Layer: exposes services to users, which allows them to upload and browse the code assets, submit distributed jobs, and query the logs and metrics.

- Compute Layer: contains the compute-intensive workloads; in particular, the training and evaluation tasks orchestrated by the job scheduler.

- Storage Layer: contains the distributed file systems and object storage systems that maintain the training and evaluation datasets, the trained model artifacts, and the code assets.

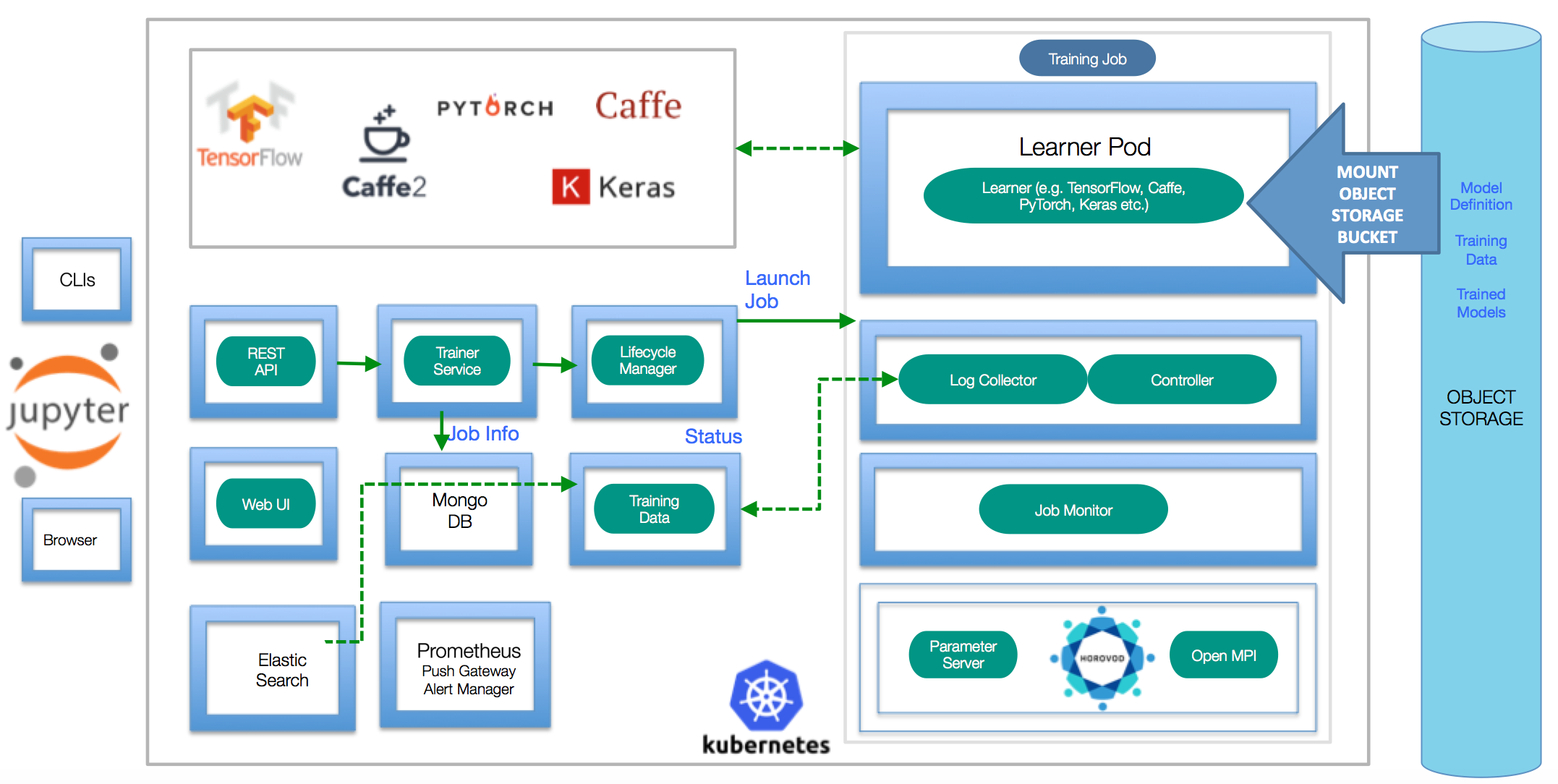

FfDL - Fabric for Deep Learning (IBM)

Deep learning frameworks such as TensorFlow, PyTorch, Caffe, Torch, Theano, and MXNet have contributed to the popularity of deep learning by reducing the effort and skills needed to design, train, and use deep learning models. Fabric for Deep Learning (FfDL, pronounced “fiddle”) provides a consistent way to run these deep-learning frameworks as a service on Kubernetes.

Architecture:

Components:

- REST API: the REST API microservice handles REST-level HTTP requests and acts as proxy to the lower-level gRPC Trainer service.

- Trainer: the Trainer service admits training job requests, persisting metadata and model input configuration in a database (MongoDB).

- Lifecycle Manager and learner pods: The Lifecycle Manager (LCM) deploys training jobs arriving from the Trainer, halting (pausing) and terminating training jobs. LCM uses the Kubernetes cluster manager to deploy containerized training jobs.

- Training Data Service: The Training Data Service (TDS) provides short-lived storage and retrieval for logs and evaluation data from a Deep Learning training job.

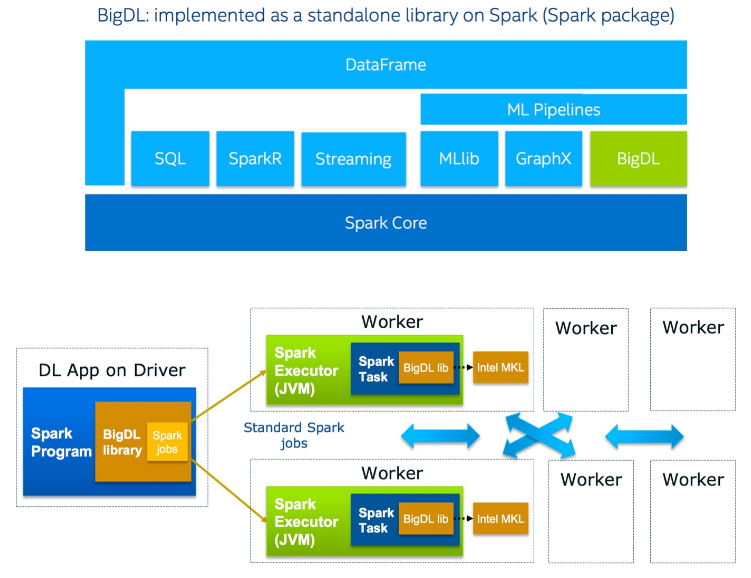

BigDL - Distributed Deep Learning Library for Apache Spark (Intel)

BigDL is a distributed deep learning library for Apache Spark; with BigDL, users can write their deep learning applications as standard Spark programs, which can directly run on top of existing Spark or Hadoop clusters. To make it easy to build Spark and BigDL applications, a high-level Analytics Zoo is provided for end-to-end analytics + AI pipelines.

Architecture:

Components:

- Rich deep learning support. Modeled after Torch, BigDL provides comprehensive support for deep learning, including numeric computing (via Tensor) and high level neural networks; in addition, users can load pre-trained Caffe or Torch models into Spark programs using BigDL.

- Extremely high performance. To achieve high performance, BigDL uses Intel MKL and multi-threaded programming in each Spark task. Consequently, it is orders of magnitude faster than out-of-box open source Caffe, Torch or TensorFlow on a single-node Xeon (i.e., comparable with mainstream GPU).

- Efficiently scale-out. BigDL can efficiently scale out to perform data analytics at "Big Data scale", by leveraging Apache Spark (a lightning fast distributed data processing framework), as well as efficient implementations of synchronous SGD and all-reduce communications on Spark.
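To make "synchronous SGD with all-reduce" concrete, here is a minimal single-process sketch. It is not BigDL or Spark code: the worker shards, the one-parameter model `y = w * x`, and the helper names are all assumptions for illustration, and the all-reduce is simulated by averaging a Python list.

```python
def local_gradient(w, shard):
    """Gradient of mean squared error for the model y = w * x on one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Stand-in for all-reduce: every worker ends up holding the same averaged value."""
    mean = sum(values) / len(values)
    return [mean] * len(values)

def sync_sgd(shards, w=0.0, lr=0.05, steps=200):
    for _ in range(steps):
        # On a real cluster each worker computes its gradient in parallel.
        grads = [local_gradient(w, shard) for shard in shards]
        # All workers wait for the reduced gradient, then apply the identical update.
        w -= lr * all_reduce_mean(grads)[0]
    return w

# Two equally sized shards drawn from y = 3 * x.
shards = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0), (4.0, 12.0)]]
w = sync_sgd(shards)
```

The key property is that every step uses gradients computed against the same weights, so all replicas stay identical; the cost is that each step waits for the slowest worker.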

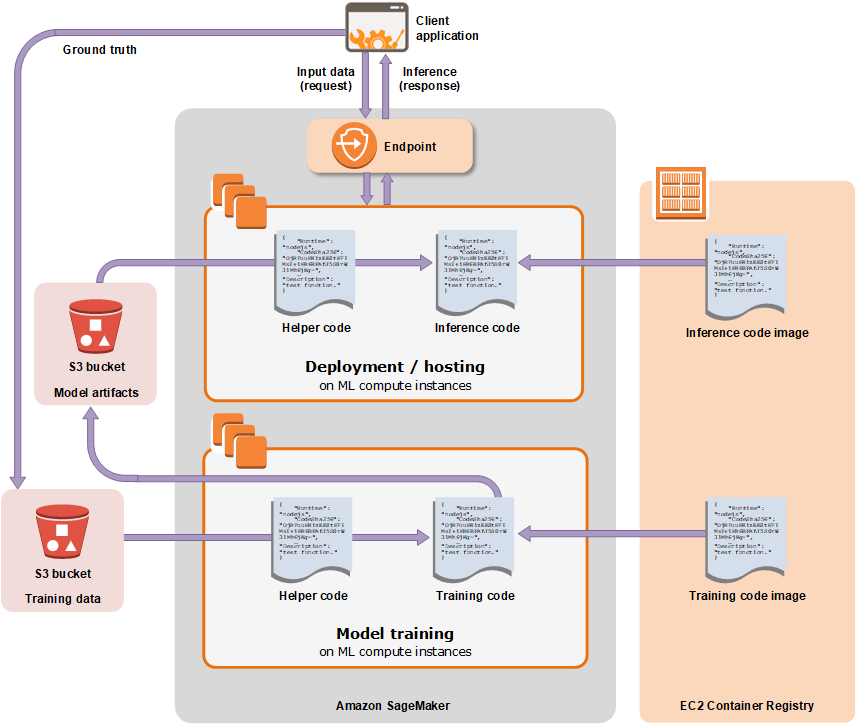

SageMaker (Amazon Web Services)

Amazon SageMaker is a fully-managed platform that enables developers and data scientists to quickly and easily build, train, and deploy machine learning models at any scale. Amazon SageMaker removes all the barriers that typically slow down developers who want to use machine learning.

Architecture:

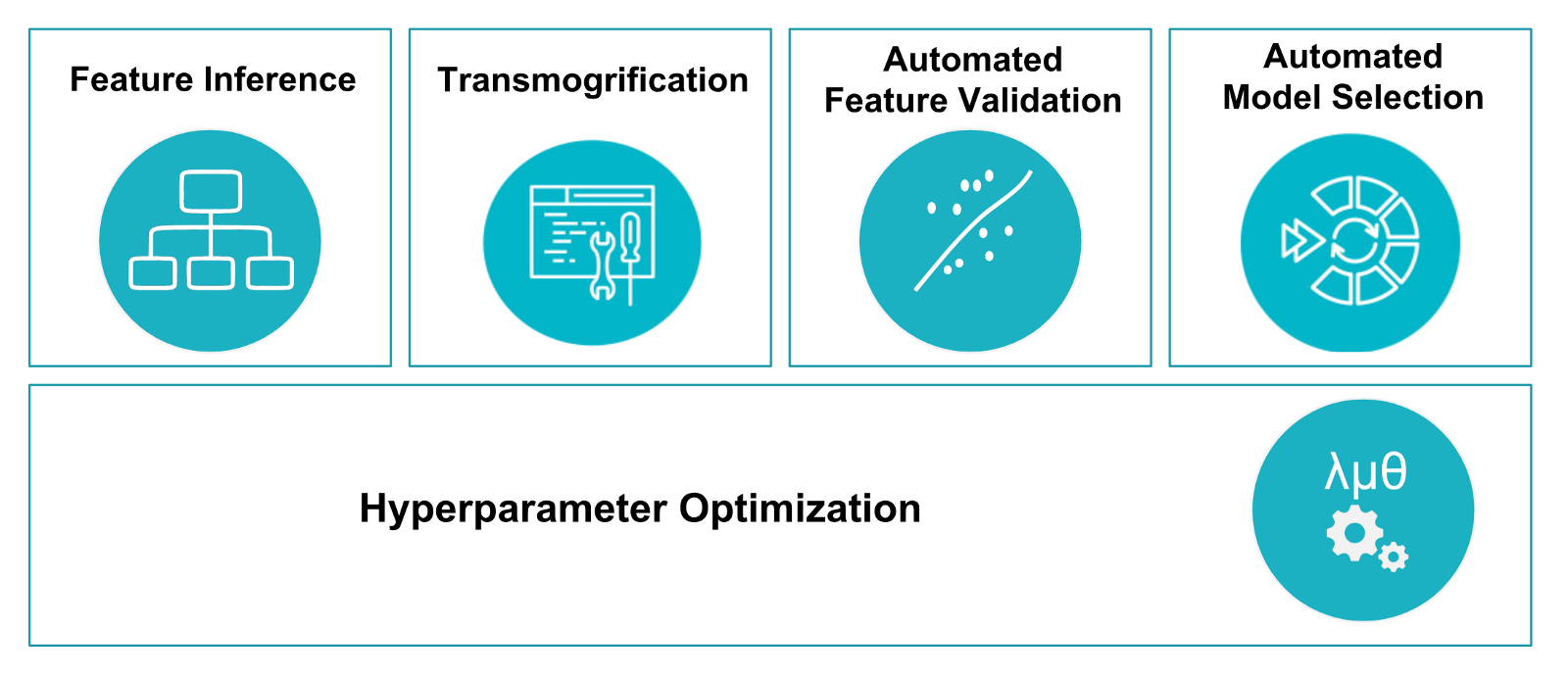

TransmogrifAI (Salesforce)

TransmogrifAI (pronounced trăns-mŏgˈrə-fī) is an AutoML library written in Scala that runs on top of Spark. It was developed with a focus on enhancing machine learning developer productivity through machine learning automation, and an API that enforces compile-time type-safety, modularity and reuse.

| homepage | github | documentation | blog |

Architecture:

Components:

- Build production ready machine learning applications in hours, not months.

- Build machine learning models.

- Build modular, reusable, strongly typed machine learning workflows.

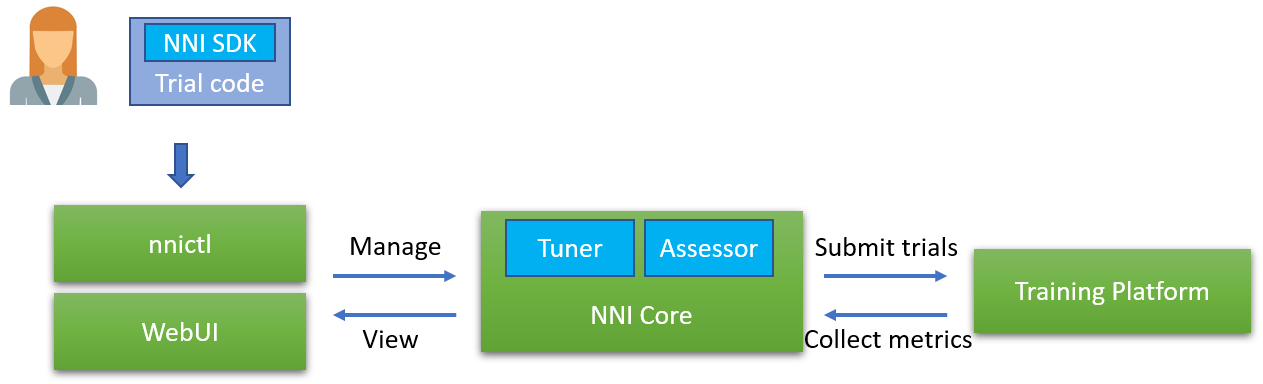

NNI - Neural Network Intelligence (Microsoft)

NNI (Neural Network Intelligence) is a toolkit to help users run automated machine learning (AutoML) experiments. The tool dispatches and runs trial jobs generated by tuning algorithms to search the best neural architecture and/or hyper-parameters in different environments like local machine, remote servers and cloud.

Architecture:

Components:

- Experiment: An experiment is one task, for example, finding the best hyperparameters of a model or finding the best neural network architecture. It consists of trials and AutoML algorithms.

- Search Space: The feasible region for tuning the model, for example, the value range of each hyperparameter.

- Configuration: A configuration is an instance from the search space, that is, each hyperparameter has a specific value.

- Trial: A trial is an individual attempt at applying a new configuration (e.g., a set of hyperparameter values, a specific neural architecture). Trial code should be able to run with the provided configuration.

- Tuner: A tuner is an AutoML algorithm, which generates a new configuration for the next try. A new trial will run with this configuration.

- Assessor: An assessor analyzes a trial’s intermediate results (e.g., periodically evaluated accuracy on a test dataset) to tell whether the trial can be stopped early.

- Training Platform: Where trials are executed. Depending on your experiment’s configuration, it could be your local machine, remote servers, or a large-scale training platform (e.g., OpenPAI, Kubernetes).
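The roles listed above fit together roughly as in the following sketch. It does not use the NNI API: the random-search tuner, the threshold-based assessor, and the toy scoring formula inside `trial` are all hypothetical stand-ins chosen so the loop can run locally.

```python
import random

# Search space: the allowed values for each hyperparameter.
search_space = {"lr": [0.001, 0.01, 0.1], "hidden_units": [32, 64, 128]}

def tuner(space, rng):
    """Random-search tuner: sample one configuration from the search space."""
    return {name: rng.choice(choices) for name, choices in space.items()}

def assessor(intermediate_results, threshold=0.2):
    """Return True when a trial looks unpromising and should be stopped early."""
    return len(intermediate_results) >= 2 and intermediate_results[-1] < threshold

def trial(config, epochs=5):
    """Hypothetical trial: a toy score stands in for real training and evaluation."""
    history = []
    for epoch in range(1, epochs + 1):
        score = (epoch / epochs) * (1.0 - abs(config["lr"] - 0.01) * 5) * (config["hidden_units"] / 128)
        history.append(score)
        if assessor(history):
            break  # early stop requested by the assessor
    return history[-1]

def run_experiment(num_trials=10, seed=0):
    """Experiment: repeatedly ask the tuner for a configuration and run a trial with it."""
    rng = random.Random(seed)
    best_config, best_score = None, float("-inf")
    for _ in range(num_trials):
        config = tuner(search_space, rng)
        score = trial(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score

best_config, best_score = run_experiment()
```

In this sketch the training platform is simply the local Python process; swapping it for remote servers or a cluster would change where `trial` runs, not the shape of the loop.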

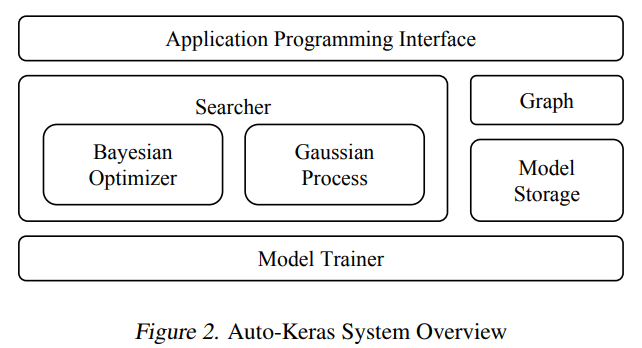

Auto-Keras

Auto-Keras is an open source software library for automated machine learning (AutoML). It is developed by DATA Lab at Texas A&M University and community contributors. The ultimate goal of AutoML is to provide easily accessible deep learning tools to domain experts with limited data science or machine learning background. Auto-Keras provides functions to automatically search for architecture and hyperparameters of deep learning models.

Architecture:

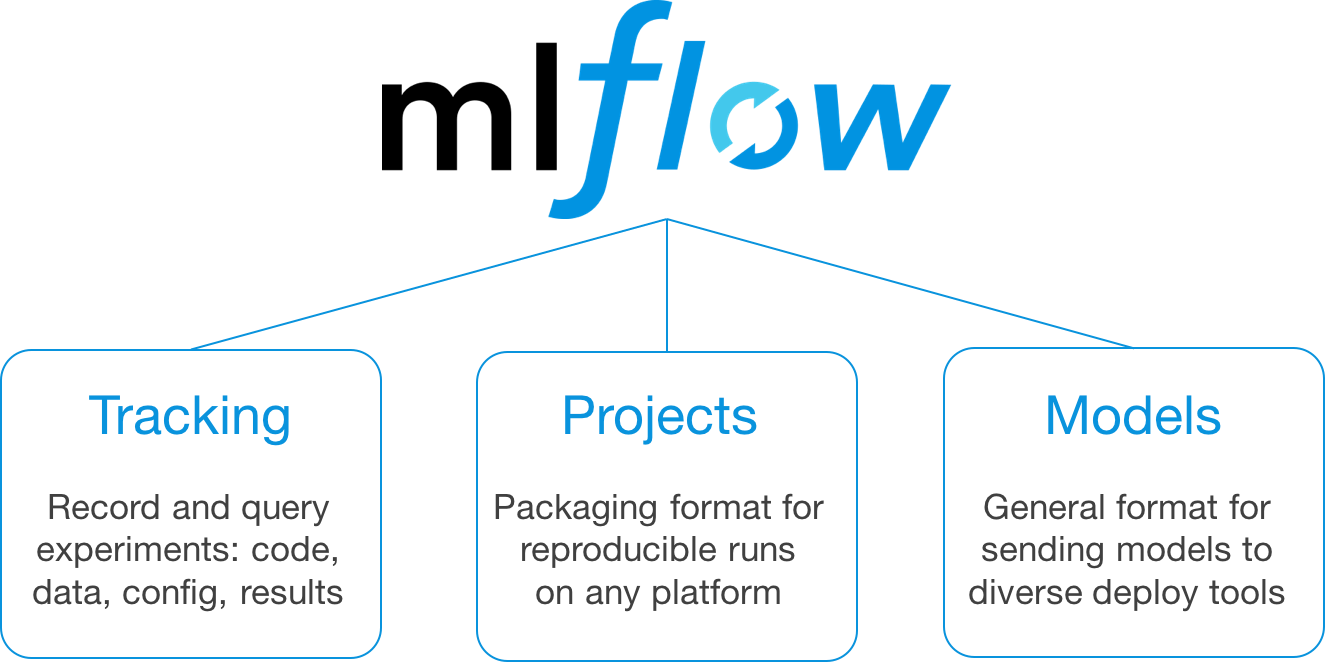

MLflow (Databricks)

MLflow is an open source platform for managing the end-to-end machine learning lifecycle.

| homepage | github | documentation | blog |

Architecture:

Components:

- MLflow Tracking: tracking experiments to record and compare parameters and results.

- MLflow Projects: packaging ML code in a reusable, reproducible form in order to share with other data scientists or transfer to production.

- MLflow Models: managing and deploying models from a variety of ML libraries to a variety of model serving and inference platforms.

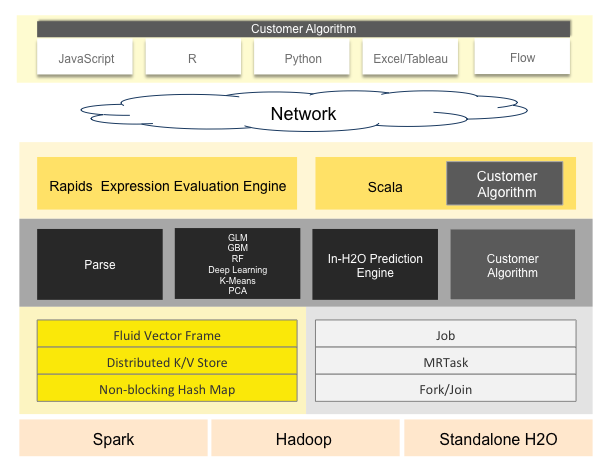

H2O - Open Source, Distributed Machine Learning for Everyone

H2O is a fully open source, distributed in-memory machine learning platform with linear scalability. H2O supports the most widely used statistical & machine learning algorithms including gradient boosted machines, generalized linear models, deep learning and more. H2O also has an industry leading AutoML functionality that automatically runs through all the algorithms and their hyperparameters to produce a leaderboard of the best models.

H2O4GPU is an open source, GPU-accelerated machine learning package with APIs in Python and R that allows anyone to take advantage of GPUs to build advanced machine learning models. A variety of popular algorithms are available including Gradient Boosting Machines (GBMs), Generalized Linear Models (GLMs), and K-Means Clustering. Our benchmarks found that training machine learning models on GPUs was up to 40x faster than CPU based systems.

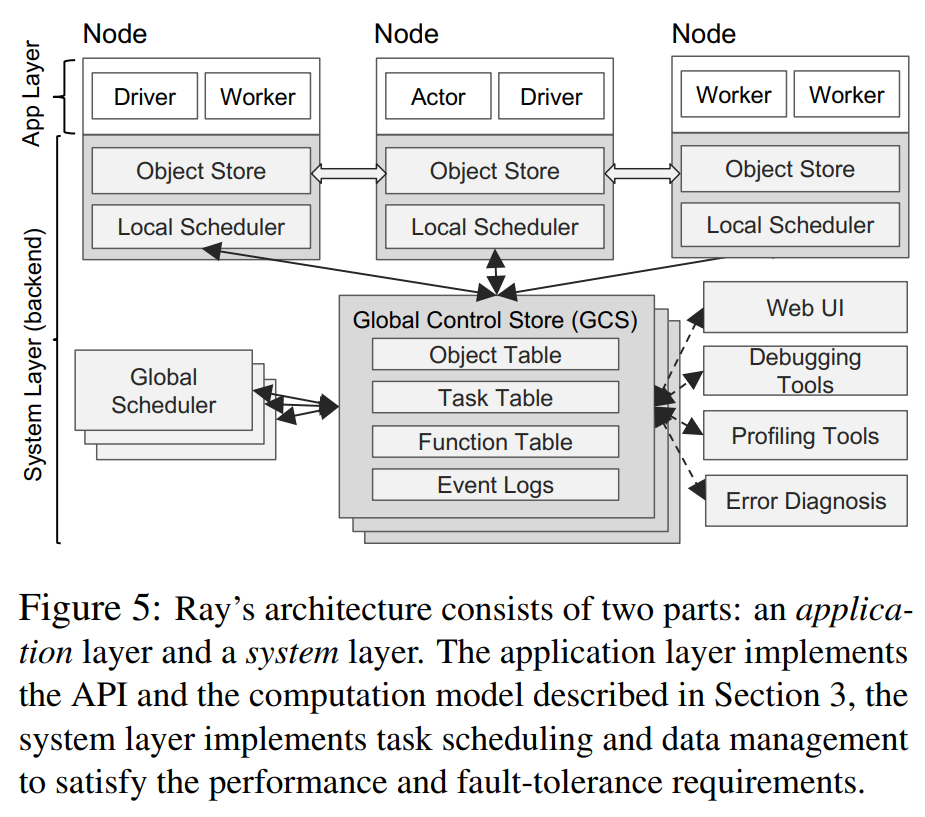

Project Ray (RISELab)

Ray is a high-performance distributed execution framework targeted at large-scale machine learning and reinforcement learning applications. It achieves scalability and fault tolerance by abstracting the control state of the system in a global control store and keeping all other components stateless. It uses a shared-memory distributed object store to efficiently handle large data through shared memory, and it uses a bottom-up hierarchical scheduling architecture to achieve low-latency and high-throughput scheduling. It uses a lightweight API based on dynamic task graphs and actors to express a wide range of applications in a flexible manner.

| homepage | github | blog | design overview | paper |

Architecture:

Components:

- Tune: Scalable hyper-parameter search.

- RLlib: Scalable reinforcement learning.

- Distributed training.

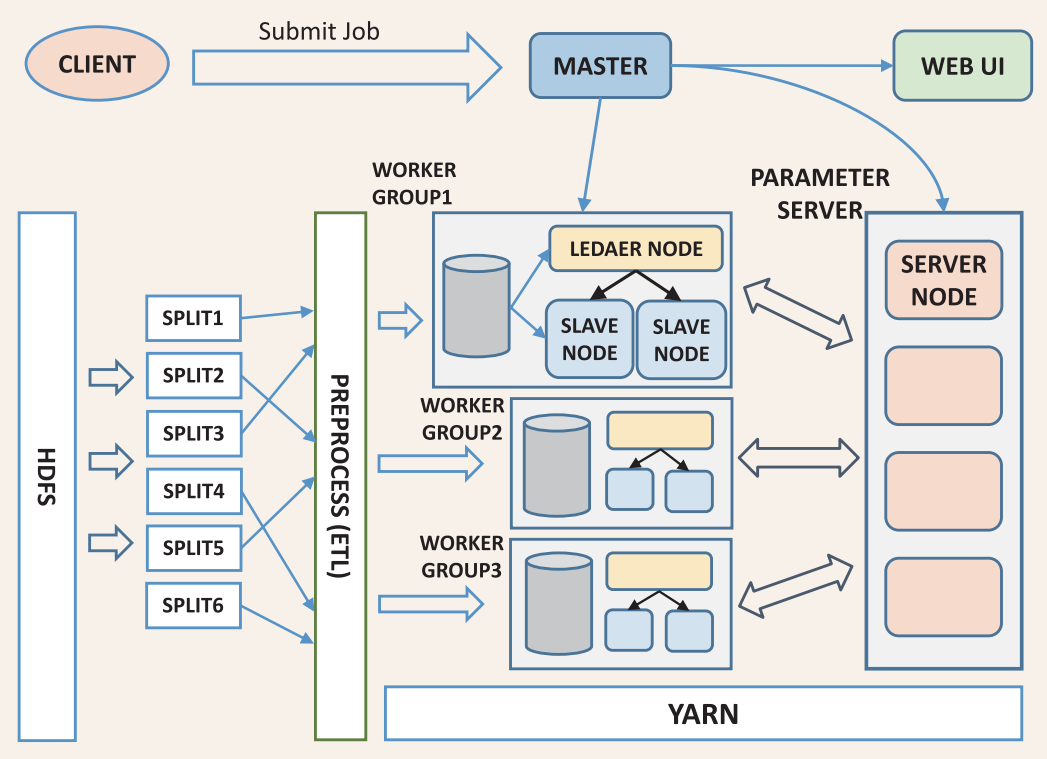

Angel (Tencent)

Angel is a high-performance distributed machine learning platform based on the philosophy of Parameter Server. It is tuned for performance with big data from Tencent and has a wide range of applicability and stability, demonstrating increasing advantage in handling higher-dimensional models. Angel is jointly developed by Tencent and Peking University, taking account of both high availability in industry and innovation in academia.

With model-centered core design concept, Angel partitions parameters of complex models into multiple parameter-server nodes, and implements a variety of machine learning algorithms using efficient model-updating interfaces and functions, as well as flexible consistency model for synchronization. Angel is developed with Java and Scala. It supports running on Yarn. With PS Service abstraction, it supports Spark on Angel.

| github | documentation | paper |

Architecture:

Components:

- Parameter Server Layer: provides a flexible, multi-framework PS responsible for distributed model storage, communication synchronization and coordination of computing.

- Worker Layer: satisfies needs for algorithm development and innovation; it automatically reads and partitions data and computes model deltas locally. It communicates with the PS Server to complete the model training and prediction process.

- Model Layer: provides necessary functions of PS and supports targeted optimization for performance. It hosts functionalities such as model pull/push operations, multiple sync protocols, model partitioner, etc. This layer bridges between the worker layer and the PS layer.
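The pull/push and partitioning ideas above can be sketched as follows. This is a toy, single-process illustration rather than Angel's implementation: the class names and the modulo partitioner are assumptions, and real parameter servers add networking, consistency protocols, and fault tolerance.

```python
class ParameterServer:
    """Holds one partition of the model parameters."""

    def __init__(self):
        self.params = {}

    def pull(self, keys):
        # Parameters never pushed before are treated as 0.0.
        return {k: self.params.get(k, 0.0) for k in keys}

    def push(self, deltas):
        # Apply additive updates computed locally by a worker.
        for k, delta in deltas.items():
            self.params[k] = self.params.get(k, 0.0) + delta

class PartitionedModel:
    """Routes each parameter index to a server (a simple modulo partitioner, chosen for illustration)."""

    def __init__(self, num_servers):
        self.servers = [ParameterServer() for _ in range(num_servers)]

    def _server_for(self, key):
        return self.servers[key % len(self.servers)]

    def pull(self, keys):
        result = {}
        for k in keys:
            result.update(self._server_for(k).pull([k]))
        return result

    def push(self, deltas):
        for k, delta in deltas.items():
            self._server_for(k).push({k: delta})

model = PartitionedModel(num_servers=2)
model.push({0: 0.5, 1: -0.25, 2: 1.0})  # worker A sends its locally computed deltas
model.push({0: 0.5, 3: 2.0})            # worker B does the same
weights = model.pull([0, 1, 2, 3])
```

Here `PartitionedModel` plays the bridging role described for the model layer: workers call `pull` and `push` without knowing which server holds which parameter.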

Deployment and Optimizations

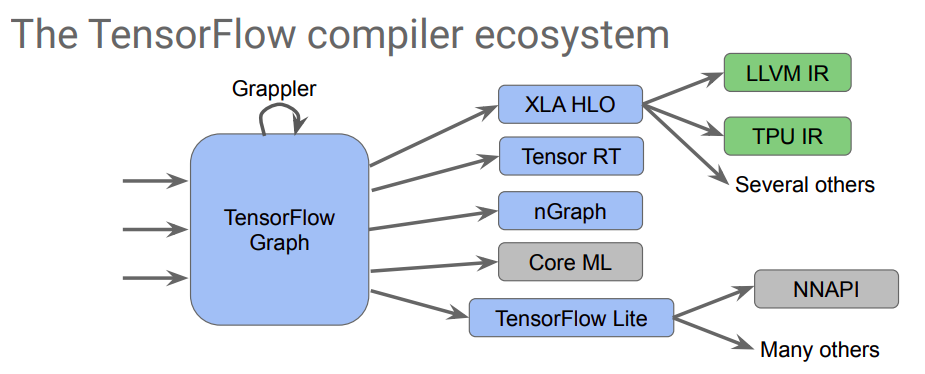

CoreML (Apple)

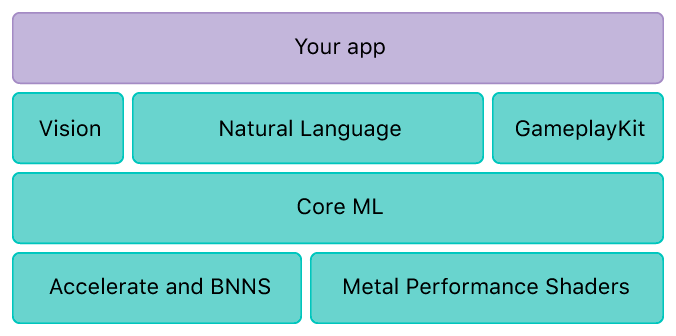

Integrate machine learning models into your app. Core ML is the foundation for domain-specific frameworks and functionality. Core ML supports Vision for image analysis, Natural Language for natural language processing, and GameplayKit for evaluating learned decision trees. Core ML itself builds on top of low-level primitives like Accelerate and BNNS, as well as Metal Performance Shaders.

Core ML is optimized for on-device performance, which minimizes memory footprint and power consumption. Running strictly on the device ensures the privacy of user data and guarantees that your app remains functional and responsive when a network connection is unavailable.

| homepage | documentation | resources |

TensorFlow Lite (Google)

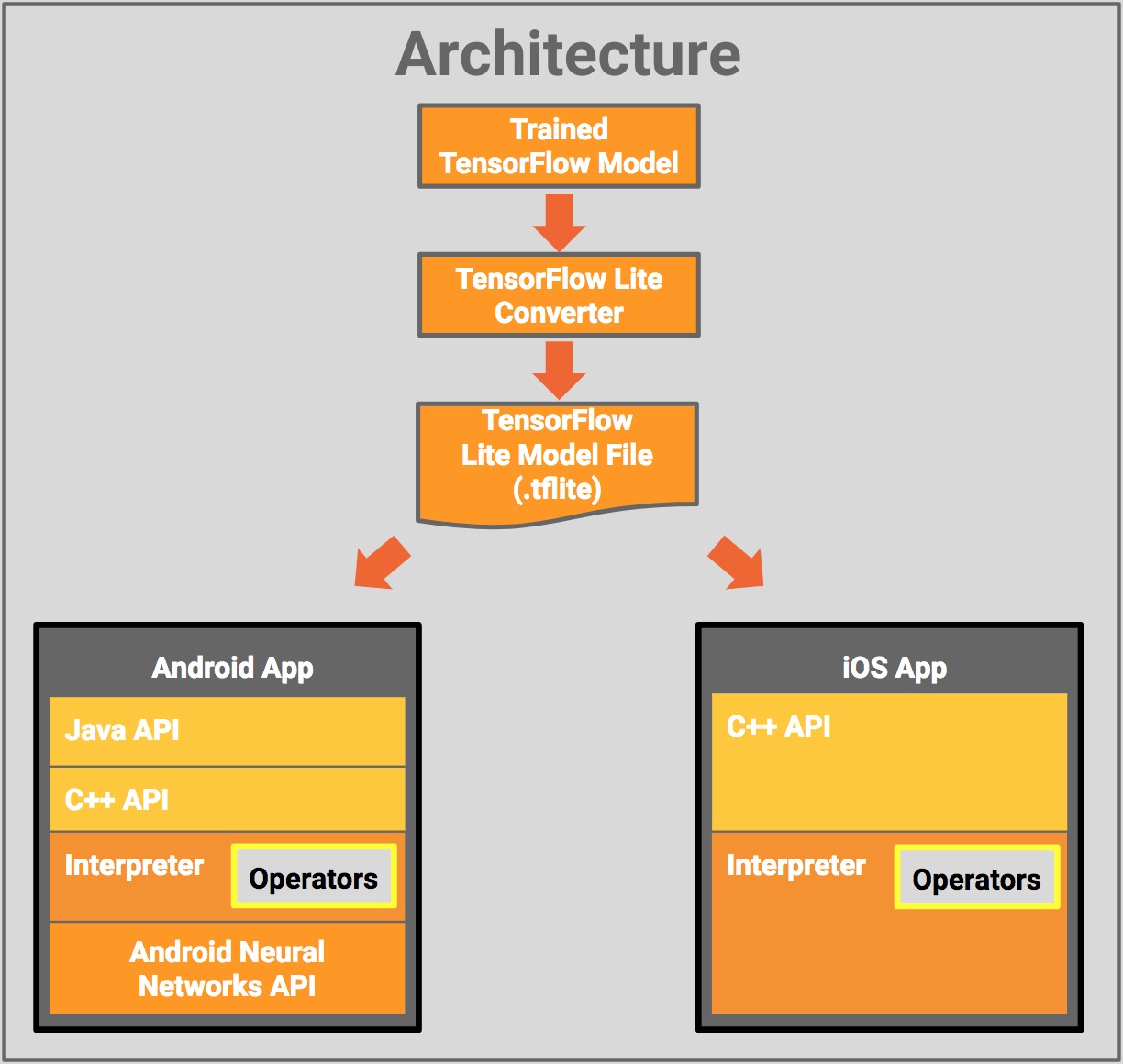

TensorFlow Lite is the official solution for running machine learning models on mobile and embedded devices. It enables on‑device machine learning inference with low latency and a small binary size on Android, iOS, and other operating systems.

Architecture:

Components:

- TensorFlow Model: A trained TensorFlow model saved on disk.

- TensorFlow Lite Converter: A program that converts the model to the TensorFlow Lite file format.

- TensorFlow Lite Model File: A model file format based on FlatBuffers, that has been optimized for maximum speed and minimum size.

TensorRT (NVIDIA)

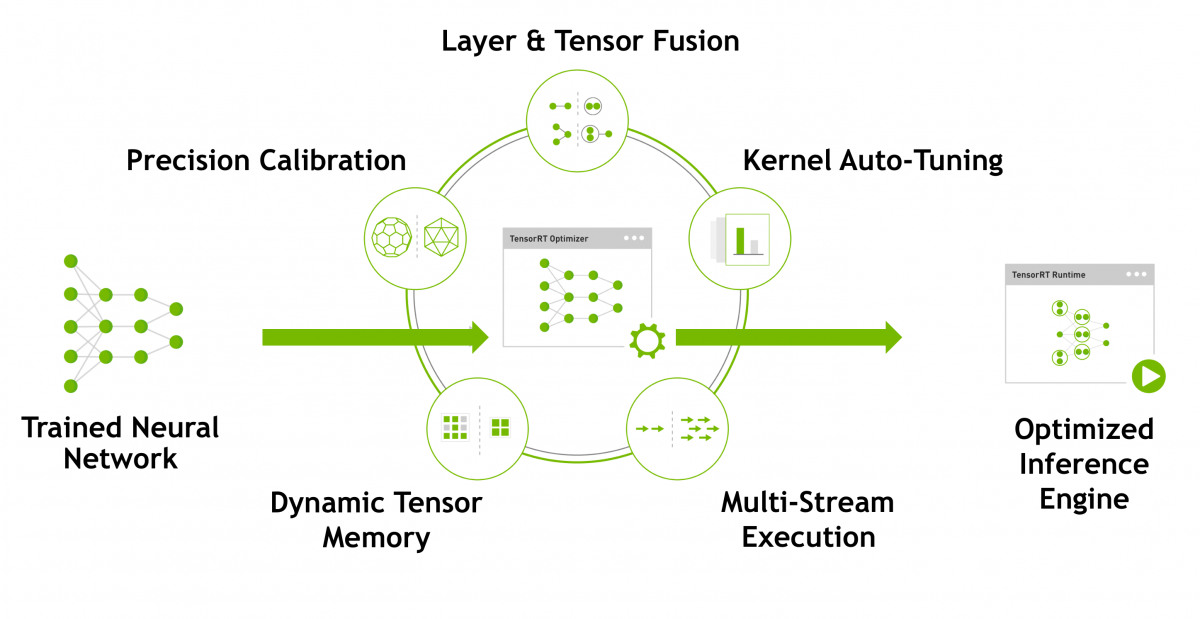

NVIDIA TensorRT™ is a platform for high-performance deep learning inference. It includes a deep learning inference optimizer and runtime that delivers low latency and high-throughput for deep learning inference applications.

| homepage | blog | documentation | benchmark |

Architecture:

Components:

- Weight & Activation Precision Calibration: Maximizes throughput by quantizing models to INT8 while preserving accuracy.

- Layer & Tensor Fusion: Optimizes use of GPU memory and bandwidth by fusing nodes in a kernel.

- Kernel Auto-Tuning: Selects best data layers and algorithms based on target GPU platform.

- Dynamic Tensor Memory: Minimizes memory footprint and re-uses memory for tensors efficiently.

- Multi-Stream Execution: Scalable design to process multiple input streams in parallel.
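As a rough illustration of what INT8 precision calibration involves, the sketch below picks a scale from calibration data and uses it to quantize values. It is not TensorRT code, and the max-absolute-value rule is a simplifying assumption; production calibrators typically choose the range more carefully to limit accuracy loss. All function names are hypothetical.

```python
def calibrate_scale(calibration_values):
    """Pick a scale so the largest observed magnitude maps to 127."""
    max_abs = max(abs(v) for v in calibration_values)
    return max_abs / 127 if max_abs > 0 else 1.0

def quantize(values, scale):
    """Map floats to integers in [-127, 127], clipping anything outside the calibrated range."""
    return [max(-127, min(127, round(v / scale))) for v in values]

def dequantize(quantized, scale):
    """Recover approximate float values from the INT8 representation."""
    return [q * scale for q in quantized]

activations = [-1.27, -0.5, 0.0, 0.63, 1.27]
scale = calibrate_scale(activations)
q = quantize(activations, scale)
restored = dequantize(q, scale)
```

For values inside the calibrated range, the round-trip error is at most half a quantization step (`scale / 2`), which is the accuracy cost traded for smaller, faster integer arithmetic.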

Greengrass (Amazon)

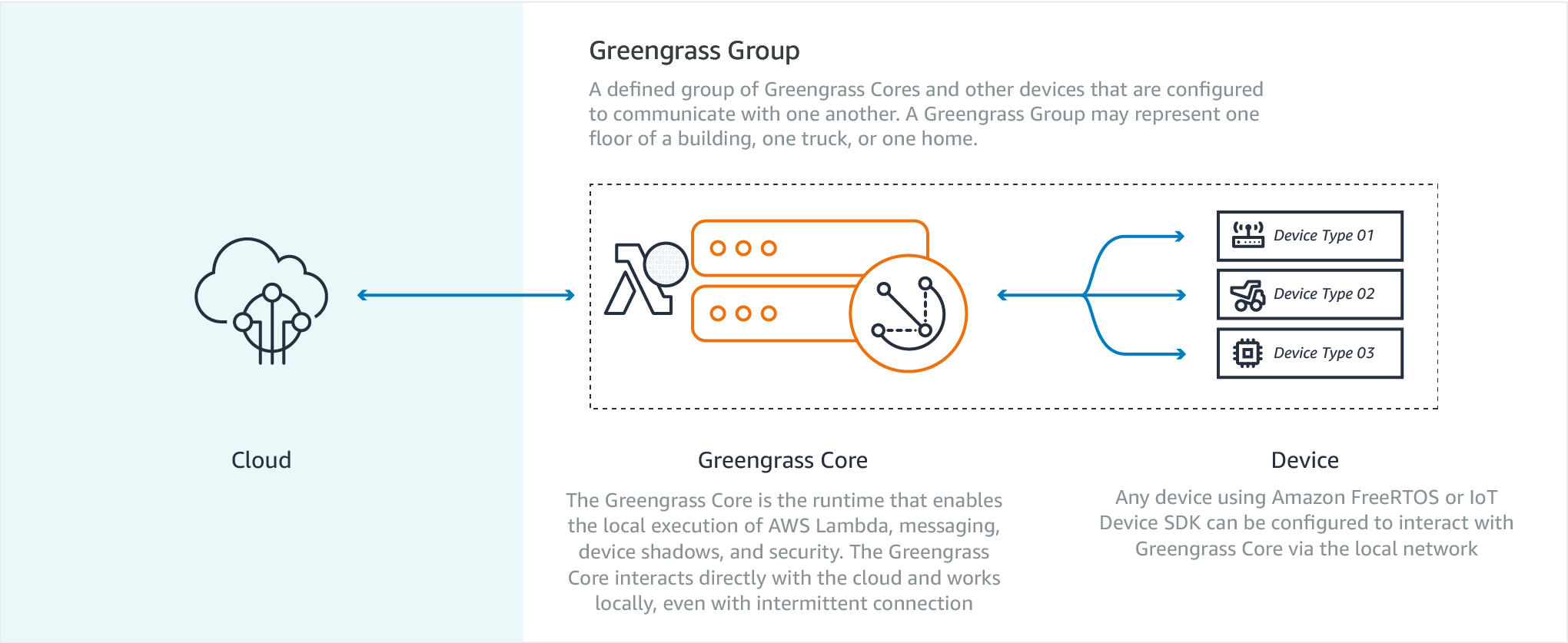

AWS Greengrass is software that lets you run local compute, messaging, data caching, sync, and ML inference capabilities for connected devices in a secure way. With AWS Greengrass, connected devices can run AWS Lambda functions, keep device data in sync, and communicate with other devices securely – even when not connected to the Internet. Using AWS Lambda, Greengrass ensures your IoT devices can respond quickly to local events, use Lambda functions running on Greengrass Core to interact with local resources, operate with intermittent connections, stay updated with over the air updates, and minimize the cost of transmitting IoT data to the cloud.

| blog |

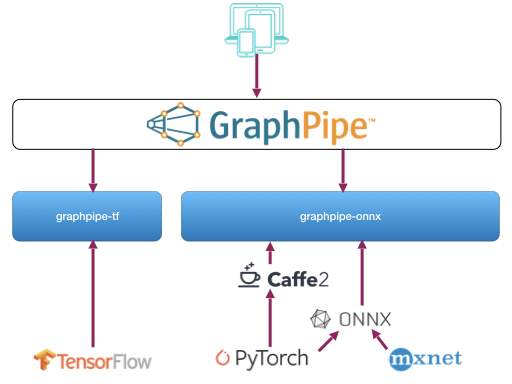

GraphPipe (Oracle)

GraphPipe is a protocol and collection of software designed to simplify machine learning model deployment and decouple it from framework-specific model implementations.

| homepage | github | documentation |

Architecture:

Features:

- A minimalist machine learning transport specification based on FlatBuffers.

- Simple, efficient reference model servers for TensorFlow, Caffe2, and ONNX.

- Efficient client implementations in Go, Python, and Java.

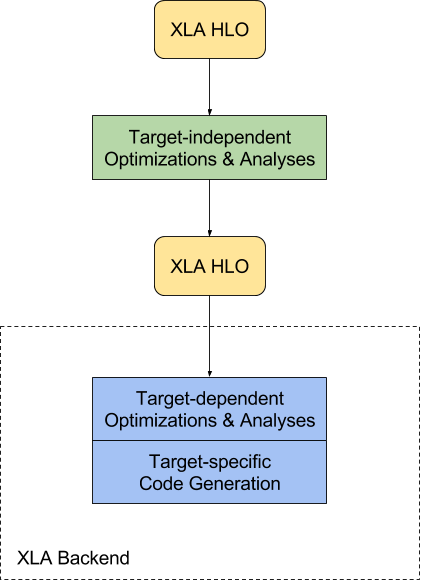

TensorFlow XLA (Accelerated Linear Algebra) (Google)

XLA (Accelerated Linear Algebra) is a domain-specific compiler for linear algebra that optimizes TensorFlow computations. The results are improvements in speed, memory usage, and portability on server and mobile platforms.

Improve execution speed. Compile subgraphs to reduce the execution time of short-lived Ops to eliminate overhead from the TensorFlow runtime, fuse pipelined operations to reduce memory overhead, and specialize to known tensor shapes to allow for more aggressive constant propagation.

Improve memory usage. Analyze and schedule memory usage, in principle eliminating many intermediate storage buffers.

Reduce reliance on custom Ops. Remove the need for many custom Ops by improving the performance of automatically fused low-level Ops to match the performance of custom Ops that were fused by hand.

Reduce mobile footprint. Eliminate the TensorFlow runtime by ahead-of-time compiling the subgraph and emitting an object/header file pair that can be linked directly into another application. The results can reduce the footprint for mobile inference by several orders of magnitude.

Improve portability. Make it relatively easy to write a new backend for novel hardware, at which point a large fraction of TensorFlow programs will run unmodified on that hardware. This is in contrast with the approach of specializing individual monolithic Ops for new hardware, which requires TensorFlow programs to be rewritten to make use of those Ops.
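The benefit of fusing pipelined operations can be illustrated without any compiler. The sketch below is plain Python, not XLA output: `unfused` materializes an intermediate list after each elementwise op, while `fused` computes the same result in a single pass. Both function names and the chosen ops (scale, shift, ReLU) are assumptions for illustration.

```python
def unfused(xs):
    """Each op materializes a full intermediate list, as separate kernels would."""
    scaled = [x * 2.0 for x in xs]       # intermediate buffer 1
    shifted = [s + 1.0 for s in scaled]  # intermediate buffer 2
    return [max(v, 0.0) for v in shifted]

def fused(xs):
    """One pass per element: multiply, add, and ReLU with no intermediate buffers."""
    return [max(x * 2.0 + 1.0, 0.0) for x in xs]

data = [-2.0, -0.5, 0.0, 1.5]
unfused_result = unfused(data)
fused_result = fused(data)
```

Both versions return the same values; the fused form simply avoids writing and re-reading the two temporary buffers, which is where the memory-overhead savings come from.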

| homepage | github | documentation | blog | talk |

Compilation Process:

Components:

- TensorFlow graphs compiled

- Just-In-Time (JIT) compilation

- Ahead-Of-Time (AOT) compilation

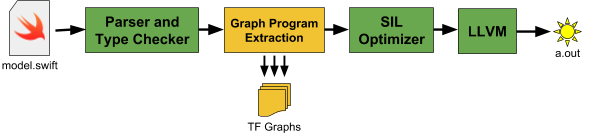

Swift for TensorFlow

Swift for TensorFlow is a new way to develop machine learning models. It gives you the power of TensorFlow directly integrated into the Swift programming language. With Swift, you can write imperative code, and Swift automatically turns it into a single TensorFlow Graph and runs it with the full performance of TensorFlow Sessions on CPU, GPU and TPU.

Swift combines the flexibility of Eager Execution with the high performance of Graphs and Sessions. Behind the scenes, Swift analyzes your Tensor code and automatically builds graphs for you. Swift also catches type errors and shape mismatches before running your code, and has Automatic Differentiation built right in. We believe that machine learning tools are so important that they deserve a first-class language and a compiler.

| homepage | github | design overview | tech deep dive |

Compiler:

Related project - DLVM (Modern Compiler Infrastructure for Deep Learning Systems):

| homepage | github | paper1 | paper2 | paper3 |

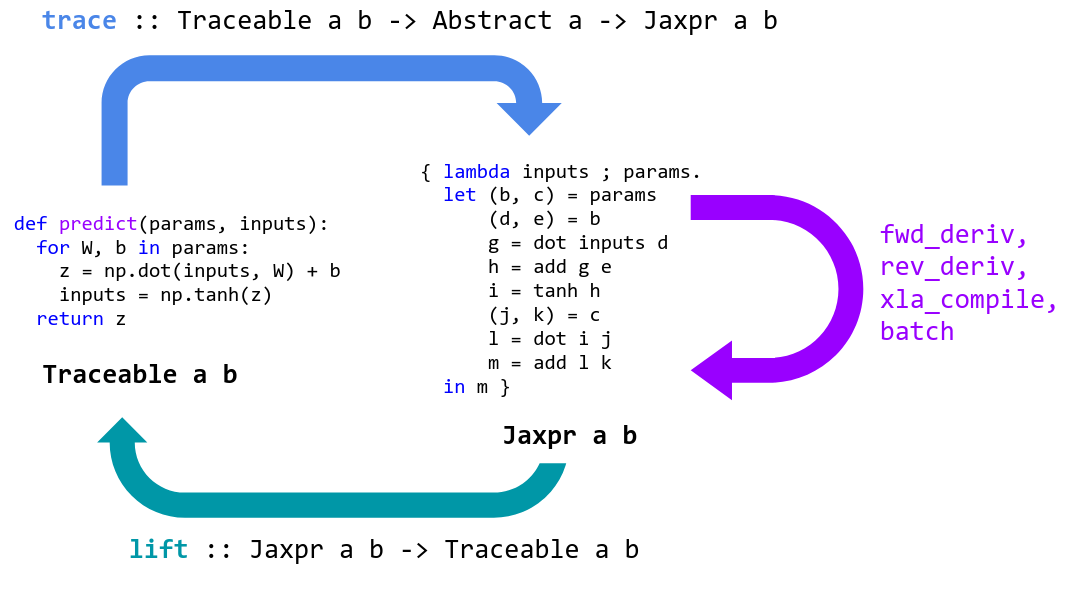

JAX - Autograd and XLA (Google)

JAX is Autograd and XLA, brought together for high-performance machine learning research. With its updated version of Autograd, JAX can automatically differentiate native Python and NumPy functions. It can differentiate through loops, branches, recursion, and closures, and it can take derivatives of derivatives of derivatives. It supports reverse-mode differentiation (a.k.a. backpropagation) as well as forward-mode differentiation, and the two can be composed arbitrarily to any order.

| github |
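To show what differentiating through loops and branches means in practice, here is a small forward-mode sketch using dual numbers in plain Python. It is not JAX and does not reflect how JAX is implemented; the `Dual` class and `derivative` helper are hypothetical, and only addition and multiplication are supported.

```python
class Dual:
    """A value paired with its derivative with respect to one input."""

    def __init__(self, value, deriv=0.0):
        self.value, self.deriv = value, deriv

    def _lift(self, other):
        # Treat plain numbers as constants (derivative 0).
        return other if isinstance(other, Dual) else Dual(other)

    def __add__(self, other):
        other = self._lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = self._lift(other)
        # Product rule.
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__

def derivative(f):
    """Return a function computing df/dx by seeding the input derivative with 1."""
    return lambda x: f(Dual(x, 1.0)).deriv

def f(x):
    result = x
    for _ in range(2):        # loop: result becomes x**3
        result = result * x
    if result.value > 0:      # branch on the computed value
        result = result + 2 * x
    return result

slope = derivative(f)(3.0)    # d/dx (x**3 + 2*x) at x = 3
```

Because the derivative is carried along with every arithmetic step, ordinary Python control flow needs no special handling: whichever path actually executes is the one that gets differentiated.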

How It Works:

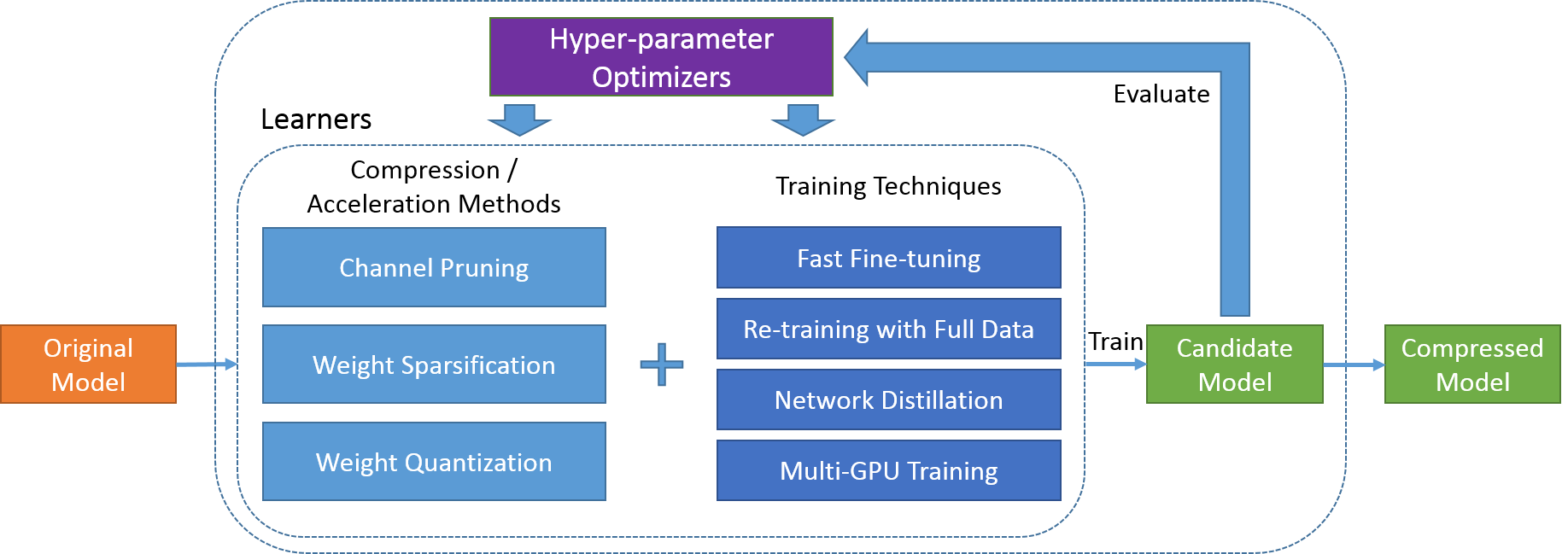

PocketFlow (Tencent)

PocketFlow is an open-source framework for compressing and accelerating deep learning models with minimal human effort. Deep learning is widely used in various areas, such as computer vision, speech recognition, and natural language translation. However, deep learning models are often computationally expensive, which limits further applications on mobile devices with limited computational resources.

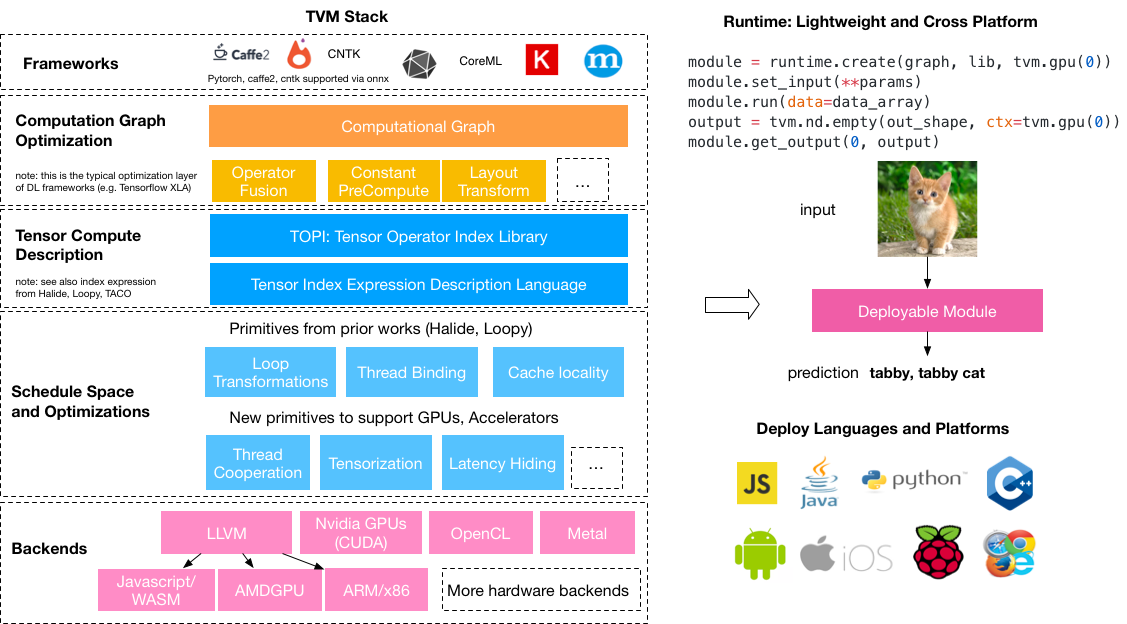

TVM - End to End Deep Learning Compiler Stack (Amazon)

TVM is a compiler stack for deep learning systems. It is designed to close the gap between the productivity-focused deep learning frameworks and the performance- and efficiency-focused hardware backends. TVM works with deep learning frameworks to provide end-to-end compilation to different backends. Check out the TVM stack homepage for more information.

| homepage | github | documentation | paper |

Architecture:

Components:

- Compilation of deep learning models in Keras, MXNet, PyTorch, TensorFlow, CoreML, DarkNet into minimum deployable modules on diverse hardware backends.

- Infrastructure to automatically generate and optimize tensor operators on more backends with better performance.

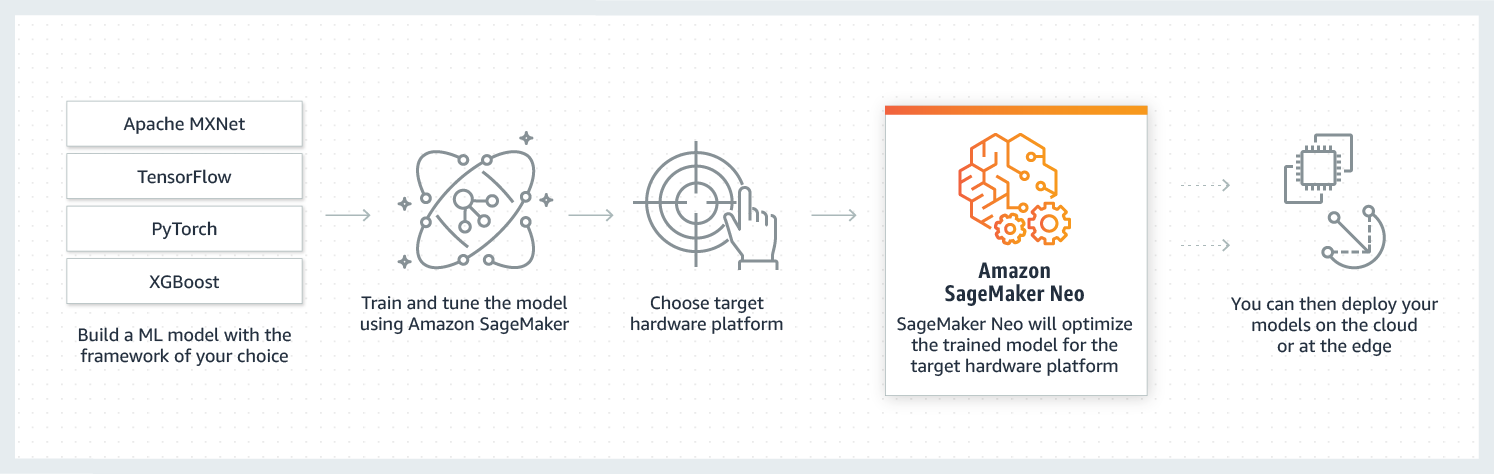

SageMaker Neo (Amazon)

Amazon SageMaker Neo enables developers to train machine learning models once and run them anywhere in the cloud and at the edge. Amazon SageMaker Neo optimizes models to run up to twice as fast, with less than a tenth of the memory footprint, with no loss in accuracy.

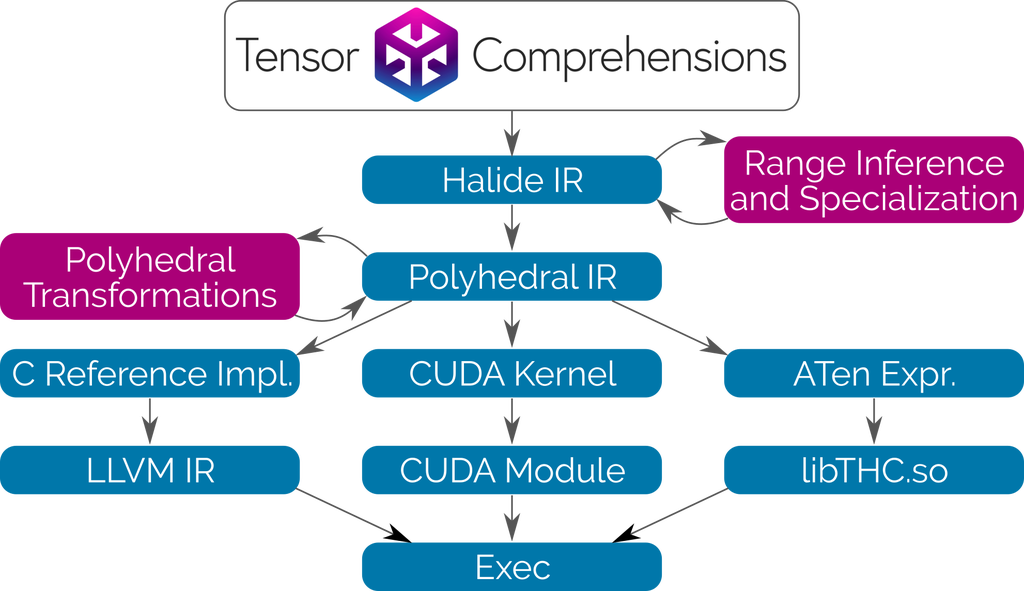

Tensor Comprehensions (Facebook)

Tensor Comprehensions (TC) is a fully-functional C++ library to automatically synthesize high-performance machine learning kernels using Halide, ISL and NVRTC or LLVM. TC additionally provides basic integration with Caffe2 and PyTorch. We provide more details in our paper on arXiv.

| homepage | github | paper | blog |

Architecture:

Glow - A community-driven approach to AI infrastructure (Facebook)

Glow is a machine learning compiler that accelerates the performance of deep learning frameworks on different hardware platforms. It enables the ecosystem of hardware developers and researchers to focus on building next gen hardware accelerators that can be supported by deep learning frameworks like PyTorch.

| homepage | github | paper | blog |

Architecture:

Components:

- High-level intermediate representation allows the optimizer to perform domain-specific optimizations.

- Lower-level intermediate representation, an instruction-based address-only representation allows the compiler to perform memory-related optimizations, such as instruction scheduling, static memory allocation, and copy elimination.

- The optimizer then performs machine-specific code generation to take advantage of specialized hardware features.

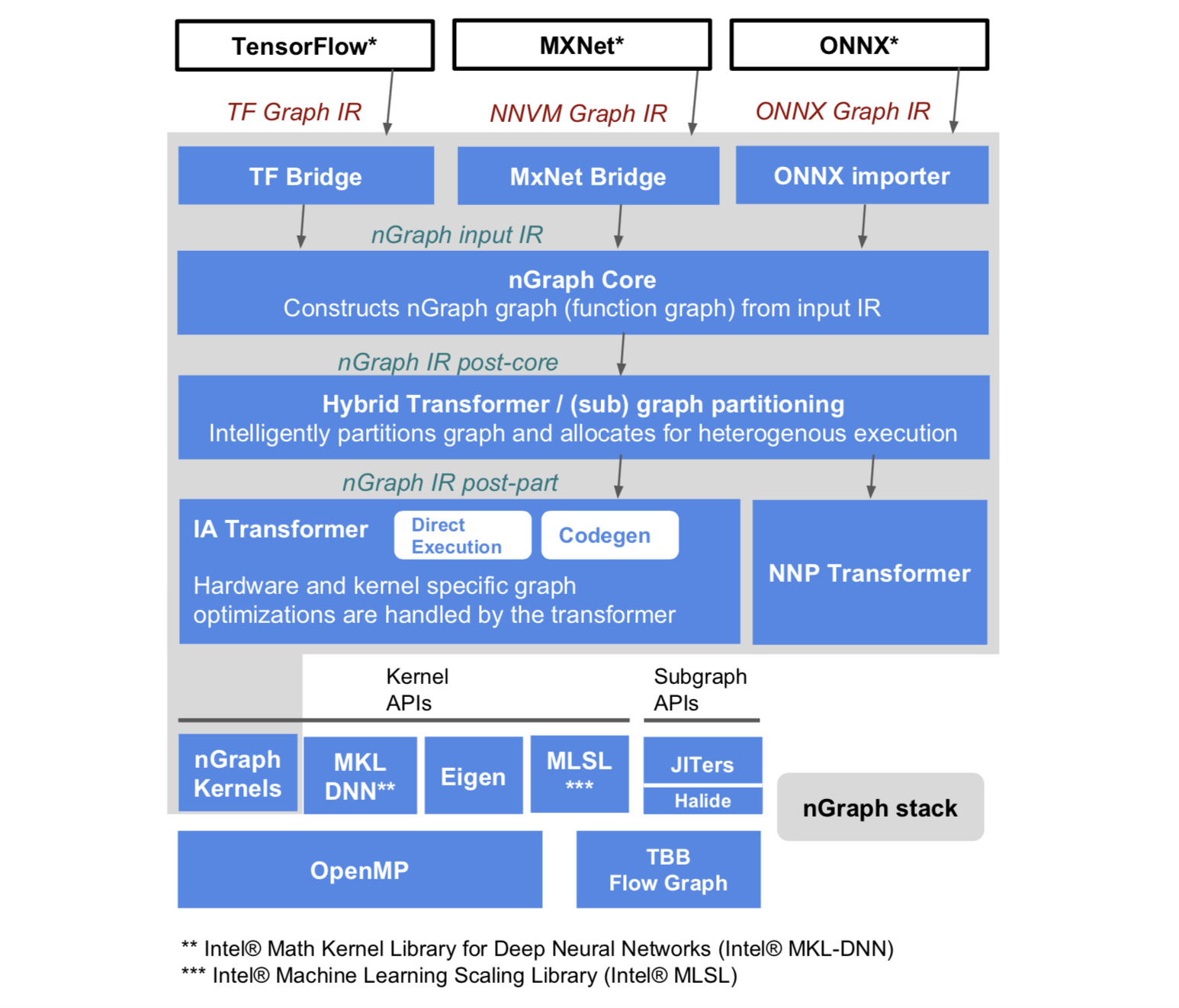

nGraph - A New Open Source Compiler for Deep Learning Systems (Intel)

We are pleased to announce the open sourcing of nGraph, a framework-neutral Deep Neural Network (DNN) model compiler that can target a variety of devices. With nGraph, data scientists can focus on data science rather than worrying about how to adapt their DNN models to train and run efficiently on different devices.

| homepage | documentation | github | paper |

Architecture:

Components:

- Fusion: Fuse multiple ops to decrease memory usage.

- Data layout abstraction: Make abstraction easier and faster with nGraph translating element order to work best for a given or available device.

- Data reuse: Save results and reuse for subgraphs with the same input.

- Graph scheduling: Run similar subgraphs in parallel via multi-threading.

- Graph partitioning: Partition subgraphs to run on different devices to speed up computation; make better use of spare CPU cycles with nGraph.

- Memory management: Prevent peak memory usage by intercepting a graph with or by a "saved checkpoint," and to enable data auditing.

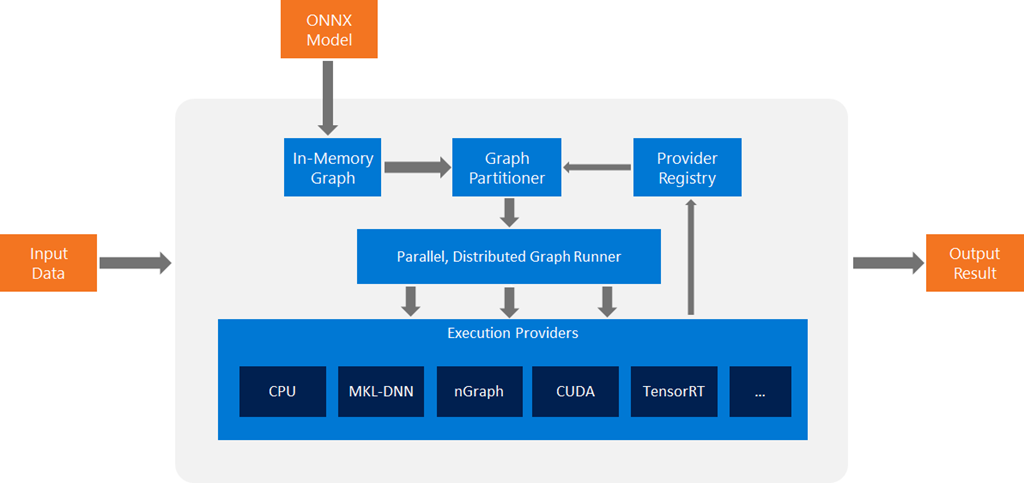

ONNX - Open Neural Network Exchange

ONNX is an open format to represent deep learning models. With ONNX, AI developers can more easily move models between state-of-the-art tools and choose the combination that is best for them. ONNX is developed and supported by a community of partners.

| homepage | documentation | github |

Architecture:

Components:

- Framework Interoperability: enabling interoperability makes it possible to get great ideas into production faster. ONNX enables models to be trained in one framework and transferred to another for inference.

- Hardware Optimizations: ONNX makes it easier for optimizations to reach more developers. Any tools exporting ONNX models can benefit ONNX-compatible runtimes and libraries designed to maximize performance on some of the best hardware in the industry.

MLIR - "Multi-Level Intermediate Representation" Compiler Infrastructure

The MLIR project aims to define a common intermediate representation (IR) that will unify the infrastructure required to execute high performance machine learning models in TensorFlow and similar ML frameworks. This project will include the application of HPC techniques, along with integration of search algorithms like reinforcement learning. This project aims to reduce the cost to bring up new hardware, and improve usability for existing TensorFlow users.

| homepage | github | talk | slides |

Architecture:

Goal:

Global improvements to TensorFlow infrastructure, SSA-based designs to generalize and improve ML “graphs”:

- Better side effect modeling and control flow representation

- Improve generality of the lowering passes

- Dramatically increase code reuse

- Fix location tracking and other pervasive issues for better user experience

Neural Network Distiller (Intel)

Distiller is an open-source Python package for neural network compression research. Network compression can reduce the footprint of a neural network, increase its inference speed and save energy. Distiller provides a PyTorch environment for prototyping and analyzing compression algorithms, such as sparsity-inducing methods and low precision arithmetic.

| homepage | github | documentation |

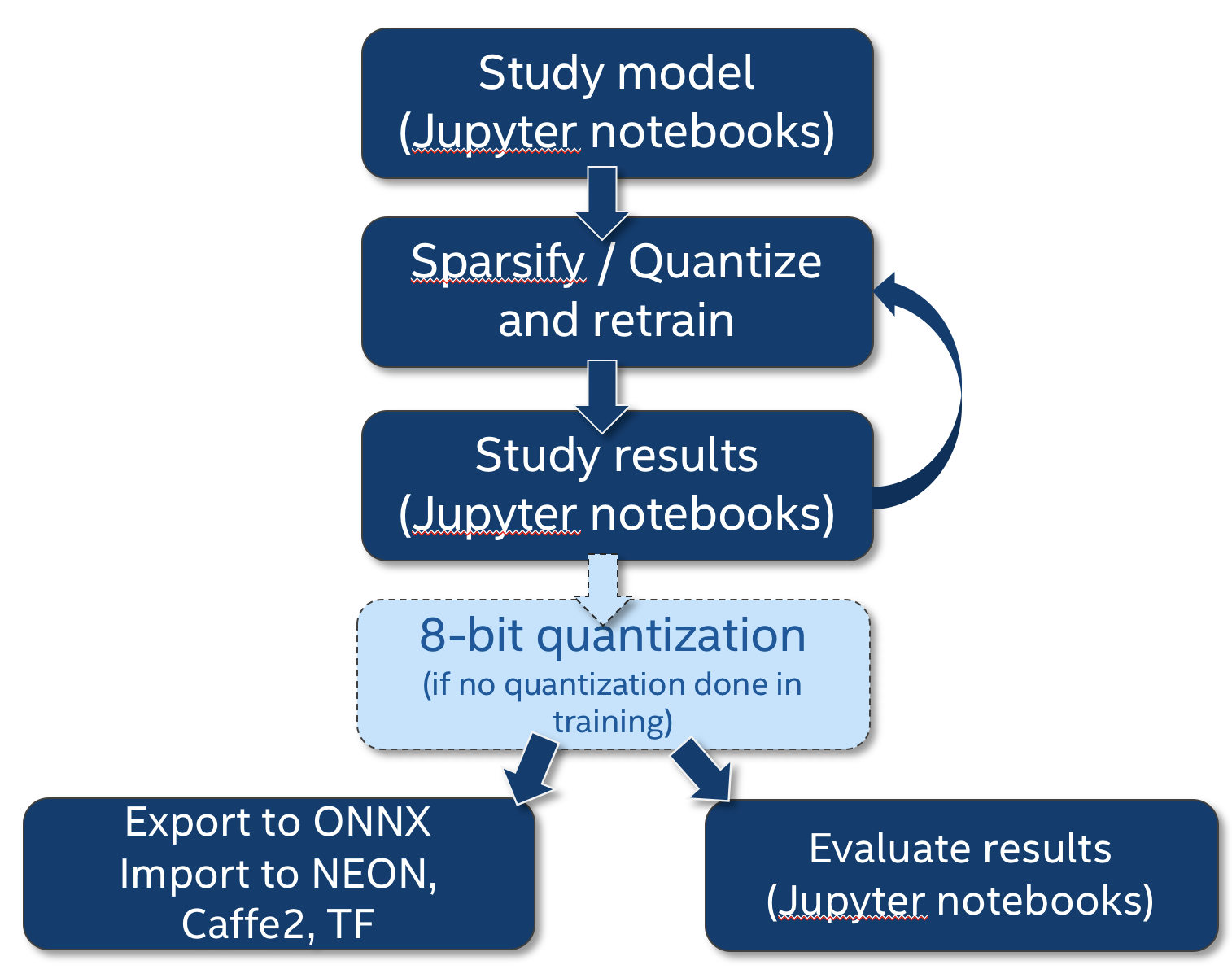

Workflow:

Components:

- A framework for integrating pruning, regularization and quantization algorithms.

- A set of tools for analyzing and evaluating compression performance.

- Example implementations of state-of-the-art compression algorithms.
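One of the simplest sparsity-inducing methods is magnitude pruning: zero out the weights with the smallest absolute values. The sketch below is a plain-Python illustration on a flat list of weights, not Distiller's API; the function name and the `sparsity` parameter are assumptions.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest absolute value."""
    num_to_prune = int(len(weights) * sparsity)
    if num_to_prune == 0:
        return list(weights)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_indices = set(order[:num_to_prune])
    return [0.0 if i in pruned_indices else w for i, w in enumerate(weights)]

weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.1]
pruned = magnitude_prune(weights, sparsity=0.5)
```

In practice pruning is usually interleaved with further training so the remaining weights can compensate, and the resulting accuracy and sparsity are then measured with analysis tools like those listed above.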

MACE - Mobile AI Compute Engine (Xiaomi)

MACE (Mobile AI Compute Engine) is a deep learning inference framework optimized for mobile heterogeneous computing platforms. MACE provides tools and documents to help users to deploy deep learning models to mobile phones, tablets, personal computers and IoT devices.

| github | documentation |

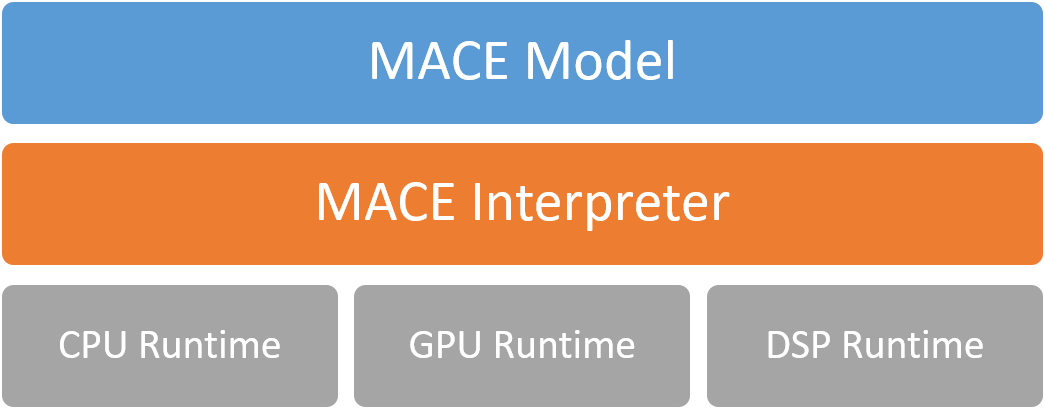

Architecture:

Components:

- Performance

  - The runtime is optimized with NEON, OpenCL and Hexagon, and the Winograd algorithm is used to speed up convolution operations. Initialization is also optimized to be faster.

- Power consumption

  - Chip-dependent power options, such as big.LITTLE scheduling and Adreno GPU hints, are included as advanced APIs.

- Responsiveness

  - Guaranteed UI responsiveness is sometimes mandatory while a model is running. Mechanisms such as automatically breaking OpenCL kernels into small units allow better preemption for the UI rendering task.

- Memory usage and library footprint

  - Graph-level memory allocation optimization and buffer reuse are supported. The core library keeps external dependencies to a minimum so that the library footprint stays small.

- Model protection

  - Model protection has been the highest priority since the beginning of the design. Various techniques are used, such as converting models to C++ code and literal obfuscation.

- Platform coverage

  - Good coverage of recent Qualcomm, MediaTek, Pinecone and other ARM-based chips. The CPU runtime is also compatible with most POSIX systems and architectures, with limited performance.

- Rich model format support

  - TensorFlow, Caffe and ONNX model formats are supported.
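As an illustration of why the Winograd algorithm mentioned under Performance speeds up convolution, the sketch below implements the textbook 1D case F(2, 3): two outputs of a 3-tap filter computed with 4 multiplications instead of 6. This is not MACE's implementation; the function names are hypothetical.

```python
def winograd_f23(d, g):
    """Compute two outputs of a 3-tap correlation over 4 inputs with 4 multiplies."""
    d0, d1, d2, d3 = d
    g0, g1, g2 = g
    # Filter transform: can be precomputed once per filter.
    u0 = g0
    u1 = (g0 + g1 + g2) / 2
    u2 = (g0 - g1 + g2) / 2
    u3 = g2
    # Four element-wise multiplications on transformed inputs.
    m1 = (d0 - d2) * u0
    m2 = (d1 + d2) * u1
    m3 = (d2 - d1) * u2
    m4 = (d1 - d3) * u3
    # Output transform.
    return [m1 + m2 + m3, m2 - m3 - m4]


def direct_f23(d, g):
    """Reference: the same two outputs with 6 multiplications."""
    return [sum(d[i + k] * g[k] for k in range(3)) for i in range(2)]
```

Real kernels apply the 2D variant to tiles of the input feature map, where the relative saving in multiplications is larger.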

AMC - AutoML for Model Compression engine

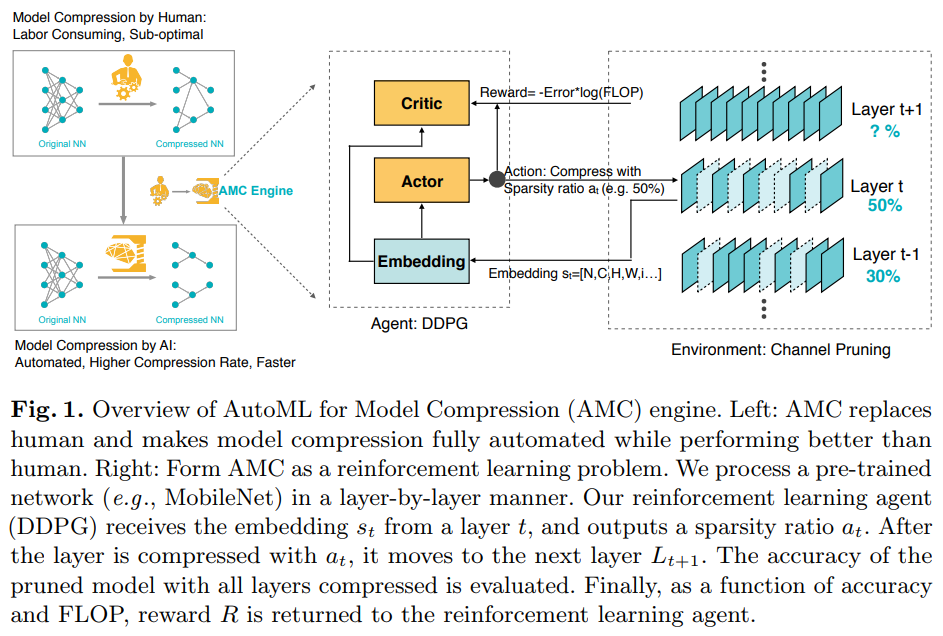

We propose AutoML for Model Compression (AMC), which leverages reinforcement learning to provide the model compression policy. This learning-based compression policy outperforms conventional rule-based compression policies by achieving a higher compression ratio, better preserving accuracy, and reducing human labor.

| paper |

Architecture:

Insight:

- Automated compression with reinforcement learning: AMC leverages reinforcement learning to search the action space efficiently.

- Search protocols: resource-constrained compression and accuracy-guaranteed compression.

Courses

CSE 599W Systems for ML (University of Washington)

This course covers various aspects of deep learning systems, including: basics of deep learning, programming models for expressing machine learning models, automatic differentiation, memory optimization, scheduling, distributed learning, hardware acceleration, domain-specific languages, and model serving. Many of these topics intersect with existing research directions in databases, systems and networking, architecture and programming languages. The goal is to offer a comprehensive picture of how deep learning systems work, discuss and execute on possible research opportunities, and build open-source software that will have broad appeal.

CSCE 790: Machine Learning Systems

In this course, we will learn the fundamental differences between ML as a technique and ML as a system in production. A machine learning system involves a significant number of components, and it is important that they remain responsive in the face of failure and changes in load. This course covers several strategies to keep ML systems responsive, resilient, and elastic. Machine learning systems differ from other computer systems when it comes to building, testing, deploying, delivering, and evolving them. ML systems also pose unique challenges when we need to change the architecture or behavior of the system. Therefore, it is essential to learn how to deal with challenges that arise only when building real-world, production-ready ML systems (e.g., performance issues, memory leaks, communication issues, multi-GPU issues, etc.). The focus of this course will be primarily on deep learning systems, but the principles remain similar across all ML systems.

Conferences

SysML - Conference on Systems and Machine Learning @ Stanford

ML Systems Workshop @ NeurIPS

ScaledML - Scaling ML models, data, algorithms & infrastructure

Papers

Survey / Reviews

A Survey on Compiler Autotuning using Machine Learning

This survey summarizes and classifies the recent advances in using machine learning for the compiler optimization field, particularly on the two major problems of (1) selecting the best optimizations and (2) the phase-ordering of optimizations. The survey highlights the approaches taken so far, the obtained results, the fine-grain classification among different approaches and finally, the influential papers of the field.

A Survey of Model Compression and Acceleration for Deep Neural Networks

In this paper, we survey recent advanced techniques for compacting and accelerating CNN models. These techniques are roughly categorized into four schemes: parameter pruning and sharing, low-rank factorization, transferred/compact convolutional filters, and knowledge distillation. Methods of parameter pruning and sharing are described first, after which the other techniques are introduced. For each scheme, we provide insightful analysis regarding the performance, related applications, advantages, and drawbacks. Then we go through a few very recent additional successful methods, for example, dynamic networks and stochastic depth networks. After that, we survey the evaluation metrics, the main datasets used for evaluating model performance, and recent benchmarking efforts. Finally, we conclude the paper and discuss remaining challenges and possible directions in this topic.

Large-Scale / Distributed Learning

Milestones

Major milestones for "ImageNet in X nanoseconds" 🎢.

| initial date | resources | elapsed time | top-1 accuracy | batch size | link |
|---|---|---|---|---|---|
| June 2017 | 256 NVIDIA P100 GPUs | 1 hour | 76.3% | 8192 | https://arxiv.org/abs/1706.02677 |
| Aug 2017 | 256 NVIDIA P100 GPUs | 50 mins | 75.01% | 8192 | https://arxiv.org/abs/1708.02188 |
| Sep 2017 | 512 KNLs -> 2048 KNLs | 1 hour -> 20 mins | 72.4% -> 75.4% | 32768 | https://arxiv.org/abs/1709.05011 |
| Nov 2017 | 128 Google TPUs (v2) | 30 mins | 76.1% | 16384 | https://arxiv.org/abs/1711.00489 |
| Nov 2017 | 1024 NVIDIA P100 GPUs | 15 mins | 74.9% | 32768 | https://arxiv.org/abs/1711.04325 |
| Jul 2018 | 2048 NVIDIA P40 GPUs | 6.6 mins | 75.8% | 65536 | https://arxiv.org/abs/1807.11205 |
| Nov 2018 | 2176 NVIDIA V100 GPUs | 3.7 mins | 75.03% | 69632 | https://arxiv.org/abs/1811.05233 |
| Nov 2018 | 1024 Google TPUs (v3) | 2.2 mins | 76.3% | 32768 | https://arxiv.org/abs/1811.06992 |

Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour

- Learning rate linear scaling rule

- Learning rate warmup (constant, gradual)

- Communication: recursive halving and doubling algorithm
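The first two items can be sketched in a few lines. The linear scaling rule multiplies the learning rate by the same factor as the minibatch size, and gradual warmup ramps the rate linearly from the small-batch value to the scaled value over the first steps. The function names and the step-based interface below are illustrative assumptions, not code from the paper.

```python
def scaled_lr(base_lr, base_batch, batch):
    """Linear scaling rule: multiply the learning rate by batch / base_batch."""
    return base_lr * batch / base_batch


def warmup_lr(step, warmup_steps, start_lr, target_lr):
    """Gradual warmup: ramp linearly from start_lr to target_lr, then hold."""
    if warmup_steps <= 0 or step >= warmup_steps:
        return target_lr
    return start_lr + (target_lr - start_lr) * step / warmup_steps


# Example: a reference rate of 0.1 at batch size 256, scaled to batch size 8192.
target = scaled_lr(0.1, 256, 8192)
schedule = [warmup_lr(s, 5, 0.1, target) for s in range(7)]
```

Constant warmup, the other variant listed above, would instead hold a low fixed rate for the warmup period and then jump to the target.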

PowerAI DDL

- Topology-aware communication

ImageNet Training in Minutes

- Layer-wise Adaptive Rate Scaling (LARS)
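A minimal sketch of the LARS idea, assuming plain SGD without momentum: each layer gets a local learning rate proportional to the ratio of its weight norm to its gradient norm, so the size of the update relative to the weights stays roughly constant across layers. The names, the default trust coefficient and the `eps` guard are assumptions made for illustration.

```python
import math


def lars_local_lr(weights, grads, trust_coef=0.001, weight_decay=0.0, eps=1e-9):
    """Layer-wise rate: trust_coef * ||w|| / (||g|| + weight_decay * ||w||)."""
    w_norm = math.sqrt(sum(w * w for w in weights))
    g_norm = math.sqrt(sum(g * g for g in grads))
    if w_norm == 0.0 or g_norm == 0.0:
        return 1.0  # fall back to the global rate for degenerate layers
    return trust_coef * w_norm / (g_norm + weight_decay * w_norm + eps)


def lars_step(weights, grads, global_lr, trust_coef=0.001, weight_decay=0.0):
    """One SGD step for a single layer using the LARS local learning rate."""
    local_lr = lars_local_lr(weights, grads, trust_coef, weight_decay)
    return [
        w - global_lr * local_lr * (g + weight_decay * w)
        for w, g in zip(weights, grads)
    ]
```

Because the local rate divides by the gradient norm, multiplying a layer's gradient by a constant leaves the step size almost unchanged, which is what makes very large batches trainable.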

Don't Decay the Learning Rate, Increase the Batch Size

- Decaying the learning rate is simulated annealing

- Instead of decaying the learning rate, increase the batch size during training
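The schedule described above can be sketched as follows: at each milestone where a conventional schedule would divide the learning rate by some factor, the batch size is multiplied by that factor instead, until a cap is reached. The function name, the milestone interface and the fallback to learning-rate decay once the cap is hit are assumptions for this sketch.

```python
def lr_and_batch(epoch, milestones, factor, base_lr, base_batch, max_batch):
    """Return (learning_rate, batch_size) to use at the given epoch."""
    lr, batch = base_lr, base_batch
    for milestone in milestones:
        if epoch >= milestone:
            if batch * factor <= max_batch:
                batch *= factor  # grow the batch instead of decaying the rate
            else:
                lr /= factor     # batch is capped, so decay the rate as usual
    return lr, batch
```

Larger batches mean fewer parameter updates per epoch, which is where the reduction in wall-clock time comes from.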

Extremely Large Minibatch SGD: Training ResNet-50 on ImageNet in 15 Minutes

- RMSprop Warm-up

- Slow-start learning rate schedule

- Batch normalization without moving averages

Highly Scalable Deep Learning Training System with Mixed-Precision: Training ImageNet in Four Minutes

- Mixed precision

- Layer-wise Adaptive Rate Scaling (LARS)

- Improvements on model architecture

- Communication: tensor fusion, hierarchical all-reduce, hybrid all-reduce

ImageNet/ResNet-50 Training in 224 Seconds

- Batch size control to reduce accuracy degradation with mini-batch size exceeding 32K

- Communication: 2D-torus all-reduce
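A rough simulation of the data flow in a 2D-torus all-reduce, assuming the three-phase structure of reduce-scatter along rows, all-reduce along columns, and all-gather along rows. It models only which values end up where, not the ring communication or its timing; the function name and the nested-list grid representation are hypothetical.

```python
def torus_allreduce_2d(grid):
    """grid[r][c] is the gradient list held by the worker at row r, column c."""
    rows, cols = len(grid), len(grid[0])
    n = len(grid[0][0])
    if n % cols != 0:
        raise ValueError("gradient length must be divisible by the column count")
    chunk = n // cols

    # Phase 1: reduce-scatter within each row.
    # Worker (r, c) ends up owning the row-wise sum of chunk c.
    partial = [
        [
            [sum(grid[r][k][i] for k in range(cols))
             for i in range(c * chunk, (c + 1) * chunk)]
            for c in range(cols)
        ]
        for r in range(rows)
    ]

    # Phase 2: all-reduce within each column on the owned chunk only.
    reduced = [
        [
            [sum(partial[k][c][i] for k in range(rows)) for i in range(chunk)]
            for c in range(cols)
        ]
        for r in range(rows)
    ]

    # Phase 3: all-gather within each row so every worker holds the full sum.
    return [
        [[x for k in range(cols) for x in reduced[r][k]] for _ in range(cols)]
        for r in range(rows)
    ]
```

The vertical phase operates on only 1/cols of the gradient per worker, which is the source of the bandwidth saving over a single flat ring.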

Image Classification at Supercomputer Scale

- Mixed precision

- Layer-wise Adaptive Rate Scaling (LARS)

- Distributed batch normalization

- Input pipeline optimization: dataset sharding and caching, prefetch, fused JPEG decoding and cropping, parallel data parsing

- Communication: 2D gradient summation
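The distributed batch normalization item can be illustrated with a small sketch: each replica contributes its local count, sum and sum of squares, and the combined totals give the mean and variance of the whole group rather than of one small per-replica batch. The function names are hypothetical, and the summing loop stands in for what would be an all-reduce across replicas in a real system.

```python
def group_batch_stats(shards):
    """Mean and (population) variance over all values in a group of replicas."""
    count = sum(len(shard) for shard in shards)
    if count == 0:
        raise ValueError("at least one value is required")
    # In a real system these three totals would be combined with an all-reduce.
    total = sum(sum(shard) for shard in shards)
    total_sq = sum(sum(x * x for x in shard) for shard in shards)
    mean = total / count
    var = total_sq / count - mean * mean
    return mean, var


def normalize(shard, mean, var, eps=1e-5):
    """Normalize one replica's values with the shared group statistics."""
    return [(x - mean) / (var + eps) ** 0.5 for x in shard]
```

Sharing statistics across replicas matters when the per-replica batch is small, since statistics from only a few examples are noisy.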