GT-RIPL / Continual Learning Benchmark

License: MIT

Evaluate three types of task shifting with popular continual learning algorithms.

Stars: ✭ 245

Programming Languages

python


Projects that are alternatives to or similar to Continual Learning Benchmark

Neural Api

CAI NEURAL API - Pascal based neural network API optimized for AVX, AVX2 and AVX512 instruction sets plus OpenCL capable devices including AMD, Intel and NVIDIA.

Stars: ✭ 94 (-61.63%)

Mutual labels: artificial-neural-networks

100daysofmlcode

My journey to learn and grow in the domain of Machine Learning and Artificial Intelligence by performing the #100DaysofMLCode Challenge.

Stars: ✭ 146 (-40.41%)

Mutual labels: artificial-neural-networks

Sparse Evolutionary Artificial Neural Networks

Always sparse. Never dense. But never say never. A repository for the Adaptive Sparse Connectivity concept and its algorithmic instantiation, i.e. Sparse Evolutionary Training, to boost Deep Learning scalability on various aspects (e.g. memory and computational time efficiency, representation and generalization power).

Stars: ✭ 182 (-25.71%)

Mutual labels: artificial-neural-networks

Brain Inspired Replay

A brain-inspired version of generative replay for continual learning with deep neural networks (e.g., class-incremental learning on CIFAR-100; PyTorch code).

Stars: ✭ 99 (-59.59%)

Mutual labels: artificial-neural-networks

Brainforge

A Neural Networking library based on NumPy only

Stars: ✭ 114 (-53.47%)

Mutual labels: artificial-neural-networks

Java Deep Learning Cookbook

Code for Java Deep Learning Cookbook

Stars: ✭ 156 (-36.33%)

Mutual labels: artificial-neural-networks

Malware Classification

Towards Building an Intelligent Anti-Malware System: A Deep Learning Approach using Support Vector Machine for Malware Classification

Stars: ✭ 88 (-64.08%)

Mutual labels: artificial-neural-networks

Echotorch

A Python toolkit for Reservoir Computing and Echo State Network experimentation based on pyTorch. EchoTorch is the only Python module available to easily create Deep Reservoir Computing models.

Stars: ✭ 231 (-5.71%)

Mutual labels: artificial-neural-networks

Awesome Quantum Machine Learning

Here you can get all the Quantum Machine Learning basics, algorithms, study materials, projects and the descriptions of the projects around the web

Stars: ✭ 1,940 (+691.84%)

Mutual labels: artificial-neural-networks

Data Science Resources

👨🏽🏫You can learn about what data science is and why it's important in today's modern world. Are you interested in data science?🔋

Stars: ✭ 171 (-30.2%)

Mutual labels: artificial-neural-networks

Top Deep Learning

Top 200 deep learning GitHub repositories sorted by the number of stars.

Stars: ✭ 1,365 (+457.14%)

Mutual labels: artificial-neural-networks

Lightnn

The light deep learning framework for study and for fun. Join us!

Stars: ✭ 112 (-54.29%)

Mutual labels: artificial-neural-networks

Perfect Tensorflow

TensorFlow C API Class Wrapper in Server Side Swift.

Stars: ✭ 166 (-32.24%)

Mutual labels: artificial-neural-networks

Sigma

Rocket powered machine learning. Create, compare, adapt, improve - artificial intelligence at the speed of thought.

Stars: ✭ 98 (-60%)

Mutual labels: artificial-neural-networks

Free Ai Resources

🚀 FREE AI Resources - 🎓 Courses, 👷 Jobs, 📝 Blogs, 🔬 AI Research, and many more - for everyone!

Stars: ✭ 192 (-21.63%)

Mutual labels: artificial-neural-networks

Deep Learning Drizzle

Drench yourself in Deep Learning, Reinforcement Learning, Machine Learning, Computer Vision, and NLP by learning from these exciting lectures!!

Stars: ✭ 9,717 (+3866.12%)

Mutual labels: artificial-neural-networks

Mariana

The Cutest Deep Learning Framework which is also a wonderful Declarative Language

Stars: ✭ 151 (-38.37%)

Mutual labels: artificial-neural-networks

Udemy derinogrenmeyegiris

Practical exercises from the Udemy course Introduction to Deep Learning (Derin Öğrenmeye Giriş), and more

Stars: ✭ 239 (-2.45%)

Mutual labels: artificial-neural-networks

Aidl kb

A Knowledge Base for the FB Group Artificial Intelligence and Deep Learning (AIDL)

Stars: ✭ 219 (-10.61%)

Mutual labels: artificial-neural-networks

Cnn Svm

An Architecture Combining Convolutional Neural Network (CNN) and Linear Support Vector Machine (SVM) for Image Classification

Stars: ✭ 170 (-30.61%)

Mutual labels: artificial-neural-networks

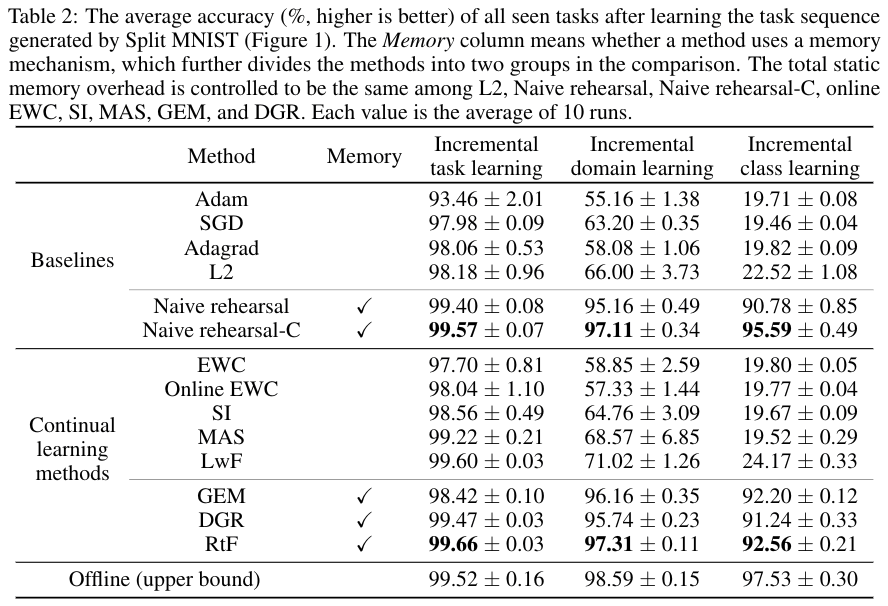

Continual-Learning-Benchmark

Evaluate three types of task shifting with popular continual learning algorithms.

This repository implements and modularizes the following algorithms in PyTorch:

- EWC: code, paper (Overcoming catastrophic forgetting in neural networks)

- Online EWC: code, paper

- SI: code, paper (Continual Learning Through Synaptic Intelligence)

- MAS: code, paper (Memory Aware Synapses: Learning what (not) to forget)

- GEM: code, paper (Gradient Episodic Memory for Continual Learning)

- (More to come)
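EWC, Online EWC, SI and MAS share one idea. After each task, the current parameters are stored as an anchor. A quadratic penalty then pulls each parameter back toward its anchor, weighted by a per-parameter importance. The methods differ mainly in how they estimate that importance:

- EWC uses the diagonal Fisher information.
- SI uses a path integral of each parameter's contribution to the decrease in loss.
- MAS uses the magnitude of the gradients of the squared L2 norm of the network output.

GEM works differently. It constrains gradient updates so that the loss on stored episodic-memory samples does not increase, and it is not shown here.

The sketch below covers only the shared penalty and one common Online EWC importance update: decay the running estimate by gamma, then add the new task's Fisher. It uses plain Python lists. The function names and the reg_coef / 2 scaling (taken from the EWC paper) are assumptions, not the repository's implementation.

```python
from typing import List, Sequence


def quadratic_penalty(params: Sequence[float],
                      anchor: Sequence[float],
                      importance: Sequence[float],
                      reg_coef: float) -> float:
    """Hypothetical helper: importance-weighted quadratic penalty.

    Computes reg_coef / 2 * sum_i importance_i * (params_i - anchor_i) ** 2,
    where `anchor` holds the parameters saved after the previous task(s).
    """
    if not (len(params) == len(anchor) == len(importance)):
        raise ValueError("params, anchor and importance must have equal length")
    total = sum(w * (p - a) ** 2 for p, a, w in zip(params, anchor, importance))
    return 0.5 * reg_coef * total


def online_ewc_update(old_importance: Sequence[float],
                      new_importance: Sequence[float],
                      gamma: float) -> List[float]:
    """Hypothetical helper: decay the running importance by gamma and add the new task's Fisher."""
    return [gamma * old + new for old, new in zip(old_importance, new_importance)]


# Usage: two parameters, anchored at their values after task 1.
theta = [1.0, 2.0]
theta_star = [0.0, 1.0]
fisher = [2.0, 0.5]
print(quadratic_penalty(theta, theta_star, fisher, reg_coef=2.0))  # 2.5
print(online_ewc_update([1.0, 2.0], [3.0, 4.0], gamma=0.5))  # [3.5, 5.0]
```

In a real model, the lists would be replaced by per-parameter tensors. The penalty would then be added to the task loss before backpropagation.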

All the above algorithms are compared with the following baselines under the same static memory overhead:

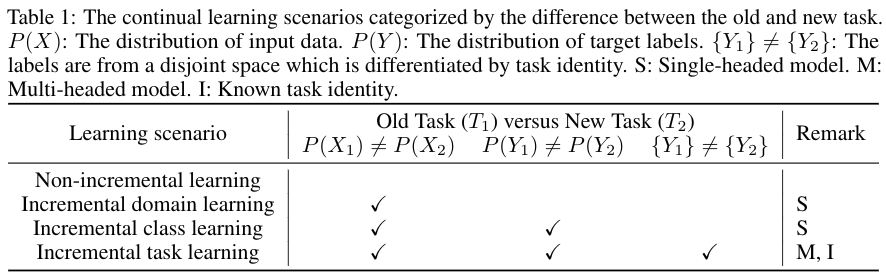

Key tables:

If this repository helps your work, please cite:

@inproceedings{Hsu18_EvalCL,
  title={Re-evaluating Continual Learning Scenarios: A Categorization and Case for Strong Baselines},
  author={Yen-Chang Hsu and Yen-Cheng Liu and Anita Ramasamy and Zsolt Kira},
  booktitle={NeurIPS Continual learning Workshop},
  year={2018},
  url={https://arxiv.org/abs/1810.12488}
}

Preparation

This repository was tested with Python 3.6 and PyTorch 1.0.1.post2. Some of the cases were also tested with PyTorch 1.5.1 and gave the same results.

pip install -r requirements.txt

Demo

The scripts for reproducing the results of this paper are under the scripts folder.

- Example: Run all algorithms in the incremental domain scenario with split MNIST.

./scripts/split_MNIST_incremental_domain.sh 0
# The last argument is the GPU ID
# Outputs will be saved in ./outputs

- Example output: summary of repeats

===Summary of experiment repeats: 3 / 3 ===
The regularization coefficient: 400.0
The last avg acc of all repeats: [90.517 90.648 91.069]
mean: 90.74466666666666 std: 0.23549144829955856

- Example output: grid search for the regularization coefficient

reg_coef: 0.1 mean: 76.08566666666667 std: 1.097717733400629
reg_coef: 1.0 mean: 77.59100000000001 std: 2.100847606721314
reg_coef: 10.0 mean: 84.33933333333334 std: 0.3592671553160509
reg_coef: 100.0 mean: 90.83800000000001 std: 0.6913701372395712
reg_coef: 1000.0 mean: 87.48566666666666 std: 0.5440161353816179
reg_coef: 5000.0 mean: 68.99133333333333 std: 1.6824762174313899
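Both outputs can be reproduced or post-processed with a few lines of Python.

- Summary of repeats: the reported std matches the population standard deviation (dividing by N rather than N − 1), which is also NumPy's `np.std` default. The sketch uses the standard-library `statistics` module instead.
- Grid search: the sketch parses lines in the format shown above and picks the coefficient with the highest mean accuracy.

The helper names and the selection rule (highest mean, ignoring std) are assumptions for illustration, not part of the repository.

```python
import re
import statistics
from typing import Dict, Tuple

# 1) Reproduce the "Summary of repeats" numbers from the per-repeat accuracies.
accs = [90.517, 90.648, 91.069]
mean = statistics.fmean(accs)
std = statistics.pstdev(accs)  # population std (divide by N), matching the log
print(f"mean: {mean:.6f} std: {std:.6f}")  # mean: 90.744667 std: 0.235491

# 2) Pick the best coefficient from the grid-search output.
# Matches lines such as "reg_coef: 100.0 mean: 90.838 std: 0.691".
LINE_RE = re.compile(
    r"reg_coef:\s*([-+\d.eE]+)\s+mean:\s*([-+\d.eE]+)\s+std:\s*([-+\d.eE]+)"
)


def parse_grid_log(text: str) -> Dict[float, Tuple[float, float]]:
    """Hypothetical helper: map each coefficient to its (mean, std) accuracy."""
    results = {}
    for match in LINE_RE.finditer(text):
        coef, acc_mean, acc_std = (float(g) for g in match.groups())
        results[coef] = (acc_mean, acc_std)
    return results


def best_coefficient(results: Dict[float, Tuple[float, float]]) -> float:
    """Hypothetical helper: return the coefficient with the highest mean accuracy."""
    return max(results, key=lambda coef: results[coef][0])


log = """
reg_coef: 0.1 mean: 76.08566666666667 std: 1.097717733400629
reg_coef: 1.0 mean: 77.59100000000001 std: 2.100847606721314
reg_coef: 10.0 mean: 84.33933333333334 std: 0.3592671553160509
reg_coef: 100.0 mean: 90.83800000000001 std: 0.6913701372395712
reg_coef: 1000.0 mean: 87.48566666666666 std: 0.5440161353816179
reg_coef: 5000.0 mean: 68.99133333333333 std: 1.6824762174313899
"""

results = parse_grid_log(log)
print(best_coefficient(results))  # 100.0
```

For the three repeats above, `statistics.stdev` (sample standard deviation) would give about 0.288 instead, which does not match the log.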

Usage

- Enable the grid search for the regularization coefficient: pass a list of values to the option, e.g., --reg_coef 0.1 1 10 100 ...

- Repeat the experiment N times: use the option --repeat N

Look up the available options:

python iBatchLearn.py -h
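Options like these map naturally onto Python's `argparse`. A list-valued option uses `nargs="+"`, and `-h` is generated automatically.

The sketch below is a hypothetical, minimal parser. It assumes the options are spelled `--reg_coef` and `--repeat`, and that every coefficient is run `repeat` times. It is not the actual `iBatchLearn.py`, whose defaults and remaining options should be checked with `-h`.

```python
import argparse
from typing import List, Optional, Tuple


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical subset of options, for illustration only.
    parser = argparse.ArgumentParser(description="Continual learning benchmark (sketch)")
    parser.add_argument("--reg_coef", nargs="+", type=float, default=[0.0],
                        help="one or more regularization coefficients; "
                             "more than one value enables a grid search")
    parser.add_argument("--repeat", type=int, default=1,
                        help="number of times to repeat each experiment")
    return parser


def plan_runs(argv: Optional[List[str]] = None) -> List[Tuple[float, int]]:
    """Expand the parsed options into (reg_coef, repeat_index) pairs."""
    args = build_parser().parse_args(argv)
    return [(coef, r) for coef in args.reg_coef for r in range(args.repeat)]


runs = plan_runs(["--reg_coef", "0.1", "1", "10", "--repeat", "2"])
print(runs)
# [(0.1, 0), (0.1, 1), (1.0, 0), (1.0, 1), (10.0, 0), (10.0, 1)]
```

Each (coefficient, repeat) pair would correspond to one training run. The per-coefficient mean and std in the grid-search output are aggregated over those repeats.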

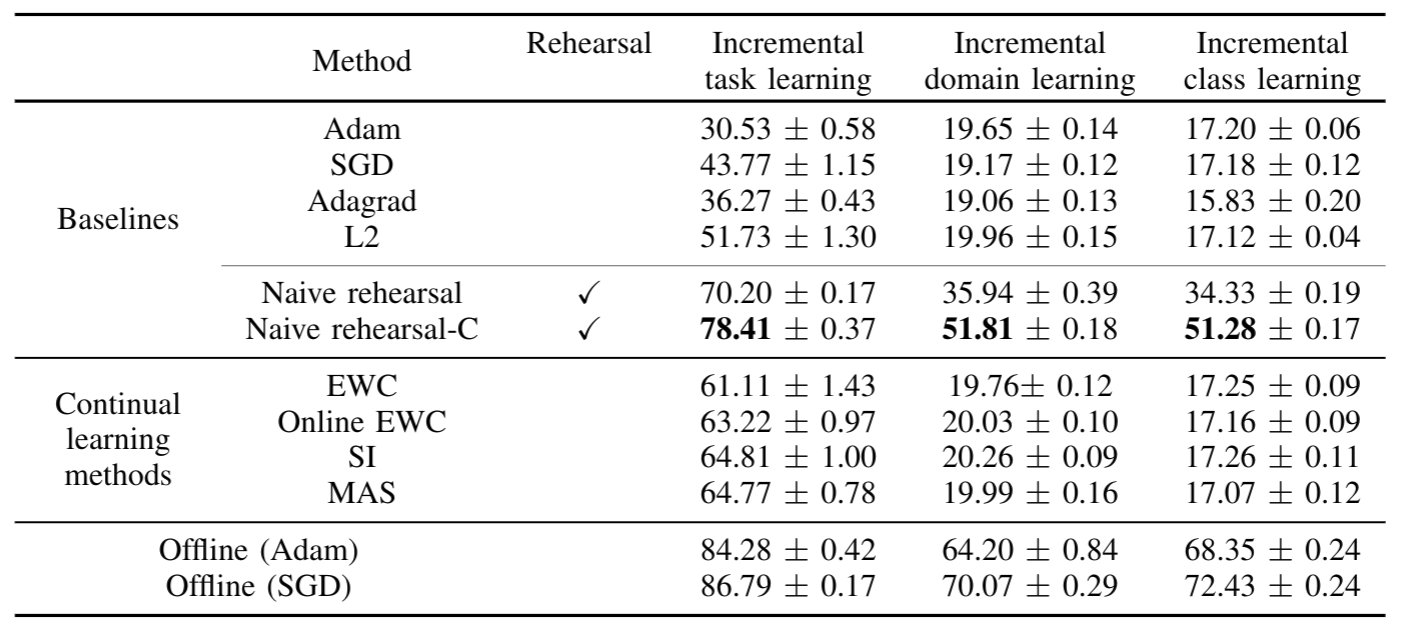

Other results

Below are CIFAR100 results. Please refer to the scripts for details.
