DeeperForensics-1.0: A Large-Scale Dataset for Real-World Face Forgery Detection

This repository provides the dataset and code for the following paper:

DeeperForensics-1.0: A Large-Scale Dataset for Real-World Face Forgery Detection

Liming Jiang, Ren Li, Wayne Wu, Chen Qian and Chen Change Loy

In CVPR 2020.

Project Page | Paper | YouTube Demo

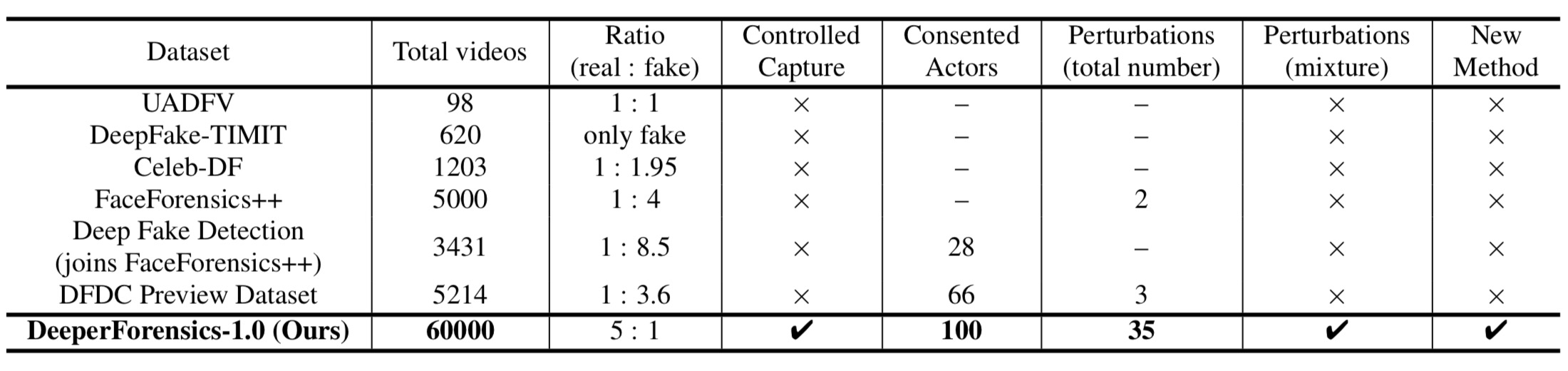

Abstract: We present our on-going effort of constructing a large-scale benchmark for face forgery detection. The first version of this benchmark, DeeperForensics-1.0, represents the largest face forgery detection dataset by far, with 60,000 videos constituted by a total of 17.6 million frames, 10 times larger than existing datasets of the same kind. Extensive real-world perturbations are applied to obtain a more challenging benchmark of larger scale and higher diversity. All source videos in DeeperForensics-1.0 are carefully collected, and fake videos are generated by a newly proposed end-to-end face swapping framework. The quality of generated videos outperforms those in existing datasets, validated by user studies. The benchmark features a hidden test set, which contains manipulated videos achieving high deceptive scores in human evaluations. We further contribute a comprehensive study that evaluates five representative detection baselines and make a thorough analysis of different settings.

Updates

- [02/2021] The technical report of DeeperForensics Challenge 2020 is released on arXiv.
- [08/2020] The DeeperForensics Challenge 2020 starts together with ECCV 2020 SenseHuman Workshop.
- [05/2020] The perturbation codes of DeeperForensics-1.0 are released.
- [05/2020] The dataset of DeeperForensics-1.0 is released.
- [02/2020] The paper of DeeperForensics-1.0 is accepted by CVPR 2020.

Dataset

The DeeperForensics-1.0 dataset is publicly available for non-commercial research purposes. Please visit the dataset download and document page for more details. Before using the DeeperForensics-1.0 dataset to train a face forgery detection model, please read these important tips first.

Code

The code to implement the diverse perturbations in our dataset has been released. Please see the perturbation implementation for more details.

Competition

We have hosted DeeperForensics Challenge 2020 based on the DeeperForensics-1.0 dataset. The challenge officially starts at the ECCV 2020 SenseHuman Workshop. The prizes total $15,000 (AWS promotional code). If you are interested in contributing new ideas to advance the state of the art in real-world face forgery detection, we look forward to your participation!

The technical report of DeeperForensics Challenge 2020 has been released on arXiv.

Summary

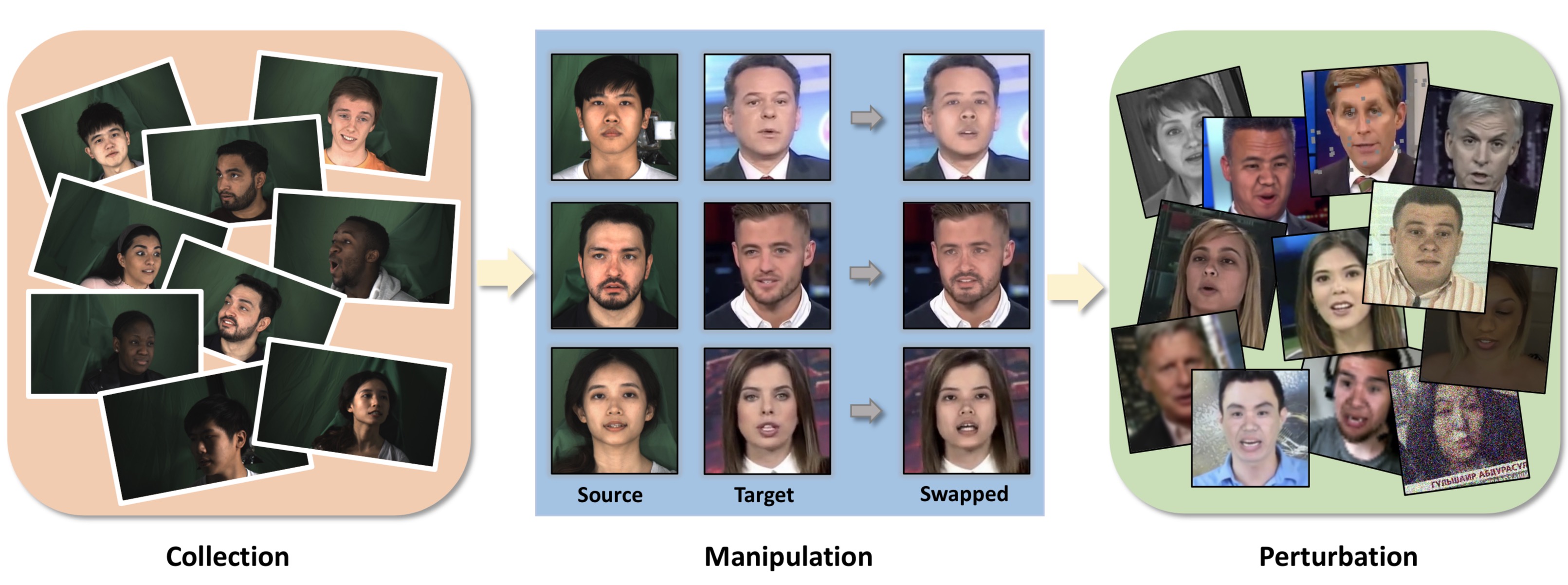

Data Collection

We invite 100 paid actors from 26 countries to record the source videos. Our high-quality collected data vary in identities, poses, expressions, emotions, lighting conditions, and 3DMM blendshapes.

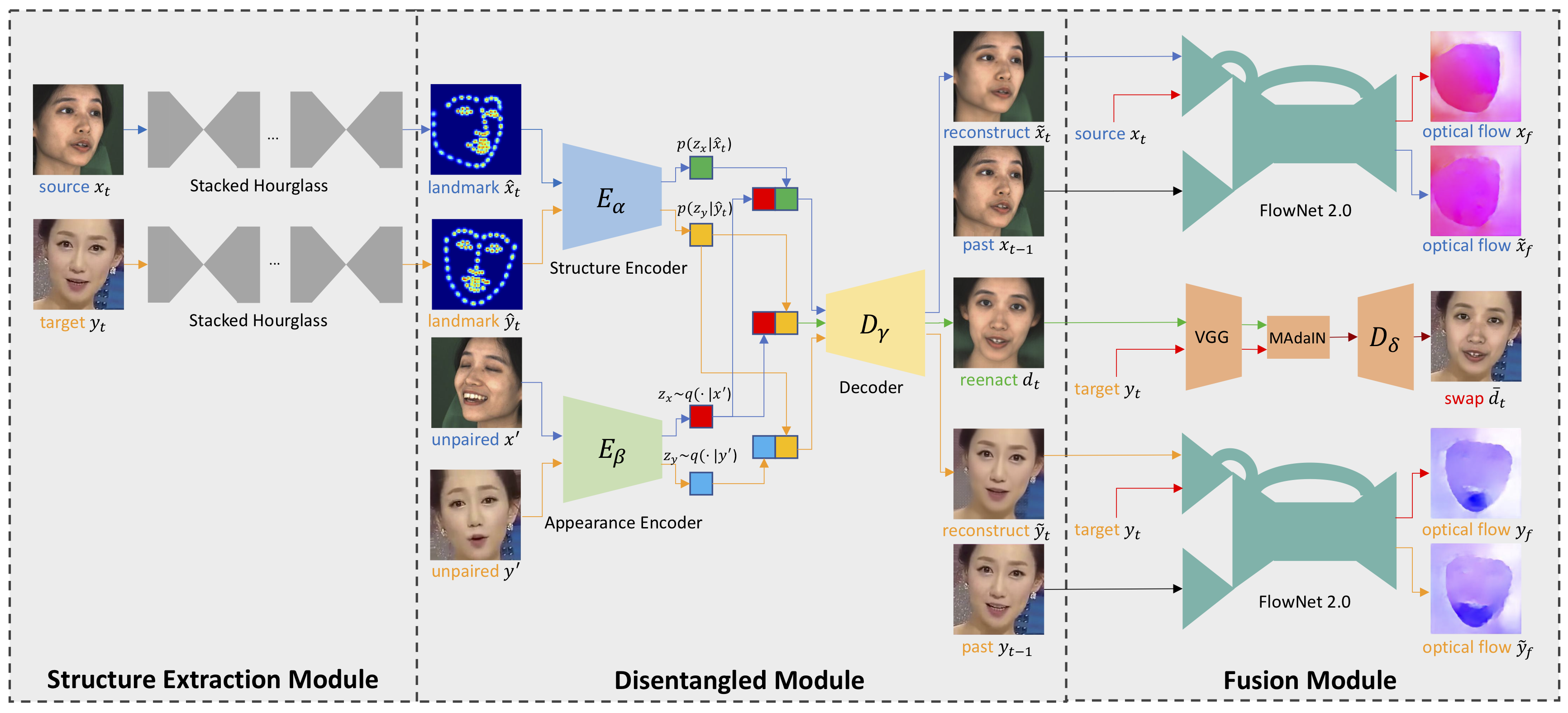

Face Manipulation

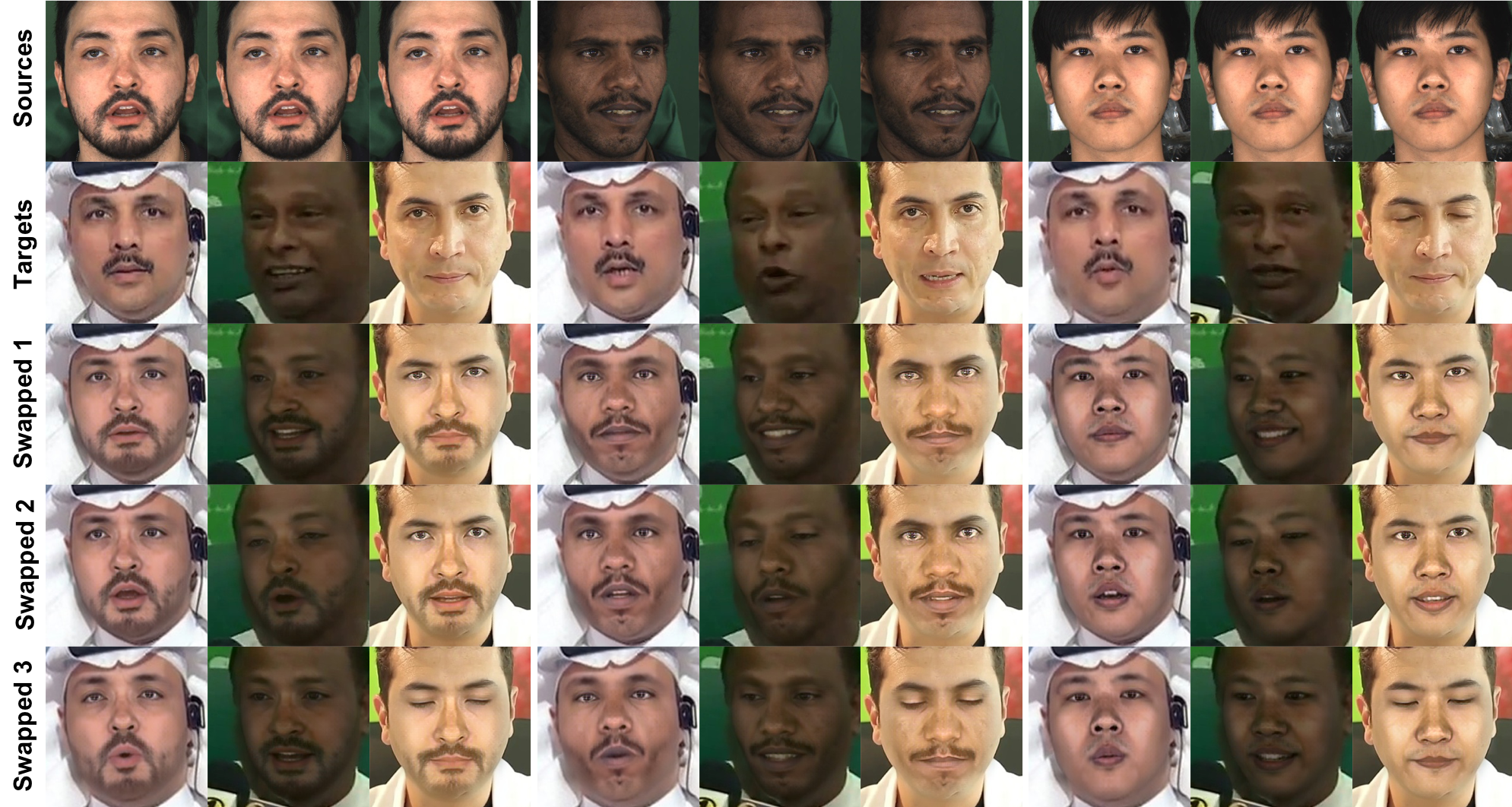

We also propose a new learning-based many-to-many face swapping method, DeepFake Variational Auto-Encoder (DF-VAE). DF-VAE improves scalability, style matching, and temporal continuity to ensure face swapping quality.

Several face manipulation results:

Many-to-many (three-to-three) face swapping by a single model:

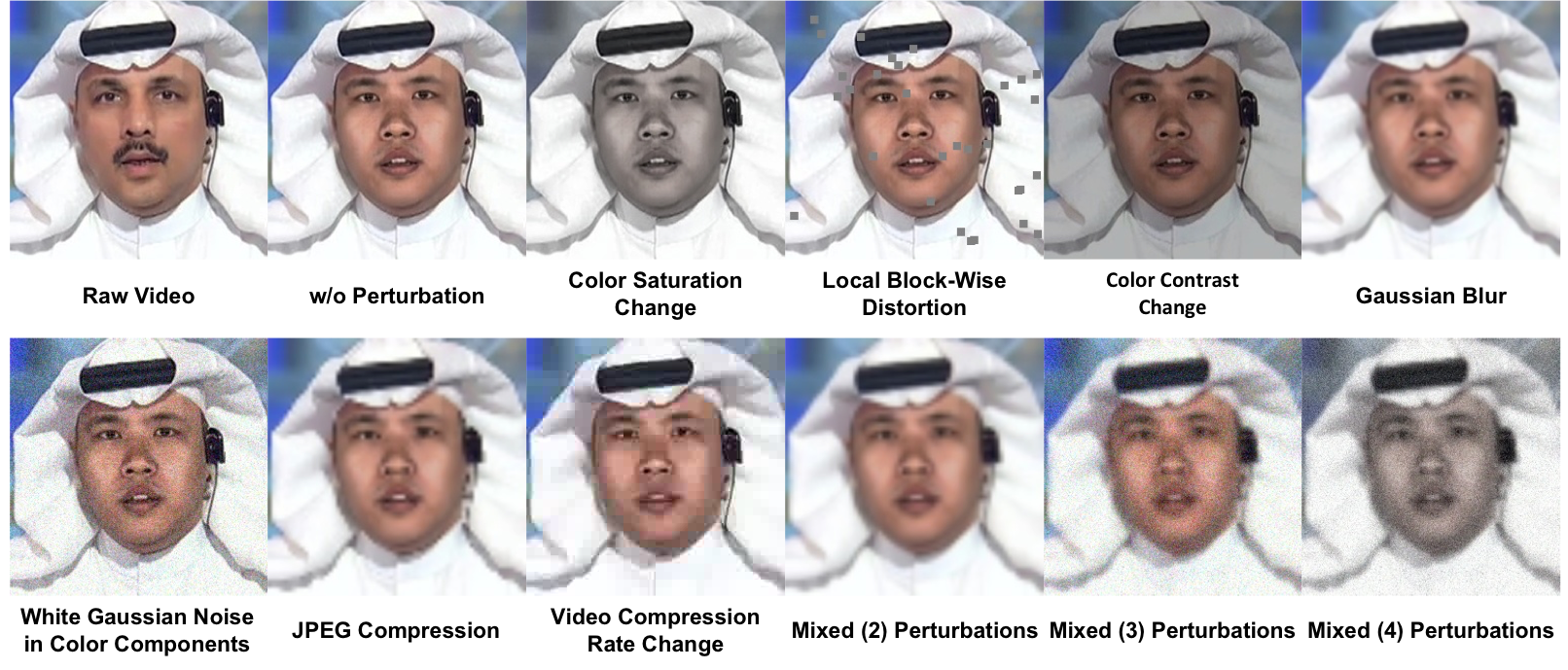

Real-World Perturbation

We apply 7 types of distortions (transmission errors, compression, etc.) at 5 intensity levels. Some videos are subjected to a mixture of more than one distortion. These perturbations help DeeperForensics-1.0 better simulate real-world scenarios.
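As a rough illustration of this idea (not the released perturbation code), the sketch below applies two toy distortions, additive Gaussian noise and a contrast change, at one of five intensity levels, and chains them to form a mixed distortion. The function names, the level-to-parameter tables, and the choice of distortions are all assumptions made for this example; please see the perturbation implementation for the actual distortion types and parameters.

```python
import random

# Hypothetical level-to-parameter tables (NOT the values used in the dataset).
NOISE_SIGMA = {1: 2.0, 2: 5.0, 3: 10.0, 4: 20.0, 5: 40.0}
CONTRAST_GAIN = {1: 0.9, 2: 0.8, 3: 0.65, 4: 0.5, 5: 0.35}


def _clip(value):
    """Round a pixel value and clamp it to the 8-bit range [0, 255]."""
    return max(0, min(255, int(round(value))))


def gaussian_noise(frame, level, rng):
    """Add zero-mean Gaussian noise whose standard deviation grows with the level."""
    sigma = NOISE_SIGMA[level]
    return [[_clip(p + rng.gauss(0.0, sigma)) for p in row] for row in frame]


def contrast_change(frame, level, rng=None):
    """Pull pixel values toward mid-gray; higher levels reduce contrast more."""
    gain = CONTRAST_GAIN[level]
    return [[_clip(128 + gain * (p - 128)) for p in row] for row in frame]


# Illustrative registry; the dataset uses 7 distortion types, only 2 are sketched here.
DISTORTIONS = {"noise": gaussian_noise, "contrast": contrast_change}


def perturb(frame, steps, seed=0):
    """Apply a sequence of (distortion_name, level) steps to a grayscale frame.

    A single step gives one distortion; several steps give a mixture.
    """
    rng = random.Random(seed)
    for name, level in steps:
        if level not in range(1, 6):
            raise ValueError("level must be between 1 and 5")
        frame = DISTORTIONS[name](frame, level, rng)
    return frame


if __name__ == "__main__":
    frame = [[0, 64, 128], [192, 255, 100]]  # tiny 2x3 grayscale frame
    print(perturb(frame, [("contrast", 3)]))                 # single distortion
    print(perturb(frame, [("noise", 2), ("contrast", 5)]))   # mixed distortion
```

Passing a list of steps keeps single and mixed distortions on the same code path, and the fixed seed makes a perturbed frame reproducible. For video, the same steps would be applied frame by frame.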

Benchmark

We benchmark five representative forgery detection methods using the DeeperForensics-1.0 dataset. Please refer to our paper for more information.

Citation

If you find this work useful for your research, please cite our papers:

@inproceedings{jiang2020deeperforensics1,
  title={{DeeperForensics-1.0}: A Large-Scale Dataset for Real-World Face Forgery Detection},
  author={Jiang, Liming and Li, Ren and Wu, Wayne and Qian, Chen and Loy, Chen Change},
  booktitle={CVPR},
  year={2020}
}

@article{jiang2021dfc20,
  title={{DeeperForensics Challenge 2020} on Real-World Face Forgery Detection: Methods and Results},
  author={Jiang, Liming and Guo, Zhengkui and Wu, Wayne and Liu, Zhaoyang and Liu, Ziwei and Loy, Chen Change and Yang, Shuo and Xiong, Yuanjun and Xia, Wei and Chen, Baoying and Zhuang, Peiyu and Li, Sili and Chen, Shen and Yao, Taiping and Ding, Shouhong and Li, Jilin and Huang, Feiyue and Cao, Liujuan and Ji, Rongrong and Lu, Changlei and Tan, Ganchao},
  journal={arXiv preprint},
  volume={arXiv:2102.09471},
  year={2021}
}

Acknowledgments

This work is supported by the SenseTime-NTU Collaboration Project, Singapore MOE AcRF Tier 1 (2018-T1-002-056), NTU SUG, and NTU NAP. We gratefully acknowledge the exceptional help from Hao Zhu and Keqiang Sun for their contributions to source data collection and coordination.

Contact

If you have any questions, please contact us by sending an email to [email protected].

Terms of Use

The use of DeeperForensics-1.0 is bound by the Terms of Use: DeeperForensics-1.0 Dataset.

The code is released under the MIT license.

Copyright (c) 2020