
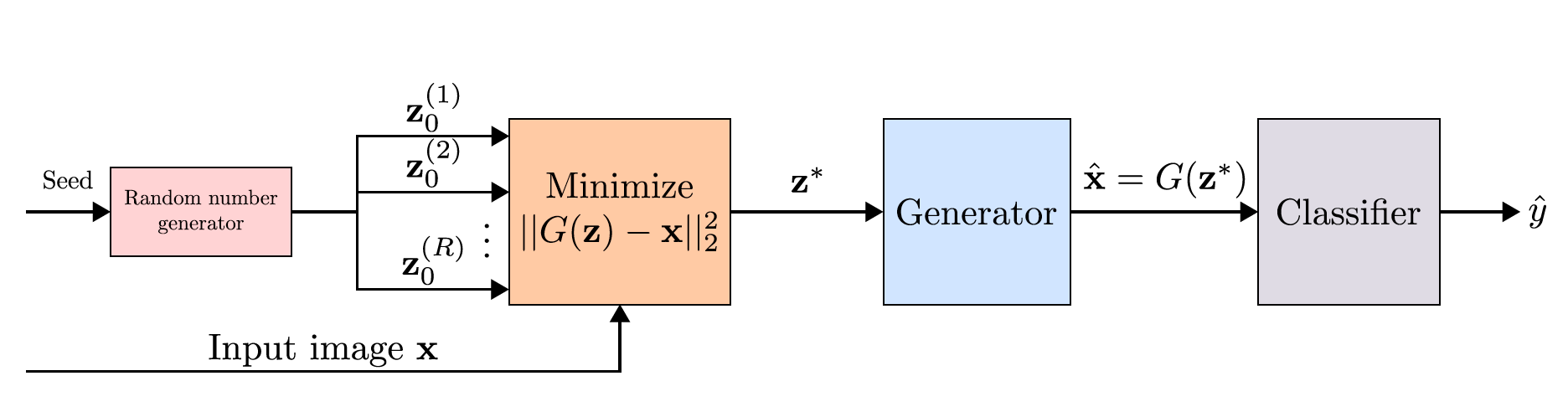

Defense-GAN: Protecting Classifiers Against Adversarial Attacks Using Generative Models

Pouya Samangouei*, Maya Kabkab*, Rama Chellappa

[*: authors contributed equally]

This repository contains the implementation of our ICLR-18 paper: Defense-GAN: Protecting Classifiers Against Adversarial Attacks Using Generative Models

If you find this code or the paper useful, please consider citing:

@inproceedings{defensegan,
  title={Defense-GAN: Protecting classifiers against adversarial attacks using generative models},
  author={Samangouei, Pouya and Kabkab, Maya and Chellappa, Rama},
  booktitle={International Conference on Learning Representations},
  year={2018}
}


Installation

- Clone this repository:

git clone --recursive https://github.com/kabkabm/defensegan

cd defensegan

git submodule update --init --recursive

- Install requirements:

pip install -r requirements.txt

Note: if you don't have a GPU, install the CPU version of TensorFlow 1.7.

- Download the dataset and prepare the data directory:

python download_dataset.py [mnist|f-mnist|celeba]

- Create or link the output and debug directories:

mkdir output

mkdir debug

or

ln -s <path-to-output> output

ln -s <path-to-debug> debug

Usage

Train a GAN model

python train.py --cfg <path> --is_train <extra-args>

- --cfg can be set to either a .yml configuration file, like the ones in experiments/cfgs, or an output directory path.
- <extra-args> can be any parameter that is defined in the config file.

The training will create a directory in the output directory per experiment, with the same name as <config>, to save the model checkpoints. If <extra-args> are different from the ones that are defined in <config>, the output directory name will reflect the difference.

A config file is saved into each experiment directory so that the experiment can be reloaded by passing that directory as <path>.
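The two rules above (a --cfg value may be either a .yml file or an experiment directory, and overrides that differ from the config change the directory name) can be sketched as follows. This is a hypothetical illustration, not the code in train.py: the name of the saved config file (cfg.yml) and the `_<key>-<value>` suffix format are assumptions made only for this example.

```python
import os

# Hypothetical name of the config copy stored in each experiment directory;
# the file name actually written by train.py may differ.
SAVED_CFG_NAME = "cfg.yml"


def resolve_cfg(path):
    """Return the config file to load for a --cfg argument.

    A path ending in .yml is used directly; any other path is treated as an
    experiment directory that contains a saved copy of its config.
    """
    if path.endswith(".yml"):
        return path
    return os.path.join(path, SAVED_CFG_NAME)


def experiment_dir(output_root, cfg_path, defaults, overrides):
    """Build an experiment directory name from a config path (hypothetical scheme).

    Only overrides whose values differ from the config's own values are
    appended, so re-running with the config's defaults reuses the same directory.
    """
    # e.g. experiments/cfgs/gans/mnist.yml -> gans/mnist
    rel = os.path.relpath(cfg_path, os.path.join("experiments", "cfgs"))
    name = os.path.splitext(rel)[0]
    diffs = sorted((k, v) for k, v in overrides.items() if defaults.get(k) != v)
    suffix = "".join(f"_{k}-{v}" for k, v in diffs)
    return os.path.join(output_root, name + suffix)
```

With no differing overrides, experiments/cfgs/gans/mnist.yml maps to output/gans/mnist, which matches the example that follows; the exact naming the repository uses for overridden runs may differ.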

Example

After running

python train.py --cfg experiments/cfgs/gans/mnist.yml --is_train

output/gans/mnist will be created.

[optional] Save reconstructions and datasets into cache:

python train.py --cfg experiments/cfgs/<config> --save_recs

python train.py --cfg experiments/cfgs/<config> --save_ds

Example

After running the training code for mnist, the reconstructions and the dataset can be saved with:

python train.py --cfg output/gans/mnist --save_recs

python train.py --cfg output/gans/mnist --save_ds

As training goes on, sample outputs of the generator are written to debug/gans/<model_config>.

Black-box attacks

To perform black-box experiments, run blackbox.py [Tables 1 and 2 of the paper]:

python blackbox.py --cfg <path> \

--results_dir <results_path> \

--bb_model {A, B, C, D, E} \

--sub_model {A, B, C, D, E} \

--fgsm_eps <epsilon> \

--defense_type {none|defense_gan|adv_tr} \

[--train_on_recs or --online_training] \

<optional-arguments>

- --cfg is the path to the config file for training the iWGAN. This can also be the path to the output directory of the model.
- --results_dir: The path where the final results are saved in text files.
- --bb_model: The black-box model architectures that are used in Tables 1 and 2.
- --sub_model: The substitute model architectures that are used in Tables 1 and 2.
- --defense_type specifies the type of defense used to protect the classifier.
- --train_on_recs or --online_training: These parameters are optional. If they are set, the classifier will be trained on the reconstructions of Defense-GAN (e.g. in column Defense-GAN-Rec of Tables 1 and 2). Otherwise, the results are for Defense-GAN-Orig. Note that --online_training will take a while if --rec_iters, or L in the paper, is set to a large value.
- <optional-arguments>: A list of --<arg_name> <arg_val> pairs that are the same as the hyperparameters defined in the config files (all lower case), as well as the flags in blackbox.py. The most important ones are:

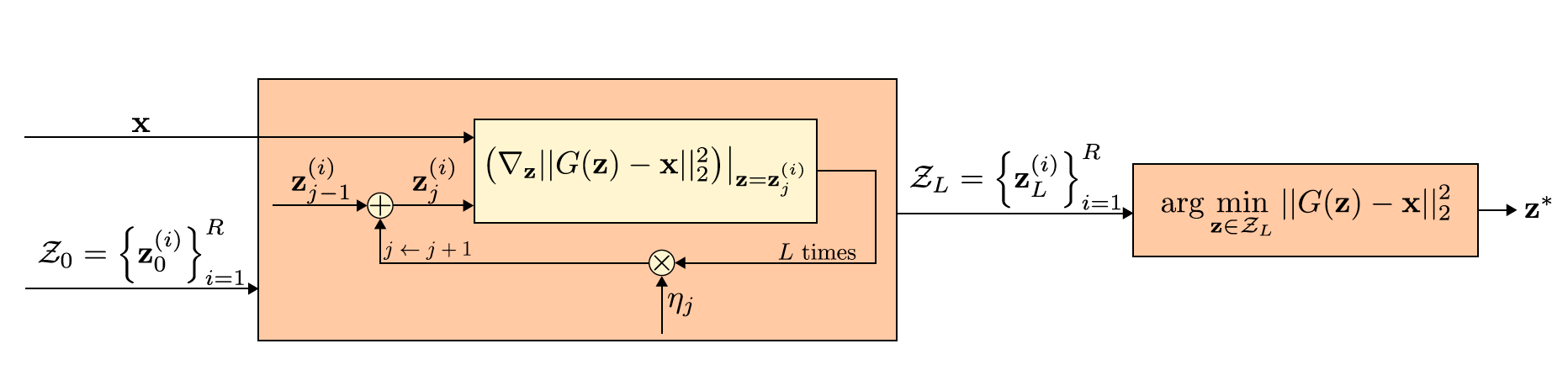

  - --rec_iters: The number of gradient descent (GD) reconstruction iterations for Defense-GAN, or L in the paper.
  - --rec_lr: The learning rate of the reconstruction step.
  - --rec_rr: The number of random restarts for the reconstruction step, or R in the paper.
  - --num_train: The number of images to train the black-box model on. For debugging purposes, set this to a small value.
  - --num_test: The number of images to test on. For debugging purposes, set this to a small value.
  - --debug: Saves qualitative attack and reconstruction results in the debug directory and skips the adversarial attack part of the code.
- Refer to blackbox.py for more flag descriptions.
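The reconstruction flags above correspond to Defense-GAN's projection step: start from R random latent vectors (--rec_rr), run L gradient-descent steps (--rec_iters) with learning rate --rec_lr on the reconstruction error ||G(z) - x||^2, keep the z with the lowest error, and classify G(z) instead of x. The sketch below is a minimal, dependency-free illustration under stated assumptions: the generator is a toy linear map G(z) = A z rather than the trained iWGAN, its gradient is written out by hand instead of coming from backpropagation, and all function names are hypothetical.

```python
import random


def generate(A, z):
    """Toy linear 'generator' G(z) = A z, standing in for the trained GAN."""
    return [sum(a * zj for a, zj in zip(row, z)) for row in A]


def recon_loss(A, z, x):
    """Squared reconstruction error ||G(z) - x||^2."""
    return sum((g - xi) ** 2 for g, xi in zip(generate(A, z), x))


def defense_gan_project(A, x, rec_iters=200, rec_lr=0.05, rec_rr=10, seed=0):
    """Approximate z* = argmin_z ||G(z) - x||^2 using R restarts of L GD steps.

    Returns (G(z*), loss). In Defense-GAN the classifier is then applied to
    G(z*) instead of the (possibly adversarial) input x.
    """
    rng = random.Random(seed)
    k = len(A[0])
    best_z, best_loss = None, float("inf")
    for _ in range(rec_rr):  # R random restarts
        z = [rng.gauss(0.0, 1.0) for _ in range(k)]
        for _ in range(rec_iters):  # L gradient-descent steps
            residual = [g - xi for g, xi in zip(generate(A, z), x)]
            # Gradient of ||A z - x||^2 with respect to z is 2 A^T (A z - x).
            grad = [2.0 * sum(A[i][j] * residual[i] for i in range(len(A)))
                    for j in range(k)]
            z = [zj - rec_lr * gj for zj, gj in zip(z, grad)]
        loss = recon_loss(A, z, x)
        if loss < best_loss:  # keep the restart with the lowest error
            best_z, best_loss = z, loss
    return generate(A, best_z), best_loss
```

Because this toy G is linear, the search simply projects x onto the column space of A and every restart reaches the same answer. With a real GAN the objective is non-convex, which is why several random restarts help, and larger L and R make the defense slower.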

Example

- Row 1 of Table 1, Defense-GAN-Orig:

python blackbox.py --cfg output/gans/mnist \

--results_dir defensegan \

--bb_model A \

--sub_model B \

--fgsm_eps 0.3 \

--defense_type defense_gan

- If you set --nb_epochs 1 --nb_epochs_s 1 --data_aug 1, you will get a quick glance at how the script works.
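--fgsm_eps sets the step size ε of the Fast Gradient Sign Method (FGSM), which moves every input pixel by ±ε in the direction that increases the classifier's loss. The following is a minimal sketch against a toy logistic-regression classifier, not the repository's implementation; it assumes pixel values scaled to [0, 1], and the function names are hypothetical.

```python
import math


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def sign(v):
    """Return -1, 0 or 1 (0 for both +0.0 and -0.0)."""
    return (v > 0) - (v < 0)


def fgsm(x, y, w, b, eps):
    """FGSM against a toy classifier p(y=1 | x) = sigmoid(w . x + b).

    For binary cross-entropy the gradient of the loss with respect to x is
    (p - y) * w, so each pixel moves by eps along the sign of that gradient
    and is then clipped to the assumed [0, 1] pixel range.
    """
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    grad = [(p - y) * wi for wi in w]
    return [min(1.0, max(0.0, xi + eps * sign(g))) for xi, g in zip(x, grad)]
```

In the black-box setting the attacker cannot see the protected classifier, so the gradient is taken on the substitute model (--sub_model) and the resulting adversarial examples are then fed to the black-box model.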

White-box attacks

To test Defense-GAN against white-box attacks, run whitebox.py [Tables 4, 5, and 12 of the paper]:

python whitebox.py --cfg <path> \

--results_dir <results-dir> \

--attack_type {fgsm, rand_fgsm, cw} \

--defense_type {none|defense_gan|adv_tr} \

--model {A, B, C, D} \

[--train_on_recs or --online_training] \

<optional-arguments>

- --cfg is the path to the config file for training the iWGAN. This can also be the path to the output directory of the model.
- --results_dir: The path where the final results are saved in text files.
- --defense_type specifies the type of defense used to protect the classifier.
- --train_on_recs or --online_training: These parameters are optional. If they are set, the classifier will be trained on the reconstructions of Defense-GAN (e.g. in column Defense-GAN-Rec of Tables 1 and 2). Otherwise, the results are for Defense-GAN-Orig. Note that --online_training will take a while if --rec_iters, or L in the paper, is set to a large value.
- <optional-arguments>: A list of --<arg_name> <arg_val> pairs that are the same as the hyperparameters defined in the config files (all lower case), as well as the flags in whitebox.py. The most important ones are:
  - --rec_iters: The number of gradient descent (GD) reconstruction iterations for Defense-GAN, or L in the paper.
  - --rec_lr: The learning rate of the reconstruction step.
  - --rec_rr: The number of random restarts for the reconstruction step, or R in the paper.
  - --num_test: The number of images to test on. For debugging purposes, set this to a small value.
- Refer to whitebox.py for more flag descriptions.

Example

First row of Table 4:

python whitebox.py --cfg <path> \

--results_dir whitebox \

--attack_type fgsm \

--defense_type defense_gan \

--model A

- If you want to quickly see how the scripts work, add the following flags:

--nb_epochs 1 --num_tests 400
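--attack_type rand_fgsm corresponds to the randomized FGSM (RAND+FGSM) attack evaluated in the paper, introduced by Tramèr et al.: it first takes a random sign step of size α and then an FGSM step of size ε - α from that point, so the total perturbation stays within ε. Below is a minimal sketch against a toy logistic-regression classifier, assuming pixel values in [0, 1]; alpha is a parameter of this sketch only (how whitebox.py names or sets it is not covered here), and the function names are hypothetical.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def sign(v):
    """Return -1, 0 or 1."""
    return (v > 0) - (v < 0)


def input_grad(x, y, w, b):
    """Gradient of binary cross-entropy w.r.t. x for p(y=1|x) = sigmoid(w . x + b)."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [(p - y) * wi for wi in w]


def rand_fgsm(x, y, w, b, eps, alpha, seed=0):
    """RAND+FGSM: random sign step of size alpha, then FGSM step of size eps - alpha.

    Both steps are clipped to the assumed [0, 1] pixel range, which keeps the
    total L-infinity perturbation within eps.
    """
    if not 0.0 <= alpha < eps:
        raise ValueError("alpha must satisfy 0 <= alpha < eps")
    rng = random.Random(seed)
    # Random step: move each pixel by alpha along the sign of Gaussian noise.
    x1 = [min(1.0, max(0.0, xi + alpha * sign(rng.gauss(0.0, 1.0)))) for xi in x]
    # FGSM step from the randomized point with the remaining budget.
    g = input_grad(x1, y, w, b)
    return [min(1.0, max(0.0, xi + (eps - alpha) * sign(gi))) for xi, gi in zip(x1, g)]
```

The random first step is meant to move the input away from points where the loss surface is sharply curved, so the gradient taken afterwards is more informative than a single FGSM step from x itself.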