abhioncbr / Docker Airflow


docker-airflow

This repository builds a Docker container image for Apache Airflow (incubating).

- To learn more about Airflow, see the curated list of resources.

- Similarly, for Docker, see the curated list of resources.

Images

| Image | Pulls | Tags |
|---|---|---|
| abhioncbr/docker-airflow | | tags |

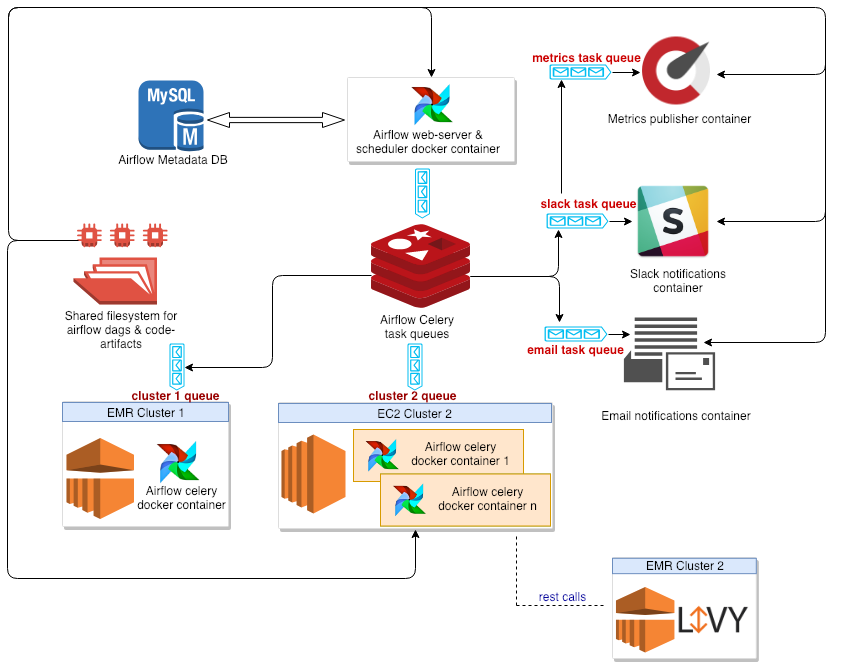

Airflow components stack

- Airflow version: the notation for representing the version is `XX.YY.ZZ`.

- Execution mode: `standalone` (simple container for exploration purposes, based on SQLite as the Airflow metadata DB and SequentialExecutor), `prod` (single node, LocalExecutor and MySQL as the Airflow metadata DB), or `cluster` (for distributed, long-running production use cases; the container runs as either `server` or `worker`).

- Backend database: standalone - SQLite; prod and cluster - MySQL.

- Scheduler: standalone - SequentialExecutor; prod - LocalExecutor; cluster - CeleryExecutor.

- Task queue: cluster - Redis.

- Log location: local file system (default) or AWS S3 (through `entrypoint-s3.sh`).

- User authentication: password based, with support for multiple users with `superuser` privilege.

- Code enhancement: password-based multi-user support with a super-user feature (a super-user can see all DAGs of all owners). Currently, Airflow is working on the password-based multi-user feature.

- Other features: support for Google Cloud Platform packages in the container.
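The mapping from execution mode to components can be summarised in a small lookup. This is only an illustrative sketch: the function name `airflow_stack` and the `none` marker are hypothetical and not part of the image or its entrypoint.

```shell
# Print "<metadata-db> <executor> <task-queue>" for an execution mode,
# following the component list above. "none" marks an unused task queue.
# airflow_stack is a hypothetical helper, not something the image ships.
airflow_stack() {
  case "$1" in
    standalone) echo "sqlite SequentialExecutor none" ;;
    prod)       echo "mysql LocalExecutor none" ;;
    cluster)    echo "mysql CeleryExecutor redis" ;;
    *)          echo "unknown mode: $1" >&2; return 1 ;;
  esac
}

airflow_stack cluster
```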

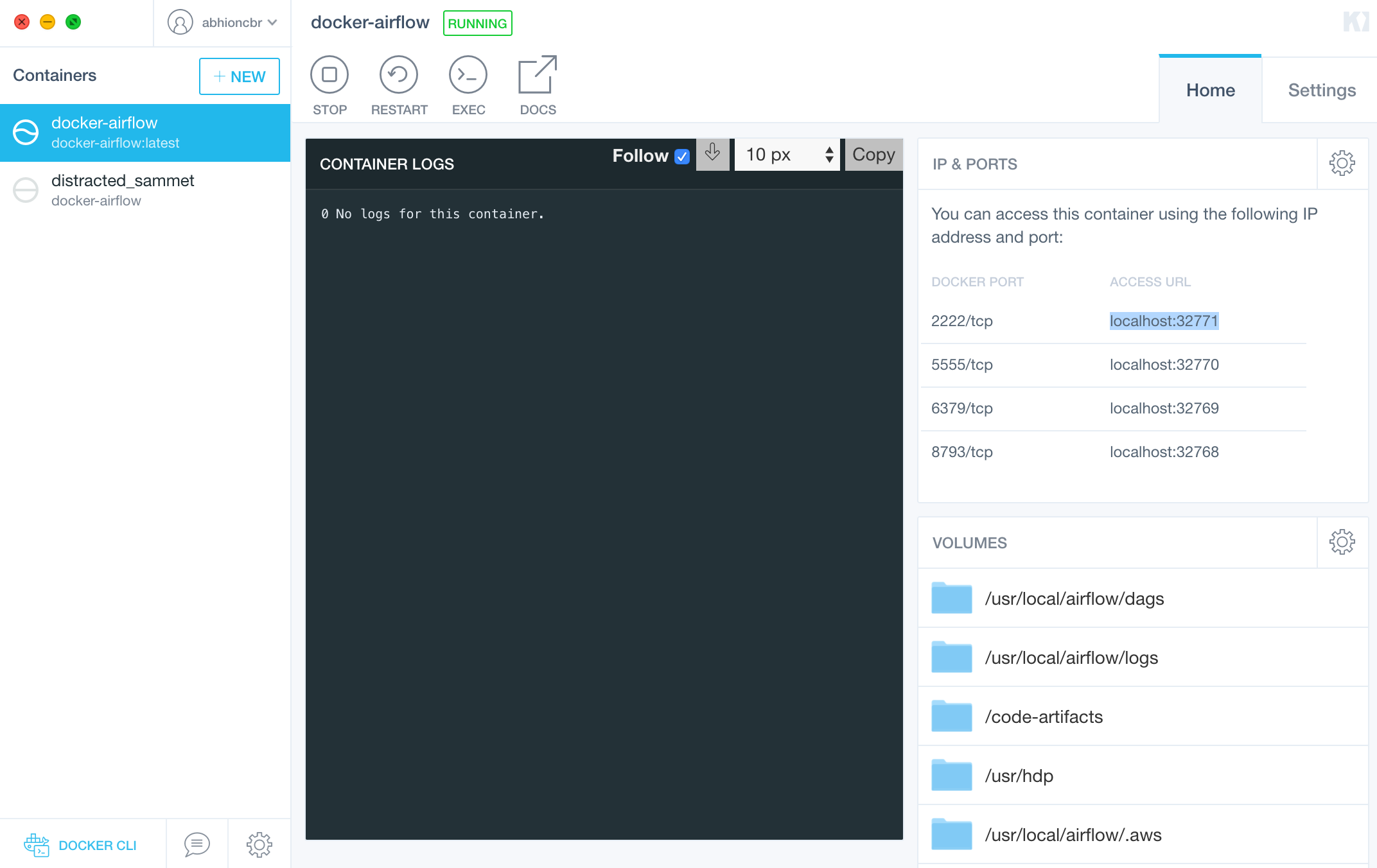

Airflow ports

- airflow portal port: 2222

- airflow celery flower: 5555

- redis port: 6379

- log files exchange port: 8793

Airflow services information

- In the server container: Redis, the Airflow webserver and the scheduler are running.

- In the worker container: the Airflow worker and the Celery Flower UI service are running.
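Combining the port list with the services per container gives the set of ports each container type needs to publish. The helper below is a minimal sketch under that assumption; `publish_flags` is a hypothetical name, and the grouping of ports by container type is inferred from where each service runs.

```shell
# Build the "-p" flags for docker run from the ports listed above.
# Assumption: server exposes the portal and Redis, worker exposes
# Flower and the log exchange port. publish_flags is a hypothetical helper.
publish_flags() {
  case "$1" in
    server) ports="2222 6379" ;;   # Airflow portal, Redis
    worker) ports="5555 8793" ;;   # Celery Flower, log files exchange
    *) echo "unknown container type: $1" >&2; return 1 ;;
  esac
  flags=""
  for p in $ports; do
    flags="$flags -p $p:$p"
  done
  # Drop the leading space left by the loop.
  echo "${flags# }"
}

publish_flags server
publish_flags worker
```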

How to build images

- The DockerFile uses `airflow-version` as a `build-arg`.

- Build the image yourself if you want to do some customization:

      docker build -t abhioncbr/docker-airflow:$IMAGE_VERSION \
        --build-arg AIRFLOW_VERSION=$AIRFLOW_VERSION \
        --build-arg AIRFLOW_PATCH_VERSION=$AIRFLOW_PATCH_VERSION \
        -f ~/docker-airflow/docker-files/DockerFile .

- The `IMAGE_VERSION` value should be the Airflow version, for example 1.10.3 or 1.10.2.

- The `AIRFLOW_PATCH_VERSION` value should be the major release version of Airflow, without the final patch number; for example, for 1.10.2 it should be 1.10.
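Since `AIRFLOW_PATCH_VERSION` is the Airflow version without its last component, it can be derived instead of typed by hand. A minimal sketch, assuming the version always follows the `XX.YY.ZZ` notation; `patch_version` is a hypothetical helper name.

```shell
# Derive the build arguments from a single Airflow version string.
# Assumption: the version follows the XX.YY.ZZ notation used by this image.
patch_version() {
  # Strip the last dot-separated component, e.g. 1.10.2 becomes 1.10.
  echo "${1%.*}"
}

AIRFLOW_VERSION="1.10.2"
IMAGE_VERSION="$AIRFLOW_VERSION"
AIRFLOW_PATCH_VERSION="$(patch_version "$AIRFLOW_VERSION")"

echo "IMAGE_VERSION=$IMAGE_VERSION"
echo "AIRFLOW_PATCH_VERSION=$AIRFLOW_PATCH_VERSION"
```

With these three variables set in the shell, the `docker build` command above can be run unchanged.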

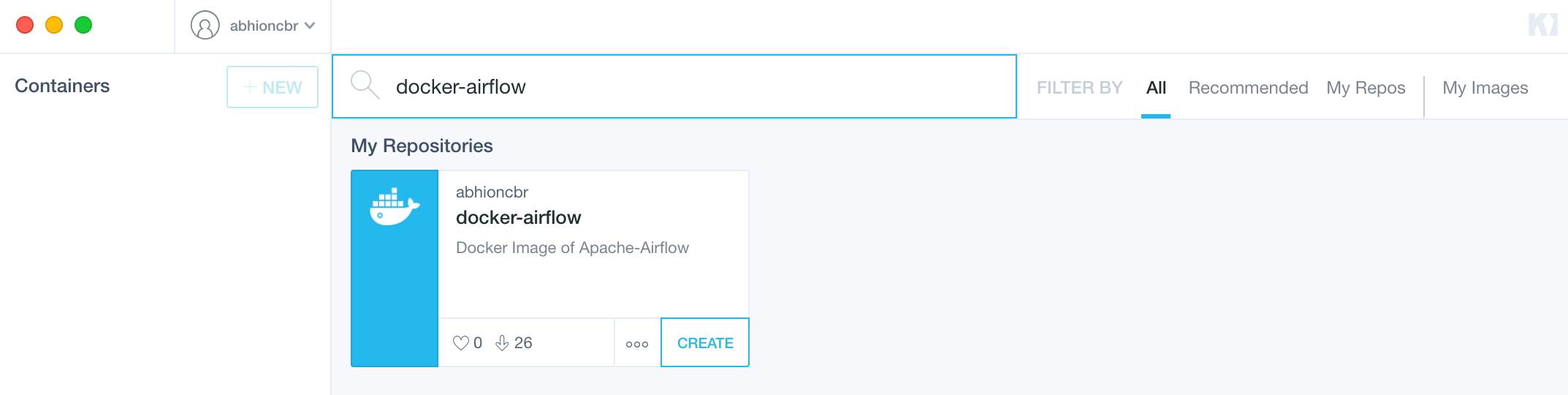

How to run using Kitmatic

- Simplest way for exploration purpose, using Kitematic(Run containers through a simple, yet powerful graphical user interface.)

-

Search abhioncbr/docker-airflow Image on docker-hub

-

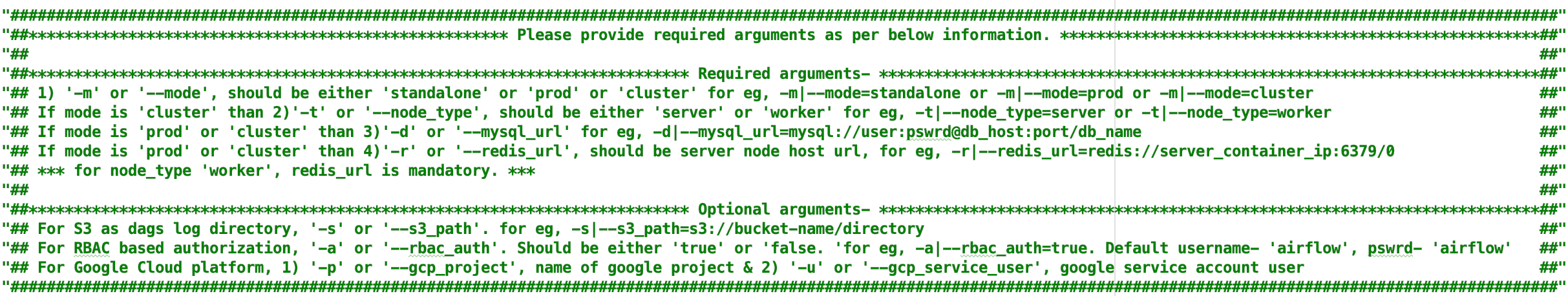

How to run

- General commands

  - Starting the Airflow image as an `airflow-standalone` container in standalone mode:

        docker run --net=host -p 2222:2222 --name=airflow-standalone abhioncbr/airflow-XX.YY.ZZ -m=standalone &

  - Starting the Airflow image as an `airflow-server` container in cluster mode:

        docker run --net=host -p 2222:2222 -p 6379:6379 --name=airflow-server \
          abhioncbr/airflow-XX.YY.ZZ -m=cluster -t=server -d=mysql://user:<password>@<mysql-host>:3306/db-name &

  - Starting the Airflow image as an `airflow-worker` container in cluster mode:

        docker run --net=host -p 5555:5555 -p 8793:8793 --name=airflow-worker \
          abhioncbr/airflow-XX.YY.ZZ -m=cluster -t=worker -d=mysql://user:<password>@<mysql-host>:3306/db-name -r=redis://<airflow-server-host>:6379/0 &

- On macOS, using Docker for Mac

  - Standalone mode: start the Airflow image in standalone mode and mount the dags, code-artifacts and logs folders from the host machine:

        docker run -p 2222:2222 --name=airflow-standalone \
          -v ~/airflow-data/code-artifacts:/code-artifacts \
          -v ~/airflow-data/logs:/usr/local/airflow/logs \
          -v ~/airflow-data/dags:/usr/local/airflow/dags \
          abhioncbr/airflow-XX.YY.ZZ -m=standalone &

  - Cluster mode

    - Start the Airflow image as a server container and mount the dags, code-artifacts and logs folders from the host machine:

          docker run -p 2222:2222 -p 6379:6379 --name=airflow-server \
            -v ~/airflow-data/code-artifacts:/code-artifacts \
            -v ~/airflow-data/logs:/usr/local/airflow/logs \
            -v ~/airflow-data/dags:/usr/local/airflow/dags \
            abhioncbr/airflow-XX.YY.ZZ \
            -m=cluster -t=server -d=mysql://user:<password>@<mysql-host>:3306/<airflow-db-name> &

    - Start the Airflow image as a worker container and mount the dags, code-artifacts and logs folders from the host machine:

          docker run -p 5555:5555 -p 8793:8793 --name=airflow-worker \
            -v ~/airflow-data/code-artifacts:/code-artifacts \
            -v ~/airflow-data/logs:/usr/local/airflow/logs \
            -v ~/airflow-data/dags:/usr/local/airflow/dags \
            abhioncbr/airflow-XX.YY.ZZ \
            -m=cluster -t=worker -d=mysql://user:<password>@<mysql-host>:3306/<airflow-db-name> -r=redis://host.docker.internal:6379/0 &

Distributed execution of airflow

- As mentioned above, the Airflow Docker image can be used to run a fully distributed setup:

  - a single docker-airflow container in `server` mode, serving the Airflow UI, Redis for Celery tasks, and the scheduler.

  - multiple docker-airflow containers in `worker` mode, executing tasks with the Celery executor.

  - a centralised Airflow metadata database.

- The image below depicts the docker-airflow distributed platform: