gentaiscool / End2end Asr Pytorch

Licence: MIT

End-to-End Automatic Speech Recognition on PyTorch

End-to-End Speech Recognition on PyTorch

Transformer-based Speech Recognition Model

If you use any source code from this toolkit in your work, please cite the following papers.

- Winata, G. I., Madotto, A., Wu, C. S., & Fung, P. (2019). Code-Switched Language Models Using Neural Based Synthetic Data from Parallel Sentences. In Proceedings of the 23rd Conference on Computational Natural Language Learning (CoNLL) (pp. 271-280).

- Winata, G. I., Cahyawijaya, S., Lin, Z., Liu, Z., & Fung, P. (2019). Lightweight and Efficient End-to-End Speech Recognition Using Low-Rank Transformer. arXiv preprint arXiv:1910.13923. (Accepted by ICASSP 2020)

- Zhou, S., Dong, L., Xu, S., & Xu, B. (2018). Syllable-Based Sequence-to-Sequence Speech Recognition with the Transformer in Mandarin Chinese. Proc. Interspeech 2018, 791-795.

Highlights

- supports batch parallelization across multiple GPUs

- supports multiple dataset training and evaluation

Requirements

- Python 3.5 or later

- Install PyTorch 1.4 (https://pytorch.org/)

- Install torchaudio (https://github.com/pytorch/audio)

- Run the setup script:

❱❱❱ bash requirement.sh

Results

AiShell-1

| Decoding strategy | CER |
|---|---|
| Greedy | 14.5% |
| Beam-search (beam width=8) | 13.5% |
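
For readers unfamiliar with the metric, character error rate (CER) is conventionally the character-level edit distance between the hypothesis and the reference, divided by the number of reference characters. The sketch below illustrates that definition only. It is not taken from this toolkit's scoring code, the function names `edit_distance` and `cer` are hypothetical, and it assumes no text normalization is applied before scoring.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (insert, delete, substitute)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion of a reference character
                curr[j - 1] + 1,     # insertion of a hypothesis character
                prev[j - 1] + cost,  # substitution, or match when cost is 0
            ))
        prev = curr
    return prev[-1]


def cer(references, hypotheses):
    """Corpus-level CER: total character edits / total reference characters."""
    total_edits = sum(edit_distance(r, h) for r, h in zip(references, hypotheses))
    total_chars = sum(len(r) for r in references)
    return total_edits / total_chars
```

For example, `cer(["abcd"], ["abxd"])` returns `0.25`: one substitution over four reference characters.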

Data

AiShell-1 (Chinese)

To preprocess the data, first download it from https://www.openslr.org/33/, then run the script below. I will add a script to automate the download.

❱❱❱ python data/aishell.py

Librispeech (English)

To download the data automatically, run:

❱❱❱ python data/librispeech.py

Training

usage: train.py [-h] [--train-manifest-list] [--valid-manifest-list] [--test-manifest-list] [--cuda] [--verbose] [--batch-size] [--labels-path] [--lr] [--name] [--save-folder] [--save-every] [--feat_extractor] [--emb_trg_sharing] [--shuffle] [--sample_rate] [--label-smoothing] [--window-size] [--window-stride] [--window] [--epochs] [--src-max-len] [--tgt-max-len] [--warmup] [--momentum] [--lr-anneal] [--num-layers] [--num-heads] [--dim-model] [--dim-key] [--dim-value] [--dim-input] [--dim-inner] [--dim-emb]

Parameters

- feat_extractor: "emb_cnn" or "vgg_cnn" as the feature extractor, or set "" for none

  - emb_cnn: add 4-layer 2D CNN

  - vgg_cnn: add 6-layer 2D CNN

- cuda: train on GPU

- shuffle: randomly shuffle every batch

Example

❱❱❱ python train.py --train-manifest-list data/manifests/aishell_train_manifest.csv --valid-manifest-list data/manifests/aishell_dev_manifest.csv --test-manifest-list data/manifests/aishell_test_manifest.csv --cuda --batch-size 12 --labels-path data/labels/aishell_labels.json --lr 1e-4 --name aishell_drop0.1_cnn_batch12_4_vgg_layer4 --save-folder save/ --save-every 5 --feat_extractor vgg_cnn --dropout 0.1 --num-layers 4 --num-heads 8 --dim-model 512 --dim-key 64 --dim-value 64 --dim-input 161 --dim-inner 2048 --dim-emb 512 --shuffle --min-lr 1e-6 --k-lr 1

Use python train.py --help for more parameters and options.

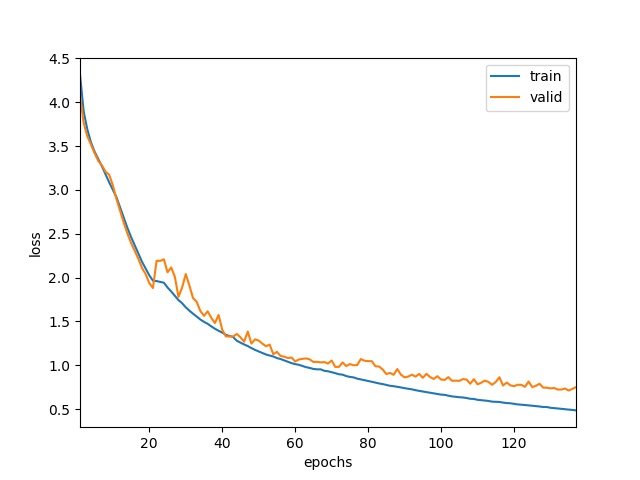

Results

AiShell-1 Loss Curve

Multi-GPU Training

usage: train.py [--parallel] [--device-ids]

Parameters

- parallel: split each batch across GPUs (the batch size must be divisible by the number of GPUs)

- device-ids: GPU ids

Example

❱❱❱ CUDA_VISIBLE_DEVICES=0,1 python train.py --train-manifest-list data/manifests/aishell_train_manifest.csv --valid-manifest-list data/manifests/aishell_dev_manifest.csv --test-manifest-list data/manifests/aishell_test_manifest.csv --cuda --batch-size 12 --labels-path data/labels/aishell_labels.json --lr 1e-4 --name aishell_drop0.1_cnn_batch12_4_vgg_layer4 --save-folder save/ --save-every 5 --feat_extractor vgg_cnn --dropout 0.1 --num-layers 4 --num-heads 8 --dim-model 512 --dim-key 64 --dim-value 64 --dim-input 161 --dim-inner 2048 --dim-emb 512 --shuffle --min-lr 1e-6 --k-lr 1 --parallel --device-ids 0 1
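
The divisibility requirement on `--parallel` can be checked before launching a long run. The helper below is a minimal sketch, not part of the toolkit. `per_gpu_batch_size` is a hypothetical name, and the sketch assumes the batch is split evenly, one equal share per entry in `--device-ids`.

```python
def per_gpu_batch_size(batch_size, device_ids):
    """Return the share of a batch each GPU receives, or raise if it is uneven.

    Assumption: the batch is divided equally among the listed device ids.
    """
    num_gpus = len(device_ids)
    if num_gpus == 0:
        raise ValueError("at least one device id is required")
    if batch_size % num_gpus != 0:
        raise ValueError(
            f"batch size {batch_size} is not divisible by {num_gpus} GPUs"
        )
    return batch_size // num_gpus
```

With the values from the example command, `per_gpu_batch_size(12, [0, 1])` returns `6`.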

Test

usage: test.py [-h] [--test-manifest-list] [--cuda] [--verbose] [--continue_from]

Parameters

- cuda: test on GPU

- continue_from: path to the trained model

Example

❱❱❱ python test.py --test-manifest-list libri_test_clean_manifest.csv --cuda --continue_from save/model

Use python test.py --help for more parameters and options.

Custom Dataset

Manifest file

To use your own dataset, you must create a CSV manifest file using the following format:

/path/to/audio.wav,/path/to/text.txt

/path/to/audio2.wav,/path/to/text2.txt

...

Each line contains the path to the audio file and transcript file separated by a comma.
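
A manifest in this format can be generated with a few lines of Python. The sketch below is not part of the toolkit. `write_manifest` is a hypothetical helper, and it assumes each `.wav` file has a transcript with the same file stem and a `.txt` extension. Audio files without a matching transcript are skipped, and paths containing a comma are rejected because they would break the two-column layout.

```python
from pathlib import Path


def write_manifest(audio_dir, transcript_dir, manifest_path):
    """Write one "audio_path,transcript_path" line per matched file pair."""
    audio_dir = Path(audio_dir).resolve()
    transcript_dir = Path(transcript_dir).resolve()
    lines = []
    for wav in sorted(audio_dir.glob("*.wav")):
        txt = transcript_dir / (wav.stem + ".txt")
        if not txt.is_file():
            continue  # no transcript for this audio file, so skip it
        if "," in str(wav) or "," in str(txt):
            raise ValueError(f"path contains a comma: {wav} / {txt}")
        lines.append(f"{wav},{txt}")
    # One pair per line, newline-terminated
    Path(manifest_path).write_text(
        "".join(line + "\n" for line in lines), encoding="utf-8"
    )
    return len(lines)
```

The return value is the number of pairs written, which is a quick sanity check against the expected corpus size.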

Label file

You need to specify all characters in the corpus by using the following JSON format:

[

"_",

"'",

"A",

...,

"Z",

" "

]
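
For a large corpus, the character inventory can be collected from the transcript files rather than typed by hand. The sketch below is not part of the toolkit, and `build_labels` and `write_labels` are hypothetical helpers. It assumes that `"_"` is a reserved symbol that should come first, as in the example above, and that the order of the remaining characters does not matter. Adjust both if the model expects a specific layout.

```python
import json
from pathlib import Path


def build_labels(transcript_paths, special=("_",)):
    """Collect every distinct character found in the given transcript files."""
    chars = set()
    for path in transcript_paths:
        text = Path(path).read_text(encoding="utf-8")
        chars.update("".join(text.splitlines()))  # drop line breaks
    # Reserved symbols first (an assumption), then the rest in sorted order
    return list(special) + sorted(chars - set(special))


def write_labels(labels, labels_path):
    """Save the label list as a JSON array, keeping non-ASCII characters readable."""
    with open(labels_path, "w", encoding="utf-8") as f:
        json.dump(labels, f, ensure_ascii=False)
```

The resulting file can then be passed to training through `--labels-path`.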

Bug Report

Feel free to create an issue.
