yihui-he / Epipolar Transformers

Licence: mit

Epipolar Transformers (CVPR 2020)

Epipolar Transformers

Yihui He, Rui Yan, Katerina Fragkiadaki, Shoou-I Yu (Carnegie Mellon University, Facebook Reality Labs)

CVPR 2020, CVPR workshop Best Paper Award

Oral presentation and human pose demo videos (playlist)

Models

We also provide 2D to 3D lifting network implementations for these two papers:

- 3D Hand Shape and Pose from Images in the Wild, CVPR 2019
  - configs/lifting/img_lifting_rot_h36m.yaml (Human 3.6M)
  - configs/lifting/img_lifting_rot.yaml (RHD)
- Learning to Estimate 3D Hand Pose from Single RGB Images, ICCV 2017
  - configs/lifting/lifting_direct_h36m.yaml (Human 3.6M)
  - configs/lifting/lifting_direct.yaml (RHD)

Setup

Requirements

Python 3 and PyTorch 1.2 or later, but earlier than 1.4

pip install -r requirements.txt

conda install pytorch cudatoolkit=10.0 -c pytorch
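
The version constraint above can be checked before running any experiments. The following is a minimal sketch, assuming the constraint means 1.2 <= version < 1.4. The helper name `is_supported_torch` is hypothetical and is not part of the repository.

```python
import re


def is_supported_torch(version: str) -> bool:
    """Return True if `version` satisfies 1.2 <= version < 1.4.

    Hypothetical helper, not part of the repository. Only the leading
    major.minor numbers are compared, so local suffixes such as
    "+cu100" are ignored.
    """
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    major_minor = (int(match.group(1)), int(match.group(2)))
    return (1, 2) <= major_minor < (1, 4)
```

With PyTorch installed, the check would be called as `is_supported_torch(torch.__version__)`.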

Pretrained weights download

mkdir outs

cd datasets/

bash get_pretrained_models.sh

Please follow the instructions in datasets/README.md to prepare the dataset.

Training

python main.py --cfg path/to/config

tensorboard --logdir outs/

Testing

Testing with latest checkpoints

python main.py --cfg configs/xxx.yaml DOTRAIN False

Testing with weights

python main.py --cfg configs/xxx.yaml DOTRAIN False WEIGHTS xxx.pth
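
Both test commands pass trailing KEY VALUE pairs after `--cfg` to override entries of the YAML config (here `DOTRAIN` and `WEIGHTS`; the visualization commands use dotted keys such as `EPIPOLAR.VIS`). The sketch below illustrates how such overrides could be merged into a nested config. It is plain Python written for illustration, assuming dotted keys address nested sections; it is not the repository's actual parsing code, and the function name `apply_overrides` and the sample defaults are hypothetical.

```python
import ast
from copy import deepcopy


def apply_overrides(cfg: dict, opts: list) -> dict:
    """Return a copy of `cfg` with KEY VALUE pairs from `opts` applied.

    Hypothetical helper for illustration only. Dotted keys such as
    "SOLVER.IMS_PER_BATCH" address nested dictionaries.
    """
    if len(opts) % 2 != 0:
        raise ValueError("overrides must come in KEY VALUE pairs")
    result = deepcopy(cfg)
    for key, raw in zip(opts[0::2], opts[1::2]):
        *parents, leaf = key.split(".")
        node = result
        for name in parents:
            node = node[name]  # KeyError if the section is unknown
        if leaf not in node:
            raise KeyError(f"unknown config key: {key}")
        try:
            value = ast.literal_eval(raw)  # "False" -> False, "1" -> 1
        except (ValueError, SyntaxError):
            value = raw  # keep plain strings such as "xxx.pth"
        node[leaf] = value
    return result


# Sample defaults (assumed values, not taken from the repository).
defaults = {
    "DOTRAIN": True,
    "WEIGHTS": "",
    "SOLVER": {"IMS_PER_BATCH": 8},
}
cfg = apply_overrides(
    defaults,
    ["DOTRAIN", "False", "WEIGHTS", "xxx.pth", "SOLVER.IMS_PER_BATCH", "1"],
)
print(cfg)
```

Rejecting unknown keys, as this sketch does, makes a mistyped option fail loudly instead of being silently ignored.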

Visualization

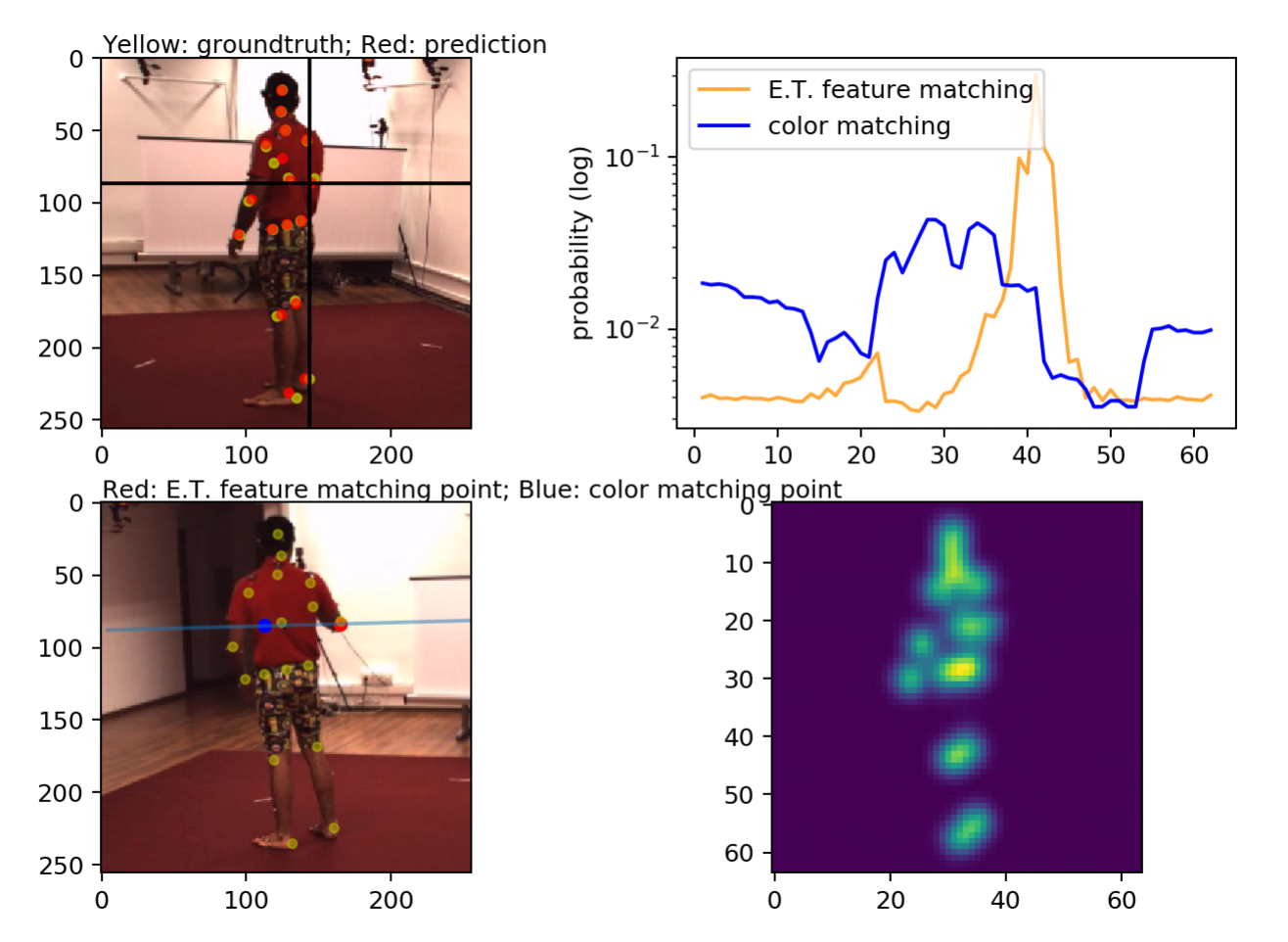

Epipolar Transformers Visualization

- Download the output pkls for non-augmented models and extract them under outs/
- Make sure outs/epipolar/keypoint_h36m_fixed/visualizations/h36m/output_1.pkl exists.
- Use scripts/vis_hm36_score.ipynb
- To select a point, click on the reference view (upper left). The source view, along with the corresponding epipolar line, and the peaks for different feature matchings are shown at the bottom left.
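
For readers unfamiliar with the term, the epipolar line drawn in the source view is the set of pixels where the clicked reference point can appear, given the relative geometry of the two cameras. The following is a minimal sketch of that relation in plain Python, assuming a known 3x3 fundamental matrix F between the two views. The function names and the example matrix (a pure horizontal camera shift with identity intrinsics) are illustrative assumptions and are not taken from the repository or from the dataset calibration.

```python
def epipolar_line(F, point):
    """Return (a, b, c) with a*u + b*v + c = 0 in the source view.

    F is a 3x3 fundamental matrix given as nested lists, mapping a
    reference-view pixel to its epipolar line in the source view
    (l = F x). `point` is the (u, v) pixel in the reference view.
    """
    x = (point[0], point[1], 1.0)  # homogeneous pixel coordinates
    return tuple(sum(F[r][c] * x[c] for c in range(3)) for r in range(3))


def on_line(line, point, tol=1e-9):
    """Check whether a source-view pixel lies on the line."""
    a, b, c = line
    return abs(a * point[0] + b * point[1] + c) <= tol


# Example: cameras that differ only by a shift along the x axis
# (rectified stereo, identity intrinsics). F is then the
# cross-product matrix of the translation (1, 0, 0).
F = [
    [0.0, 0.0, 0.0],
    [0.0, 0.0, -1.0],
    [0.0, 1.0, 0.0],
]
line = epipolar_line(F, (120.0, 80.0))
print(line)
```

In this special case the line is horizontal (v = 80), so every candidate match in the source view lies on the same image row as the clicked point.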

Human 3.6M input visualization

python main.py --cfg configs/epipolar/keypoint_h36m.yaml DOTRAIN False DOTEST False EPIPOLAR.VIS True VIS.H36M True SOLVER.IMS_PER_BATCH 1

python main.py --cfg configs/epipolar/keypoint_h36m.yaml DOTRAIN False DOTEST False VIS.MULTIVIEWH36M True EPIPOLAR.VIS True SOLVER.IMS_PER_BATCH 1

Human 3.6M prediction visualization

# generate images

python main.py --cfg configs/epipolar/keypoint_h36m_zresidual_fixed.yaml DOTRAIN False DOTEST True VIS.VIDEO True DATASETS.H36M.TEST_SAMPLE 2

# generate images

python main.py --cfg configs/benchmark/keypoint_h36m.yaml DOTRAIN False DOTEST True VIS.VIDEO True DATASETS.H36M.TEST_SAMPLE 2

# use https://github.com/yihui-he/multiview-human-pose-estimation-pytorch to generate images for ICCV 19

python run/pose2d/valid.py --cfg experiments-local/mixed/resnet50/256_fusion.yaml # set test batch size to 1 and PRINT_FREQ to 2

# generate video

python scripts/video.py --src outs/epipolar/keypoint_h36m_fixed/video/multiview_h36m_val/

Citing Epipolar Transformers

If you find that Epipolar Transformers helps your research, please cite the paper:

@inproceedings{epipolartransformers,

title={Epipolar Transformers},

author={He, Yihui and Yan, Rui and Fragkiadaki, Katerina and Yu, Shoou-I},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={7779--7788},

year={2020}

}

FAQ

Please create a new issue.
