Generalizable Reconstruction (GenRe) and ShapeHD

Papers

This is a repo covering the following three papers. If you find the code useful, please cite the paper(s).

-

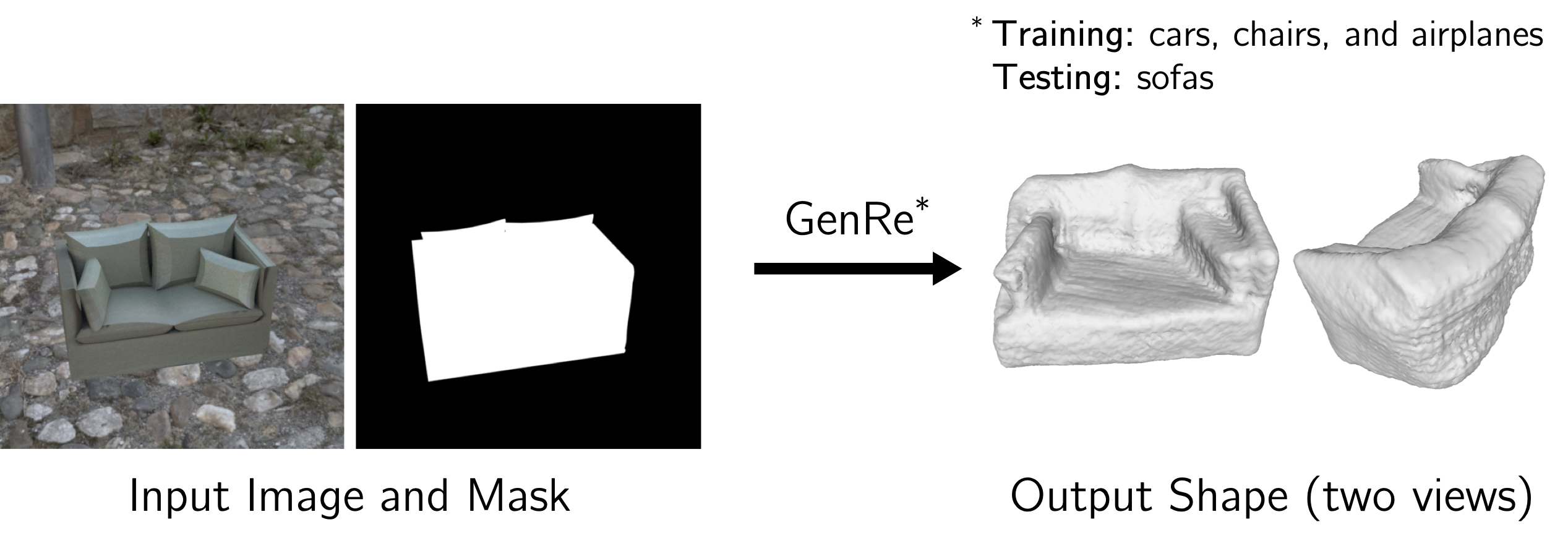

Generalizable Reconstruction (GenRe)

Learning to Reconstruct Shapes from Unseen Classes

Xiuming Zhang*, Zhoutong Zhang*, Chengkai Zhang, Joshua B. Tenenbaum, William T. Freeman, and Jiajun Wu

NeurIPS 2018 (Oral)

Paper | BibTeX | Project

\* indicates equal contribution.

- ShapeHD

Learning Shape Priors for Single-View 3D Completion and Reconstruction

Jiajun Wu*, Chengkai Zhang*, Xiuming Zhang, Zhoutong Zhang, William T. Freeman, and Joshua B. Tenenbaum

ECCV 2018

Paper | BibTeX | Project

- MarrNet

MarrNet: 3D Shape Reconstruction via 2.5D Sketches

Jiajun Wu*, Yifan Wang*, Tianfan Xue, Xingyuan Sun, William T. Freeman, and Joshua B. Tenenbaum

NeurIPS 2017

Paper | BibTeX | Project

Environment Setup

All code was built and tested on Ubuntu 16.04.5 LTS with Python 3.6, PyTorch 0.4.1, and CUDA 9.0. Versions for other packages can be found in environment.yml.

- Clone this repo with

      # cd to the directory you want to work in
      git clone https://github.com/xiumingzhang/GenRe-ShapeHD.git
      cd GenRe-ShapeHD

  The code below assumes you are at the repo root.

- Create a conda environment named shaperecon with the necessary dependencies specified in environment.yml. To make sure trimesh is installed correctly, run install_trimesh.sh after setting up the conda environment.

      conda env create -f environment.yml
      ./install_trimesh.sh

  The TensorFlow dependency in environment.yml is for using TensorBoard only. Remove it if you do not want to monitor your training with TensorBoard.

- The instructions below assume you have activated this environment and built the CUDA extension with

      source activate shaperecon
      ./build_toolbox.sh

Note that, due to the deprecation of cffi from PyTorch 1.0 onward, this only works with PyTorch 0.4.1.
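Because the build step depends on the PyTorch version, a quick guard before running ./build_toolbox.sh can avoid a confusing failure. The following is a minimal sketch, not part of this repo: the helper name `cffi_build_supported` is hypothetical, and it only inspects a version string, so in practice you would pass it `torch.__version__` from the active environment.

```python
def cffi_build_supported(torch_version: str) -> bool:
    """Return True if the given PyTorch version predates 1.0.

    Hypothetical helper (not part of this repo). The README reports the
    build as tested with PyTorch 0.4.1 only, so a True result means
    "not ruled out", not "guaranteed to work".
    """
    # Drop local build suffixes such as "+cu90" before parsing.
    core = torch_version.split("+")[0]
    major = int(core.split(".")[0])
    return major < 1


if __name__ == "__main__":
    # In the real environment you would pass torch.__version__ instead.
    for version in ("0.4.1", "1.0.0", "1.13.1+cu117"):
        status = "ok" if cffi_build_supported(version) else "unsupported"
        print(f"PyTorch {version}: {status}")
```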

Downloading Our Trained Models and Training Data

Models

To download our trained GenRe and ShapeHD models (1 GB in total), run

wget http://genre.csail.mit.edu/downloads/genre_shapehd_models.tar -P downloads/models/

tar -xvf downloads/models/genre_shapehd_models.tar -C downloads/models/

- GenRe: depth_pred_with_inpaint.pt and full_model.pt
- ShapeHD: marrnet1_with_minmax.pt and shapehd.pt
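After extraction, it can be worth confirming that all four checkpoints listed above are present. The sketch below is one way to do that. The function name `find_missing_checkpoints` is hypothetical, and because the directory layout inside the tarball is not described here, the search is recursive under downloads/models/.

```python
from pathlib import Path

# File names taken from the list above; the directory layout inside the
# tarball is an unknown, so the search below is recursive.
EXPECTED_CHECKPOINTS = {
    "GenRe": ["depth_pred_with_inpaint.pt", "full_model.pt"],
    "ShapeHD": ["marrnet1_with_minmax.pt", "shapehd.pt"],
}


def find_missing_checkpoints(root, expected=EXPECTED_CHECKPOINTS):
    """Return {model: [missing file names]} for files not found under root."""
    root = Path(root)
    # Collect the names of all .pt files anywhere below root.
    present = {p.name for p in root.rglob("*.pt")} if root.is_dir() else set()
    missing = {}
    for model, names in expected.items():
        absent = [name for name in names if name not in present]
        if absent:
            missing[model] = absent
    return missing


if __name__ == "__main__":
    missing = find_missing_checkpoints("downloads/models")
    if missing:
        for model, names in missing.items():
            print(f"{model}: missing {', '.join(names)}")
    else:
        print("All expected checkpoints found.")
```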

Data

This repo comes with a few Pix3D images and ShapeNet renderings, located in downloads/data/test, for testing purposes.

For training, we make available our RGB and 2.5D sketch renderings, paired with their corresponding 3D shapes, for ShapeNet cars, chairs, and airplanes, with each object captured in 20 random views. Note that this .tar is 143 GB.

wget http://genre.csail.mit.edu/downloads/shapenet_cars_chairs_planes_20views.tar -P downloads/data/

mkdir downloads/data/shapenet/

tar -xvf downloads/data/shapenet_cars_chairs_planes_20views.tar -C downloads/data/shapenet/
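Given the size of this download, checking free disk space first may save a failed transfer. The sketch below assumes that you keep the archive while extracting it and that the extracted data is roughly as large as the archive, hence the default of twice 143 GB. Both the factor of two and the helper names are assumptions made for illustration.

```python
import shutil

TAR_SIZE_GB = 143  # size of the training .tar stated above


def free_space_gb(path="."):
    """Free space, in GB (10**9 bytes), on the filesystem containing path."""
    return shutil.disk_usage(path).free / 1e9


def has_room(path=".", required_gb=2 * TAR_SIZE_GB):
    """True if the filesystem containing path has at least required_gb free.

    The default of 2x the archive size is an assumption: archive plus an
    extracted copy of similar size.
    """
    return free_space_gb(path) >= required_gb


if __name__ == "__main__":
    # "." stands in for downloads/data/, which must exist to be queried.
    print(f"Free space: {free_space_gb('.'):.1f} GB")
    print("Enough room" if has_room(".") else "Not enough room")
```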

New (Oct. 20, 2019)

For training, in addition to the renderings already included in the initial release, we now also release the Mitsuba scene .xml files used to produce these renderings. This download link is a .zip (394 MB) covering the three training classes: cars, chairs, and airplanes. Among other scene parameters, camera poses can now be retrieved from these .xml files, which we hope will be useful for tasks like camera/object pose estimation.
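As an illustration of retrieving a camera pose from a scene file, the sketch below reads a `lookat` element from a sensor's `toWorld` transform using the standard library. The sample XML is hypothetical and written for this example. The released files may encode the transform differently (for instance as a `matrix` element), in which case the lookup path would need to change.

```python
import xml.etree.ElementTree as ET

# Hypothetical scene snippet for illustration only; it is not copied from
# the released files, which may store the sensor transform differently.
SAMPLE_SCENE = """
<scene version="0.5.0">
  <sensor type="perspective">
    <transform name="toWorld">
      <lookat origin="1.0, 0.5, -2.0" target="0, 0, 0" up="0, 1, 0"/>
    </transform>
  </sensor>
</scene>
"""


def parse_vec3(text):
    """Parse 'x, y, z' (commas and/or spaces) into a tuple of three floats."""
    parts = text.replace(",", " ").split()
    if len(parts) != 3:
        raise ValueError(f"expected 3 components, got {len(parts)}: {text!r}")
    return tuple(float(p) for p in parts)


def read_lookat(xml_text):
    """Return origin/target/up of the first sensor's lookat, or None."""
    root = ET.fromstring(xml_text.strip())
    lookat = root.find("./sensor/transform/lookat")
    if lookat is None:
        return None
    return {key: parse_vec3(lookat.get(key)) for key in ("origin", "target", "up")}


if __name__ == "__main__":
    print(read_lookat(SAMPLE_SCENE))
```

To read from a file instead of a string, `ET.parse(path).getroot()` returns the same kind of root element.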

For testing, we release the data of the unseen categories shown in Table 1 of the paper. This download link is a .tar (44 GB) consisting of, for each of the unseen classes, the 500 random shapes we used for testing GenRe. Right now, nine classes are included, as we are tracking down the 10th.

Testing with Our Models

We provide .sh wrappers to perform testing for GenRe, ShapeHD, and MarrNet (without the reprojection consistency part).

GenRe

See scripts/test_genre.sh.

We updated our entire pipeline to support fully differentiable end-to-end finetuning. In our NeurIPS submission, the projection from depth images to spherical maps was not implemented in a differentiable way. As a result of both the pipeline and PyTorch version upgrades, the model performance is slightly different from what was reported in the original paper.

Below we tabulate the original vs. updated Chamfer distances (CD) across different Pix3D classes. The "Original" row is from Table 2 of the paper.

| | Chair | Bed | Bookcase | Desk | Sofa | Table | Wardrobe |
|---|---|---|---|---|---|---|---|
| Updated | .094 | .117 | .104 | .110 | .086 | .114 | .106 |
| Original | .093 | .113 | .101 | .109 | .083 | .116 | .109 |
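To quantify "slightly different", the short script below computes the per-class change and the unweighted mean of each row. The numbers are copied from the table. The unweighted mean across classes is a choice made for this illustration and ignores how many images each class contains.

```python
# Values copied from the table above (lower Chamfer distance is better).
CLASSES = ["Chair", "Bed", "Bookcase", "Desk", "Sofa", "Table", "Wardrobe"]
UPDATED = [0.094, 0.117, 0.104, 0.110, 0.086, 0.114, 0.106]
ORIGINAL = [0.093, 0.113, 0.101, 0.109, 0.083, 0.116, 0.109]

# Positive delta: the updated model has a higher (worse) CD on that class.
deltas = {c: round(u - o, 3) for c, u, o in zip(CLASSES, UPDATED, ORIGINAL)}
mean_updated = sum(UPDATED) / len(UPDATED)
mean_original = sum(ORIGINAL) / len(ORIGINAL)
largest = max(deltas, key=lambda c: abs(deltas[c]))

for name, delta in deltas.items():
    print(f"{name:<9} {delta:+.3f}")
print(f"mean updated   {mean_updated:.4f}")
print(f"mean original  {mean_original:.4f}")
print(f"largest change {largest} ({deltas[largest]:+.3f})")
```

The updated model is slightly worse on five classes and slightly better on Table and Wardrobe. The largest change is +.004 on Bed, and the unweighted mean moves from about .103 to about .104.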

ShapeHD

See scripts/test_shapehd.sh.

After ECCV, we upgraded our entire pipeline and re-trained ShapeHD with this new pipeline. The models released here are newly trained, producing quantitative results slightly better than what was reported in the ECCV paper. If you use the Pix3D repo to evaluate the model released here, you will get an average CD of 0.122 for the 1,552 untruncated, unoccluded chair images (whose in-plane rotation < 5°). The average CD on Pix3D chairs reported in the paper was 0.123.
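For readers unfamiliar with the metric, the sketch below computes one common symmetric form of the Chamfer distance between two point sets: the mean nearest-neighbor Euclidean distance in each direction, averaged over the two directions. It is an illustration only. The Pix3D evaluation code determines the exact variant behind the numbers above (point sampling, normalization, squared or unsquared distances), and `chamfer_distance` is a hypothetical name.

```python
import math


def _euclidean(p, q):
    """Euclidean distance between two points of equal dimension."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def _mean_nearest(src, dst):
    """Average distance from each point in src to its nearest point in dst."""
    return sum(min(_euclidean(p, q) for q in dst) for p in src) / len(src)


def chamfer_distance(points_a, points_b):
    """Symmetric Chamfer distance (one common variant, brute force O(n*m)).

    This averages the two directed mean nearest-neighbor distances; other
    definitions sum them or use squared distances instead.
    """
    if not points_a or not points_b:
        raise ValueError("both point sets must be non-empty")
    forward = _mean_nearest(points_a, points_b)
    backward = _mean_nearest(points_b, points_a)
    return 0.5 * (forward + backward)


if __name__ == "__main__":
    a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    b = [(0.0, 0.0, 0.0)]
    print(chamfer_distance(a, b))
```

The brute-force nearest-neighbor search is fine for small point sets. For dense samples, a k-d tree or a GPU implementation would be the usual choice.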

MarrNet w/o Reprojection Consistency

See scripts/test_marrnet.sh.

The architectures in this implementation of MarrNet are different from those presented in the original NeurIPS 2017 paper. For instance, the reprojection consistency is not implemented here. MarrNet-1, which predicts 2.5D sketches from RGB inputs, is now a U-ResNet rather than its original architecture. That said, the idea remains the same: predicting 2.5D sketches as an intermediate step toward the final 3D voxel predictions.

If you want to test with the original MarrNet, see the MarrNet repo for the pretrained models.

Training Your Own Models

This repo allows you to train your own models from scratch, possibly with data different from our training data provided above. You can monitor your training with TensorBoard. For that, make sure to include --tensorboard while running train.py, and then run

python -m tensorboard.main --logdir="$logdir"/tensorboard

to visualize your losses.

GenRe

Follow these steps to train the GenRe model.

- Train the depth estimator with scripts/train_marrnet1.sh
- Train the spherical inpainting network with scripts/train_inpaint.sh
- Train the full model with scripts/train_full_genre.sh

ShapeHD

Follow these steps to train the ShapeHD model.

- Train the 2.5D sketch estimator with scripts/train_marrnet1.sh
- Train the 2.5D-to-3D network with scripts/train_marrnet2.sh
- Train a 3D-GAN with scripts/train_wgangp.sh
- Finetune the 2.5D-to-3D network with perceptual losses provided by the discriminator of the 3D-GAN, using scripts/finetune_shapehd.sh

MarrNet w/o Reprojection Consistency

Follow these steps to train the MarrNet model, excluding the reprojection consistency.

- Train the 2.5D sketch estimator with scripts/train_marrnet1.sh
- Train the 2.5D-to-3D network with scripts/train_marrnet2.sh
- Finetune the 2.5D-to-3D network with scripts/finetune_marrnet.sh
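The three training recipes above share one pattern: run the stage scripts in a fixed order and stop if a stage fails. The sketch below captures that pattern. It is a hypothetical driver, not part of this repo, and it makes two assumptions: each script can be launched with `bash`, and each script locates the checkpoints of earlier stages through its own settings. Whether the scripts need arguments (for example a GPU id) should be checked against the scripts themselves; `extra_args` is only a placeholder for that.

```python
import subprocess

# Stage order copied from the three lists above.
PIPELINES = {
    "genre": [
        "scripts/train_marrnet1.sh",
        "scripts/train_inpaint.sh",
        "scripts/train_full_genre.sh",
    ],
    "shapehd": [
        "scripts/train_marrnet1.sh",
        "scripts/train_marrnet2.sh",
        "scripts/train_wgangp.sh",
        "scripts/finetune_shapehd.sh",
    ],
    "marrnet": [
        "scripts/train_marrnet1.sh",
        "scripts/train_marrnet2.sh",
        "scripts/finetune_marrnet.sh",
    ],
}


def run_pipeline(name, extra_args=(), runner=subprocess.run):
    """Run each stage of a pipeline in order and return the completed stages.

    extra_args is appended to every script call (an assumption; see above).
    runner is injectable so the order can be inspected without training.
    """
    completed = []
    for script in PIPELINES[name]:
        # With subprocess.run, check=True raises CalledProcessError on a
        # non-zero exit code, so later stages never run after a failure.
        runner(["bash", script, *extra_args], check=True)
        completed.append(script)
    return completed


if __name__ == "__main__":
    # Dry run: print the commands instead of launching any training.
    def echo(cmd, check):
        print(" ".join(cmd))

    run_pipeline("genre", runner=echo)
```

Note that scripts/train_marrnet1.sh is the first stage of all three pipelines, so a 2.5D sketch estimator trained once could in principle be shared, provided the later scripts are pointed at the same checkpoint.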

Questions

Please open an issue if you encounter any problem. You will likely get a quicker response than via email.

Changelog

- Dec. 28, 2018: Initial release

- Oct. 20, 2019: Added testing data of the unseen categories, and all .xml scene files used to render training data