Graph Transformer Architecture

Source code for the paper "A Generalization of Transformer Networks to Graphs" by Vijay Prakash Dwivedi and Xavier Bresson, at AAAI'21 Workshop on Deep Learning on Graphs: Methods and Applications (DLG-AAAI'21).

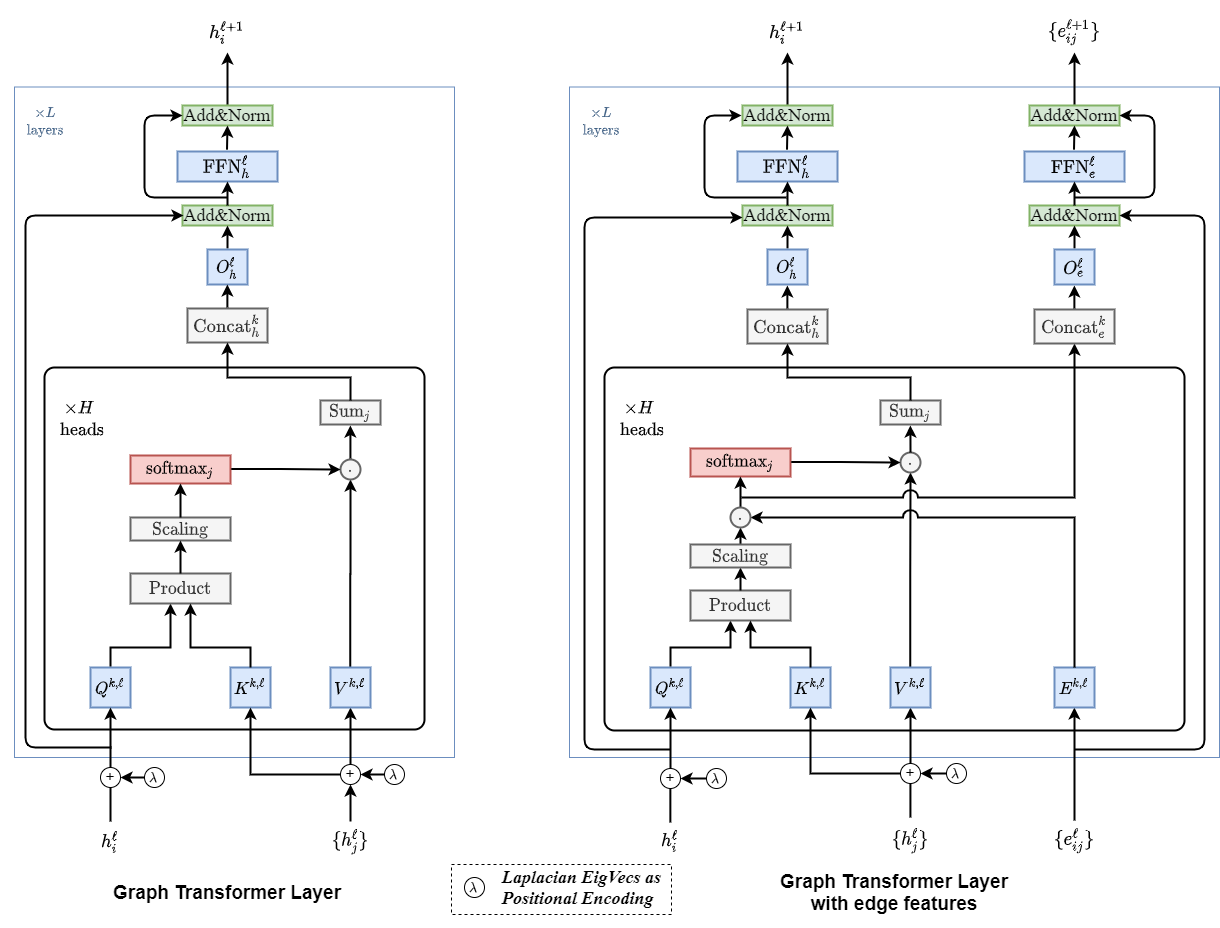

We propose Graph Transformer, a generalization of the Transformer neural network architecture to arbitrary graphs.

Compared to the standard Transformer, the key features of the proposed architecture are:

- The attention mechanism is a function of the neighborhood connectivity of each node: a node attends over its neighbors in the graph rather than over all nodes.

- The position encoding is represented by Laplacian eigenvectors, which naturally generalize the sinusoidal positional encodings often used in NLP.

- The layer normalization is replaced by a batch normalization layer.

- The architecture is extended with edge representations, which can be critical for tasks with rich information on the edges or on pairwise interactions (such as bond types in molecules or relationship types in knowledge graphs).
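As a rough illustration of the Laplacian positional encoding, the NumPy sketch below computes the eigenvectors of the symmetric normalized Laplacian L = I - D^(-1/2) A D^(-1/2), keeps the k eigenvectors with the smallest non-trivial eigenvalues, and adds a linear projection of them to the node features. It is not the repository's implementation: the function names, the value of k, the projection `w_pe`, and the random sign flip (eigenvectors are only defined up to sign) are illustrative assumptions, and the graph is assumed to be undirected, connected, and given as a dense adjacency matrix.

```python
import numpy as np

def laplacian_positional_encoding(adj: np.ndarray, k: int) -> np.ndarray:
    """Return the k smallest non-trivial eigenvectors of the symmetric
    normalized Laplacian L = I - D^{-1/2} A D^{-1/2}, one row per node."""
    n = adj.shape[0]
    deg = adj.sum(axis=1)
    # Isolated nodes (degree 0) get a zero scaling factor instead of 1/0.
    d_inv_sqrt = np.where(deg > 0, 1.0 / np.sqrt(np.maximum(deg, 1e-12)), 0.0)
    lap = np.eye(n) - d_inv_sqrt[:, None] * adj * d_inv_sqrt[None, :]
    # eigh returns eigenvalues in ascending order for symmetric matrices.
    _, eigvecs = np.linalg.eigh(lap)
    # Skip the trivial eigenvector (eigenvalue 0), keep the next k.
    return eigvecs[:, 1 : k + 1]

def random_sign_flip(pe: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Randomly flip the sign of each eigenvector (assumed training-time augmentation)."""
    signs = rng.choice([-1.0, 1.0], size=pe.shape[1])
    return pe * signs

# Usage: a 6-node cycle graph, 2-dimensional encodings projected to d = 16.
n = 6
adj = np.zeros((n, n))
for i in range(n):
    adj[i, (i + 1) % n] = adj[(i + 1) % n, i] = 1.0
rng = np.random.default_rng(0)
pe = random_sign_flip(laplacian_positional_encoding(adj, k=2), rng)
w_pe = rng.normal(scale=0.1, size=(2, 16))  # hypothetical learned projection
x = rng.normal(size=(n, 16))                # hypothetical node embeddings
h0 = x + pe @ w_pe                          # input to the first layer
print(h0.shape)  # (6, 16)
```

Because the eigenvectors are computed once per graph, they can be precomputed during preprocessing; only the projection is learned.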

Figure: Block Diagram of Graph Transformer Architecture
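The layer in the block diagram can be approximated by the NumPy sketch below: multi-head attention in which each node attends only over its neighbors, followed by a residual connection and batch normalization, then a feed-forward network with a second residual connection and batch normalization. This is a simplified illustration, not the repository's DGL/PyTorch code. The parameter names (`Wq`, `Wk`, `Wv`, `Wo`, `W1`, `W2`) are hypothetical, bias terms and the learnable batch-norm scale and shift are omitted, edge features are not modeled, and the adjacency matrix is assumed to define the neighbor set directly (add self-loops to it if a node should also attend to itself).

```python
import numpy as np

def softmax_masked(scores: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Softmax over the last axis, restricted to entries where mask is True."""
    scores = np.where(mask, scores, -np.inf)
    row_max = np.max(scores, axis=-1, keepdims=True)
    row_max = np.where(np.isfinite(row_max), row_max, 0.0)  # rows with no neighbors
    exp = np.where(mask, np.exp(scores - row_max), 0.0)
    denom = exp.sum(axis=-1, keepdims=True)
    return np.divide(exp, denom, out=np.zeros_like(exp), where=denom > 0)

def batch_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Normalize each feature over the nodes in the batch (no learned scale/shift)."""
    mean = x.mean(axis=0, keepdims=True)
    var = x.var(axis=0, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def graph_transformer_layer(h, adj, params, num_heads):
    n, d = h.shape
    assert d % num_heads == 0, "hidden size must be divisible by the number of heads"
    d_k = d // num_heads

    def split(x):  # (n, d) -> (heads, n, d_k)
        return x.reshape(n, num_heads, d_k).transpose(1, 0, 2)

    q, k, v = (split(h @ params[w]) for w in ("Wq", "Wk", "Wv"))
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_k)    # (heads, n, n)
    attn = softmax_masked(scores, adj[None, :, :] > 0)  # neighbors only
    concat = (attn @ v).transpose(1, 0, 2).reshape(n, d)
    h1 = batch_norm(h + concat @ params["Wo"])          # residual + BN
    ffn = np.maximum(h1 @ params["W1"], 0.0) @ params["W2"]
    return batch_norm(h1 + ffn)                         # residual + BN

# Usage: a 5-node path graph, hidden size 8, 2 attention heads.
rng = np.random.default_rng(0)
n, d, num_heads = 5, 8, 2
adj = np.zeros((n, n))
for i in range(n - 1):
    adj[i, i + 1] = adj[i + 1, i] = 1.0
shapes = {"Wq": (d, d), "Wk": (d, d), "Wv": (d, d), "Wo": (d, d),
          "W1": (d, 2 * d), "W2": (2 * d, d)}
params = {name: rng.normal(scale=0.1, size=s) for name, s in shapes.items()}
h = rng.normal(size=(n, d))
out = graph_transformer_layer(h, adj, params, num_heads)
print(out.shape)  # (5, 8)
```

Masking the attention scores with the adjacency matrix is what makes the attention sparse and graph-aware; with a fully connected `adj`, the layer reduces to standard dense self-attention over all nodes.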

1. Repo installation

This project is based on the benchmarking-gnns repository.

Follow these instructions to install the benchmark and set up the environment.

2. Download datasets

Proceed as follows to download the datasets used to evaluate Graph Transformer.

3. Reproducibility

Use this page to run the code and reproduce the published results.

4. Reference

📃 Paper on arXiv

📝 Blog on Towards Data Science

🎥 Video on YouTube

@article{dwivedi2021generalization,
  title={A Generalization of Transformer Networks to Graphs},
  author={Dwivedi, Vijay Prakash and Bresson, Xavier},
  journal={AAAI Workshop on Deep Learning on Graphs: Methods and Applications},
  year={2021}
}