JihongJu / Keras Fcn

Licence: MIT

A playable implementation of Fully Convolutional Networks with Keras.

Stars: ✭ 206

Programming Languages

python

139335 projects - #7 most used programming language

Projects that are alternatives to or similar to Keras Fcn

Awesome Graph Neural Networks

Paper Lists for Graph Neural Networks

Stars: ✭ 1,322 (+541.75%)

Mutual labels: convolutional-networks

Glasses

High-quality Neural Networks for Computer Vision 😎

Stars: ✭ 138 (-33.01%)

Mutual labels: convolutional-networks

Tf Adnet Tracking

Deep Object Tracking Implementation in Tensorflow for 'Action-Decision Networks for Visual Tracking with Deep Reinforcement Learning(CVPR 2017)'

Stars: ✭ 162 (-21.36%)

Mutual labels: convolutional-networks

Lsuvinit

Reference caffe implementation of LSUV initialization

Stars: ✭ 99 (-51.94%)

Mutual labels: convolutional-networks

Cn24

Convolutional (Patch) Networks for Semantic Segmentation

Stars: ✭ 125 (-39.32%)

Mutual labels: convolutional-networks

Livianet

This repository contains the code of LiviaNET, a 3D fully convolutional neural network that was employed in our work: "3D fully convolutional networks for subcortical segmentation in MRI: A large-scale study"

Stars: ✭ 143 (-30.58%)

Mutual labels: convolutional-networks

Micronet

micronet, a model compression and deployment library. Compression: (1) quantization: quantization-aware training (QAT), high-bit (>2b) (DoReFa / Quantization and Training of Neural Networks for Efficient Integer-Arithmetic-Only Inference), low-bit (≤2b) / ternary and binary (TWN/BNN/XNOR-Net); post-training quantization (PTQ), 8-bit (TensorRT); (2) pruning: normal, regular, and group convolutional channel pruning; (3) group convolution structure; (4) batch-normalization fusion for quantization. Deployment: TensorRT, fp32/fp16/int8 (PTQ calibration), op-adapt (upsample), dynamic_shape

Stars: ✭ 1,232 (+498.06%)

Mutual labels: convolutional-networks

Affnet

Code and weights for local feature affine shape estimation paper "Repeatability Is Not Enough: Learning Discriminative Affine Regions via Discriminability"

Stars: ✭ 191 (-7.28%)

Mutual labels: convolutional-networks

Keras Yolo2

Easy training on custom datasets. Various backends (MobileNet and SqueezeNet) supported. A YOLO demo that detects raccoons and runs entirely in the browser is accessible at https://git.io/vF7vI (not on Windows).

Stars: ✭ 1,693 (+721.84%)

Mutual labels: convolutional-networks

Deblurgan

Image Deblurring using Generative Adversarial Networks

Stars: ✭ 2,033 (+886.89%)

Mutual labels: convolutional-networks

Keras transfer cifar10

Object classification with CIFAR-10 using transfer learning

Stars: ✭ 120 (-41.75%)

Mutual labels: convolutional-networks

Deep Learning Specialization Coursera

Deep Learning Specialization courses by Andrew Ng, deeplearning.ai

Stars: ✭ 146 (-29.13%)

Mutual labels: convolutional-networks

Pytorch Fcn

PyTorch Implementation of Fully Convolutional Networks. (Training code to reproduce the original result is available.)

Stars: ✭ 1,351 (+555.83%)

Mutual labels: convolutional-networks

Antialiased Cnns

Repository has been moved: https://github.com/adobe/antialiased-cnns

Stars: ✭ 169 (-17.96%)

Mutual labels: convolutional-networks

Stringlifier

Stringlifier is an open-source ML library for detecting random strings in raw text. It can be used for sanitising logs, detecting accidentally exposed credentials, and as a pre-processing step in unsupervised ML-based analysis of application text data.

Stars: ✭ 85 (-58.74%)

Mutual labels: convolutional-networks

Eye Tracker

Implemented and improved the iTracker model proposed in the paper "Eye Tracking for Everyone"

Stars: ✭ 140 (-32.04%)

Mutual labels: convolutional-networks

Squeeze and excitation

PyTorch Implementation of 2D and 3D 'squeeze and excitation' blocks for Fully Convolutional Neural Networks

Stars: ✭ 192 (-6.8%)

Mutual labels: convolutional-networks

Naszilla

Naszilla is a Python library for neural architecture search (NAS)

Stars: ✭ 181 (-12.14%)

Mutual labels: convolutional-networks

Spynet

Spatial Pyramid Network for Optical Flow

Stars: ✭ 158 (-23.3%)

Mutual labels: convolutional-networks

keras-fcn

A re-implementation of Fully Convolutional Networks with Keras

Installation

Dependencies

Install with pip

$ pip install git+https://github.com/JihongJu/keras-fcn.git

Build from source

$ git clone https://github.com/JihongJu/keras-fcn.git

$ cd keras-fcn

$ pip install --editable .

Usage

FCN with VGG16

from keras_fcn import FCN

fcn_vgg16 = FCN(input_shape=(500, 500, 3), classes=21,
                weights='imagenet', trainable_encoder=True)
fcn_vgg16.compile(optimizer='rmsprop',
                  loss='categorical_crossentropy',
                  metrics=['accuracy'])
fcn_vgg16.fit(X_train, y_train, batch_size=1)
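The call to `fit` above assumes `X_train` and `y_train` already exist. With a per-pixel softmax and `categorical_crossentropy`, the targets would typically be one-hot masks of shape `(N, H, W, classes)`. The following is a minimal NumPy sketch with random stand-in data; `one_hot_masks` is a hypothetical helper and not part of keras_fcn.

```python
import numpy as np

def one_hot_masks(masks, classes):
    """Convert integer masks (N, H, W) to one-hot targets (N, H, W, classes)."""
    masks = np.asarray(masks)
    if masks.min() < 0 or masks.max() >= classes:
        raise ValueError("labels must lie in [0, classes)")
    # Indexing the identity matrix with the mask appends a class axis.
    return np.eye(classes, dtype=np.float32)[masks]

rng = np.random.RandomState(0)
# Dummy stand-ins for real data: 2 RGB images and their integer label masks.
X_train = rng.rand(2, 500, 500, 3).astype(np.float32)
label_masks = rng.randint(0, 21, size=(2, 500, 500))
y_train = one_hot_masks(label_masks, classes=21)
print(X_train.shape, y_train.shape)
```

Real Pascal VOC masks also contain a 255 "void" label along object boundaries, which this sketch rejects; how to treat those pixels is left to the training code.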

FCN with VGG19

from keras_fcn import FCN_VGG19

fcn_vgg19 = FCN_VGG19(input_shape=(500, 500, 3), classes=21,
                      weights='imagenet', trainable_encoder=True)
fcn_vgg19.compile(optimizer='rmsprop',
                  loss='categorical_crossentropy',
                  metrics=['accuracy'])
fcn_vgg19.fit(X_train, y_train, batch_size=1)

Custom FCN (VGG16 as an example)

from keras.layers import Input, Activation
from keras.models import Model
from keras_fcn.encoders import Encoder
from keras_fcn.decoders import VGGUpsampler
from keras_fcn.blocks import (vgg_conv, vgg_fc)

inputs = Input(shape=(224, 224, 3))
blocks = [vgg_conv(64, 2, 'block1'),
          vgg_conv(128, 2, 'block2'),
          vgg_conv(256, 3, 'block3'),
          vgg_conv(512, 3, 'block4'),
          vgg_conv(512, 3, 'block5'),
          vgg_fc(4096)]
encoder = Encoder(inputs, blocks, weights='imagenet',
                  trainable=True)
feat_pyramid = encoder.outputs   # A feature pyramid with 5 scales
feat_pyramid = feat_pyramid[:3]  # Select only the top three scales of the pyramid
feat_pyramid.append(inputs)      # Add image to the bottom of the pyramid

outputs = VGGUpsampler(feat_pyramid, scales=[1, 1e-2, 1e-4], classes=21)
outputs = Activation('softmax')(outputs)

fcn_custom = Model(inputs=inputs, outputs=outputs)

Implementing a custom Fully Convolutional Network then amounts to defining a series of convolutional blocks stacked on top of one another.

Custom decoders

from keras_fcn.blocks import vgg_upsampling
from keras_fcn.decoders import Decoder

decode_blocks = [
    vgg_upsampling(classes=21, target_shape=(None, 14, 14, None), scale=1),
    vgg_upsampling(classes=21, target_shape=(None, 28, 28, None), scale=0.01),
    vgg_upsampling(classes=21, target_shape=(None, 224, 224, None), scale=0.0001)
]
outputs = Decoder(feat_pyramid[-1], decode_blocks)
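The `target_shape` values used here appear to follow from the five 2×2 pooling stages of VGG applied to a 224×224 input. The sketch below reproduces that arithmetic in plain Python. It assumes the pyramid is ordered deepest scale first and that the fully connected block keeps the 7×7 resolution; `vgg_pyramid_sizes` is a hypothetical helper, not part of keras_fcn.

```python
def vgg_pyramid_sizes(input_size, num_pools=5):
    """Spatial size after each 2x2, stride-2 pooling stage of a VGG encoder."""
    sizes = []
    size = input_size
    for _ in range(num_pools):
        size //= 2  # pooling without padding floors odd sizes
        sizes.append(size)
    return sizes

input_size = 224
sizes = vgg_pyramid_sizes(input_size)   # shallowest to deepest
top_three = sizes[::-1][:3]             # assumed order: deepest scale first

# Each decode block upsamples to the size of the next (finer) pyramid level;
# the last block upsamples all the way back to the input resolution.
targets = top_three[1:] + [input_size]

print(sizes)      # [112, 56, 28, 14, 7]
print(top_three)  # [7, 14, 28]
print(targets)    # [14, 28, 224]
```

For a different input size, the same calculation gives the target shapes to pass to each `vgg_upsampling` block.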

The decode_blocks can be customized as well.

from keras.layers import Conv2D, Lambda, add
from keras_fcn.layers import BilinearUpSampling2D

def vgg_upsampling(classes, target_shape=None, scale=1, block_name='featx'):
    """A VGG convolutional block with bilinear upsampling for decoding.

    :param classes: Integer, number of classes
    :param scale: Float, scale factor to the input feature, varying from 0 to 1
    :param target_shape: 4D Tuples with target_height, target_width as
    the 2nd, 3rd elements if `channels_last` or as the 3rd, 4th elements if
    `channels_first`.

    >>> from keras_fcn.blocks import vgg_upsampling
    >>> feat1, feat2, feat3 = feat_pyramid[:3]
    >>> y = vgg_upsampling(classes=21, target_shape=(None, 14, 14, None),
    ...                    scale=1, block_name='feat1')(feat1, None)
    >>> y = vgg_upsampling(classes=21, target_shape=(None, 28, 28, None),
    ...                    scale=1e-2, block_name='feat2')(feat2, y)
    >>> y = vgg_upsampling(classes=21, target_shape=(None, 224, 224, None),
    ...                    scale=1e-4, block_name='feat3')(feat3, y)

    """
    def f(x, y):
        # 1x1 convolution producing per-class scores for this scale
        score = Conv2D(filters=classes, kernel_size=(1, 1),
                       activation='linear',
                       padding='valid',
                       kernel_initializer='he_normal',
                       name='score_{}'.format(block_name))(x)
        if y is not None:
            # Scale the scores and fuse them with the previous decoder output
            def scaling(xx, ss=1):
                return xx * ss
            scaled = Lambda(scaling, arguments={'ss': scale},
                            name='scale_{}'.format(block_name))(score)
            score = add([y, scaled])
        upscore = BilinearUpSampling2D(
            target_shape=target_shape,
            name='upscore_{}'.format(block_name))(score)
        return upscore
    return f
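To make the data flow of one decode block concrete, here is a NumPy stand-in for the fuse-and-upsample step on toy single-class score maps. It is only a sketch: the score maps replace the 1×1 convolution outputs, `bilinear_resize` and `decode_step` are hypothetical helpers, and the corner-aligned sampling used here is an assumption that may differ from what `BilinearUpSampling2D` actually does.

```python
import numpy as np

def bilinear_resize(x, out_h, out_w):
    """Resize a 2D array with bilinear interpolation (corner-aligned sampling)."""
    in_h, in_w = x.shape
    # Output sample positions expressed in input coordinates.
    rows = np.linspace(0, in_h - 1, out_h)
    cols = np.linspace(0, in_w - 1, out_w)
    r0 = np.floor(rows).astype(int)
    c0 = np.floor(cols).astype(int)
    r1 = np.minimum(r0 + 1, in_h - 1)
    c1 = np.minimum(c0 + 1, in_w - 1)
    wr = (rows - r0)[:, None]  # vertical weights, shape (out_h, 1)
    wc = (cols - c0)[None, :]  # horizontal weights, shape (1, out_w)
    top = x[r0][:, c0] * (1 - wc) + x[r0][:, c1] * wc
    bottom = x[r1][:, c0] * (1 - wc) + x[r1][:, c1] * wc
    return top * (1 - wr) + bottom * wr

def decode_step(score, y, scale, target_hw):
    """Fuse a scaled score map with the previous output, then upsample."""
    if y is not None:
        score = y + scale * score
    return bilinear_resize(score, *target_hw)

# Toy score maps standing in for the 1x1 convolution outputs at two scales.
score1 = np.array([[0.0, 1.0], [2.0, 3.0]])
score2 = np.full((3, 3), 100.0)

y = decode_step(score1, None, scale=1, target_hw=(3, 3))
y = decode_step(score2, y, scale=1e-2, target_hw=(5, 5))
print(y.shape)
```

As in `vgg_upsampling`, the scale is applied only when there is a previous output to fuse with, so the coarsest score map passes through unscaled.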

Try Examples

- Download VOC2011 dataset

$ wget "http://host.robots.ox.ac.uk/pascal/VOC/voc2011/VOCtrainval_25-May-2011.tar"

$ tar -xvf VOCtrainval_25-May-2011.tar

$ mkdir ~/Datasets

$ mv TrainVal/VOCdevkit/VOC2011 ~/Datasets

- Mount dataset from host to container and start bash in container image

From repository keras-fcn

$ nvidia-docker run -it --rm -v `pwd`:/root/workspace -v ${HOME}/Datasets/:/root/workspace/data jihong/keras-gpu bash

or equivalently,

$ make bash

- Within the container, run the following commands.

$ cd ~/workspace

$ pip install -e .

$ cd voc2011

$ python train.py

For more details, see the source code of the example in Training Pascal VOC2011 Segmentation.

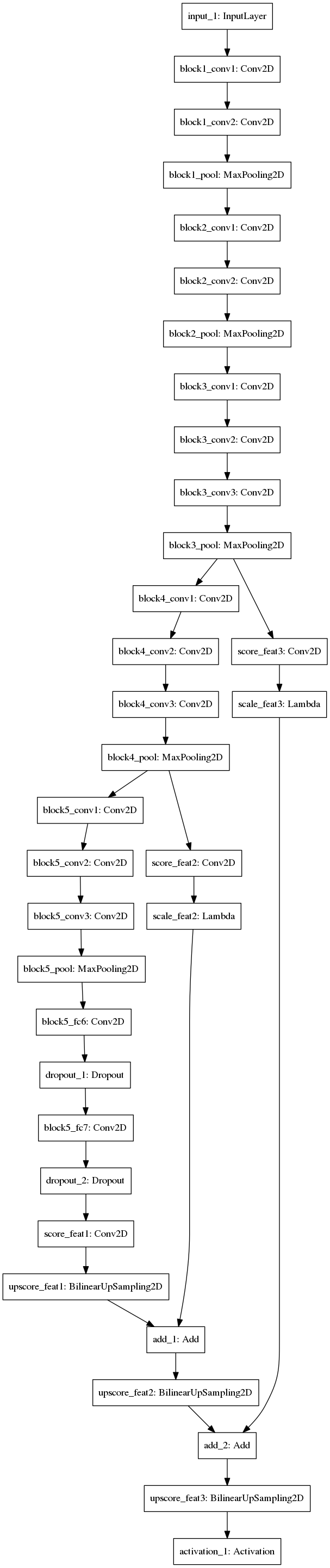

Model Architecture

FCN8s with VGG16 as base net:

TODO

- Add ResNet
