rchakode / Kube Opex Analytics


Overview

In a nutshell, kube-opex-analytics (literally Kubernetes Opex Analytics) is a tool that helps organizations track the resources consumed by their Kubernetes clusters so as to avoid overpaying. To this end, it generates short-, mid- and long-term usage reports showing how much of each resource every project consumes over time. The final goal is to ease cost allocation and capacity planning decisions with factual analytics.

Multi-cluster analytics:

kube-opex-analytics tracks the usage of a single Kubernetes cluster. For centralized usage analytics across multiple Kubernetes clusters, consider our Krossboard project. Watch a demo video here.

Table of Contents

- Overview

- Table of Contents

- Concepts

- Screenshots

- Getting Started

- Multi-cluster analytics

- License & Copyrights

- Support & Contributions

Concepts

kube-opex-analytics periodically collects CPU and memory usage metrics from the Kubernetes API, then processes and consolidates them over various time-aggregation perspectives (hourly, daily, monthly) to produce resource usage reports covering up to a year. The reports focus on the namespace level, and special care is taken to also account for and highlight the share of non-allocatable capacity.

Fundamental Principles

kube-opex-analytics is designed atop the following core concepts and features:

- Namespace-focused: Consolidated resource usage metrics treat individual namespaces as the fundamental units for resource sharing. Special care is also taken to account for and highlight non-allocatable resources.

- Hourly Usage & Trends: As on public clouds, resource consumption for each namespace is consolidated on an hourly basis. This corresponds to the ratio (%) of resources used per namespace during each hour. It is the foundation for cost allocation, and it also reveals trends over time in the resources consumed per namespace and at the scale of the whole Kubernetes cluster.

- Daily and Monthly Usage Costs: Provides, for each period (daily/monthly), namespace, and resource type (CPU/memory), a consolidated cost computed in one of the following ways: (i) accumulated hourly usage over the period; (ii) actual costs computed from resource usage and a given hourly billing rate; (iii) normalized ratio of usage per namespace compared against the global cluster usage.

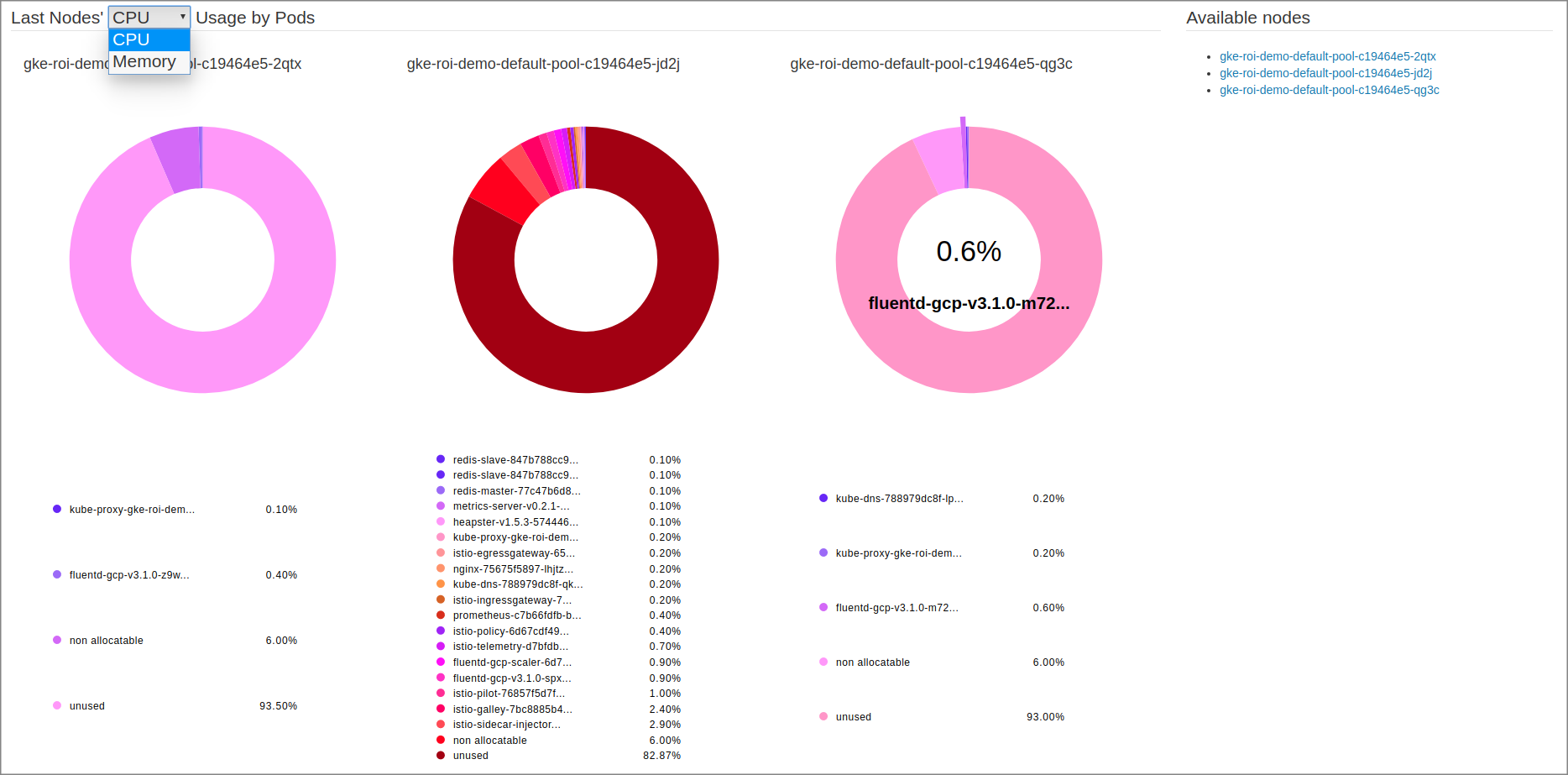

- Occupation of Nodes by Namespaced Pods: Highlights for each node the share of resources used by active pods labelled by their namespace.

- Efficient Visualization: For the metrics it generates, kube-opex-analytics provides dashboards with relevant charts covering periods from the last couple of hours up to the last 12 months. These include built-in charts, a Prometheus exporter, and a Grafana dashboard that all work out of the box.

Cost Models

Cost allocation models can be set through the startup configuration variable KOA_COST_MODEL. Possible values are:

- CUMULATIVE_RATIO: (default value) compute costs as cumulative resource usage for each period of time (daily, monthly).

- RATIO: compute costs as normalized ratios (%) of resource usage during each period of time.

- CHARGE_BACK: compute actual costs using a given cluster hourly rate and the cumulative resource usage during each period of time.

Read the Configuration section for more details.
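To make the difference between the three models concrete, here is a minimal Python sketch. It is not the tool's actual implementation: the input shape (a hypothetical mapping from namespace to its hourly usage shares, expressed as fractions of cluster capacity) and the function names are assumptions made for illustration only.

```python
from typing import Dict, List

# Hypothetical input: for each namespace, the share of cluster capacity
# (a fraction between 0 and 1) used during each hour of the period.
HourlyShares = Dict[str, List[float]]


def cumulative_ratio(shares: HourlyShares) -> Dict[str, float]:
    """CUMULATIVE_RATIO: sum the hourly shares over the period."""
    return {ns: sum(hours) for ns, hours in shares.items()}


def ratio(shares: HourlyShares) -> Dict[str, float]:
    """RATIO: cumulative usage of each namespace as a percentage of the total."""
    totals = cumulative_ratio(shares)
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {ns: 0.0 for ns in totals}
    return {ns: 100.0 * value / grand_total for ns, value in totals.items()}


def charge_back(shares: HourlyShares, hourly_rate: float) -> Dict[str, float]:
    """CHARGE_BACK: price the cumulative usage at the cluster hourly rate."""
    totals = cumulative_ratio(shares)
    return {ns: hourly_rate * total for ns, total in totals.items()}


if __name__ == "__main__":
    # Two namespaces observed over two hours (made-up numbers).
    period = {"team-a": [0.50, 0.25], "team-b": [0.25, 0.25]}
    print(cumulative_ratio(period))
    print(ratio(period))
    print(charge_back(period, hourly_rate=8.0))
```

In this sketch all three models start from the same hourly shares. Only the final step differs: the shares are summed, normalized, or priced.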

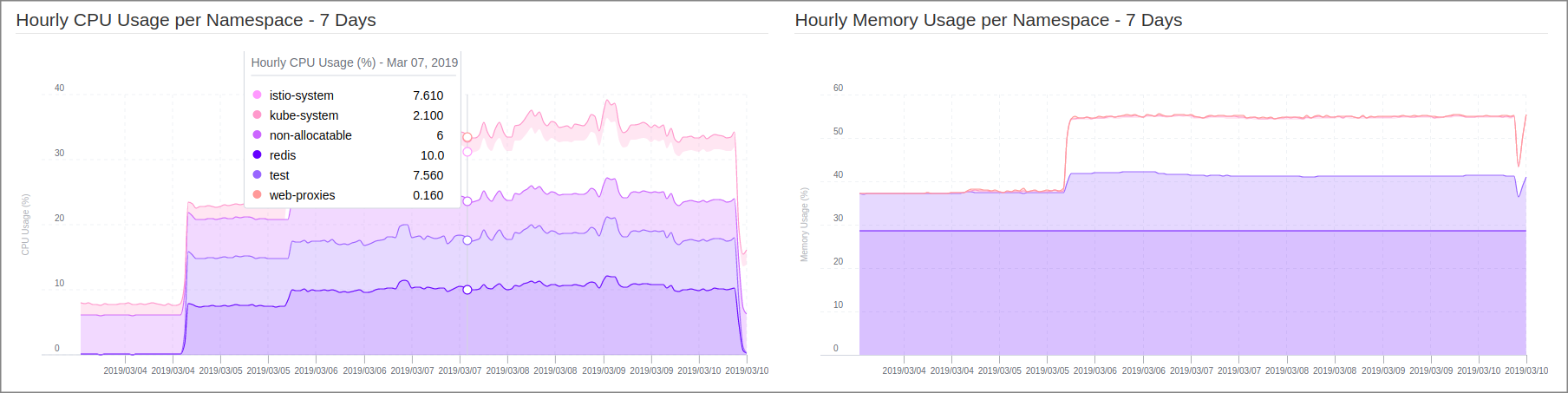

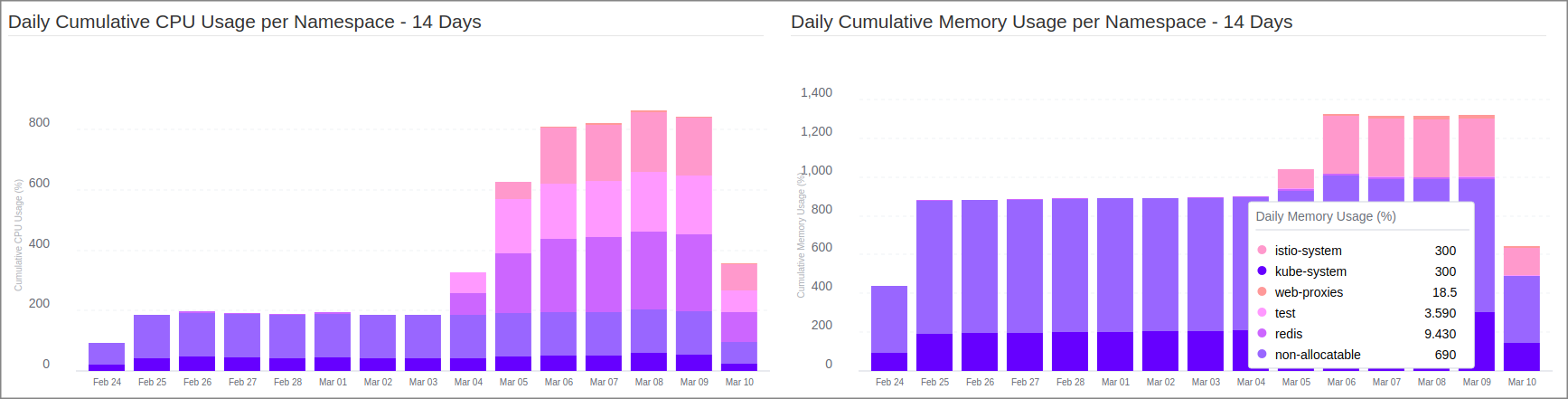

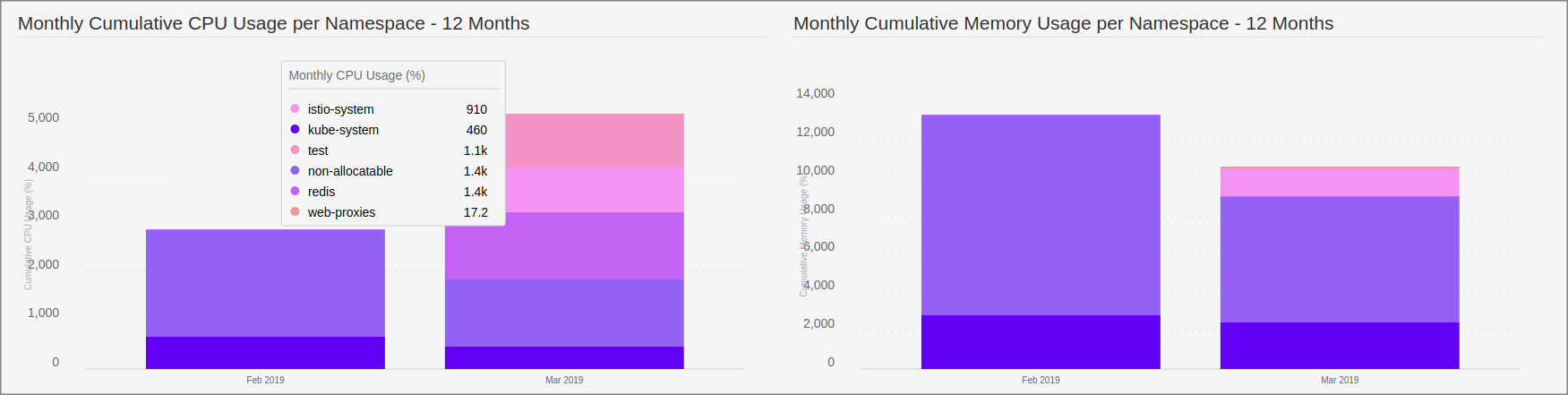

Screenshots

The screenshots below illustrate some of the reports available through the built-in charts of kube-opex-analytics, or through Grafana backed by its Prometheus exporter.

Last Week Hourly Resource Usage Trends

Two-weeks Daily CPU and Memory Usage

One-year Monthly CPU and Memory Usage

Nodes' Occupation by Pods

Grafana Dashboard

This is a screenshot of our official Grafana dashboard, backed by the built-in Prometheus exporter of kube-opex-analytics.

Getting Started

Kubernetes API Access

kube-opex-analytics needs read-only access to the following Kubernetes APIs.

- /api/v1

- /apis/metrics.k8s.io/v1beta1 (provided by Kubernetes Metrics Server, which must be installed on the cluster if it is not already).

You need to provide the base URL of the Kubernetes API when starting the program.

- For a typical deployment inside the Kubernetes cluster, provide the local cluster API endpoint (i.e. https://kubernetes.default).

- Otherwise, if you are planning an installation outside the Kubernetes cluster, you can provide either the URL of the Kubernetes API (e.g. https://1.2.3.4:6443) or a proxied API (the command kubectl proxy opens proxied access to the Kubernetes API, by default at the endpoint http://127.0.0.1:8001).

When deployed outside the cluster without proxied access, you will likely need to provide credentials to authenticate against the Kubernetes API. The credentials can be a Bearer token, Basic authentication credentials, or an X509 client certificate. See Configuration Variables for more details.
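Before starting the tool, it can be useful to check that both API paths are readable from where you plan to run it. The following Python sketch uses only the standard library. The helper names build_request and can_read are hypothetical, and only Bearer token authentication is covered here.

```python
import ssl
import urllib.request
from typing import Optional

# The two API paths that must be readable, as listed in this section.
REQUIRED_PATHS = ("/api/v1", "/apis/metrics.k8s.io/v1beta1")


def build_request(base_url: str, path: str,
                  token: Optional[str] = None) -> urllib.request.Request:
    """Build a GET request for one API path, adding a Bearer token if given."""
    request = urllib.request.Request(base_url.rstrip("/") + path)
    if token:
        request.add_header("Authorization", f"Bearer {token}")
    return request


def can_read(base_url: str, path: str, token: Optional[str] = None,
             cacert: Optional[str] = None) -> bool:
    """Return True if the API path answers with HTTP 200."""
    # A TLS context is only needed for https endpoints; cacert may point
    # to the CA file of a self-signed certificate.
    context = None
    if base_url.startswith("https"):
        context = ssl.create_default_context(cafile=cacert)
    try:
        request = build_request(base_url, path, token)
        with urllib.request.urlopen(request, context=context, timeout=10) as response:
            return response.status == 200
    except OSError:  # URLError and HTTPError are subclasses of OSError
        return False
```

For example, with kubectl proxy running on its default endpoint, calling can_read("http://127.0.0.1:8001", path) for each entry of REQUIRED_PATHS should return True when both APIs are available.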

Configuration Variables

When needed, these configuration environment variables shall be set before starting kube-opex-analytics:

- KOA_DB_LOCATION sets the path used to store internal data. If you use a volume to store this data, make sure this path lies under the volume's mount point.

- KOA_K8S_API_ENDPOINT sets the endpoint of the Kubernetes API.

- KOA_K8S_CACERT sets the path to the CA file for a self-signed certificate.

- KOA_K8S_AUTH_TOKEN sets a Bearer token to authenticate against the Kubernetes API.

- KOA_K8S_AUTH_CLIENT_CERT sets the path to the X509 client certificate to authenticate against the Kubernetes API.

- KOA_K8S_AUTH_CLIENT_CERT_KEY sets the path to the X509 client certificate key.

- KOA_K8S_AUTH_USERNAME sets the username to authenticate against the Kubernetes API using Basic Authentication.

- KOA_K8S_AUTH_PASSWORD sets the password for Basic Authentication.

- KOA_COST_MODEL (version >=0.2.0): sets the cost allocation model to use. Possible values are: CUMULATIVE_RATIO (default) computes cost as cumulative resource usage for each period of time (daily, monthly); CHARGE_BACK computes cost based on a given cluster hourly rate (see KOA_BILLING_HOURLY_RATE); RATIO computes cost as a normalized percentage of resource usage during each period of time.

- KOA_BILLING_HOURLY_RATE (required if the cost model is CHARGE_BACK): defines a positive floating-point number corresponding to an estimated hourly rate for the Kubernetes cluster. For example, if your cluster costs $5,000 a month (i.e. about 30*24 hours), its estimated hourly cost would be about 6.94 = 5000/(30*24).

- KOA_BILLING_CURRENCY_SYMBOL (optional, default is '$'): sets a currency string used to annotate costs on reports.
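The rules for the cost-related variables above can be sketched in Python as follows. This is an illustration under assumptions, not how kube-opex-analytics itself reads its configuration: the function names and the returned dictionary layout are hypothetical.

```python
import os
from typing import Mapping, Optional

COST_MODELS = ("CUMULATIVE_RATIO", "RATIO", "CHARGE_BACK")


def hourly_rate_from_monthly(monthly_cost: float, days: int = 30) -> float:
    """Estimate an hourly rate from a monthly cost, assuming a `days`-day month."""
    return monthly_cost / (days * 24)


def read_cost_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Read and validate the cost-related variables described above."""
    model = env.get("KOA_COST_MODEL", "CUMULATIVE_RATIO")
    if model not in COST_MODELS:
        raise ValueError(f"unknown KOA_COST_MODEL: {model!r}")

    rate: Optional[float] = None
    if model == "CHARGE_BACK":
        # The hourly rate is only mandatory for the CHARGE_BACK model.
        raw = env.get("KOA_BILLING_HOURLY_RATE")
        if raw is None:
            raise ValueError("KOA_BILLING_HOURLY_RATE is required with CHARGE_BACK")
        rate = float(raw)
        if rate <= 0:
            raise ValueError("KOA_BILLING_HOURLY_RATE must be positive")

    return {
        "model": model,
        "hourly_rate": rate,
        "currency": env.get("KOA_BILLING_CURRENCY_SYMBOL", "$"),
    }


if __name__ == "__main__":
    # Hourly rate for a cluster costing 5000 a month, as in the example above.
    print(round(hourly_rate_from_monthly(5000), 2))
    print(read_cost_settings({"KOA_COST_MODEL": "RATIO"}))
```

Passing the environment as a parameter keeps the sketch easy to try with a plain dictionary instead of real environment variables.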

Deployment on Docker

kube-opex-analytics is released as a Docker image. So you can quickly start an instance of the service by running the following command:

$ docker run -d \
    --net="host" \
    --name 'kube-opex-analytics' \
    -v /var/lib/kube-opex-analytics:/data \
    -e KOA_DB_LOCATION=/data/db \
    -e KOA_K8S_API_ENDPOINT=http://127.0.0.1:8001 \
    rchakode/kube-opex-analytics

In this command:

- We provide a local path /var/lib/kube-opex-analytics as the data volume for the container. That's where kube-opex-analytics will store its internal analytics data. You can change this local path to another location, but please keep the container volume /data as is.

- The environment variable KOA_DB_LOCATION points to the container path used to store data. Note that this directory belongs to the data volume attached to the container.

- The environment variable KOA_K8S_API_ENDPOINT sets the address of the Kubernetes API endpoint.

Get Access to the User Interface

Once the container has started, you can access the kube-opex-analytics web interface at http://<DOCKER_HOST>:5483/, where <DOCKER_HOST> should be replaced by the IP address or the hostname of the Docker server.

For instance, if you're running Docker on your local machine the interface will be available at: http://127.0.0.1:5483/

You typically need to wait almost an hour to have all charts filled. This is normal behavior for kube-opex-analytics, which is an hourly-based analytics tool.

Deployment on a Kubernetes cluster

There is a Helm chart to ease the deployment on Kubernetes using either Helm or kubectl.

First review the values.yaml file to customize the configuration options according to your specific environment.

In particular, you may need to customize the default settings used for the persistent data volume, the Prometheus Operator and its ServiceMonitor, the security context, and many others.

Security Context:

The kube-opex-analytics pod is deployed with an unprivileged security context by default. However, if needed, it's possible to launch the pod in privileged mode by setting the Helm configuration value securityContext.enabled to false.

In the next deployment commands, it's assumed that the target namespace kube-opex-analytics exists. You thus need to create it first or, alternatively, adapt the commands to use any other namespace of your choice.

Installation using Helm

The deployment, which is validated with Helm 2 and 3, can be performed as follows.

helm upgrade \
    --namespace kube-opex-analytics \
    --install kube-opex-analytics \
    helm/kube-opex-analytics/

Installation using Kubectl

This approach requires the Helm client (version 2 or 3) to be installed, as it is used to generate a raw template for kubectl.

$ helm template \
    kube-opex-analytics \
    --namespace kube-opex-analytics \
    helm/kube-opex-analytics/ | kubectl apply -f -

Get Access to UI Service

The Helm chart deploys an HTTP service named kube-opex-analytics on port 80 in the selected namespace, providing access to the built-in dashboard of kube-opex-analytics.

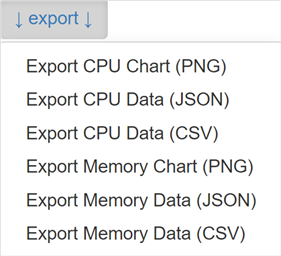

Export Charts and Datasets (PNG, CSV, JSON)

Any chart provided by kube-opex-analytics can be exported as a PNG image, or as a CSV or JSON data file.

The export steps are the following:

- Open the kube-opex-analytics interface.

- Go to the chart whose dataset you want to export.

- Click on the tricolon icon near the chart title, then select the desired export format.

- You're done: the last step downloads the result file immediately.

Prometheus Exporter

Starting from version 0.3.0, kube-opex-analytics enables a Prometheus exporter through the endpoint /metrics.

The exporter exposes the following metrics:

- koa_namespace_hourly_usage exposes for each namespace its current hourly resource usage for both CPU and memory.

- koa_namespace_daily_usage exposes for each namespace and for the ongoing day, its current resource usage for both CPU and memory.

- koa_namespace_monthly_usage exposes for each namespace and for the ongoing month, its current resource usage for both CPU and memory.

The Prometheus scraping job can be configured like below (adapt the target URL if needed). A scraping interval less than 5 minutes (i.e. 300s) is useless as kube-opex-analytics would not generate any new metrics in the meantime.

scrape_configs:
  - job_name: 'kube-opex-analytics'
    scrape_interval: 300s
    static_configs:
      - targets: ['kube-opex-analytics:5483']

When the option prometheusOperator is enabled during the deployment (see the Helm values.yaml file), you have nothing to do, as the scraping should be automatically configured by the deployed Prometheus ServiceMonitor.
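If you want to consume the exporter's output without a Prometheus server, the text returned by /metrics can be parsed directly. The Python sketch below is a simplified parser for sample lines of the Prometheus text format (it does not unescape label values). The label names and values in the sample text are made up for illustration, since the labels actually exposed by the exporter are not listed here.

```python
import re
from typing import Dict, Iterator, Tuple

# One sample line: metric name, optional {labels}, then a value.
SAMPLE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)')
# One label pair inside the braces: name="value".
LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_samples(text: str) -> Iterator[Tuple[str, Dict[str, str], float]]:
    """Yield (metric, labels, value) for each sample line, skipping comments."""
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = SAMPLE.match(line)
        if match:
            name, raw_labels, value = match.groups()
            yield name, dict(LABEL.findall(raw_labels or "")), float(value)


# Hypothetical scrape output: label names and values are assumptions.
EXAMPLE = '''
# comment lines such as this one are skipped
koa_namespace_hourly_usage{namespace="team-a",resource="CPU"} 12.5
koa_namespace_hourly_usage{namespace="team-b",resource="CPU"} 3.0
'''

if __name__ == "__main__":
    for metric, labels, value in parse_samples(EXAMPLE):
        print(metric, labels, value)
```

For anything beyond a quick check, a maintained Prometheus client library is likely a better choice than a hand-written parser.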

Grafana Dashboards

You can either build your own Grafana dashboard or use our official one.

The official Grafana dashboard is designed to work out of the box with the kube-opex-analytics Prometheus exporter. It requires a Grafana variable named KOA_DS_PROMETHEUS, which must point to your Prometheus server data source.

The dashboard currently provides the following reports:

- Hourly resource usage over time.

- Current day's ongoing resource usage.

- Current month's ongoing resource usage.

Note that these reports are less rich than the ones provided by the built-in kube-opex-analytics dashboard. In particular, the daily and monthly usage for the different namespaces are not stacked, and there are no analytics for past days and months. These limitations are inherent to how Grafana handles time series and bar charts.

Multi-cluster analytics

Thanks to a partnership with the 2Alchemists SAS company, this feature is now implemented by Krossboard.

It's actively tested against Amazon EKS, Microsoft AKS, Google GKE, Red Hat OpenShift, Rancher RKE, and various vanilla deployments. [Learn more...]

License & Copyrights

kube-opex-analytics (code and documentation) is licensed under the terms of Apache License 2.0. Read the LICENSE file for more details on the license terms.

It includes and is bound to third-party libraries provided with their own licenses and copyrights. Read the NOTICE file for additional information.

Support & Contributions

We encourage feedback and always do our best to address any trouble you may encounter when using kube-opex-analytics.

Here is the link to submit issues: https://github.com/rchakode/kube-opex-analytics/issues.

New ideas are welcome. If you have an idea to improve the project, please open an issue to submit it.

Contributions are accepted provided that the code and documentation are released under the terms of the Apache 2.0 License.

To contribute bug patches or new features, please submit a Pull Request.