amansrivastava17 / Lstm Siamese Text Similarity

License: MIT

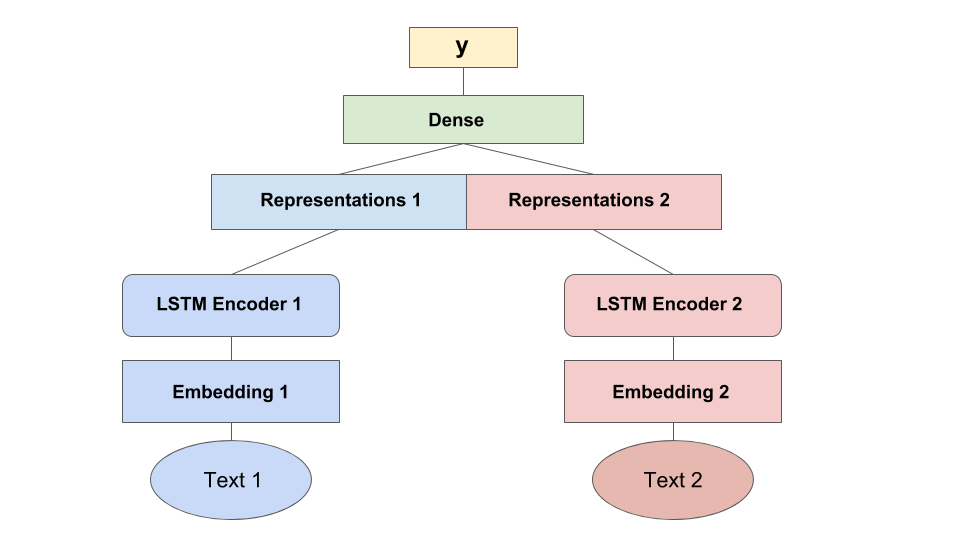

⚛️ A Keras-based implementation of a siamese architecture that uses LSTM encoders to compute text similarity.

Stars: ✭ 216

Programming Languages

python


Projects that are alternatives to or similar to Lstm Siamese Text Similarity

Tensorflow Sentiment Analysis On Amazon Reviews Data

Implementing different RNN models (LSTM, GRU) and convolution models (Conv1D, Conv2D) on a subset of Amazon Reviews data with TensorFlow on Python 3. A sentiment analysis project.

Stars: ✭ 34 (-84.26%)

Mutual labels: lstm, lstm-neural-networks

Contextual Utterance Level Multimodal Sentiment Analysis

Context-Dependent Sentiment Analysis in User-Generated Videos

Stars: ✭ 86 (-60.19%)

Mutual labels: lstm, lstm-neural-networks

Image Captioning

Image Captioning: Implementing the Neural Image Caption Generator with Python

Stars: ✭ 52 (-75.93%)

Mutual labels: lstm, lstm-neural-networks

Chameleon recsys

Source code of CHAMELEON - A Deep Learning Meta-Architecture for News Recommender Systems

Stars: ✭ 202 (-6.48%)

Mutual labels: lstm, lstm-neural-networks

Deep Generation

In this project I used a recurrent neural network to generate C code based on a dataset of C files from the Linux repository.

Stars: ✭ 101 (-53.24%)

Mutual labels: lstm, lstm-neural-networks

Deep Learning Time Series

List of papers, code and experiments using deep learning for time series forecasting

Stars: ✭ 796 (+268.52%)

Mutual labels: lstm, lstm-neural-networks

Lstm anomaly thesis

Anomaly detection for temporal data using LSTMs

Stars: ✭ 178 (-17.59%)

Mutual labels: lstm, lstm-neural-networks

Paraphraser

Sentence paraphrase generation at the sentence level

Stars: ✭ 283 (+31.02%)

Mutual labels: lstm, lstm-neural-networks

Text predictor

Char-level RNN LSTM text generator📄.

Stars: ✭ 99 (-54.17%)

Mutual labels: lstm, lstm-neural-networks

Pytorch Learners Tutorial

PyTorch tutorial for learners

Stars: ✭ 97 (-55.09%)

Mutual labels: lstm, lstm-neural-networks

Stockpriceprediction

Stock Price Prediction using Machine Learning Techniques

Stars: ✭ 700 (+224.07%)

Mutual labels: lstm, lstm-neural-networks

Image Caption Generator

[DEPRECATED] A Neural Network based generative model for captioning images using Tensorflow

Stars: ✭ 141 (-34.72%)

Mutual labels: lstm, lstm-neural-networks

Predictive Maintenance Using Lstm

Example of Multiple Multivariate Time Series Prediction with LSTM Recurrent Neural Networks in Python with Keras.

Stars: ✭ 352 (+62.96%)

Mutual labels: lstm, lstm-neural-networks

Simple Chatbot Keras

Design and build a chatbot using data from the Cornell Movie Dialogues corpus, using Keras

Stars: ✭ 30 (-86.11%)

Mutual labels: lstm, lstm-neural-networks

Personality Detection

Implementation of a hierarchical CNN-based model to detect Big Five personality traits

Stars: ✭ 338 (+56.48%)

Mutual labels: lstm, lstm-neural-networks

Bitcoin Price Prediction Using Lstm

Bitcoin price prediction (time series) using an LSTM recurrent neural network

Stars: ✭ 67 (-68.98%)

Mutual labels: lstm, lstm-neural-networks

OCR

Optical character recognition using deep learning

Stars: ✭ 25 (-88.43%)

Mutual labels: lstm, lstm-neural-networks

object-tracking

Multiple object tracking system in Keras (detection network: YOLO)

Stars: ✭ 89 (-58.8%)

Mutual labels: lstm, lstm-neural-networks

Cnn lstm for text classify

CNN, LSTM, NBOW, and fastText models for Chinese text classification

Stars: ✭ 90 (-58.33%)

Mutual labels: lstm, lstm-neural-networks

Repo 2016

R, Python and Mathematica Codes in Machine Learning, Deep Learning, Artificial Intelligence, NLP and Geolocation

Stars: ✭ 103 (-52.31%)

Mutual labels: lstm, lstm-neural-networks

Text Similarity Using Siamese Deep Neural Network

Siamese neural networks are a class of neural network architectures that contain two or more identical subnetworks. "Identical" here means the subnetworks have the same configuration with the same parameters and weights, so parameter updates are mirrored across all of them.
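The weight sharing described above can be sketched in a few lines of plain Python. This toy replaces the LSTM with a hypothetical linear encoder (`encode`); the point is only that both branches read one weight matrix `W`, so one update changes both branches at once. In Keras the same effect is usually obtained by creating a single layer (or model) instance and calling it on both inputs.

```python
def encode(x, W):
    """Toy linear encoder: returns the matrix-vector product W @ x."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def siamese_forward(x1, x2, W):
    """Both branches call the same encoder with the same weights W."""
    return encode(x1, W), encode(x2, W)


def sgd_step(W, grad, lr=0.1):
    """One gradient-descent update of the single shared weight matrix."""
    return [[w - lr * g for w, g in zip(w_row, g_row)]
            for w_row, g_row in zip(W, grad)]


W = [[0.5, -1.0, 0.0],
     [1.0, 0.0, 2.0]]

h1, h2 = siamese_forward([1.0, 2.0, 3.0], [1.0, 2.0, 3.0], W)
print(h1, h2)  # [-1.5, 7.0] [-1.5, 7.0]

# After the update there is still exactly one W, so both branches see the
# new weights: updates are "mirrored" by construction.
W = sgd_step(W, [[1.0, 1.0, 1.0], [0.0, 0.0, 0.0]], lr=0.5)
h1, h2 = siamese_forward([1.0, 2.0, 3.0], [1.0, 2.0, 3.0], W)
print(h1, h2)  # [-4.5, 7.0] [-4.5, 7.0]
```

Because the parameters exist only once, the two branches can never drift apart during training, and identical inputs always produce identical encodings.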

This is a Keras-based implementation of a deep siamese bidirectional LSTM network that captures phrase/sentence similarity using word embeddings.
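To illustrate the "bidirectional" part, here is a toy sketch in plain Python. It swaps the LSTM cell for a one-unit tanh recurrent cell (a simplification, not what the repository uses) and, like the Keras `Bidirectional` wrapper with its default `merge_mode='concat'`, concatenates the final state of a left-to-right pass with that of a right-to-left pass. The function names and parameter values are hypothetical.

```python
import math


def rnn_final_state(sequence, w_in, w_rec):
    """Run a one-unit tanh RNN over a sequence of scalars and return the last
    hidden state. A stand-in for an LSTM cell, used only to keep this short."""
    h = 0.0
    for x in sequence:
        h = math.tanh(w_in * x + w_rec * h)
    return h


def bidirectional_encode(sequence, fwd_params, bwd_params):
    """Concatenate a forward pass and a backward (reversed-input) pass,
    each with its own weights, into one encoding."""
    forward = rnn_final_state(sequence, *fwd_params)
    backward = rnn_final_state(list(reversed(sequence)), *bwd_params)
    return [forward, backward]


# In a siamese setup the SAME bidirectional encoder (same forward and
# backward weights) encodes both sentences of a pair.
fwd, bwd = (0.8, 0.5), (0.6, -0.3)
enc_a = bidirectional_encode([0.1, 0.7, -0.2], fwd, bwd)
enc_b = bidirectional_encode([0.1, 0.7, -0.2], fwd, bwd)
print(enc_a == enc_b)  # True
```

Reading the sequence in both directions lets the encoding depend on context on either side of each token, which is the usual motivation for bidirectional encoders.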

Below is a description of the architecture.

Install dependencies

pip install -r requirements.txt

Usage

Training

from model import SiameseBiLSTM

from inputHandler import word_embed_meta_data, create_test_data

from config import siamese_config

import pandas as pd

############ Data Preparation ##########

df = pd.read_csv('sample_data.csv')

sentences1 = list(df['sentences1'])

sentences2 = list(df['sentences2'])

is_similar = list(df['is_similar'])

del df

######## Word Embedding ############

tokenizer, embedding_matrix = word_embed_meta_data(sentences1 + sentences2, siamese_config['EMBEDDING_DIM'])

embedding_meta_data = {

'tokenizer': tokenizer,

'embedding_matrix': embedding_matrix

}

## creating sentence pairs

sentences_pair = [(x1, x2) for x1, x2 in zip(sentences1, sentences2)]

del sentences1

del sentences2

######## Training ########

class Configuration(object):
    """Simple namespace for the hyperparameters read from siamese_config."""

CONFIG = Configuration()

CONFIG.embedding_dim = siamese_config['EMBEDDING_DIM']

CONFIG.max_sequence_length = siamese_config['MAX_SEQUENCE_LENGTH']

CONFIG.number_lstm_units = siamese_config['NUMBER_LSTM']

CONFIG.rate_drop_lstm = siamese_config['RATE_DROP_LSTM']

CONFIG.number_dense_units = siamese_config['NUMBER_DENSE_UNITS']

CONFIG.activation_function = siamese_config['ACTIVATION_FUNCTION']

CONFIG.rate_drop_dense = siamese_config['RATE_DROP_DENSE']

CONFIG.validation_split_ratio = siamese_config['VALIDATION_SPLIT']

siamese = SiameseBiLSTM(CONFIG.embedding_dim, CONFIG.max_sequence_length, CONFIG.number_lstm_units, CONFIG.number_dense_units, CONFIG.rate_drop_lstm, CONFIG.rate_drop_dense, CONFIG.activation_function, CONFIG.validation_split_ratio)

best_model_path = siamese.train_model(sentences_pair, is_similar, embedding_meta_data, model_save_directory='./')
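The training script relies on `word_embed_meta_data` to return a tokenizer and an embedding matrix, but its internals are not shown here. Assuming it follows common Keras conventions (word ids start at 1 in order of frequency, id 0 is reserved for padding, and the embedding matrix has one row per id), the data structures look roughly like this hypothetical sketch. `build_word_index` and `build_embedding_matrix` are illustrative names, and the random vectors are placeholders for vectors from a trained embedding model.

```python
import random
from collections import Counter


def build_word_index(sentences):
    """Map each lower-cased word to an id starting at 1, most frequent first.
    Id 0 is left free for padding. Unlike Keras' Tokenizer, this does not strip
    punctuation, and it breaks frequency ties alphabetically for determinism."""
    counts = Counter(w for s in sentences for w in s.lower().split())
    ordered = sorted(counts, key=lambda w: (-counts[w], w))
    return {word: i for i, word in enumerate(ordered, start=1)}


def build_embedding_matrix(word_index, embedding_dim, word_vectors=None, seed=0):
    """Return a (len(word_index) + 1) x embedding_dim matrix where row i is the
    vector for word id i. Row 0 (padding) stays all zeros; words missing from
    word_vectors get small random placeholder vectors."""
    rng = random.Random(seed)
    word_vectors = word_vectors or {}
    matrix = [[0.0] * embedding_dim for _ in range(len(word_index) + 1)]
    for word, i in word_index.items():
        vec = word_vectors.get(word)
        if vec is not None:
            matrix[i] = list(vec)
        else:
            matrix[i] = [rng.uniform(-0.05, 0.05) for _ in range(embedding_dim)]
    return matrix


word_index = build_word_index(["What can make physics easy",
                               "How can you make physics easy"])
embedding_matrix = build_embedding_matrix(word_index, embedding_dim=4)
print(word_index)
# {'can': 1, 'easy': 2, 'make': 3, 'physics': 4, 'how': 5, 'what': 6, 'you': 7}
```

Keeping row 0 at zero means padded positions contribute no embedding signal, and building the vocabulary from `sentences1 + sentences2` (as the script does) ensures both branches share one id space.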

Testing

from operator import itemgetter

from keras.models import load_model

model = load_model(best_model_path)

test_sentence_pairs = [('What can make Physics easy to learn?','How can you make physics easy to learn?'),('How many times a day do a clocks hands overlap?','What does it mean that every time I look at the clock the numbers are the same?')]

test_data_x1, test_data_x2, leaks_test = create_test_data(tokenizer,test_sentence_pairs, siamese_config['MAX_SEQUENCE_LENGTH'])

preds = list(model.predict([test_data_x1, test_data_x2, leaks_test], verbose=1).ravel())

results = [(x, y, z) for (x, y), z in zip(test_sentence_pairs, preds)]

results.sort(key=itemgetter(2), reverse=True)

print(results)
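The model is called with three inputs produced by `create_test_data`: two id matrices and a "leaks" array. The repository's implementation is not shown here, so the following is a hypothetical sketch. It assumes that sentences are mapped to word ids, pre-padded or pre-truncated to `MAX_SEQUENCE_LENGTH` as Keras `pad_sequences` does by default, and that the leak features are simple overlap counts. All function names are illustrative.

```python
def texts_to_sequences(texts, word_index):
    """Convert each text to a list of word ids, dropping unknown words.
    Punctuation is not stripped here, so 'learn?' and 'learn' differ."""
    return [[word_index[w] for w in t.lower().split() if w in word_index]
            for t in texts]


def pad_sequence(seq, maxlen, value=0):
    """Pre-truncate and pre-pad to maxlen (the Keras pad_sequences defaults)."""
    if maxlen < 0:
        raise ValueError("maxlen must be non-negative")
    seq = seq[max(len(seq) - maxlen, 0):]  # keep the last maxlen ids
    return [value] * (maxlen - len(seq)) + seq


def leak_features(seq1, seq2):
    """Hypothetical pair features: unique ids per sentence and their overlap."""
    s1, s2 = set(seq1), set(seq2)
    return [len(s1), len(s2), len(s1 & s2)]


def prepare_pairs(pairs, word_index, maxlen):
    """Return padded id lists for both sides plus one leak vector per pair."""
    seqs1 = texts_to_sequences([a for a, _ in pairs], word_index)
    seqs2 = texts_to_sequences([b for _, b in pairs], word_index)
    x1 = [pad_sequence(s, maxlen) for s in seqs1]
    x2 = [pad_sequence(s, maxlen) for s in seqs2]
    leaks = [leak_features(a, b) for a, b in zip(seqs1, seqs2)]
    return x1, x2, leaks


word_index = {"can": 1, "easy": 2, "make": 3, "physics": 4,
              "how": 5, "what": 6, "you": 7}
x1, x2, leaks = prepare_pairs(
    [("What can make physics easy", "How can you make physics easy")],
    word_index, maxlen=6)
print(x1, x2, leaks)  # [[0, 6, 1, 3, 4, 2]] [[5, 1, 7, 3, 4, 2]] [[5, 6, 4]]
```

Computing the leak features on the unpadded id lists keeps the padding id 0 from being counted as a shared word.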

References:

- Mueller, J. and Thyagarajan, A. Siamese Recurrent Architectures for Learning Sentence Similarity. AAAI, 2016.

- Inspired by the TensorFlow implementation at https://github.com/dhwajraj/deep-siamese-text-similarity
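The first reference (the Manhattan LSTM, or MaLSTM) scores a sentence pair by applying exp(-||h_a - h_b||_1) to the final hidden states h_a and h_b of the two shared-weight LSTMs, which yields a value in (0, 1]. A minimal sketch of that similarity function follows. The usage code above feeds the model an extra leaks input, so this repository may combine the encodings differently; treat the sketch as an illustration of the cited paper only.

```python
import math


def manhattan_similarity(h_a, h_b):
    """exp(-||h_a - h_b||_1): 1.0 for identical encodings, approaching 0
    as the encodings move apart in L1 distance."""
    if len(h_a) != len(h_b):
        raise ValueError("encodings must have the same length")
    l1 = sum(abs(a - b) for a, b in zip(h_a, h_b))
    return math.exp(-l1)


print(manhattan_similarity([0.2, -0.4], [0.2, -0.4]))  # 1.0
```

Because the score is a fixed function of the two encodings, it has no parameters of its own, and all learning happens in the shared encoder.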
