naszilla / Naszilla

A repository to compare many popular NAS algorithms seamlessly across three popular benchmarks (NASBench 101, 201, and 301). You can implement your own NAS algorithm, and then easily compare it with eleven algorithms across three benchmarks.

This repository contains the official code for three papers, including a NeurIPS 2020 spotlight paper. The papers are listed in the Citation section.

Installation

Clone this repository and install its requirements (which include nasbench, nas-bench-201, and nasbench301). It may take a few minutes.

git clone https://github.com/naszilla/naszilla

cd naszilla

cat requirements.txt | xargs -n 1 -L 1 pip install

pip install -e .

You might need to replace line 32 of src/nasbench301/surrogate_models/surrogate_models.py with a new path to the configspace file:

self.config_loader = utils.ConfigLoader(os.path.expanduser('~/naszilla/src/nasbench301/configspace.json'))

Next, download the NAS benchmark datasets, either with the terminal commands below or from their respective websites (nasbench, nas-bench-201, and nasbench301).

The versions recommended for use with naszilla are nasbench_only108.tfrecord, NAS-Bench-201-v1_0-e61699.pth, and nasbench301_models_v0.9.zip.

If you use a different version, you might need to edit some of the naszilla code.

# these files are 0.5GB, 2.1GB, and 1.6GB, respectively

wget https://storage.googleapis.com/nasbench/nasbench_only108.tfrecord

wget "https://ndownloader.figshare.com/files/25506206?private_link=7d47bf57803227af4909" -O NAS-Bench-201-v1_0-e61699.pth

wget https://ndownloader.figshare.com/files/24693026 -O nasbench301_models_v0.9.zip

unzip nasbench301_models_v0.9.zip

Place the three downloaded benchmark data files in ~/nas_benchmark_datasets (or choose another directory and edit line 15 of naszilla/nas_benchmarks.py accordingly).
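Before running anything, it can help to confirm that the benchmark files are where naszilla expects them. The following is a minimal sketch, not part of naszilla: the helper name `missing_benchmark_files` is hypothetical, and the list of names simply mirrors the recommended versions above. The text does not say what the nasbench301 archive is called once unzipped, so the sketch checks for the zip file itself; adjust the third name to match your setup.

```python
from pathlib import Path

# File names recommended in the installation notes. The third entry is the
# downloaded zip archive; the name of its extracted contents is not stated
# in the README, so change it here if you only keep the unzipped files.
RECOMMENDED_FILES = (
    "nasbench_only108.tfrecord",
    "NAS-Bench-201-v1_0-e61699.pth",
    "nasbench301_models_v0.9.zip",
)


def missing_benchmark_files(data_dir, expected=RECOMMENDED_FILES):
    """Return the expected names that do not exist inside data_dir."""
    root = Path(data_dir).expanduser()  # resolves a leading "~"
    return [name for name in expected if not (root / name).exists()]


if __name__ == "__main__":
    missing = missing_benchmark_files("~/nas_benchmark_datasets")
    if missing:
        print("Missing benchmark files:", ", ".join(missing))
    else:
        print("All benchmark files found.")
```

If you chose a different directory, pass that path instead of ~/nas_benchmark_datasets.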

Now you have successfully installed all of the requirements to run eleven NAS algorithms on three benchmark search spaces!

Test Installation

You can test the installation by running these commands:

cd naszilla

python naszilla/run_experiments.py --search_space nasbench_101 --algo_params all_algos --queries 30 --trials 1

python naszilla/run_experiments.py --search_space nasbench_201 --algo_params all_algos --queries 30 --trials 1

python naszilla/run_experiments.py --search_space nasbench_301 --algo_params all_algos --queries 30 --trials 1

These experiments should finish running within a few minutes.
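The three test commands differ only in the search space, so they can also be driven from a small script. This is a sketch under stated assumptions: `build_command` and `run_smoke_tests` are hypothetical helpers (not part of naszilla), the flags are copied from the commands above, and the script is assumed to run from the repository root so that naszilla/run_experiments.py resolves.

```python
import subprocess
import sys

# Search space names as used in the test commands.
SEARCH_SPACES = ("nasbench_101", "nasbench_201", "nasbench_301")


def build_command(search_space, queries=30, trials=1, algo_params="all_algos"):
    """Build the argument list for one run_experiments.py invocation."""
    return [
        sys.executable, "naszilla/run_experiments.py",
        "--search_space", search_space,
        "--algo_params", algo_params,
        "--queries", str(queries),
        "--trials", str(trials),
    ]


def run_smoke_tests(dry_run=True):
    """Run (or, with dry_run=True, only print) one command per search space.

    Returns a dict mapping search space to the process return code,
    or to None when dry_run is set.
    """
    results = {}
    for space in SEARCH_SPACES:
        cmd = build_command(space)
        if dry_run:
            print(" ".join(cmd))
            results[space] = None
        else:
            results[space] = subprocess.run(cmd).returncode
    return results


if __name__ == "__main__":
    run_smoke_tests(dry_run=True)
```

With dry_run=True the script only prints the commands; set it to False to execute them and collect the return codes.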

Run NAS experiments on NASBench-101/201/301 search spaces

cd naszilla

python naszilla/run_experiments.py --search_space nasbench_201 --dataset cifar100 --queries 100 --trials 100

This will test several NAS algorithms against each other on the NASBench-201 search space. Note that NASBench-201 allows you to specify one of three datasets: cifar10, cifar100, or imagenet.

To customize your experiment, open naszilla/params.py. Here, you can change the algorithms and their hyperparameters. For details on running specific methods, see these docs.

Contributions

Contributions are welcome!

Reproducibility

If you have any questions about reproducing an experiment, please open an issue or email the authors.

Citation

Please cite our papers if you use code from this repo:

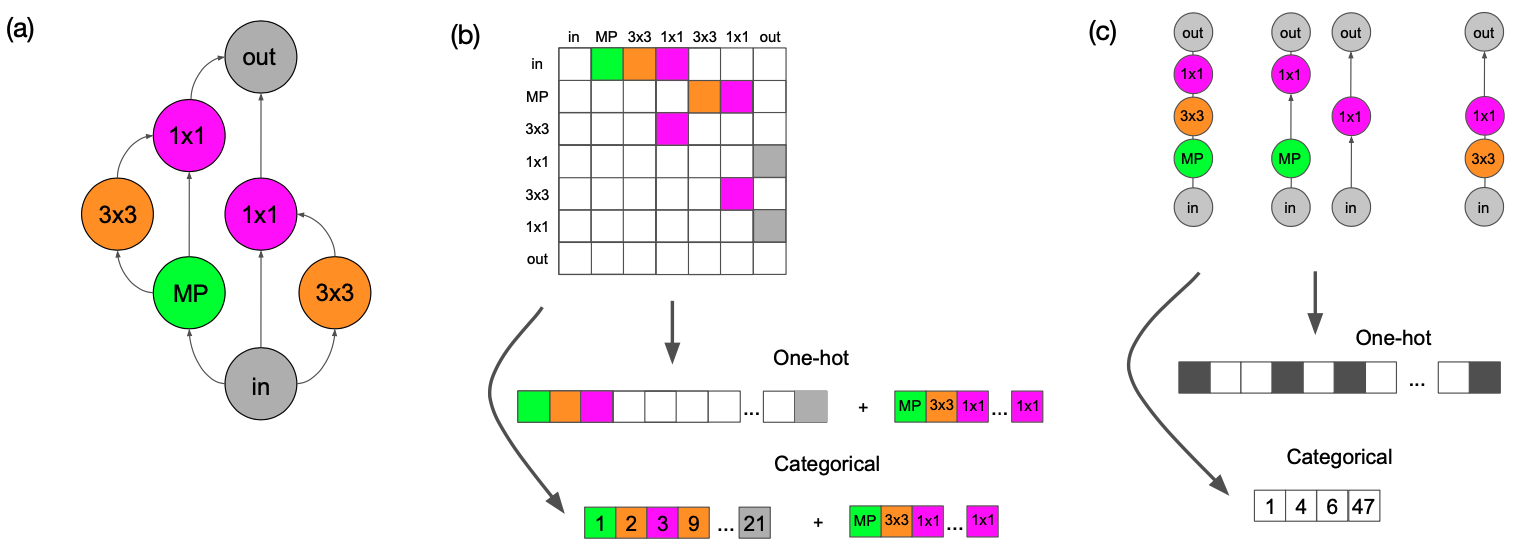

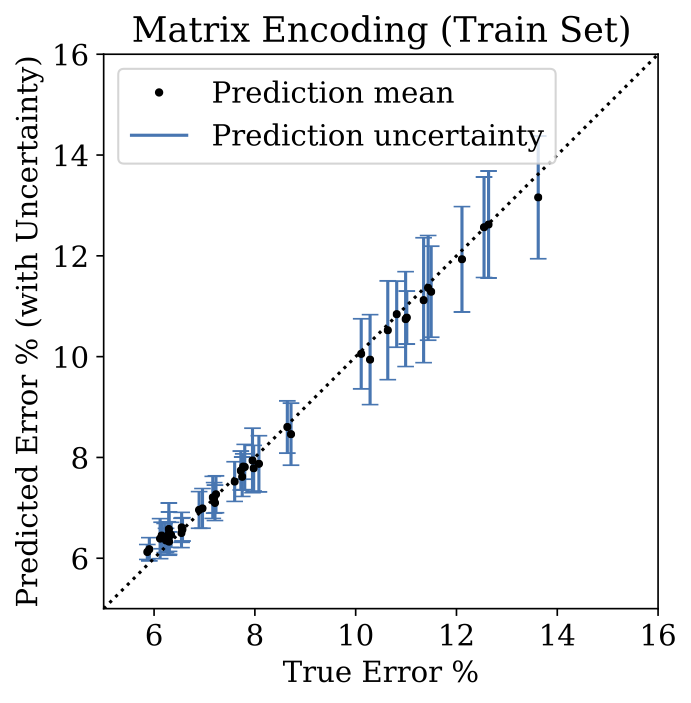

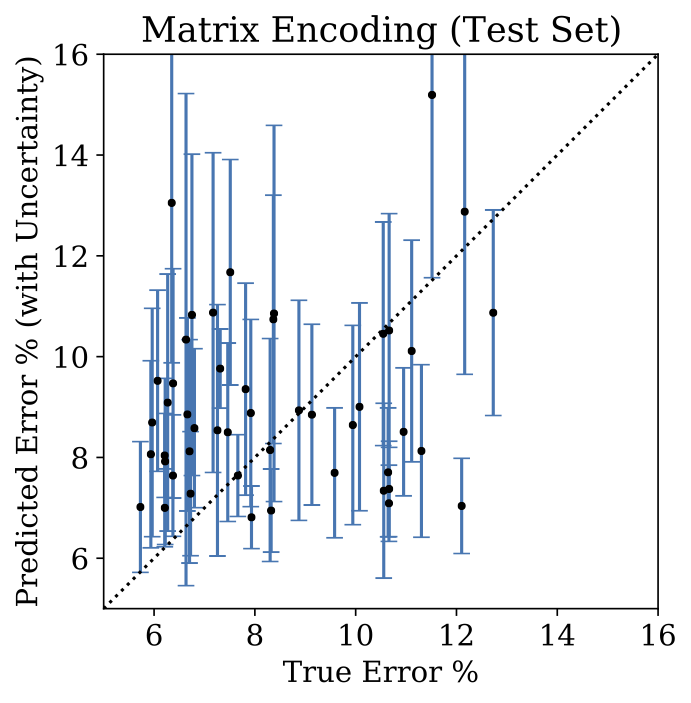

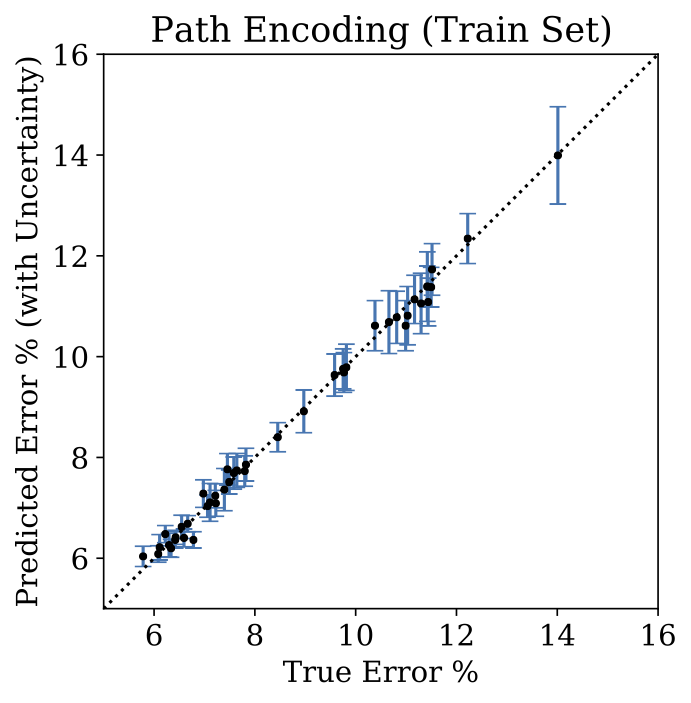

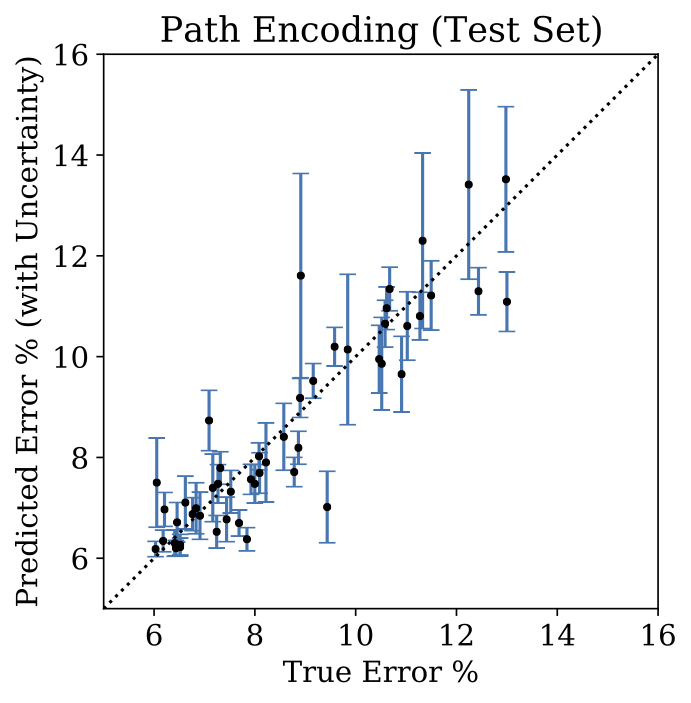

@inproceedings{white2020study,

title={A Study on Encodings for Neural Architecture Search},

author={White, Colin and Neiswanger, Willie and Nolen, Sam and Savani, Yash},

booktitle={Advances in Neural Information Processing Systems},

year={2020}

}

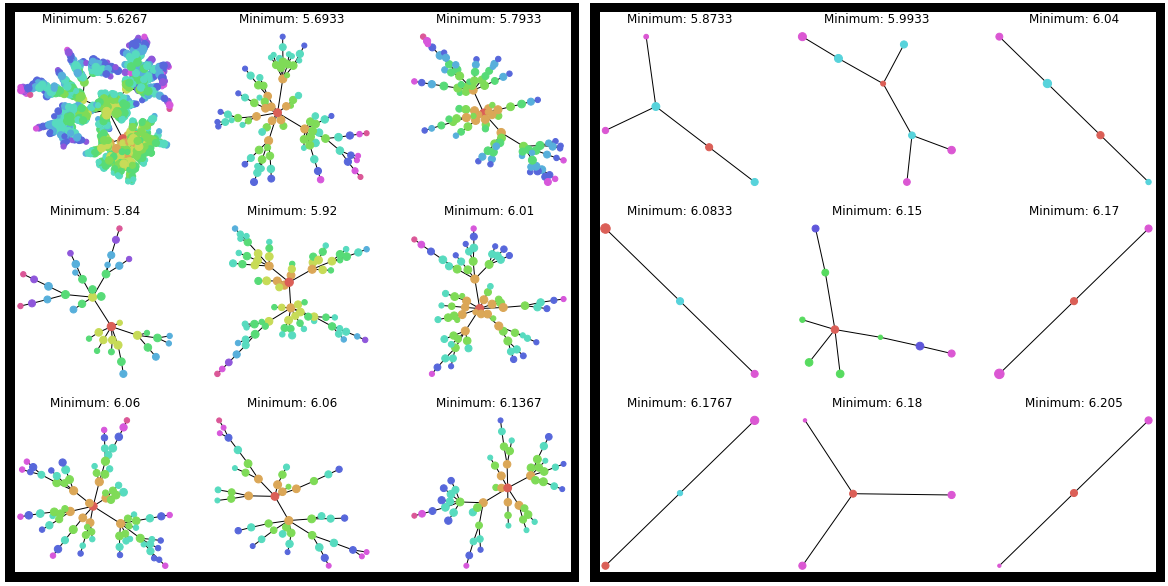

@inproceedings{white2019bananas,

title={BANANAS: Bayesian Optimization with Neural Architectures for Neural Architecture Search},

author={White, Colin and Neiswanger, Willie and Savani, Yash},

booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},

year={2021}

}

@article{white2020local,

title={Local Search is State of the Art for Neural Architecture Search Benchmarks},

author={White, Colin and Nolen, Sam and Savani, Yash},

journal={arXiv preprint arXiv:2005.02960},

year={2020}

}

Contents

This repo contains encodings for neural architecture search, a variety of NAS methods (including BANANAS, a neural predictor Bayesian optimization method, and local search for NAS), and an easy interface for using multiple NAS benchmarks.

Encodings:

BANANAS:

Local search: