kimhc6028 / Pathnet Pytorch

Licence: bsd-3-clause

PyTorch implementation of PathNet: Evolution Channels Gradient Descent in Super Neural Networks


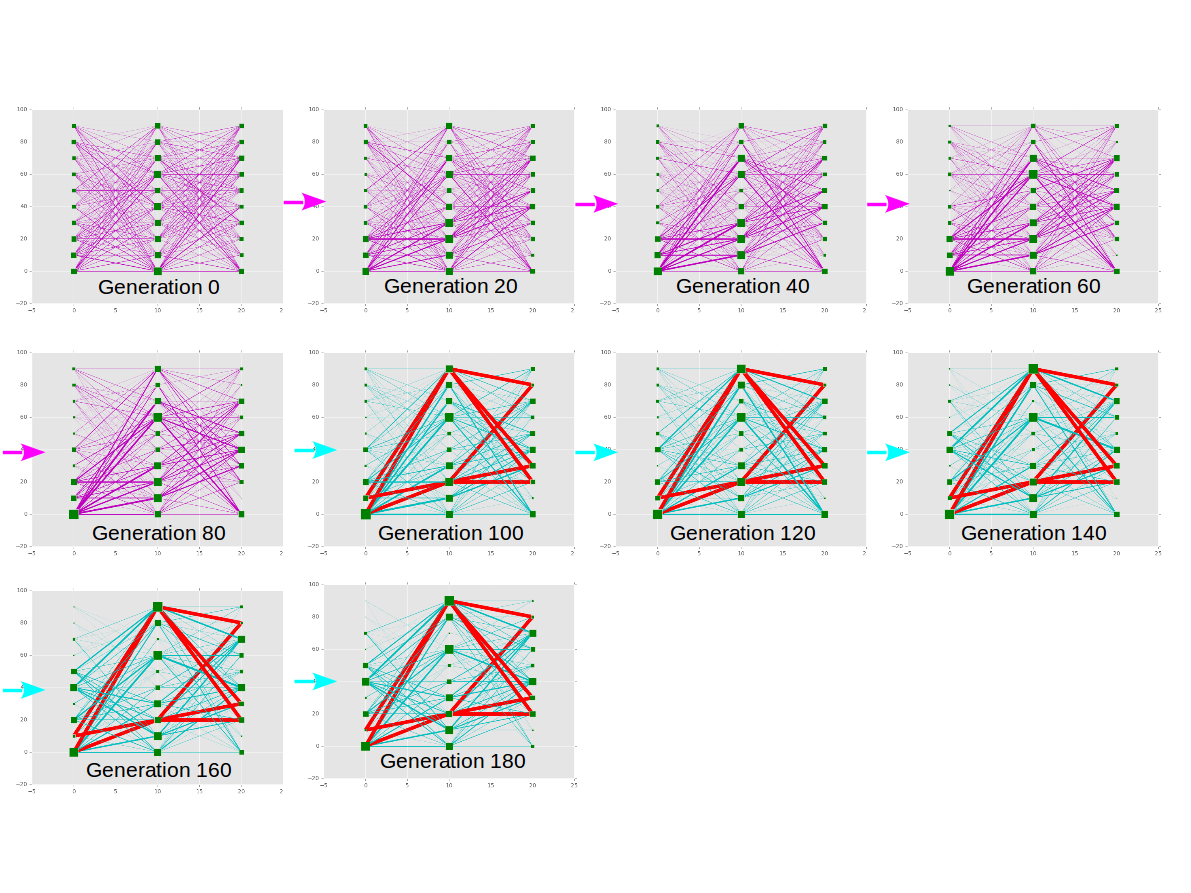

PyTorch implementation of PathNet: Evolution Channels Gradient Descent in Super Neural Networks. "It is a neural network algorithm that uses agents embedded in the neural network whose task is to discover which parts of the network to re-use for new tasks".
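The idea of agents discovering which parts of the network to re-use can be sketched as an evolutionary search over "paths", loosely following the binary tournament described in the PathNet paper. This is a minimal illustration, not the code in this repository: all names and sizes (`NUM_LAYERS`, `MODULES_PER_LAYER`, `ACTIVE_PER_LAYER`, `toy_fitness`) are hypothetical, the wrap-around of mutated indices is an assumption, and the fitness function is a stand-in for training the network along a path and measuring its accuracy.

```python
import random

# Hypothetical sizes, chosen for illustration only.
NUM_LAYERS = 3          # layers in the super network
MODULES_PER_LAYER = 10  # candidate modules in each layer
ACTIVE_PER_LAYER = 3    # modules a single path may use per layer


def random_path(rng):
    """A path (genotype) lists the active module indices for every layer."""
    return [[rng.randrange(MODULES_PER_LAYER) for _ in range(ACTIVE_PER_LAYER)]
            for _ in range(NUM_LAYERS)]


def mutate(path, rng):
    """Shift each index by an offset in [-2, 2] with probability 1/(layers*active).

    Wrapping with modulo keeps indices valid; that detail is an assumption.
    """
    prob = 1.0 / (NUM_LAYERS * ACTIVE_PER_LAYER)
    return [[(m + rng.randint(-2, 2)) % MODULES_PER_LAYER
             if rng.random() < prob else m
             for m in layer]
            for layer in path]


def tournament_step(population, fitness_fn, rng):
    """Binary tournament: the loser is overwritten by a mutated copy of the winner."""
    i, j = rng.sample(range(len(population)), 2)
    if fitness_fn(population[i]) < fitness_fn(population[j]):
        i, j = j, i  # make i the winner
    population[j] = mutate(population[i], rng)
    return i, j


def toy_fitness(path):
    """Stand-in for 'train along this path, then measure accuracy'."""
    return -sum(sum(layer) for layer in path)  # arbitrarily prefers low indices


rng = random.Random(0)
population = [random_path(rng) for _ in range(8)]
for _ in range(200):
    tournament_step(population, toy_fitness, rng)
best = max(population, key=toy_fitness)
```

In a real run, the fitness would come from training only the modules on the selected path. For transfer, the best path found on the first task is kept fixed while new paths are evolved for the second task.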

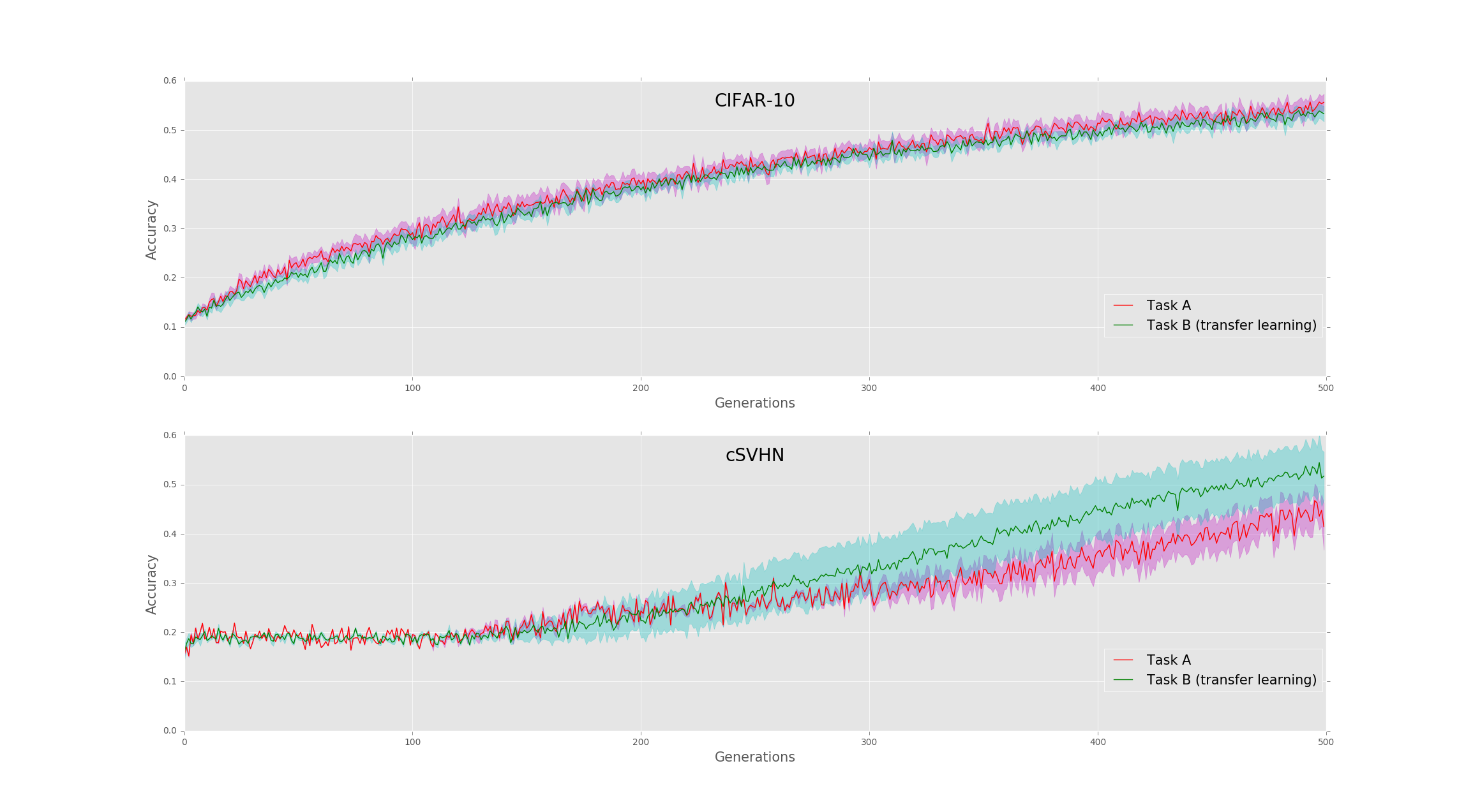

Currently implemented: the binary MNIST classification task, and the CIFAR and cropped SVHN classification tasks.

Requirements

- Python 2.7

- numpy

- matplotlib

- networkx

- python-mnist

- pytorch

Usage

Install prerequisites:

$ apt-get install python-numpy python-matplotlib

$ pip install python-mnist networkx

Then install PyTorch; see http://pytorch.org/.

Run with command:

$ python main.py

To repeat the experiment:

$ ./repeat_experiment.sh

To check the results:

$ python plotter.py

Modifications

- The learning rate is changed from 0.0001 (the value used in the paper) to 0.01.

Result

Transfer learning from CIFAR10 to cropped SVHN achieved higher accuracy than training on cropped SVHN alone (41.5% -> 51.8%; see the second figure).
