sthalles / Pytorch Byol

PyTorch implementation of Bootstrap Your Own Latent: A New Approach to Self-Supervised Learning

Stars: ✭ 213

Projects that are alternatives to or similar to Pytorch Byol

Simclr

SimCLRv2 - Big Self-Supervised Models are Strong Semi-Supervised Learners

Stars: ✭ 2,720 (+1177%)

Mutual labels: jupyter-notebook, unsupervised-learning, representation-learning

Simclr

PyTorch implementation of SimCLR: A Simple Framework for Contrastive Learning of Visual Representations

Stars: ✭ 750 (+252.11%)

Mutual labels: jupyter-notebook, unsupervised-learning, representation-learning

Weakly Supervised 3d Object Detection

Weakly Supervised 3D Object Detection from Point Clouds (VS3D), ACM MM 2020

Stars: ✭ 61 (-71.36%)

Mutual labels: jupyter-notebook, unsupervised-learning

Codesearchnet

Datasets, tools, and benchmarks for representation learning of code.

Stars: ✭ 1,378 (+546.95%)

Mutual labels: jupyter-notebook, representation-learning

Transferlearning

Transfer learning / domain adaptation / domain generalization / multi-task learning etc. Papers, code, datasets, applications, tutorials. (Transfer learning)

Stars: ✭ 8,481 (+3881.69%)

Mutual labels: representation-learning, unsupervised-learning

Discogan Pytorch

PyTorch implementation of "Learning to Discover Cross-Domain Relations with Generative Adversarial Networks"

Stars: ✭ 961 (+351.17%)

Mutual labels: jupyter-notebook, unsupervised-learning

Pointglr

Global-Local Bidirectional Reasoning for Unsupervised Representation Learning of 3D Point Clouds (CVPR 2020)

Stars: ✭ 86 (-59.62%)

Mutual labels: unsupervised-learning, representation-learning

Lemniscate.pytorch

Unsupervised Feature Learning via Non-parametric Instance Discrimination

Stars: ✭ 532 (+149.77%)

Mutual labels: unsupervised-learning, representation-learning

Sfmlearner

An unsupervised learning framework for depth and ego-motion estimation from monocular videos

Stars: ✭ 1,661 (+679.81%)

Mutual labels: jupyter-notebook, unsupervised-learning

Autoregressive Predictive Coding

Autoregressive Predictive Coding: An unsupervised autoregressive model for speech representation learning

Stars: ✭ 138 (-35.21%)

Mutual labels: unsupervised-learning, representation-learning

Bagofconcepts

Python implementation of bag-of-concepts

Stars: ✭ 18 (-91.55%)

Mutual labels: unsupervised-learning, representation-learning

Variational Ladder Autoencoder

Implementation of VLAE

Stars: ✭ 196 (-7.98%)

Mutual labels: unsupervised-learning, representation-learning

Gdynet

Unsupervised learning of atomic scale dynamics from molecular dynamics.

Stars: ✭ 37 (-82.63%)

Mutual labels: jupyter-notebook, unsupervised-learning

Unsupervised Classification

SCAN: Learning to Classify Images without Labels (ECCV 2020), incl. SimCLR.

Stars: ✭ 605 (+184.04%)

Mutual labels: unsupervised-learning, representation-learning

Self Supervised Learning Overview

📜 Self-Supervised Learning from Images: Up-to-date reading list.

Stars: ✭ 73 (-65.73%)

Mutual labels: unsupervised-learning, representation-learning

Vl Bert

Code for ICLR 2020 paper "VL-BERT: Pre-training of Generic Visual-Linguistic Representations".

Stars: ✭ 493 (+131.46%)

Mutual labels: jupyter-notebook, representation-learning

Hidt

Official repository for the paper "High-Resolution Daytime Translation Without Domain Labels" (CVPR2020, Oral)

Stars: ✭ 513 (+140.85%)

Mutual labels: jupyter-notebook, unsupervised-learning

Sigver wiwd

Learned representation for Offline Handwritten Signature Verification. Models and code to extract features from signature images.

Stars: ✭ 112 (-47.42%)

Mutual labels: jupyter-notebook, representation-learning

Dragan

A stable algorithm for GAN training

Stars: ✭ 189 (-11.27%)

Mutual labels: jupyter-notebook, unsupervised-learning

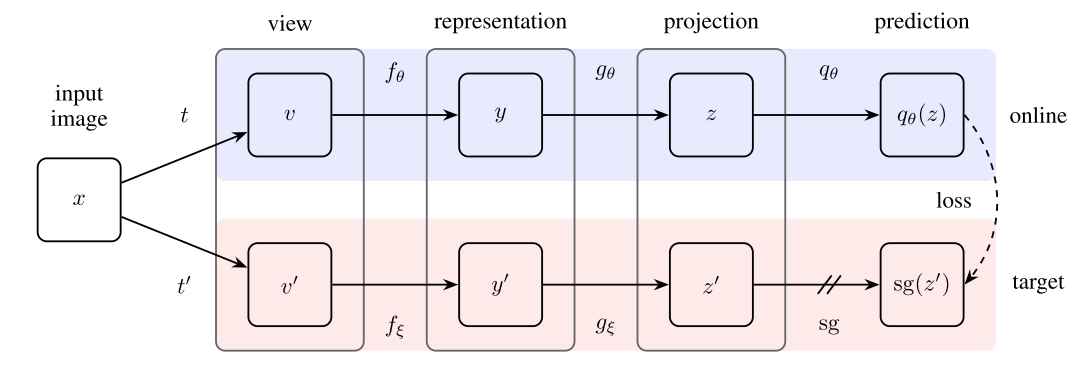

PyTorch-BYOL

PyTorch implementation of Bootstrap Your Own Latent: A New Approach to Self-Supervised Learning.

Installation

Clone the repository and run:

```
$ conda env create --name byol --file env.yml
$ conda activate byol
$ python main.py
```

Config

Before running PyTorch BYOL, make sure you set the correct run configuration in the config.yaml file.

```yaml
network:
  name: resnet18 # base encoder: choose resnet18 or resnet50

  # Specify a folder containing a pre-trained model to fine-tune. If training from scratch, pass None.
  fine_tune_from: 'resnet-18_40-epochs'

  # configurations for the projection and prediction heads
  projection_head:
    mlp_hidden_size: 512 # Original implementation uses 4096
    projection_size: 128 # Original implementation uses 256

data_transforms:
  s: 1
  input_shape: (96,96,3)

trainer:
  batch_size: 64 # Original implementation uses 4096
  m: 0.996 # momentum update
  checkpoint_interval: 5000
  max_epochs: 40 # Original implementation uses 1000
  num_workers: 4 # number of workers for the data loader

optimizer:
  params:
    lr: 0.03
    momentum: 0.9
    weight_decay: 0.0004
```
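The `projection_head`, `trainer.m` and `optimizer.params` settings map onto BYOL's online/target design: the online network (encoder plus projection MLP) feeds a predictor MLP, the target network is a copy updated as an exponential moving average with momentum `m`, and the loss is the normalized MSE (2 - 2 × cosine similarity) between the online prediction for one view and the target projection of the other view. The following is a minimal PyTorch sketch of one training step, not the repository's code. It assumes the Linear → BatchNorm → ReLU → Linear head described in the BYOL paper, a predictor that reuses the same `projection_head` sizes, and a hypothetical `nn.Linear(32, 512)` standing in for ResNet-18's 512-d output; `MLPHead`, `regression_loss` and `momentum_update` are illustrative names.

```python
import copy

import torch
import torch.nn as nn
import torch.nn.functional as F


class MLPHead(nn.Module):
    """Linear -> BatchNorm -> ReLU -> Linear, used here for both projector and predictor."""

    def __init__(self, in_dim: int, mlp_hidden_size: int, projection_size: int):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(in_dim, mlp_hidden_size),
            nn.BatchNorm1d(mlp_hidden_size),
            nn.ReLU(inplace=True),
            nn.Linear(mlp_hidden_size, projection_size),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x)


def regression_loss(p: torch.Tensor, z: torch.Tensor) -> torch.Tensor:
    """Per-sample normalized MSE, equal to 2 - 2 * cos(p, z)."""
    p = F.normalize(p, dim=1)
    z = F.normalize(z, dim=1)
    return 2 - 2 * (p * z).sum(dim=1)


@torch.no_grad()
def momentum_update(online: nn.Module, target: nn.Module, m: float = 0.996) -> None:
    """EMA of parameters: target <- m * target + (1 - m) * online (buffers are not touched)."""
    for p_online, p_target in zip(online.parameters(), target.parameters()):
        p_target.mul_(m).add_(p_online, alpha=1 - m)


torch.manual_seed(0)

# Hypothetical stand-in for ResNet-18's 512-d pooled features (real inputs would be images).
encoder = nn.Linear(32, 512)
online = nn.Sequential(encoder, MLPHead(512, mlp_hidden_size=512, projection_size=128))
predictor = MLPHead(128, mlp_hidden_size=512, projection_size=128)

# The target network starts as a copy of the online network and receives no gradients.
target = copy.deepcopy(online)
for param in target.parameters():
    param.requires_grad = False

# SGD with lr, momentum and weight_decay matching the config values.
optimizer = torch.optim.SGD(
    list(online.parameters()) + list(predictor.parameters()),
    lr=0.03, momentum=0.9, weight_decay=0.0004,
)

# Two "augmented views" of a batch of 64 (random tensors here instead of augmented images).
view1, view2 = torch.randn(64, 32), torch.randn(64, 32)

p1, p2 = predictor(online(view1)), predictor(online(view2))
with torch.no_grad():
    z1, z2 = target(view1), target(view2)

# Symmetric loss: each view's prediction regresses the target projection of the other view.
loss = (regression_loss(p1, z2) + regression_loss(p2, z1)).mean()

optimizer.zero_grad()
loss.backward()
optimizer.step()
momentum_update(online, target, m=0.996)
```

Only the online network and predictor are updated by the optimizer; the target follows slowly through `momentum_update`, which is why a value of `m` close to 1 keeps the regression targets stable between steps.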

Feature Evaluation

We measure the quality of the learned representations by linear separability.

During training, BYOL learns features from the STL10 train+unsupervised set and is evaluated on the held-out test set.

| Linear Classifier | Feature Extractor | Architecture | Feature dim | Projection Head dim | Epochs | Batch Size | STL10 Top-1 |
|---|---|---|---|---|---|---|---|
| Logistic Regression | PCA Features | - | 256 | - | - | - | 36.0% |
| KNN | PCA Features | - | 256 | - | - | - | 31.8% |
| Logistic Regression (Adam) | BYOL (SGD) | ResNet-18 | 512 | 128 | 40 | 64 | 70.1% |
| Logistic Regression (Adam) | BYOL (SGD) | ResNet-18 | 512 | 128 | 80 | 64 | 75.2% |
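The linear-separability protocol behind the "Logistic Regression (Adam)" rows can be sketched as: freeze the encoder, extract features, fit a single linear layer with softmax cross-entropy (multinomial logistic regression) using Adam, and report top-1 accuracy on the test split. This is a minimal sketch, not the repository's evaluation script: `extract_features` and `linear_eval` are hypothetical names, the learning rate and epoch count are assumed, and the synthetic clusters and random `nn.Linear(32, 512)` encoder merely stand in for STL10 images and the trained ResNet-18.

```python
import torch
import torch.nn as nn


@torch.no_grad()
def extract_features(encoder: nn.Module, x: torch.Tensor) -> torch.Tensor:
    """Run the frozen encoder in eval mode; no gradients flow back into it."""
    encoder.eval()
    return encoder(x)


def linear_eval(train_feats, train_labels, test_feats, test_labels,
                num_classes: int = 10, epochs: int = 200, lr: float = 1e-3) -> float:
    """Fit one linear layer with cross-entropy and Adam on frozen features,
    then return top-1 accuracy on the held-out features."""
    clf = nn.Linear(train_feats.shape[1], num_classes)
    opt = torch.optim.Adam(clf.parameters(), lr=lr)
    loss_fn = nn.CrossEntropyLoss()
    for _ in range(epochs):  # full-batch training for brevity
        opt.zero_grad()
        loss = loss_fn(clf(train_feats), train_labels)
        loss.backward()
        opt.step()
    with torch.no_grad():
        preds = clf(test_feats).argmax(dim=1)
    return (preds == test_labels).float().mean().item()


# Toy usage: 10 synthetic clusters (STL10 has 10 classes) and a random linear
# "encoder" standing in for the trained ResNet-18 (512-d output).
torch.manual_seed(0)
centers = 3 * torch.randn(10, 32)
encoder = nn.Linear(32, 512)


def make_split(n: int):
    labels = torch.randint(0, 10, (n,))
    x = centers[labels] + 0.1 * torch.randn(n, 32)
    return extract_features(encoder, x), labels


train_x, train_y = make_split(500)
test_x, test_y = make_split(200)
acc = linear_eval(train_x, train_y, test_x, test_y)
print(f"top-1 accuracy: {acc:.3f}")
```

On real data, the features would come from the trained encoder applied to the STL10 train and test images, usually in mini-batches rather than the single full-batch loop used here.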
