nianticlabs / Stereo From Mono

Learning Stereo from Single Images

Jamie Watson, Oisin Mac Aodha, Daniyar Turmukhambetov, Gabriel J. Brostow and Michael Firman – ECCV 2020 (Oral presentation)

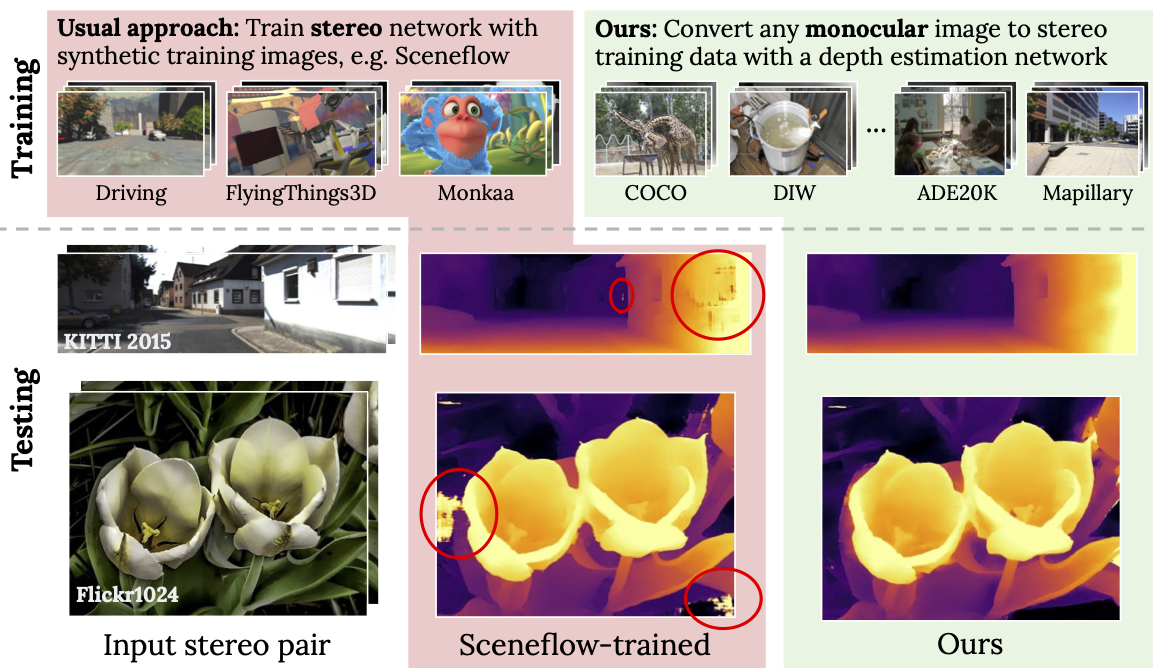

Supervised deep networks are among the best methods for finding correspondences in stereo image pairs. Like all supervised approaches, these networks require ground truth data during training. However, collecting large quantities of accurate dense correspondence data is very challenging. We propose that it is unnecessary to have such a high reliance on ground truth depths or even corresponding stereo pairs.

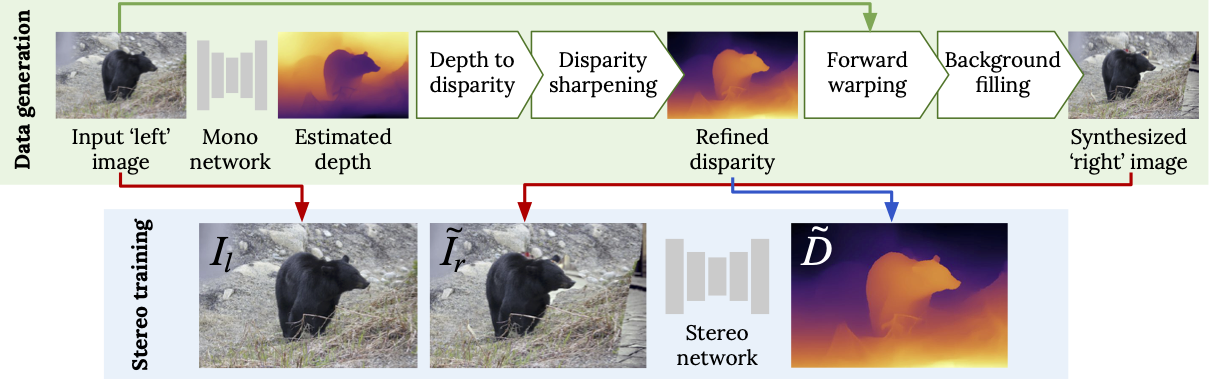

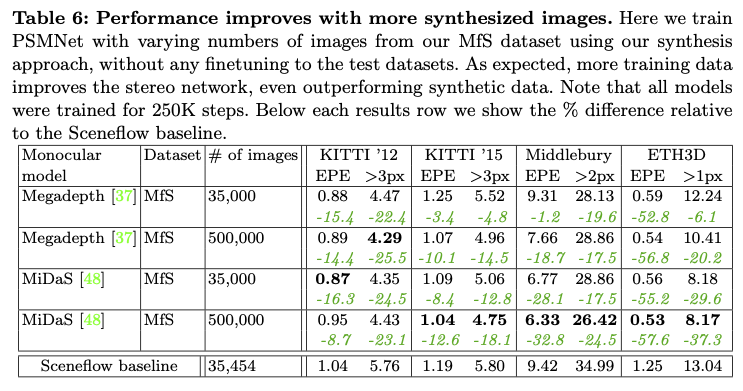

Inspired by recent progress in monocular depth estimation, we generate plausible disparity maps from single images. In turn, we use those flawed disparity maps in a carefully designed pipeline to generate stereo training pairs. Training in this manner makes it possible to convert any collection of single RGB images into stereo training data. This results in a significant reduction in human effort, with no need to collect real depths or to hand-design synthetic data. We can consequently train a stereo matching network from scratch on datasets like COCO, which were previously hard to exploit for stereo.

Through extensive experiments we show that our approach outperforms stereo networks trained with standard synthetic datasets, when evaluated on KITTI, ETH3D, and Middlebury.
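To make the idea of turning a single image plus a disparity map into a stereo pair more concrete, here is a minimal NumPy sketch of forward warping. It is an illustration under stated assumptions, not the authors' implementation: the function name `forward_warp` is hypothetical, disparities are rounded to whole pixels, and pixels that receive no source value are only flagged as holes rather than filled.

```python
import numpy as np


def forward_warp(left, disparity):
    """Synthesize a right view by moving each left pixel `disparity` px to the left.

    Returns the warped image and a boolean mask marking target pixels that
    received no source pixel (holes caused by disocclusion).
    """
    h, w = disparity.shape
    right = np.zeros_like(left)
    # Largest disparity written so far at each target pixel. Nearer surfaces
    # have larger disparity, so they win when several pixels collide.
    best = np.full((h, w), -np.inf)

    for y in range(h):
        for x in range(w):
            d = disparity[y, x]
            xt = int(round(x - d))  # target column in the right view
            if 0 <= xt < w and d > best[y, xt]:
                right[y, xt] = left[y, x]
                best[y, xt] = d

    holes = np.isinf(best)
    return right, holes


# Tiny one-row example: the third pixel is "near" (disparity 2) and lands on
# top of the first pixel, leaving a hole where it used to be.
left = np.array([[10, 20, 30, 40]], dtype=np.uint8)
disparity = np.array([[0.0, 0.0, 2.0, 0.0]])
right, holes = forward_warp(left, disparity)
# right -> [[30 20  0 40]], holes -> [[False False  True False]]
```

The explicit loops keep the collision handling easy to follow; a practical version would be vectorized and would need a strategy for filling the holes.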

✏️ 📄 Citation

If you find our work useful or interesting, please consider citing our paper:

    @inproceedings{watson-2020-stereo-from-mono,
      title     = {Learning Stereo from Single Images},
      author    = {Jamie Watson and
                   Oisin Mac Aodha and
                   Daniyar Turmukhambetov and
                   Gabriel J. Brostow and
                   Michael Firman
                  },
      booktitle = {European Conference on Computer Vision ({ECCV})},
      year      = {2020}
    }

📊 Evaluation

We evaluate our performance on several datasets: KITTI (2015 and 2012), Middlebury (full resolution) and ETH3D (low-res two-view).

To run inference on these datasets, first download them and update paths_config.yaml to point to their locations.

Note that we report scores on the training sets of each dataset since we never see these images during training.

Run evaluation using:

    CUDA_VISIBLE_DEVICES=X python main.py \
        --mode inference \
        --load_path <downloaded_model_path>

optionally setting --test_data_types and --save_disparities.

Trained models can be found HERE.
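This README does not say which scores are reported. As an illustration only, the sketch below computes two metrics that are commonly used for stereo: the end-point error (mean absolute disparity error) and the fraction of pixels whose error exceeds a threshold. The function name `disparity_errors`, the default threshold, and the convention that invalid ground truth is stored as 0, inf or NaN are assumptions; official benchmark protocols differ in their details.

```python
import numpy as np


def disparity_errors(pred, gt, threshold=3.0):
    """Return (end-point error, bad-pixel fraction) over valid ground-truth pixels."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)

    # Assumption: pixels without ground truth are stored as 0, inf or NaN.
    valid = np.isfinite(gt) & (gt > 0)
    if not valid.any():
        raise ValueError("ground truth contains no valid pixels")

    err = np.abs(pred[valid] - gt[valid])
    epe = float(err.mean())                # mean absolute error in pixels
    bad = float((err > threshold).mean())  # share of pixels off by more than `threshold`
    return epe, bad


pred = [[10.0, 20.0], [30.0, 5.0]]
gt = [[11.0, 20.0], [0.0, 10.0]]  # the 0 marks a pixel without ground truth
epe, bad = disparity_errors(pred, gt)
# errors over the three valid pixels are 1, 0 and 5, so epe == 2.0 and bad == 1/3
```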

🎯 Training

To train a new model, you will need to download several datasets: ADE20K, DIODE, Depth in the Wild, Mapillary and COCO. After doing so, update paths_config.yaml to point to these directories.

Additionally, you will need precomputed monocular depth estimates for these images.

We provide estimates computed with MiDaS HERE.

Download these and place them in the corresponding data paths (i.e. the paths you specified in paths_config.yaml).
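MiDaS predicts relative inverse depth, which is known only up to an unknown scale and shift, so a prediction has to be mapped to a pixel disparity range before it can drive stereo synthesis. The following is a minimal sketch of one simple mapping (min-max normalization followed by scaling). The function name `to_disparity`, its parameters, and the choice of mapping are assumptions for illustration; the repository's own conversion and the on-disk format of the provided estimates may differ.

```python
import numpy as np


def to_disparity(inverse_depth, max_disparity, min_disparity=0.0):
    """Map a relative inverse-depth map to disparities in [min_disparity, max_disparity]."""
    inv = np.asarray(inverse_depth, dtype=np.float64)
    lo, hi = inv.min(), inv.max()
    if hi == lo:
        # A constant prediction carries no depth ordering; return a flat map.
        return np.full_like(inv, min_disparity)
    normalized = (inv - lo) / (hi - lo)  # 0 = farthest pixel, 1 = nearest pixel
    return min_disparity + normalized * (max_disparity - min_disparity)


prediction = np.array([[0.0, 5.0], [10.0, 2.5]])
disparity = to_disparity(prediction, max_disparity=64.0)
# disparity -> [[0, 32], [64, 16]]
```

Sampling `max_disparity` at random for each training image is one way to expose a network to a range of apparent baselines, though that is a design choice rather than something this README specifies.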

Now you can train a new model using:

    CUDA_VISIBLE_DEVICES=X python main.py --mode train \
        --log_path <where_to_save_your_model> \
        --model_name <name_of_your_model>

Please see options.py for the full list of training options.

👩‍⚖️ License

Copyright © Niantic, Inc. 2020. Patent Pending. All rights reserved. Please see the license file for terms.