LiyuanLucasLiu / Transformer Clinic

Programming Languages

Labels

Projects that are alternatives of or similar to Transformer Clinic

Admin

Understanding the Difficulty of Training Transformers

Guided by our analyses, we propose Adaptive Model Initialization (Admin), which successfully stabilizes previously-diverged Transformer training and achieves better performance, without introducing additional hyper-parameters. Admin is adapted for better half-precision stability and can be reparameterized into the original Transformer.

We are in an early-release beta. Expect some adventures and rough edges.

Table of Contents

Introduction

What complicates Transformer training?

In our study, we go beyond gradient vanishing and identify an amplification effect that substantially influences Transformer training. Specifically, for each layer in a multi-layer Transformer, heavy dependency on its residual branch makes training unstable, yet light dependency leads to sub-optimal performance.

Dependency and Amplification Effect

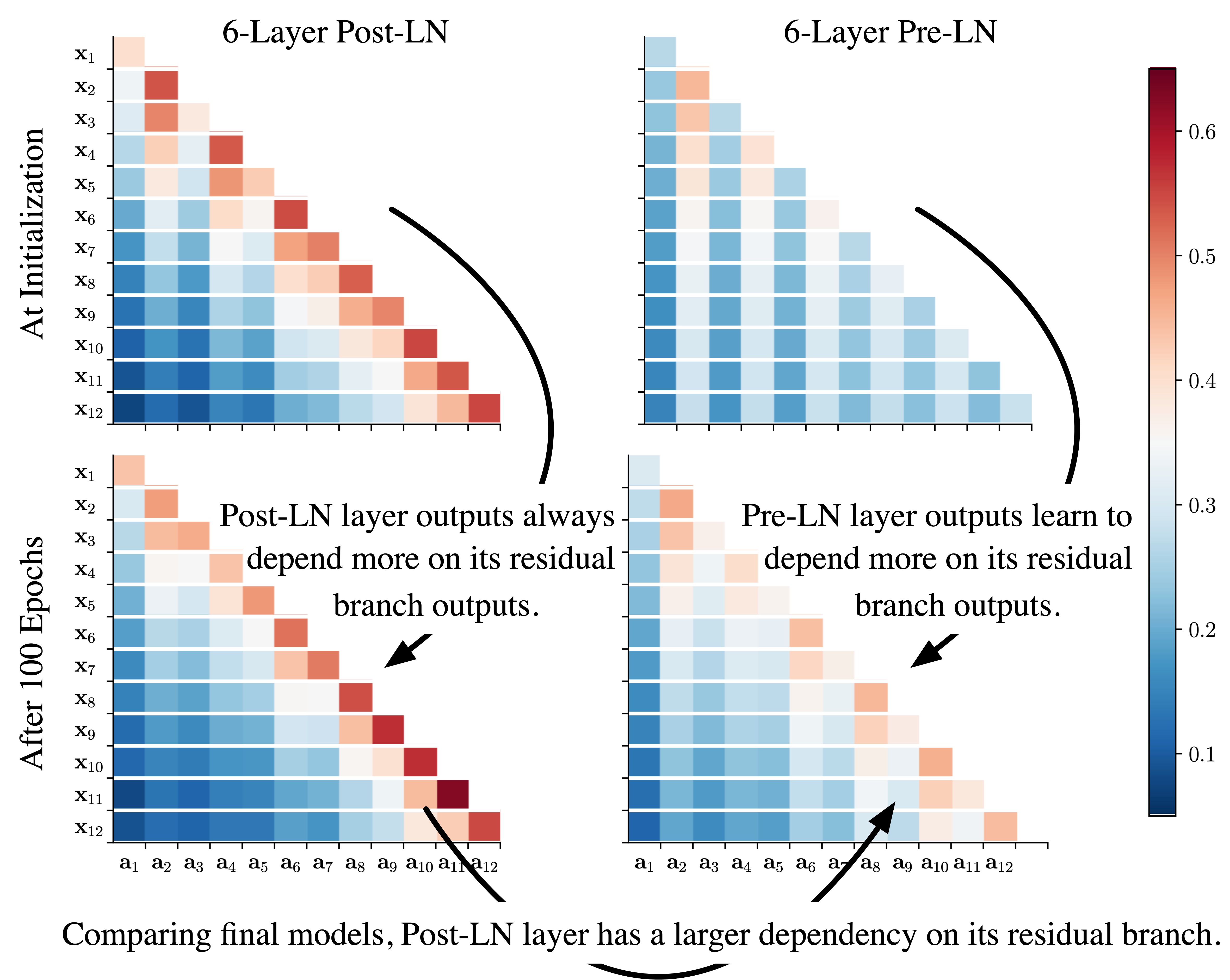

Our analysis starts from the observation that Pre-LN is more robust than Post-LN, whereas Post-LN typically leads to a better performance. As shown in Figure 1, we find these two variants have different layer dependency patterns.

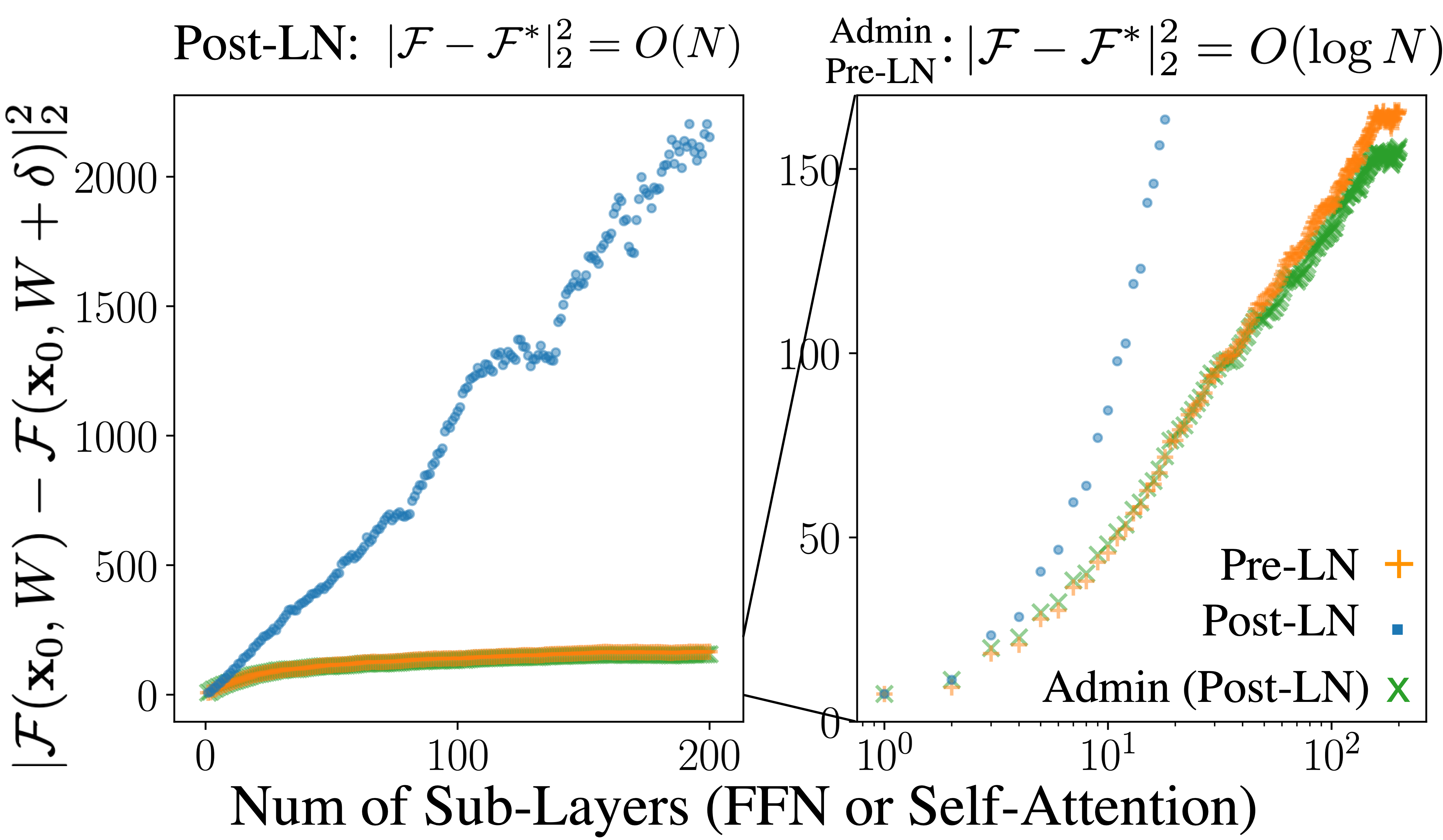

With further exploration, we find that for a N-layer residual network, after updating its parameters W to W*, its outputs change is proportion to the dependency on residual branches.

Intuitively, since a larger output change indicates a more unsmooth loss surface, the large dependency complicates training. Moreover, we propose Admin (adaptive model initialization), which starts the training from the area with a smoother surface. More details can be found in our paper.

Quick Start Guide

Our implementation is based on the fairseq package (python 3.6, torch 1.5/1.6 are recommended). It can be installed by:

git clone https://github.com/LiyuanLucasLiu/Transforemr-Clinic.git

cd fairseq

pip install --editable .

The guidance for reproducing our results is available at:

Specifically, our implementation requires to first set --init-type adaptive-profiling and use one GPU for this profiling stage, then set --init-type adaptive and start training.

Citation

Please cite the following papers if you found our model useful. Thanks!

Liyuan Liu, Xiaodong Liu, Jianfeng Gao, Weizhu Chen, and Jiawei Han (2020). Understanding the Difficulty of Training Transformers. Proc. 2020 Conf. on Empirical Methods in Natural Language Processing (EMNLP'20).

@inproceedings{liu2020admin,

title={Understanding the Difficulty of Training Transformers},

author = {Liu, Liyuan and Liu, Xiaodong and Gao, Jianfeng and Chen, Weizhu and Han, Jiawei},

booktitle = {Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP 2020)},

year={2020}

}

Xiaodong Liu, Kevin Duh, Liyuan Liu, and Jianfeng Gao (2020). Very Deep Transformers for Neural Machine Translation. arXiv preprint arXiv:2008.07772 (2020).

@inproceedings{liu_deep_2020,

author = {Liu, Xiaodong and Duh, Kevin and Liu, Liyuan and Gao, Jianfeng},

booktitle = {arXiv:2008.07772 [cs]},

title = {Very Deep Transformers for Neural Machine Translation},

year = {2020}

}