pbiecek / Xai_resources

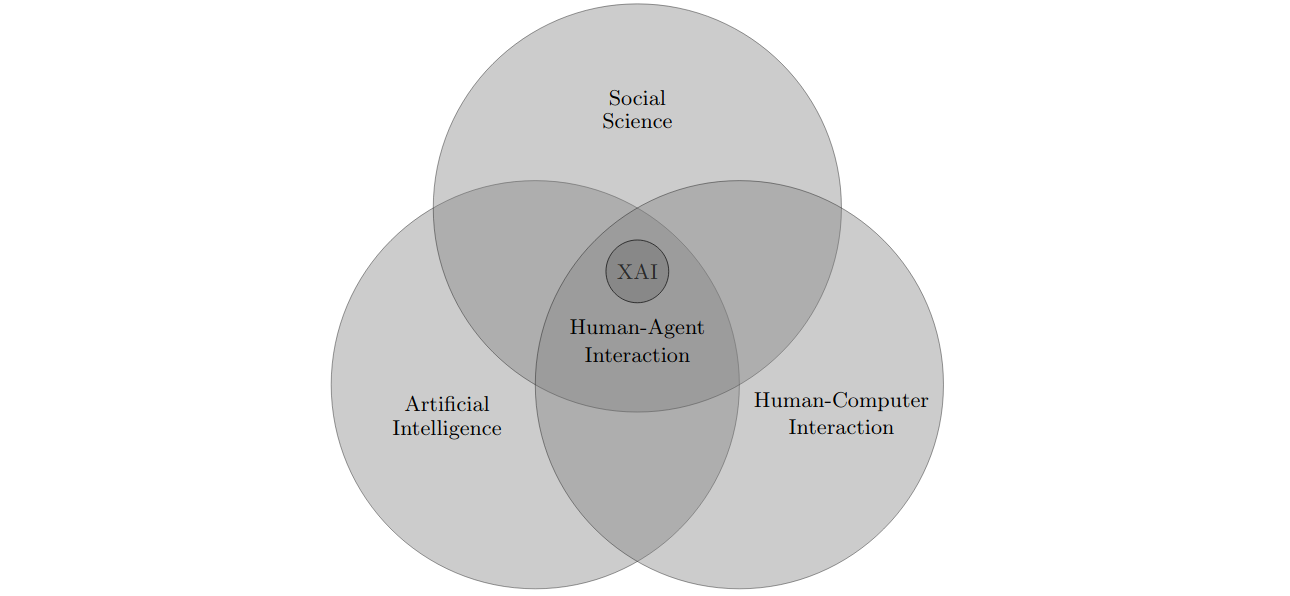

Interesting resources related to XAI (Explainable Artificial Intelligence)

- Papers and preprints in scientific journals

- Books and longer materials

- Software tools

- Short articles in newspapers

- Misc

Papers

2020

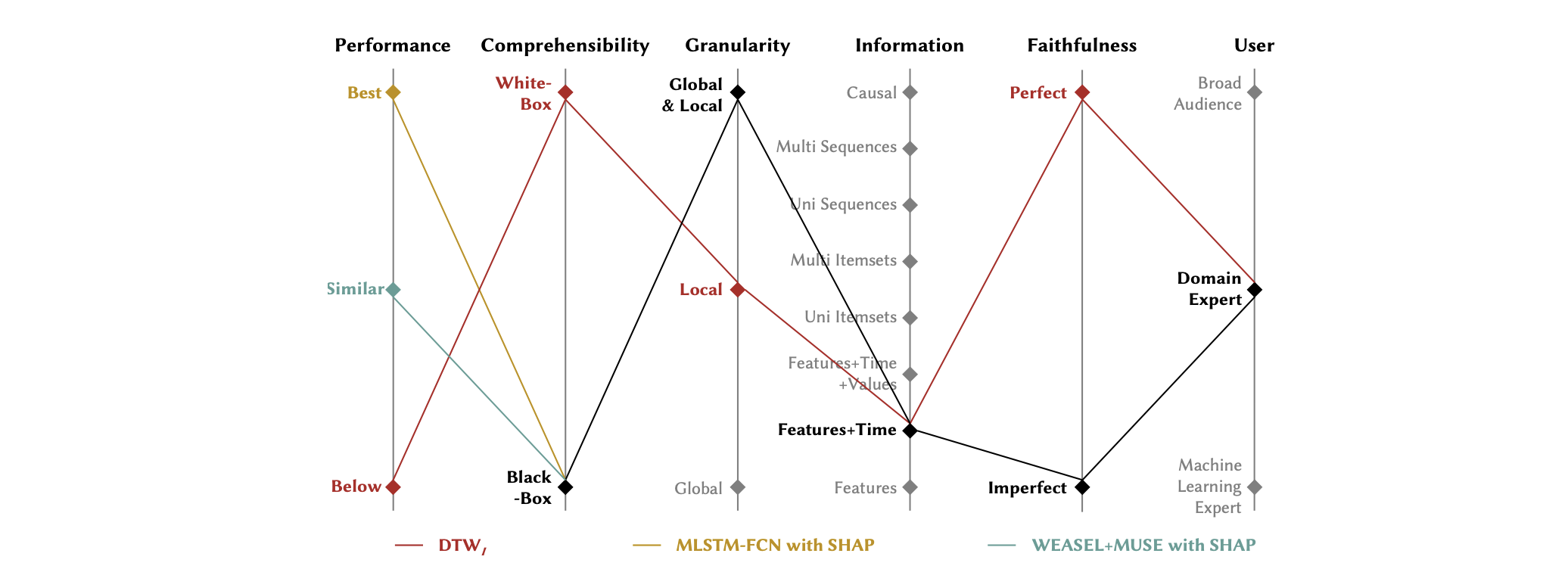

- A Performance-Explainability Framework to Benchmark Machine Learning Methods: Application to Multivariate Time Series Classifiers; Kevin Fauvel, Véronique Masson, Élisa Fromont; Our research aims to propose a new performance-explainability analytical framework to assess and benchmark machine learning methods. The framework details a set of characteristics that operationalize the performance-explainability assessment of existing machine learning methods. In order to illustrate the use of the framework, we apply it to benchmark the current state-of-the-art multivariate time series classifiers.

-

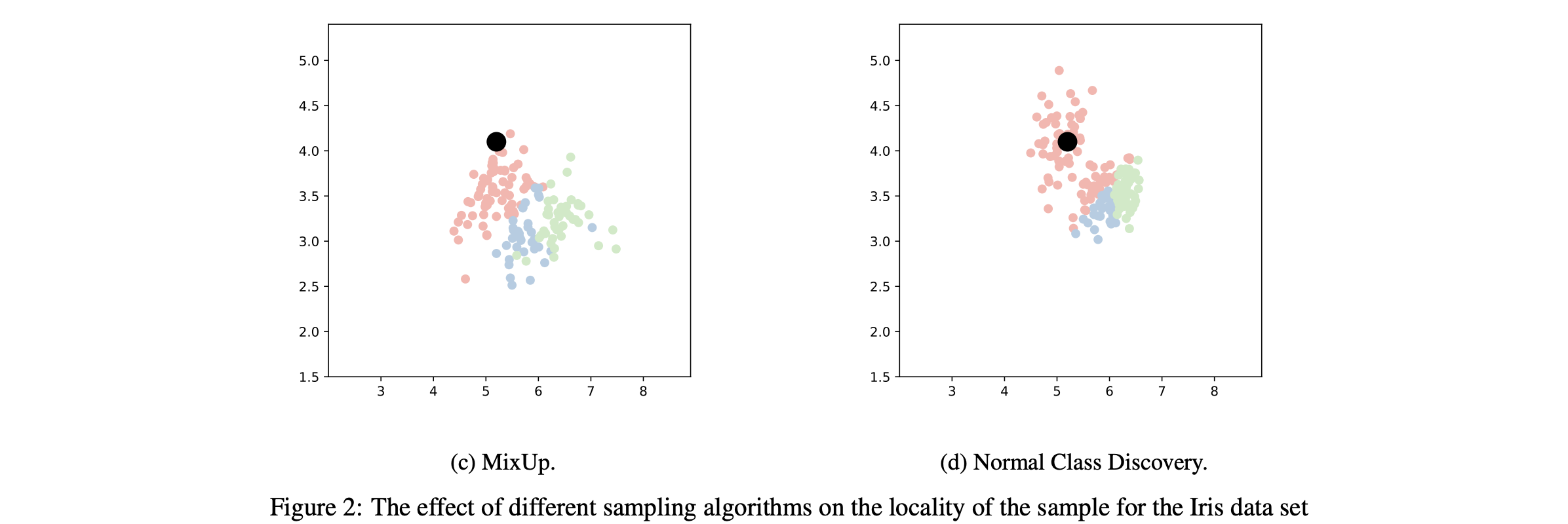

EXPLAN: Explaining Black-box Classifiers using Adaptive Neighborhood Generation; Peyman Rasouli and Ingrid Chieh Yu; Defining a representative locality is an urgent challenge in perturbation-based explanation methods, which influences the fidelity and soundness of explanations. We address this issue by proposing a robust and intuitive approach for EXPLaining black-box classifiers using Adaptive Neighborhood generation (EXPLAN). EXPLAN is a module-based algorithm consisted of dense data generation, representative data selection, data balancing, and rule-based interpretable model. It takes into account the adjacency information derived from the black-box decision function and the structure of the data for creating a representative neighborhood for the instance being explained. As a local model-agnostic explanation method, EXPLAN generates explanations in the form of logical rules that are highly interpretable and well-suited for qualitative analysis of the model's behavior. We discuss fidelity-interpretability trade-offs and demonstrate the performance of the proposed algorithm by a comprehensive comparison with state-of-the-art explanation methods LIME, LORE, and Anchor. The conducted experiments on real-world data sets show our method achieves solid empirical results in terms of fidelity, precision, and stability of explanations. [Paper] [Github]

-

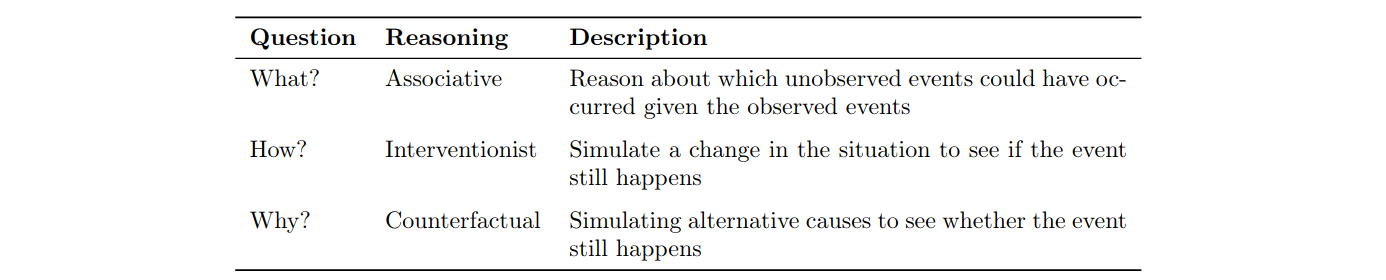

GRACE: Generating Concise and Informative Contrastive Sample to Explain Neural Network Model's Prediction; Thai Le, Suhang Wang, Dongwon Lee; Despite the recent development in the topic of explainable AI/ML for image and text data, the majority of current solutions are not suitable to explain the prediction of neural network models when the datasets are tabular and their features are in high-dimensional vectorized formats. To mitigate this limitation, therefore, we borrow two notable ideas (i.e., "explanation by intervention" from causality and "explanation are contrastive" from philosophy) and propose a novel solution, named as GRACE, that better explains neural network models' predictions for tabular datasets. In particular, given a model's prediction as label X, GRACE intervenes and generates a minimally-modified contrastive sample to be classified as Y, with an intuitive textual explanation, answering the question of "Why X rather than Y?" We carry out comprehensive experiments using eleven public datasets of different scales and domains (e.g., # of features ranges from 5 to 216) and compare GRACE with competing baselines on different measures: fidelity, conciseness, info-gain, and influence. The user-studies show that our generated explanation is not only more intuitive and easy-to-understand but also facilitates end-users to make as much as 60% more accurate post-explanation decisions than that of Lime.

-

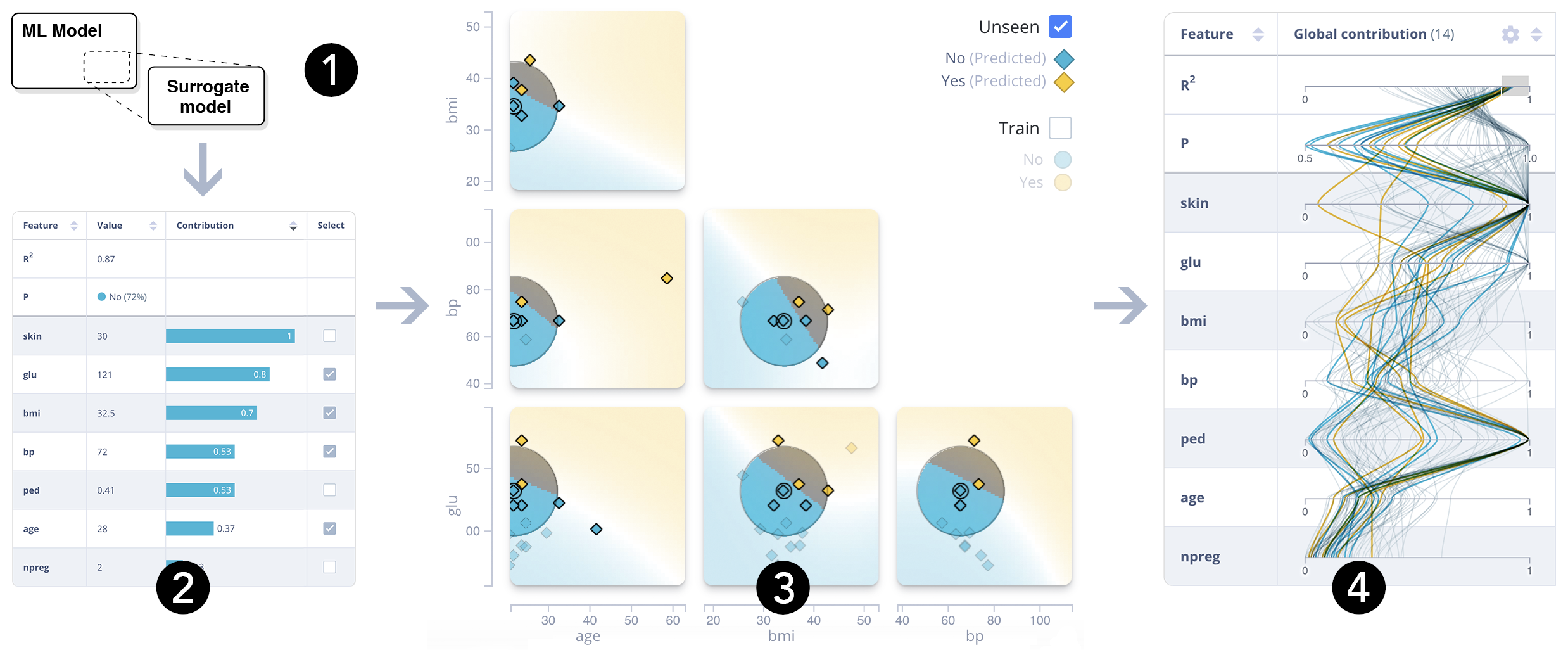

ExplainExplore: Visual Exploration of Machine Learning Explanation; Dennis Collaris, Jack J. van Wijk; Machine learning models often exhibit complex behavior that is difficult to understand. Recent research in explainable AI has produced promising techniques to explain the inner workings of such models using feature contribution vectors. These vectors are helpful in a wide variety of applications. However, there are many parameters involved in this process and determining which settings are best is difficult due to the subjective nature of evaluating interpretability. To this end, we introduce ExplainExplore: an interactive explanation system to explore explanations that fit the subjective preference of data scientists. We leverage the domain knowledge of the data scientist to find optimal parameter settings and instance perturbations, and enable the discussion of the model and its explanation with domain experts. We present a use case on a real-world dataset to demonstrate the effectiveness of our approach for the exploration and tuning of machine learning explanations. [website]

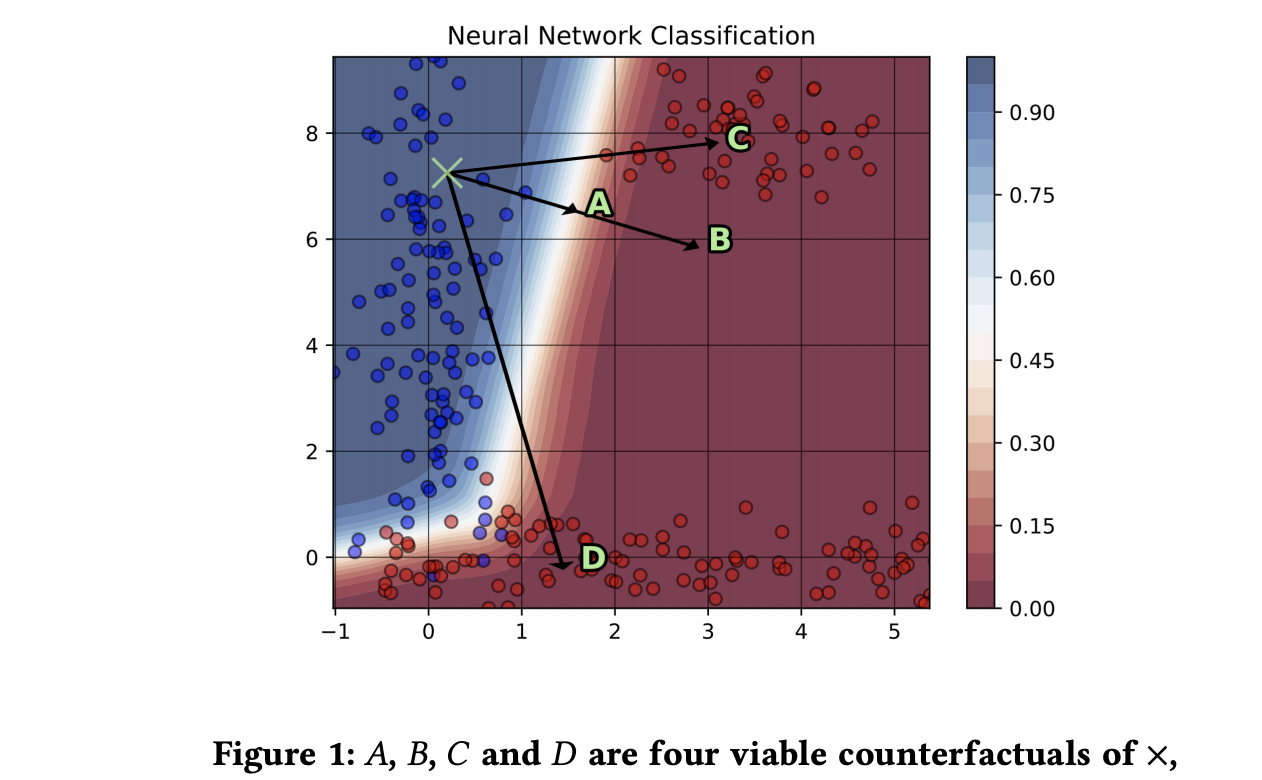

- FACE: Feasible and Actionable Counterfactual Explanations; Rafael Poyiadzi, Kacper Sokol, Raul Santos-Rodriguez, Tijl De Bie, Peter Flach; Work in Counterfactual Explanations tends to focus on the principle of "the closest possible world" that identifies small changes leading to the desired outcome. In this paper we argue that while this approach might initially seem intuitively appealing it exhibits shortcomings not addressed in the current literature. First, a counterfactual example generated by the state-of-the-art systems is not necessarily representative of the underlying data distribution, and may therefore prescribe unachievable goals (e.g., an unsuccessful life insurance applicant with severe disability may be advised to do more sports). Secondly, the counterfactuals may not be based on a "feasible path" between the current state of the subject and the suggested one, making actionable recourse infeasible (e.g., low-skilled unsuccessful mortgage applicants may be told to double their salary, which may be hard without first increasing their skill level).

-

Explainability Fact Sheets: A Framework for Systematic Assessment of Explainable Approaches; Kacper Sokol, Peter Flach; Explanations in Machine Learning come in many forms, but a consensus regarding their desired properties is yet to emerge. In this paper we introduce a taxonomy and a set of descriptors that can be used to characterise and systematically assess explainable systems along five key dimensions: functional, operational, usability, safety and validation. In order to design a comprehensive and representative taxonomy and associated descriptors we surveyed the eXplainable Artificial Intelligence literature, extracting the criteria and desiderata that other authors have proposed or implicitly used in their research. The survey includes papers introducing new explainability algorithms to see what criteria are used to guide their development and how these algorithms are evaluated, as well as papers proposing such criteria from both computer science and social science perspectives. This novel framework allows to systematically compare and contrast explainability approaches, not just to better understand their capabilities but also to identify discrepancies between their theoretical qualities and properties of their implementations. We developed an operationalisation of the framework in the form of Explainability Fact Sheets, which enable researchers and practitioners alike to quickly grasp capabilities and limitations of a particular explainable method.

-

One Explanation Does Not Fit All: The Promise of Interactive Explanations for Machine Learning Transparency; Kacper Sokol, Peter Flach; The need for transparency of predictive systems based on Machine Learning algorithms arises as a consequence of their ever-increasing proliferation in the industry. Whenever black-box algorithmic predictions influence human affairs, the inner workings of these algorithms should be scrutinised and their decisions explained to the relevant stakeholders, including the system engineers, the system's operators and the individuals whose case is being decided. While a variety of interpretability and explainability methods is available, none of them is a panacea that can satisfy all diverse expectations and competing objectives that might be required by the parties involved. We address this challenge in this paper by discussing the promises of Interactive Machine Learning for improved transparency of black-box systems using the example of contrastive explanations -- a state-of-the-art approach to Interpretable Machine Learning. Specifically, we show how to personalise counterfactual explanations by interactively adjusting their conditional statements and extract additional explanations by asking follow-up "What if?" questions.

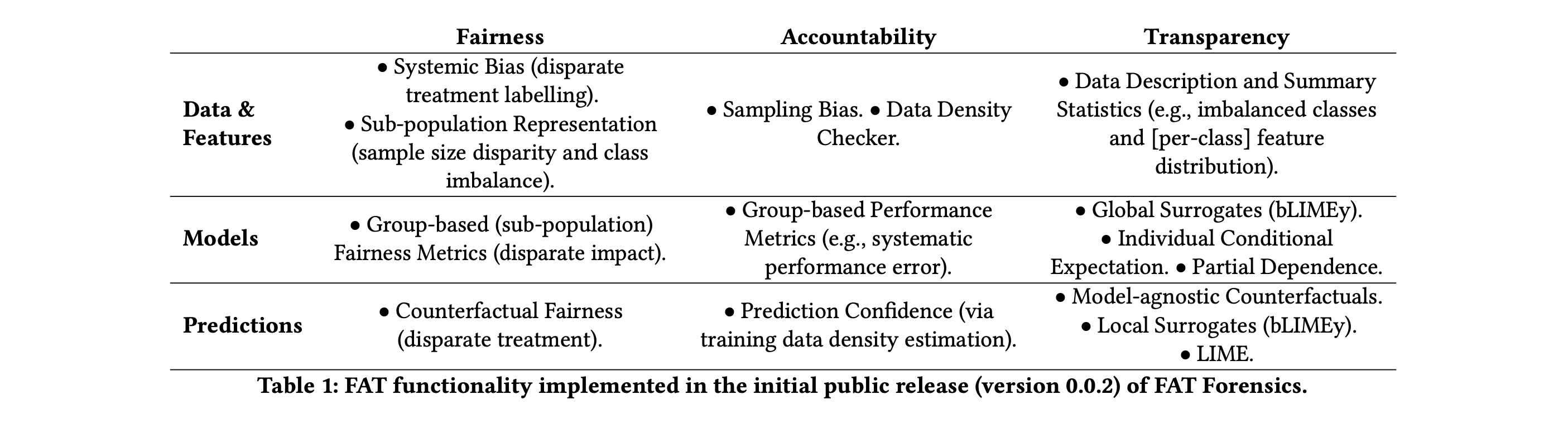

- FAT Forensics: A Python Toolbox for Algorithmic Fairness, Accountability and Transparency; Kacper Sokol, Raul Santos-Rodriguez, Peter Flach; Given the potential harm that ML algorithms can cause, qualities such as fairness, accountability and transparency of predictive systems are of paramount importance. Recent literature suggested voluntary self-reporting on these aspects of predictive systems -- e.g., data sheets for data sets -- but their scope is often limited to a single component of a machine learning pipeline, and producing them requires manual labour. To resolve this impasse and ensure high-quality, fair, transparent and reliable machine learning systems, we developed an open source toolbox that can inspect selected fairness, accountability and transparency aspects of these systems to automatically and objectively report them back to their engineers and users. We describe design, scope and usage examples of this Python toolbox in this paper. The toolbox provides functionality for inspecting fairness, accountability and transparency of all aspects of the machine learning process: data (and their features), models and predictions.

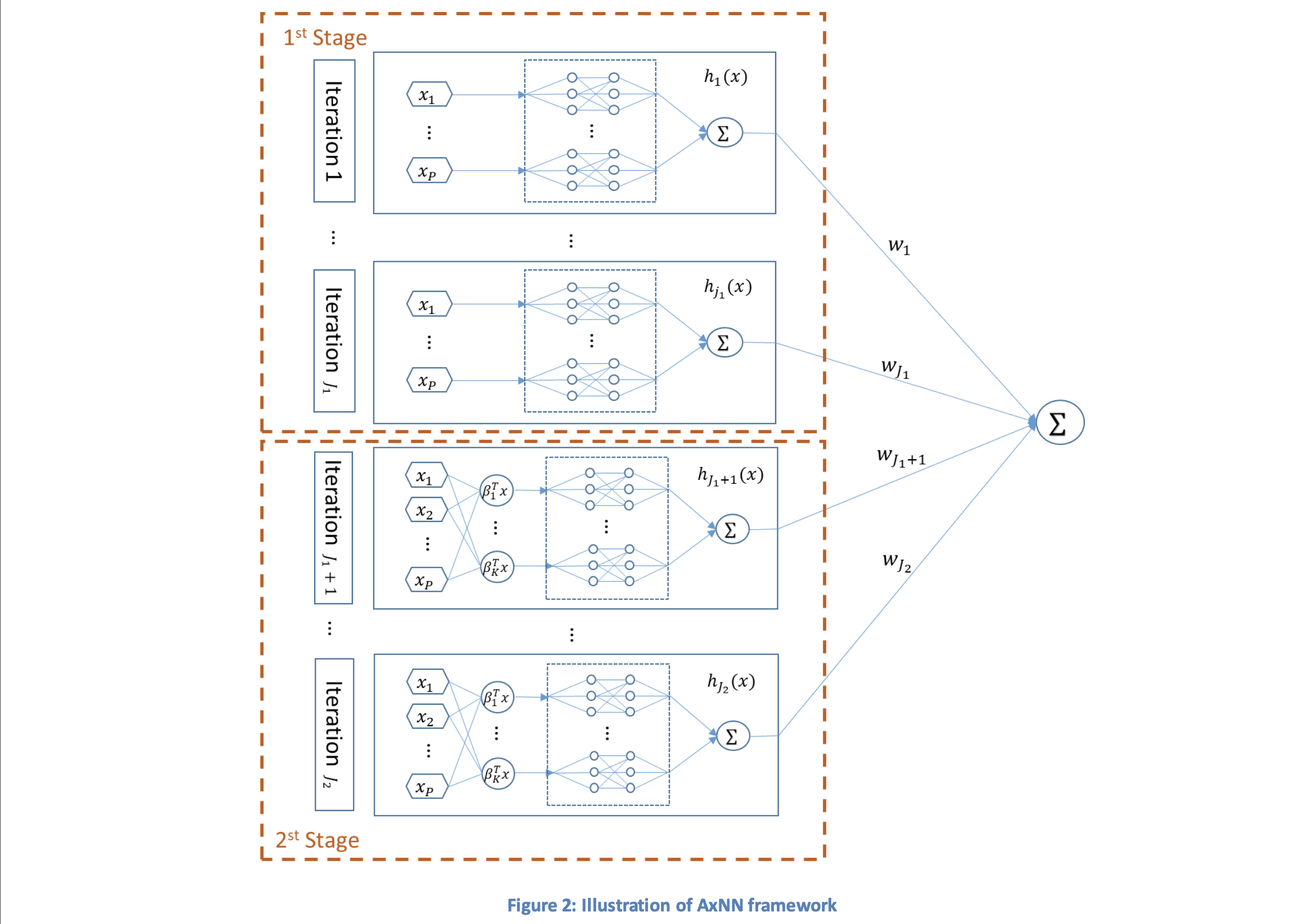

- Adaptive Explainable Neural Networks (AxNNs); Jie Chen, Joel Vaughan, Vijayan Nair, Agus Sudjianto; While machine learning techniques have been successfully applied in several fields, the black-box nature of the models presents challenges for interpreting and explaining the results. We develop a new framework called Adaptive Explainable Neural Networks (AxNN) for achieving the dual goals of good predictive performance and model interpretability. For predictive performance, we build a structured neural network made up of ensembles of generalized additive model networks and additive index models (through explainable neural networks) using a two-stage process. This can be done using either a boosting or a stacking ensemble. For interpretability, we show how to decompose the results of AxNN into main effects and higher-order interaction effects.

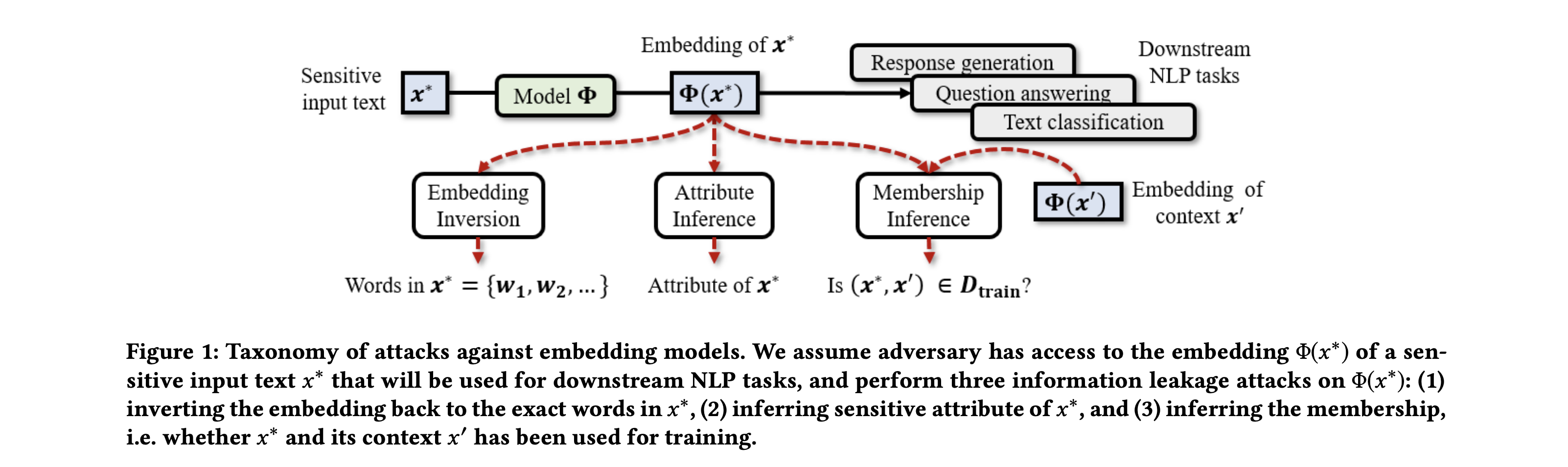

- Information Leakage in Embedding Models; Congzheng Song, Ananth Raghunathan; We demonstrate that embeddings, in addition to encoding generic semantics, often also present a vector that leaks sensitive information about the input data. We develop three classes of attacks to systematically study information that might be leaked by embeddings. First, embedding vectors can be inverted to partially recover some of the input data. Second, embeddings may reveal sensitive attributes inherent in inputs and independent of the underlying semantic task at hand. Third, embedding models leak moderate amount of membership information for infrequent training data inputs. We extensively evaluate our attacks on various state-of-the-art embedding models in the text domain. We also propose and evaluate defenses that can prevent the leakage to some extent at a minor cost in utility.

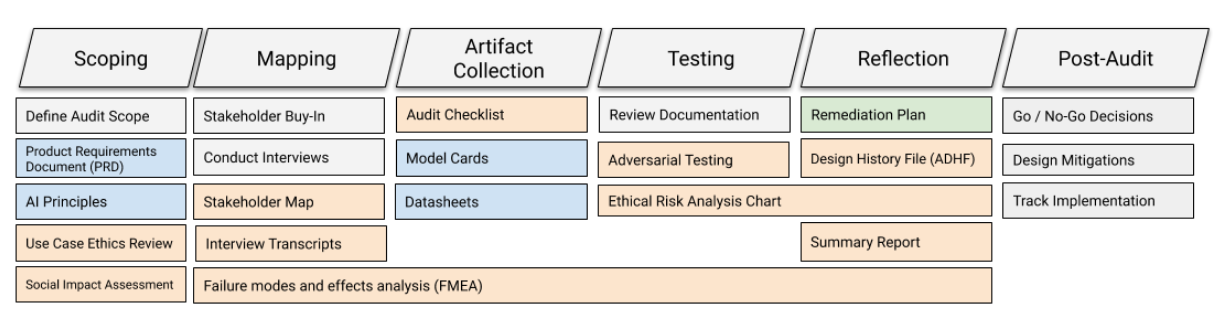

- Closing the AI Accountability Gap: Defining an End-to-End Framework for Internal Algorithmic Auditing; Inioluwa Deborah Raji et al.; Rising concern for the societal implications of artificial intelligence systems has inspired a wave of academic and journalistic literature in which deployed systems are audited for harm by investigators from outside the organizations deploying the algorithms. However, it remains challenging for practitioners to identify the harmful repercussions of their own systems prior to deployment, and, once deployed, emergent issues can become difficult or impossible to trace back to their source. In this paper, we introduce a framework for algorithmic auditing that supports artificial intelligence system development end-to-end, to be applied throughout the internal organization development lifecycle. Each stage of the audit yields a set of documents that together form an overall audit report, drawing on an organization's values or principles to assess the fit of decisions made throughout the process.

- Explaining the Explainer: A First Theoretical Analysis of LIME; Damien Garreau, Ulrike von Luxburg; Machine learning is used more and more often for sensitive applications, sometimes replacing humans in critical decision-making processes. As such, interpretability of these algorithms is a pressing need. One popular algorithm to provide interpretability is LIME (Local Interpretable Model-Agnostic Explanation). In this paper, we provide the first theoretical analysis of LIME. We derive closed-form expressions for the coefficients of the interpretable model when the function to explain is linear. The good news is that these coefficients are proportional to the gradient of the function to explain: LIME indeed discovers meaningful features. However, our analysis also reveals that poor choices of parameters can lead LIME to miss important features.
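
The claim in the LIME analysis above, that the surrogate coefficients follow the gradient when the explained function is linear, can be illustrated with a small sketch. This is a simplified LIME-style local surrogate written for illustration only: it uses continuous Gaussian perturbations and an exponential kernel, not the discretised interpretable features of the `lime` package or the exact setting analysed in the paper. The function name `local_linear_surrogate` and all parameter values are assumptions.

```python
import numpy as np

def local_linear_surrogate(predict, x0, n_samples=2000, scale=1.0,
                           kernel_width=1.0, seed=0):
    """Fit a kernel-weighted linear model around x0 (simplified, LIME-style)."""
    rng = np.random.default_rng(seed)
    # Perturb the instance with Gaussian noise to build a local neighbourhood.
    X = x0 + scale * rng.normal(size=(n_samples, x0.size))
    y = predict(X)
    # Weight each sample by its proximity to x0 (exponential kernel).
    dist = np.linalg.norm(X - x0, axis=1)
    weights = np.exp(-(dist ** 2) / (2 * kernel_width ** 2))
    # Weighted least squares with an intercept: scale rows by sqrt(weight).
    design = np.hstack([np.ones((n_samples, 1)), X])
    sw = np.sqrt(weights)
    beta, *_ = np.linalg.lstsq(design * sw[:, None], y * sw, rcond=None)
    return beta[1:]  # drop the intercept, keep per-feature coefficients

# Toy black box: a linear function whose gradient is known exactly.
gradient = np.array([2.0, -1.0, 0.0])

def linear_model(X):
    return X @ gradient + 0.5

x0 = np.array([1.0, 2.0, 3.0])
coefs = local_linear_surrogate(linear_model, x0)
print(coefs)
```

In this continuous setting the surrogate recovers the gradient itself; the paper's result concerns proportionality under LIME's own sampling and discretisation, which this sketch does not reproduce.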

2019

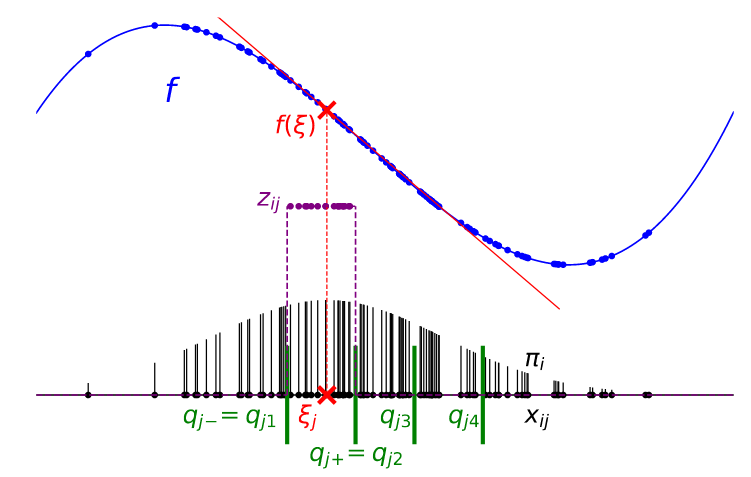

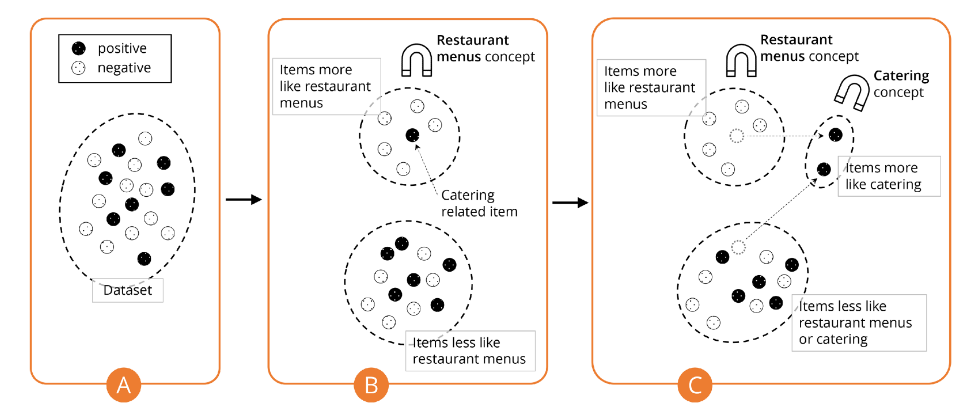

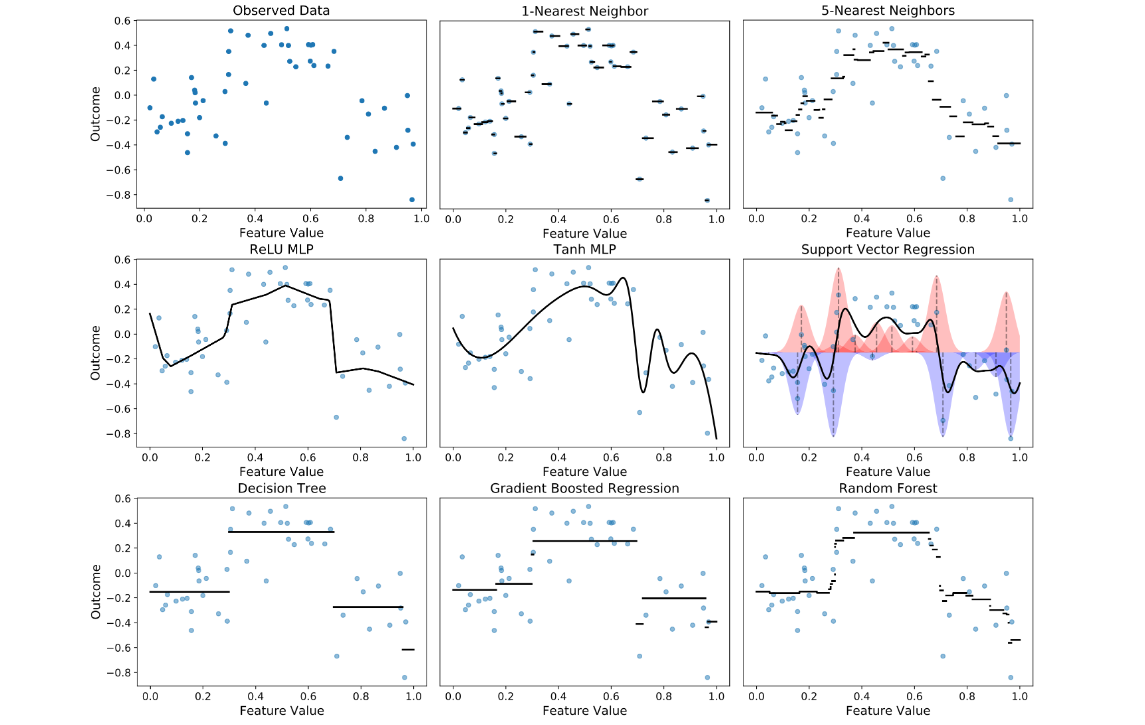

- bLIMEy: Surrogate Prediction Explanations Beyond LIME?; Kacper Sokol, Alexander Hepburn, Raul Santos-Rodriguez, Peter Flach. Surrogate explainers of black-box machine learning predictions are of paramount importance in the field of eXplainable Artificial Intelligence since they can be applied to any type of data (images, text and tabular), are model-agnostic and are post-hoc (i.e., can be retrofitted). The Local Interpretable Model-agnostic Explanations (LIME) algorithm is often mistakenly unified with a more general framework of surrogate explainers, which may lead to a belief that it is the solution to surrogate explainability. In this paper we empower the community to "build LIME yourself" (bLIMEy) by proposing a principled algorithmic framework for building custom local surrogate explainers of black-box model predictions, including LIME itself. To this end, we demonstrate how to decompose the surrogate explainers family into algorithmically independent and interoperable modules and discuss the influence of these component choices on the functional capabilities of the resulting explainer, using the example of LIME.

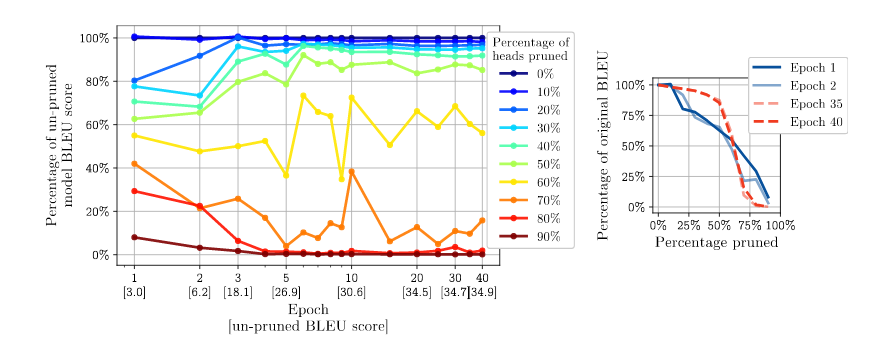

- Are Sixteen Heads Really Better than One?; Paul Michel, Omer Levy, Graham Neubig. Attention is a powerful and ubiquitous mechanism for allowing neural models to focus on particular salient pieces of information by taking their weighted average when making predictions. In particular, multi-headed attention is a driving force behind many recent state-of-the-art NLP models such as Transformer-based MT models and BERT. In this paper we make the surprising observation that even if models have been trained using multiple heads, in practice, a large percentage of attention heads can be removed at test time without significantly impacting performance. In fact, some layers can even be reduced to a single head. We further examine greedy algorithms for pruning down models, and the potential speed, memory efficiency, and accuracy improvements obtainable therefrom.

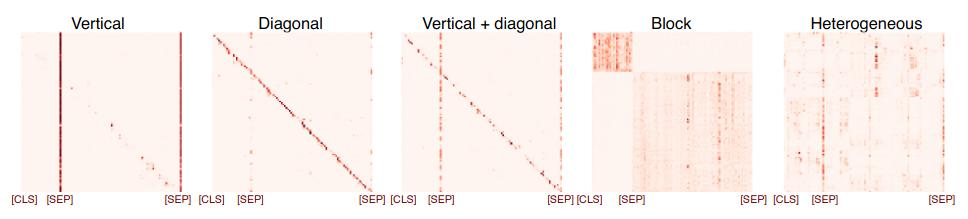

- Revealing the Dark Secrets of BERT; Olga Kovaleva, Alexey Romanov, Anna Rogers, Anna Rumshisky. BERT-based architectures currently give state-of-the-art performance on many NLP tasks, but little is known about the exact mechanisms that contribute to its success. In the current work, we focus on the interpretation of self-attention, which is one of the fundamental underlying components of BERT. Using a subset of GLUE tasks and a set of handcrafted features-of-interest, we propose the methodology and carry out a qualitative and quantitative analysis of the information encoded by the individual BERT's heads. Our findings suggest that there is a limited set of attention patterns that are repeated across different heads, indicating the overall model overparametrization. While different heads consistently use the same attention patterns, they have varying impact on performance across different tasks. We show that manually disabling attention in certain heads leads to a performance improvement over the regular fine-tuned BERT models.

- Explanation in Artificial Intelligence: Insights from the Social Sciences; Tim Miller. There has been a recent resurgence in the area of explainable artificial intelligence as researchers and practitioners seek to make their algorithms more understandable. Much of this research is focused on explicitly explaining decisions or actions to a human observer, and it should not be controversial to say that looking at how humans explain to each other can serve as a useful starting point for explanation in artificial intelligence. However, it is fair to say that most work in explainable artificial intelligence uses only the researchers' intuition of what constitutes a 'good' explanation. There exist vast and valuable bodies of research in philosophy, psychology, and cognitive science of how people define, generate, select, evaluate, and present explanations, which argue that people employ certain cognitive biases and social expectations towards the explanation process. This paper argues that the field of explainable artificial intelligence should build on this existing research, and reviews relevant papers from philosophy, cognitive psychology/science, and social psychology, which study these topics.

- AnchorViz: Facilitating Semantic Data Exploration and Concept Discovery for Interactive Machine Learning; Jina Suh et al.; When building a classifier in interactive machine learning (iML), human knowledge about the target class can be a powerful reference to make the classifier robust to unseen items. The main challenge lies in finding unlabeled items that can either help discover or refine concepts for which the current classifier has no corresponding features (i.e., it has feature blindness). Yet it is unrealistic to ask humans to come up with an exhaustive list of items, especially for rare concepts that are hard to recall. This article presents AnchorViz, an interactive visualization that facilitates the discovery of prediction errors and previously unseen concepts through human-driven semantic data exploration.

-

Randomized Ablation Feature Importance; Luke Merrick; Given a model f that predicts a target y from a vector of input features x = (x1, x2, …, xM), we seek to measure the importance of each feature with respect to the model's ability to make a good prediction. To this end, we consider how (on average) some measure of goodness or badness of prediction (which we term "loss"), changes when we hide or ablate each feature from the model. To ablate a feature, we replace its value with another possible value randomly. By averaging over many points and many possible replacements, we measure the importance of a feature on the model's ability to make good predictions. Furthermore, we present statistical measures of uncertainty that quantify how confident we are that the feature importance we measure from our finite dataset and finite number of ablations is close to the theoretical true importance value.
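
The ablation procedure described in this abstract can be sketched roughly as follows. This is a minimal illustration, not the author's implementation: replacement values are drawn from the observed values of the same feature (one possible reading of "another possible value"), the loss is mean squared error, and the uncertainty measures from the paper are left out. The names `ablation_importance` and `mse` and the toy data are assumptions.

```python
import numpy as np

def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))

def ablation_importance(predict, loss, X, y, n_repeats=20, seed=0):
    """Average increase in loss when each feature is randomly replaced."""
    rng = np.random.default_rng(seed)
    baseline = loss(y, predict(X))
    n_rows, n_features = X.shape
    importance = np.zeros(n_features)
    for j in range(n_features):
        ablated_losses = []
        for _ in range(n_repeats):
            X_ablated = X.copy()
            # Assumption: replacements are sampled with replacement from
            # the values this feature takes in the dataset.
            X_ablated[:, j] = rng.choice(X[:, j], size=n_rows, replace=True)
            ablated_losses.append(loss(y, predict(X_ablated)))
        importance[j] = np.mean(ablated_losses) - baseline
    return importance

# Toy setup: the "model" is the true function, and feature 2 is never used.
rng = np.random.default_rng(1)
X = rng.normal(size=(500, 3))

def model(X):
    return 3.0 * X[:, 0] + 1.0 * X[:, 1]

y = model(X)
importance = ablation_importance(model, mse, X, y)
print(importance)
```

Feature 0 should come out as most important and the unused feature 2 at zero, since ablating it cannot change the predictions.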

-

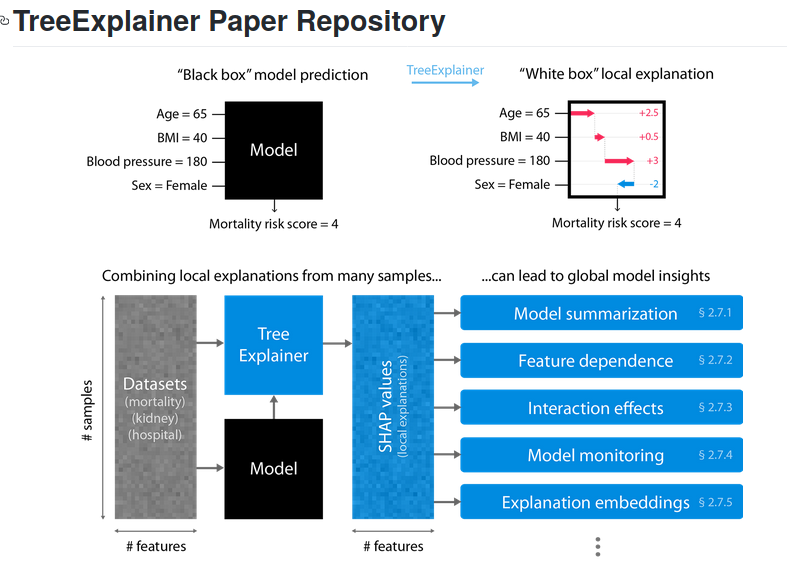

Explainable AI for Trees: From Local Explanations to Global Understanding; Scott M. Lundberg, Gabriel Erion, Hugh Chen, Alex DeGrave, Jordan M. Prutkin, Bala Nair, Ronit Katz, Jonathan Himmelfarb, Nisha Bansal, Su-In Lee; Tree-based machine learning models such as random forests, decision trees, and gradient boosted trees are the most popular non-linear predictive models used in practice today, yet comparatively little attention has been paid to explaining their predictions. Here we significantly improve the interpretability of tree-based models through three main contributions: 1) The first polynomial time algorithm to compute optimal explanations based on game theory. 2) A new type of explanation that directly measures local feature interaction effects. 3) A new set of tools for understanding global model structure based on combining many local explanations of each prediction. We apply these tools to three medical machine learning problems and show how combining many high-quality local explanations allows us to represent global structure while retaining local faithfulness to the original model. These tools enable us to i) identify high magnitude but low frequency non-linear mortality risk factors in the general US population, ii) highlight distinct population sub-groups with shared risk characteristics, iii) identify non-linear interaction effects among risk factors for chronic kidney disease, and iv) monitor a machine learning model deployed in a hospital by identifying which features are degrading the model's performance over time. Given the popularity of tree-based machine learning models, these improvements to their interpretability have implications across a broad set of domains. GitHub

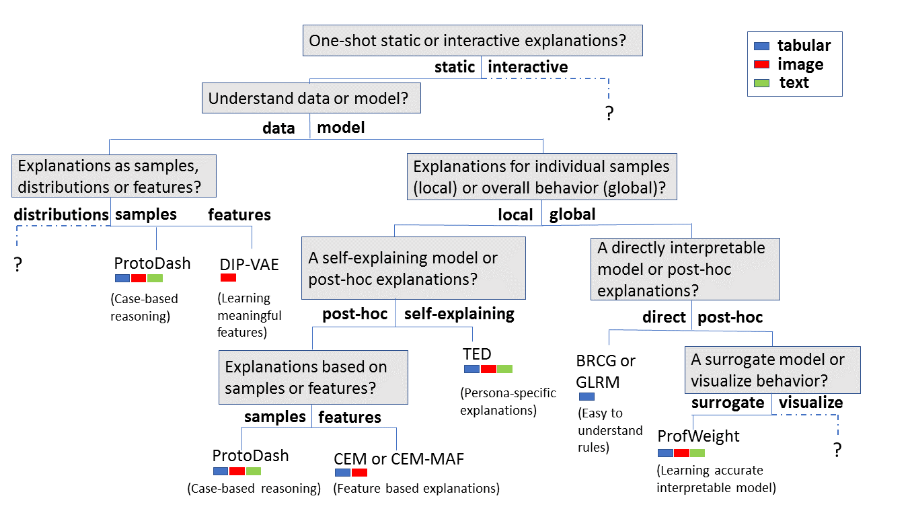

- One Explanation Does Not Fit All: A Toolkit and Taxonomy of AI Explainability Techniques; Vijay Arya, Rachel K. E. Bellamy, Pin-Yu Chen, Amit Dhurandhar, Michael Hind, Samuel C. Hoffman, Stephanie Houde, Q. Vera Liao, Ronny Luss, Aleksandra Mojsilović, Sami Mourad, Pablo Pedemonte, Ramya Raghavendra, John Richards, Prasanna Sattigeri, Karthikeyan Shanmugam, Moninder Singh, Kush R. Varshney, Dennis Wei, Yunfeng Zhang; As artificial intelligence and machine learning algorithms make further inroads into society, calls are increasing from multiple stakeholders for these algorithms to explain their outputs. At the same time, these stakeholders, whether they be affected citizens, government regulators, domain experts, or system developers, present different requirements for explanations. Toward addressing these needs, we introduce AI Explainability 360 (this http URL), an open-source software toolkit featuring eight diverse and state-of-the-art explainability methods and two evaluation metrics. Equally important, we provide a taxonomy to help entities requiring explanations to navigate the space of explanation methods, not only those in the toolkit but also in the broader literature on explainability. For data scientists and other users of the toolkit, we have implemented an extensible software architecture that organizes methods according to their place in the AI modeling pipeline. We also discuss enhancements to bring research innovations closer to consumers of explanations, ranging from simplified, more accessible versions of algorithms, to tutorials and an interactive web demo to introduce AI explainability to different audiences and application domains. Together, our toolkit and taxonomy can help identify gaps where more explainability methods are needed and provide a platform to incorporate them as they are developed. GitHub; Demo

-

LIRME: Locally Interpretable Ranking Model Explanation; Manisha Verma, Debasis Ganguly; Information retrieval (IR) models often employ complex variations in term weights to compute an aggregated similarity score of a query-document pair. Treating IR models as black-boxes makes it difficult to understand or explain why certain documents are retrieved at top-ranks for a given query. Local explanation models have emerged as a popular means to understand individual predictions of classification models. However, there is no systematic investigation that learns to interpret IR models, which is in fact the core contribution of our work in this paper. We explore three sampling methods to train an explanation model and propose two metrics to evaluate explanations generated for an IR model. Our experiments reveal some interesting observations, namely that a) diversity in samples is important for training local explanation models, and b) the stability of a model is inversely proportional to the number of parameters used to explain the model.

-

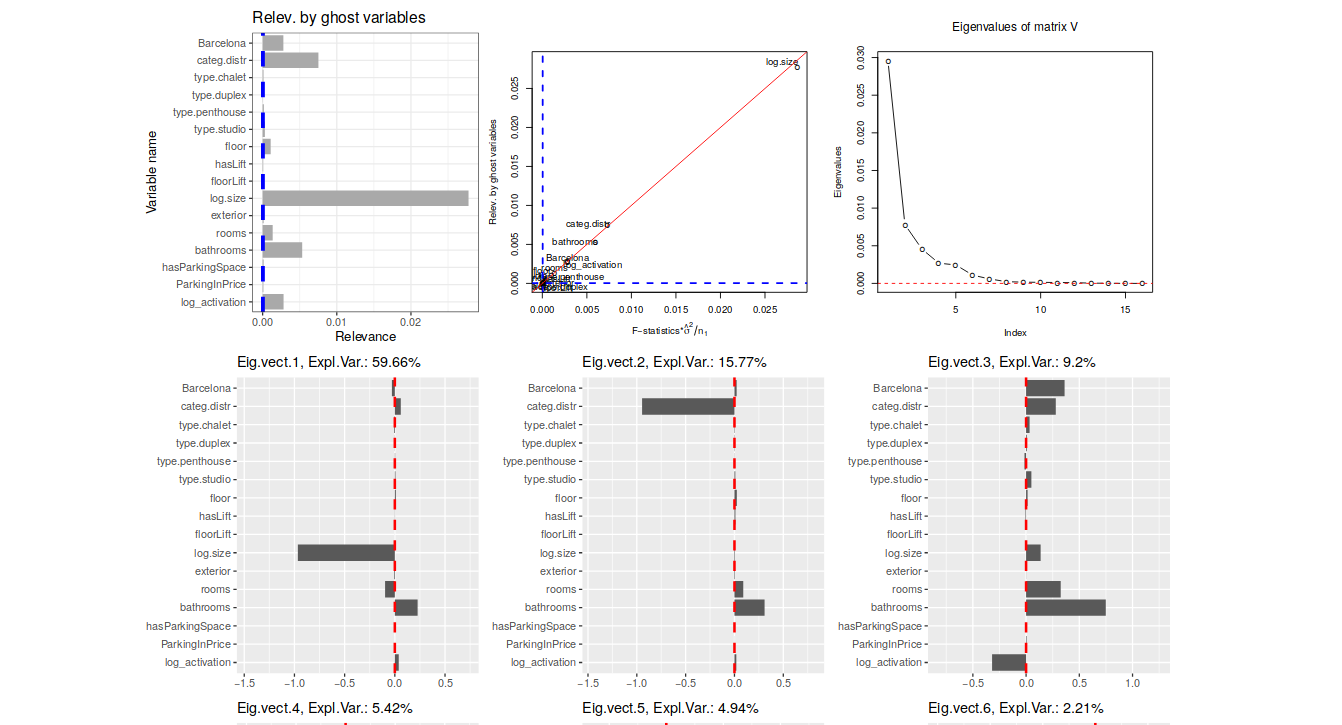

Understanding complex predictive models with Ghost Variables; Pedro Delicado, Daniel Peña; Procedure for assigning a relevance measure to each explanatory variable in a complex predictive model. We assume that we have a training set to fit the model and a test set to check the out of sample performance. First, the individual relevance of each variable is computed by comparing the predictions in the test set, given by the model that includes all the variables with those of another model in which the variable of interest is substituted by its ghost variable, defined as the prediction of this variable by using the rest of explanatory variables. Second, we check the joint effects among the variables by using the eigenvalues of a relevance matrix that is the covariance matrix of the vectors of individual effects. It is shown that in simple models, as linear or additive models, the proposed measures are related to standard measures of significance of the variables and in neural networks models (and in other algorithmic prediction models) the procedure provides information about the joint and individual effects of the variables that is not usually available by other methods.
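
A rough sketch of the ghost-variable procedure described above, under several assumptions: the predictive model is an ordinary least-squares fit, each ghost variable is a linear prediction from the remaining variables estimated on the test set, the individual relevance is the unnormalised mean squared change in predictions, and the relevance matrix is computed as the uncentered second-moment matrix of the individual effects. The paper's exact estimators and normalisation may differ, and all function names here are hypothetical.

```python
import numpy as np

def fit_linear(X, y):
    """Ordinary least squares with an intercept."""
    design = np.hstack([np.ones((len(X), 1)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta

def predict_linear(beta, X):
    return beta[0] + X @ beta[1:]

def ghost_relevance(predict, X_test):
    """Individual relevances and relevance matrix from ghost variables."""
    n, p = X_test.shape
    full_pred = predict(X_test)
    effects = np.zeros((n, p))
    for j in range(p):
        others = np.delete(X_test, j, axis=1)
        # Ghost variable: prediction of x_j from the other variables.
        ghost_coef = fit_linear(others, X_test[:, j])
        X_ghost = X_test.copy()
        X_ghost[:, j] = predict_linear(ghost_coef, others)
        effects[:, j] = full_pred - predict(X_ghost)
    relevance = np.mean(effects ** 2, axis=0)
    relevance_matrix = effects.T @ effects / n  # assumed normalisation
    return relevance, relevance_matrix

# Toy data: x0 and x1 are nearly collinear, x2 is independent.
rng = np.random.default_rng(0)

def make_data(n):
    x0 = rng.normal(size=n)
    x1 = x0 + 0.1 * rng.normal(size=n)
    x2 = rng.normal(size=n)
    X = np.column_stack([x0, x1, x2])
    y = x0 + x1 + x2 + 0.1 * rng.normal(size=n)
    return X, y

X_train, y_train = make_data(500)
X_test, y_test = make_data(500)
beta = fit_linear(X_train, y_train)
relevance, V = ghost_relevance(lambda X: predict_linear(beta, X), X_test)
eigenvalues = np.linalg.eigvalsh(V)
print(relevance, eigenvalues)
```

Because x0 and x1 can each be predicted almost perfectly from the other, their individual relevance is small, while the independent x2 keeps a large one. This is the kind of joint effect the eigenvalues of the relevance matrix are meant to expose.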

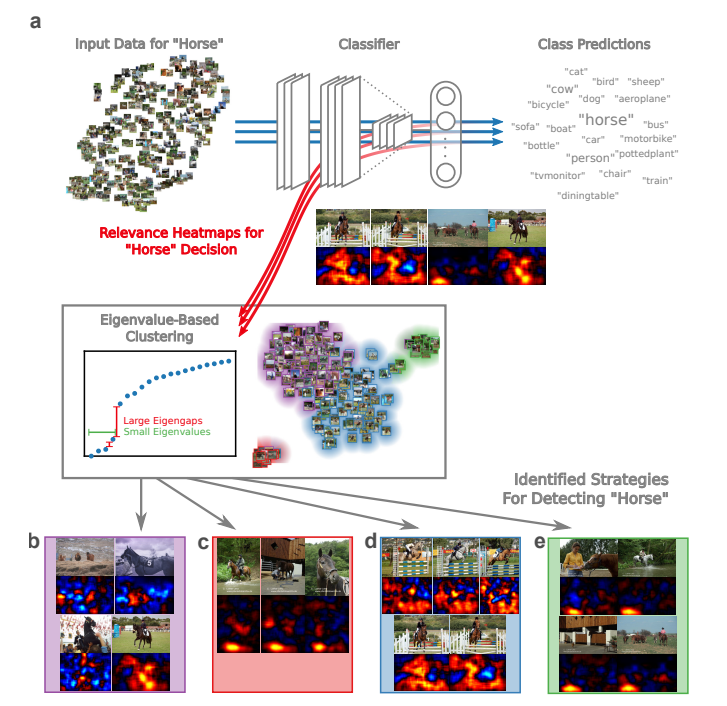

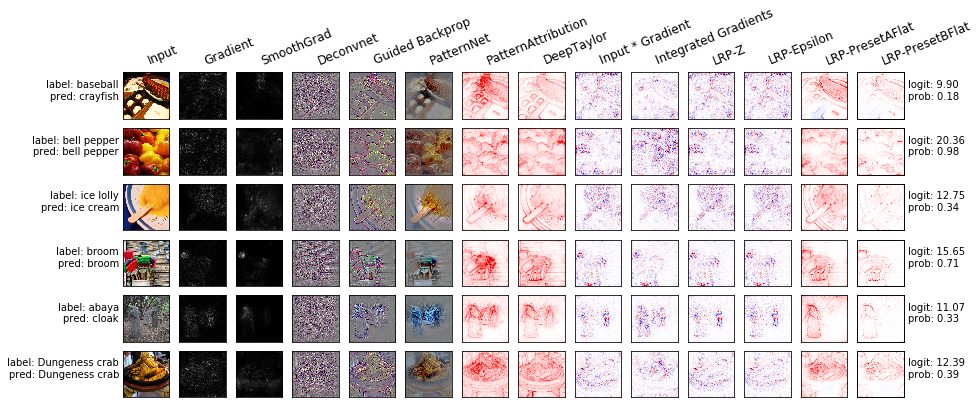

- Unmasking Clever Hans predictors and assessing what machines really learn; Sebastian Lapuschkin, Stephan Wäldchen, Alexander Binder, Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller; Current learning machines have successfully solved hard application problems, reaching high accuracy and displaying seemingly intelligent behavior. Here we apply recent techniques for explaining decisions of state-of-the-art learning machines and analyze various tasks from computer vision and arcade games. This showcases a spectrum of problem-solving behaviors ranging from naive and short-sighted, to well-informed and strategic. We observe that standard performance evaluation metrics can be oblivious to distinguishing these diverse problem solving behaviors. Furthermore, we propose our semi-automated Spectral Relevance Analysis that provides a practically effective way of characterizing and validating the behavior of nonlinear learning machines. This helps to assess whether a learned model indeed delivers reliably for the problem that it was conceived for. Furthermore, our work intends to add a voice of caution to the ongoing excitement about machine intelligence and pledges to evaluate and judge some of these recent successes in a more nuanced manner.

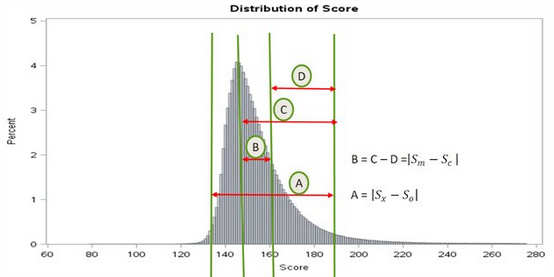

- Feature Impact for Prediction Explanation; Mohammad Bataineh; Companies across the globe have been adapting complex Machine Learning (ML) techniques to build advanced predictive models to improve their operations and services and help in decision making. While these ML techniques are extremely powerful and have found success in different industries for helping with decision making, a common feedback heard across many industries worldwide is that too often these techniques are opaque in nature with no details as to why a particular prediction probability was reached. This work presents an innovative algorithm that addresses this limitation by providing a ranked list of all features according to their contribution to a model's prediction. This new algorithm, Feature Impact for Prediction Explanation (FIPE), incorporates individual feature variations and correlations to calculate feature impact for a prediction. The true power of FIPE lies in its computationally-efficient ability to provide feature impact irrespective of the base ML technique used.

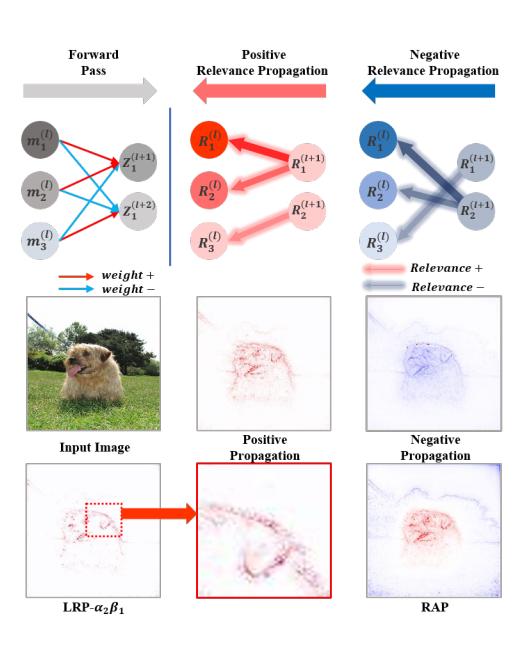

- Relative Attributing Propagation: Interpreting the Comparative Contributions of Individual Units in Deep Neural Networks; Woo-Jeoung Nam, Shir Gur, Jaesik Choi, Lior Wolf, Seong-Whan Lee; As Deep Neural Networks (DNNs) have demonstrated superhuman performance in a variety of fields, there is an increasing interest in understanding the complex internal mechanisms of DNNs. In this paper, we propose Relative Attributing Propagation (RAP), which decomposes the output predictions of DNNs with a new perspective of separating the relevant (positive) and irrelevant (negative) attributions according to the relative influence between the layers. The relevance of each neuron is identified with respect to its degree of contribution, separated into positive and negative, while preserving the conservation rule. Considering the relevance assigned to neurons in terms of relative priority, RAP allows each neuron to be assigned with a bi-polar importance score concerning the output: from highly relevant to highly irrelevant. Therefore, our method makes it possible to interpret DNNs with much clearer and attentive visualizations of the separated attributions than the conventional explaining methods. To verify that the attributions propagated by RAP correctly account for each meaning, we utilize the evaluation metrics: (i) Outside-inside relevance ratio, (ii) Segmentation mIOU and (iii) Region perturbation. In all experiments and metrics, we present a sizable gap in comparison to the existing literature.

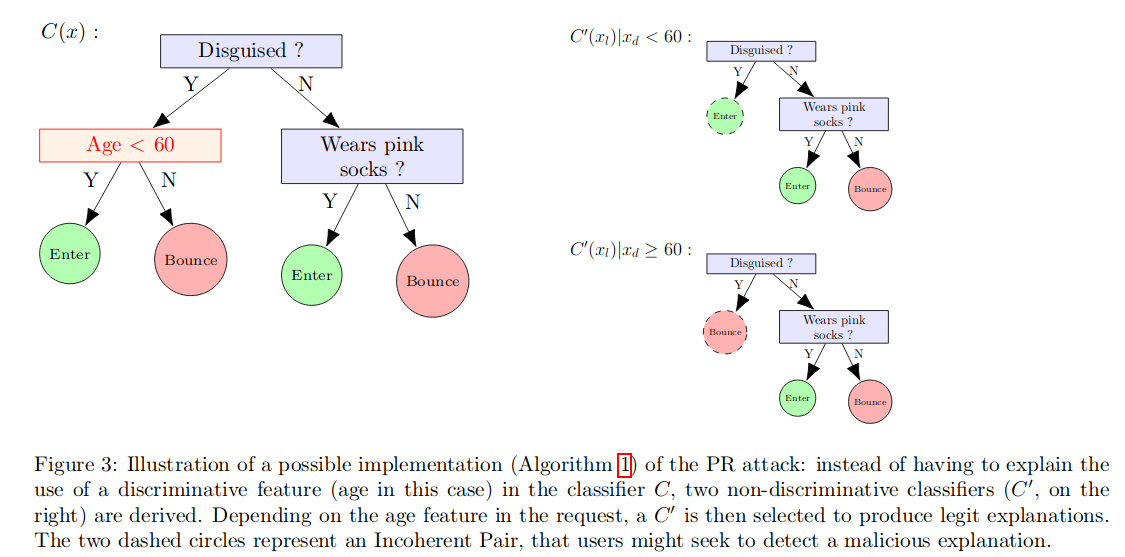

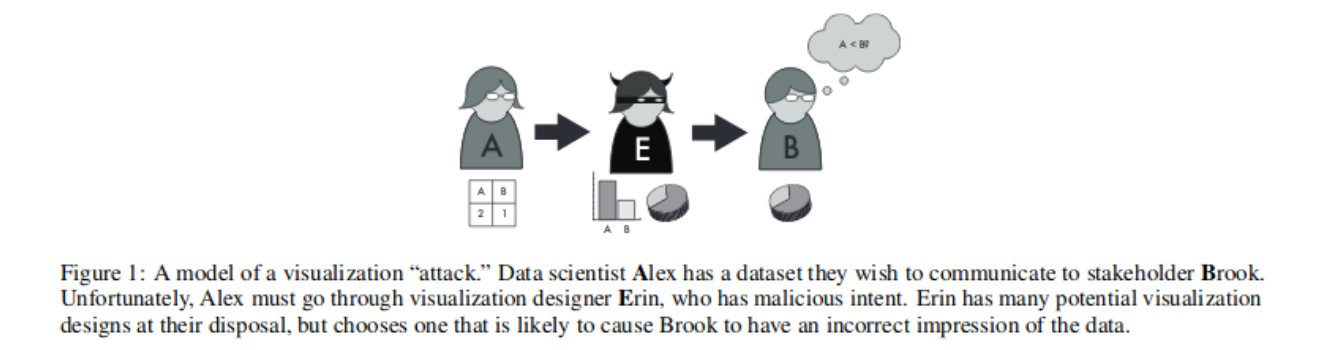

- The Bouncer Problem: Challenges to Remote Explainability; Erwan Le Merrer, Gilles Tredan; The concept of explainability is envisioned to satisfy society's demands for transparency on machine learning decisions. The concept is simple: like humans, algorithms should explain the rationale behind their decisions so that their fairness can be assessed. While this approach is promising in a local context (e.g. to explain a model during debugging at training time), we argue that this reasoning cannot simply be transposed in a remote context, where a trained model by a service provider is only accessible through its API. This is problematic as it constitutes precisely the target use-case requiring transparency from a societal perspective. Through an analogy with a club bouncer (which may provide untruthful explanations upon customer reject), we show that providing explanations cannot prevent a remote service from lying about the true reasons leading to its decisions. More precisely, we prove the impossibility of remote explainability for single explanations, by constructing an attack on explanations that hides discriminatory features to the querying user. We provide an example implementation of this attack. We then show that the probability that an observer spots the attack, using several explanations for attempting to find incoherences, is low in practical settings. This undermines the very concept of remote explainability in general.

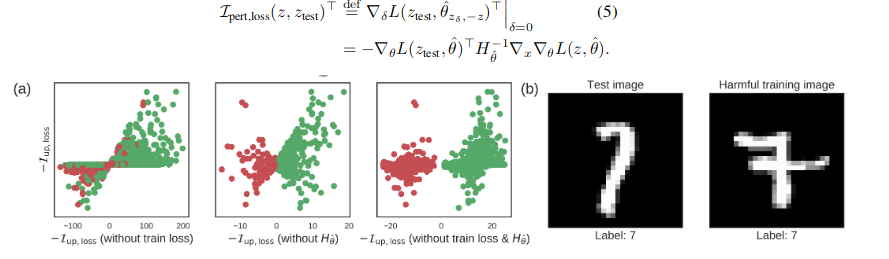

- Understanding Black-box Predictions via Influence Functions; Pang Wei Koh, Percy Liang; How can we explain the predictions of a black-box model? In this paper, we use influence functions -- a classic technique from robust statistics -- to trace a model's prediction through the learning algorithm and back to its training data, thereby identifying training points most responsible for a given prediction. To scale up influence functions to modern machine learning settings, we develop a simple, efficient implementation that requires only oracle access to gradients and Hessian-vector products. We show that even on non-convex and non-differentiable models where the theory breaks down, approximations to influence functions can still provide valuable information. On linear models and convolutional neural networks, we demonstrate that influence functions are useful for multiple purposes: understanding model behavior, debugging models, detecting dataset errors, and even creating visually-indistinguishable training-set attacks.

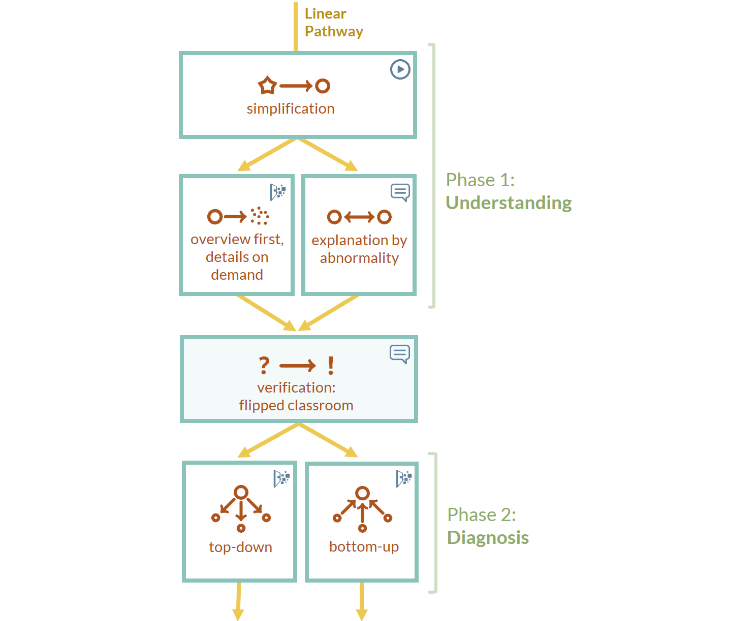

- Towards XAI: Structuring the Processes of Explanations; Mennatallah El-Assady, et al; Explainable Artificial Intelligence describes a process to reveal the logical propagation of operations that transform a given input to a certain output. In this paper, we investigate the design space of explanation processes based on factors gathered from six research areas, namely, Pedagogy, Story-telling, Argumentation, Programming, Trust-Building, and Gamification. We contribute a conceptual model describing the building blocks of explanation processes, including a comprehensive overview of explanation and verification phases, pathways, mediums, and strategies. We further argue for the importance of studying effective methods of explainable machine learning, and discuss open research challenges and opportunities.

- Towards Automated Machine Learning: Evaluation and Comparison of AutoML Approaches and Tools; Anh Truong, Austin Walters, Jeremy Goodsitt, Keegan Hines, C. Bayan Bruss, Reza Farivar; There has been considerable growth and interest in industrial applications of machine learning (ML) in recent years. ML engineers, as a consequence, are in high demand across the industry, yet improving the efficiency of ML engineers remains a fundamental challenge. Automated machine learning (AutoML) has emerged as a way to save time and effort on repetitive tasks in ML pipelines, such as data pre-processing, feature engineering, model selection, hyperparameter optimization, and prediction result analysis. In this paper, we investigate the current state of AutoML tools aiming to automate these tasks. We conduct various evaluations of the tools on many datasets, in different data segments, to examine their performance, and compare their advantages and disadvantages on different test cases.

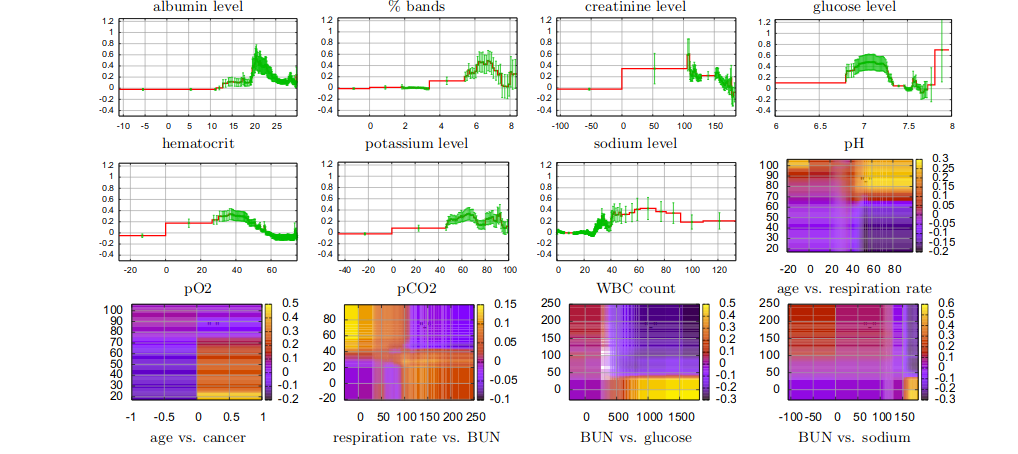

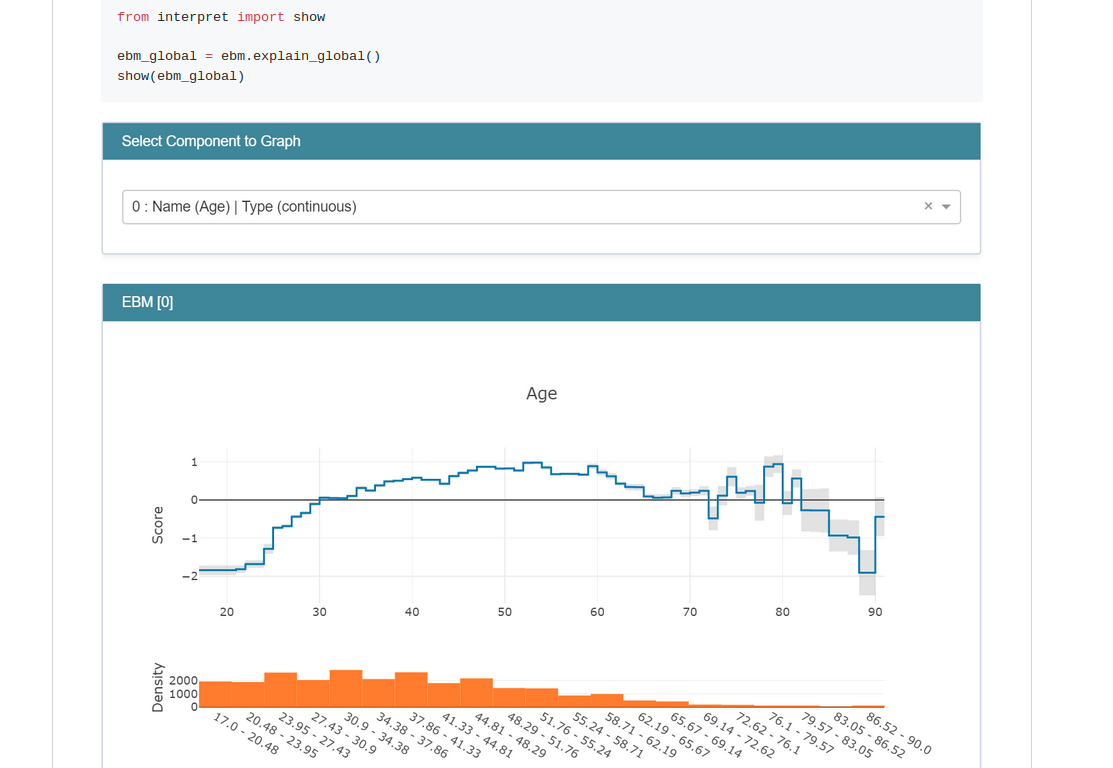

- Intelligible Models for HealthCare: Predicting Pneumonia Risk and Hospital 30-day Readmission; Rich Caruana et al; In machine learning often a tradeoff must be made between accuracy and intelligibility. More accurate models such as boosted trees, random forests, and neural nets usually are not intelligible, but more intelligible models such as logistic regression, naive-Bayes, and single decision trees often have significantly worse accuracy. This tradeoff sometimes limits the accuracy of models that can be applied in mission-critical applications such as healthcare where being able to understand, validate, edit, and trust a learned model is important. We present two case studies where high-performance generalized additive models with pairwise interactions (GA2Ms) are applied to real healthcare problems yielding intelligible models with state-of-the-art accuracy. In the pneumonia risk prediction case study, the intelligible model uncovers surprising patterns in the data that previously had prevented complex learned models from being fielded in this domain, but because it is intelligible and modular allows these patterns to be recognized and removed. In the 30-day hospital readmission case study, we show that the same methods scale to large datasets containing hundreds of thousands of patients and thousands of attributes while remaining intelligible and providing accuracy comparable to the best (unintelligible) machine learning methods.

- Shapley Decomposition of R-Squared in Machine Learning Models; Nickalus Redell; In this paper we introduce a metric aimed at helping machine learning practitioners quickly summarize and communicate the overall importance of each feature in any black-box machine learning prediction model. Our proposed metric, based on a Shapley-value variance decomposition of the familiar R² from classical statistics, is a model-agnostic approach for assessing feature importance that fairly allocates the proportion of model-explained variability in the data to each model feature. This metric has several desirable properties including boundedness at 0 and 1 and a feature-level variance decomposition summing to the overall model R². Our implementation is available in the R package shapFlex.

-

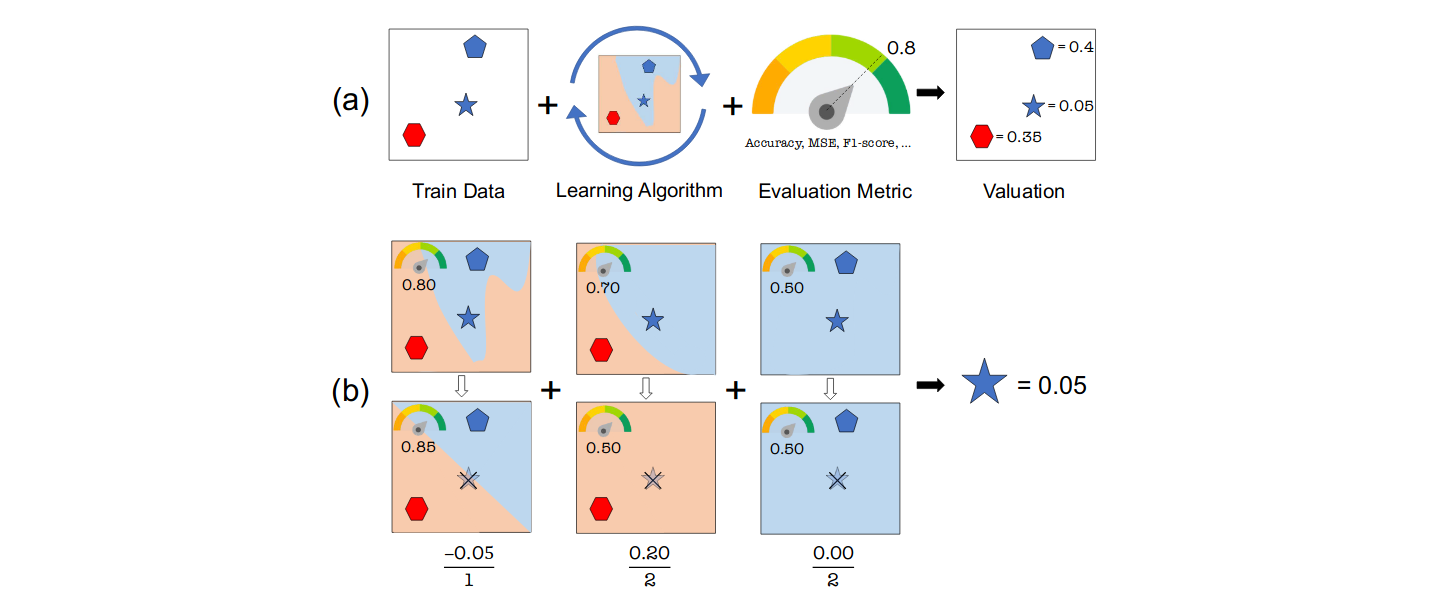

Data Shapley: Equitable Valuation of Data for Machine Learning; Amirata Ghorbani, James Zou; As data becomes the fuel driving technological and economic growth, a fundamental challenge is how to quantify the value of data in algorithmic predictions and decisions. For example, in healthcare and consumer markets, it has been suggested that individuals should be compensated for the data that they generate, but it is not clear what is an equitable valuation for individual data. In this work, we develop a principled framework to address data valuation in the context of supervised machine learning. Given a learning algorithm trained on n data points to produce a predictor, we propose data Shapley as a metric to quantify the value of each training datum to the predictor performance. Data Shapley value uniquely satisfies several natural properties of equitable data valuation. We develop Monte Carlo and gradient-based methods to efficiently estimate data Shapley values in practical settings where complex learning algorithms, including neural networks, are trained on large datasets. In addition to being equitable, extensive experiments across biomedical, image and synthetic data demonstrate that data Shapley has several other benefits: 1) it is more powerful than the popular leave-one-out or leverage score in providing insight on what data is more valuable for a given learning task; 2) low Shapley value data effectively capture outliers and corruptions; 3) high Shapley value data inform what type of new data to acquire to improve the predictor.
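The Monte Carlo idea mentioned in the Data Shapley abstract can be illustrated with a minimal sketch, assuming plain permutation sampling (the paper's truncation and gradient-based variants are not shown). The function name `monte_carlo_data_shapley` and the toy utility `distinct_values` are hypothetical; in practice the utility would train a model on the subset and return its test performance.

```python
import random


def monte_carlo_data_shapley(points, utility, n_permutations=200, seed=0):
    """Estimate each point's Shapley value by averaging its marginal
    contribution to `utility` over random orderings of the data."""
    rng = random.Random(seed)
    n = len(points)
    totals = [0.0] * n
    for _ in range(n_permutations):
        order = list(range(n))
        rng.shuffle(order)
        subset = []
        previous = utility(subset)
        for idx in order:
            subset.append(points[idx])
            current = utility(subset)
            totals[idx] += current - previous  # marginal contribution of idx
            previous = current
    return [t / n_permutations for t in totals]


def distinct_values(subset):
    # Hypothetical toy utility: number of distinct values seen so far.
    return float(len(set(subset)))


# Two redundant points ("a", "a") and one unique point ("b").
values = monte_carlo_data_shapley(["a", "a", "b"], distinct_values)
print(values)
```

In this toy setting the unique point always adds one unit of utility, while the two duplicates share the credit for a single unit, so redundant data receives a lower value.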

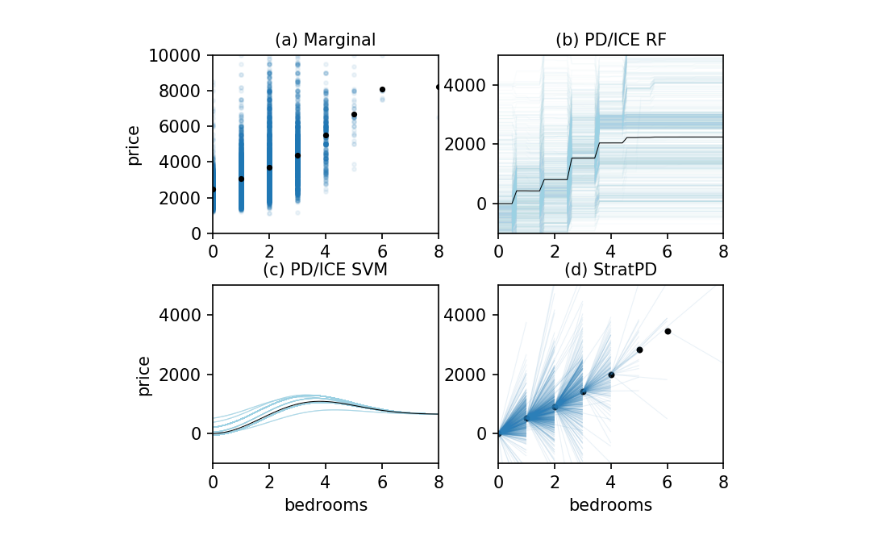

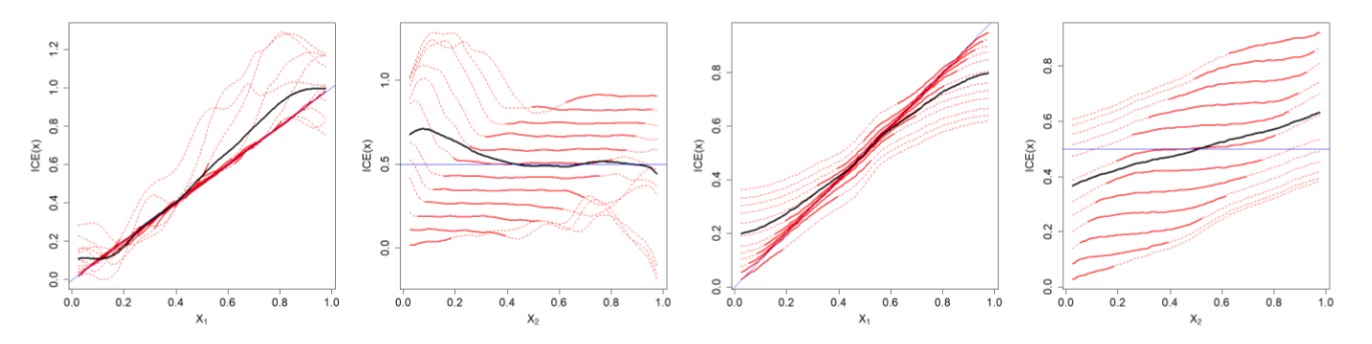

- A Stratification Approach to Partial Dependence for Codependent Variables; Terence Parr, James Wilson; Model interpretability is important to machine learning practitioners, and a key component of interpretation is the characterization of partial dependence of the response variable on any subset of features used in the model. The two most common strategies for assessing partial dependence suffer from a number of critical weaknesses. In the first strategy, linear regression model coefficients describe how a unit change in an explanatory variable changes the response, while holding other variables constant. But, linear regression is inapplicable for high dimensional (p>n) data sets and is often insufficient to capture the relationship between explanatory variables and the response. In the second strategy, Partial Dependence (PD) plots and Individual Conditional Expectation (ICE) plots give biased results for the common situation of codependent variables and they rely on fitted models provided by the user. When the supplied model is a poor choice due to systematic bias or overfitting, PD/ICE plots provide little (if any) useful information. To address these issues, we introduce a new strategy, called StratPD, that does not depend on a user's fitted model, provides accurate results in the presence of codependent variables, and is applicable to high dimensional settings. The strategy works by stratifying a data set into groups of observations that are similar, except in the variable of interest, through the use of a decision tree. Any fluctuations of the response variable within a group are likely due to the variable of interest. We apply StratPD to a collection of simulations and case studies to show that StratPD is a fast, reliable, and robust method for assessing partial dependence with clear advantages over state-of-the-art methods.

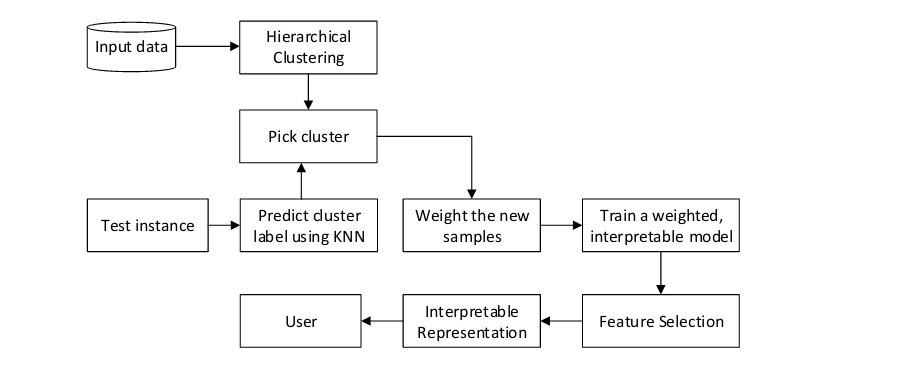

- DLIME: A Deterministic Local Interpretable Model-Agnostic Explanations Approach for Computer-Aided Diagnosis Systems; Muhammad Rehman Zafar, Naimul Mefraz Khan; While LIME and similar local algorithms have gained popularity due to their simplicity, the random perturbation and feature selection methods result in "instability" in the generated explanations, where for the same prediction, different explanations can be generated. This is a critical issue that can prevent deployment of LIME in a Computer-Aided Diagnosis (CAD) system, where stability is of utmost importance to earn the trust of medical professionals. In this paper, we propose a deterministic version of LIME. Instead of random perturbation, we utilize agglomerative Hierarchical Clustering (HC) to group the training data together and K-Nearest Neighbour (KNN) to select the relevant cluster of the new instance that is being explained. After finding the relevant cluster, a linear model is trained over the selected cluster to generate the explanations. Experimental results on three different medical datasets show the superiority for Deterministic Local Interpretable Model-Agnostic Explanations (DLIME), where we quantitatively determine the stability of DLIME compared to LIME utilizing the Jaccard similarity among multiple generated explanations.
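The stability measure named in the DLIME abstract, Jaccard similarity among multiple generated explanations, can be sketched as follows. This is a minimal illustration, assuming each explanation is reduced to the set of feature names it selects; the helper names and the feature names are hypothetical and not taken from the paper's code.

```python
from itertools import combinations


def jaccard(a, b):
    """Jaccard similarity of two feature sets: |A ∩ B| / |A ∪ B|."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0  # two empty explanations are treated as identical
    return len(a & b) / len(a | b)


def mean_pairwise_jaccard(explanations):
    """Average Jaccard similarity over all pairs of explanations."""
    pairs = list(combinations(explanations, 2))
    if not pairs:
        raise ValueError("need at least two explanations")
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Repeated runs of a deterministic explainer select the same features.
stable_runs = [["age", "bmi", "glucose"]] * 3
# Repeated runs of a randomized explainer may select different features.
unstable_runs = [
    ["age", "bmi", "glucose"],
    ["age", "bmi", "insulin"],
    ["age", "pressure", "insulin"],
]

stable_score = mean_pairwise_jaccard(stable_runs)
unstable_score = mean_pairwise_jaccard(unstable_runs)
print(stable_score, unstable_score)
```

A score of 1 means every run produced the same feature set; lower scores indicate that repeated explanations of the same prediction disagree.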

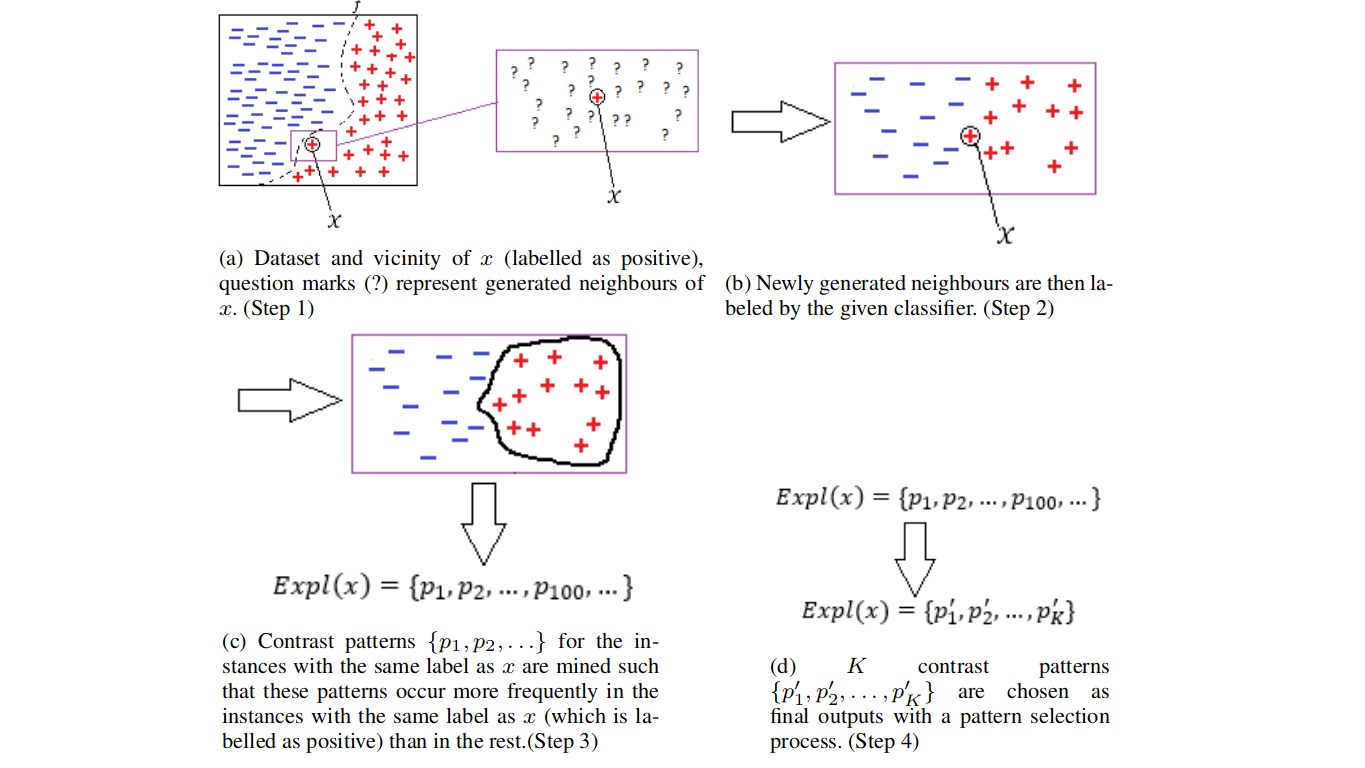

- Exploiting patterns to explain individual predictions; Yunzhe Jia, James Bailey, Kotagiri Ramamohanarao, Christopher Leckie, Xingjun Ma; Users need to understand the predictions of a classifier, especially when decisions based on the predictions can have severe consequences. The explanation of a prediction reveals the reason why a classifier makes a certain prediction and it helps users to accept or reject the prediction with greater confidence. This paper proposes an explanation method called Pattern Aided Local Explanation (PALEX) to provide instance-level explanations for any classifier. PALEX takes a classifier, a test instance and a frequent pattern set summarizing the training data of the classifier as inputs, then outputs the supporting evidence that the classifier considers important for the prediction of the instance. To study the local behavior of a classifier in the vicinity of the test instance, PALEX uses the frequent pattern set from the training data as an extra input to guide generation of new synthetic samples in the vicinity of the test instance. Contrast patterns are also used in PALEX to identify locally discriminative features in the vicinity of a test instance. PALEX is particularly effective for scenarios where there exist multiple explanations.

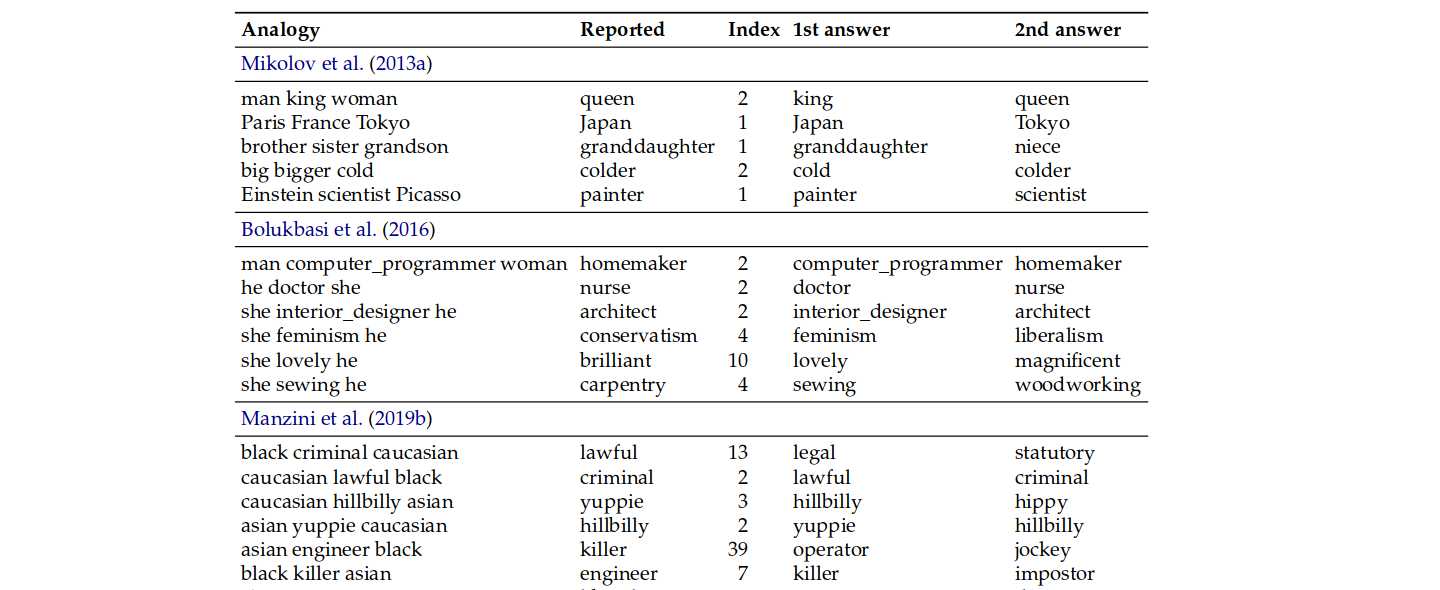

- Fair is Better than Sensational: Man is to Doctor as Woman is to Doctor; Malvina Nissim, Rik van Noord, Rob van der Goot; Analogies such as man is to king as woman is to X are often used to illustrate the amazing power of word embeddings. Concurrently, they have also exposed how strongly human biases are encoded in vector spaces built on natural language. While finding that queen is the answer to man is to king as woman is to X leaves us in awe, papers have also reported finding analogies deeply infused with human biases, like man is to computer programmer as woman is to homemaker, which instead leave us with worry and rage. In this work we show that, often unknowingly, embedding spaces have not been treated fairly. Through a series of simple experiments, we highlight practical and theoretical problems in previous works, and demonstrate that some of the most widely used biased analogies are in fact not supported by the data.

- Interpretable Counterfactual Explanations Guided by Prototypes; Arnaud Van Looveren, Janis Klaise; We propose a fast, model agnostic method for finding interpretable counterfactual explanations of classifier predictions by using class prototypes. We show that class prototypes, obtained using either an encoder or through class specific k-d trees, significantly speed up the search for counterfactual instances and result in more interpretable explanations. We introduce two novel metrics to quantitatively evaluate local interpretability at the instance level. We use these metrics to illustrate the effectiveness of our method on an image and tabular dataset, respectively MNIST and Breast Cancer Wisconsin (Diagnostic).

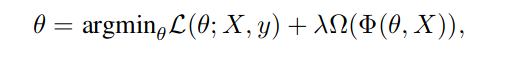

- Learning Explainable Models Using Attribution Priors; Gabriel Erion, Joseph D. Janizek, Pascal Sturmfels, Scott Lundberg, Su-In Lee; Two important topics in deep learning both involve incorporating humans into the modeling process: Model priors transfer information from humans to a model by constraining the model's parameters; Model attributions transfer information from a model to humans by explaining the model's behavior. We propose connecting these topics with attribution priors, which allow humans to use the common language of attributions to enforce prior expectations about a model's behavior during training. We develop a differentiable axiomatic feature attribution method called expected gradients and show how to directly regularize these attributions during training. We demonstrate the broad applicability of attribution priors: 1) on image data, 2) on gene expression data, 3) on a health care dataset.

-

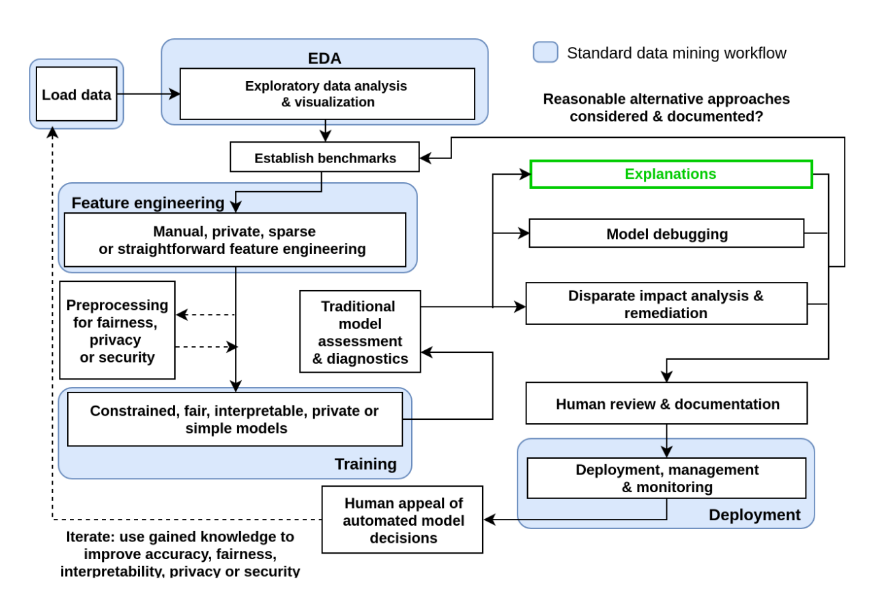

Guidelines for Responsible and Human-Centered Use of Explainable Machine Learning; Patrick Hall; Explainable machine learning (ML) has been implemented in numerous open source and proprietary software packages and explainable ML is an important aspect of commercial predictive modeling. However, explainable ML can be misused, particularly as a faulty safeguard for harmful black-boxes, e.g. fairwashing, and for other malevolent purposes like model stealing. This text discusses definitions, examples, and guidelines that promote a holistic and human-centered approach to ML which includes interpretable (i.e. white-box ) models and explanatory, debugging, and disparate impact analysis techniques.

-

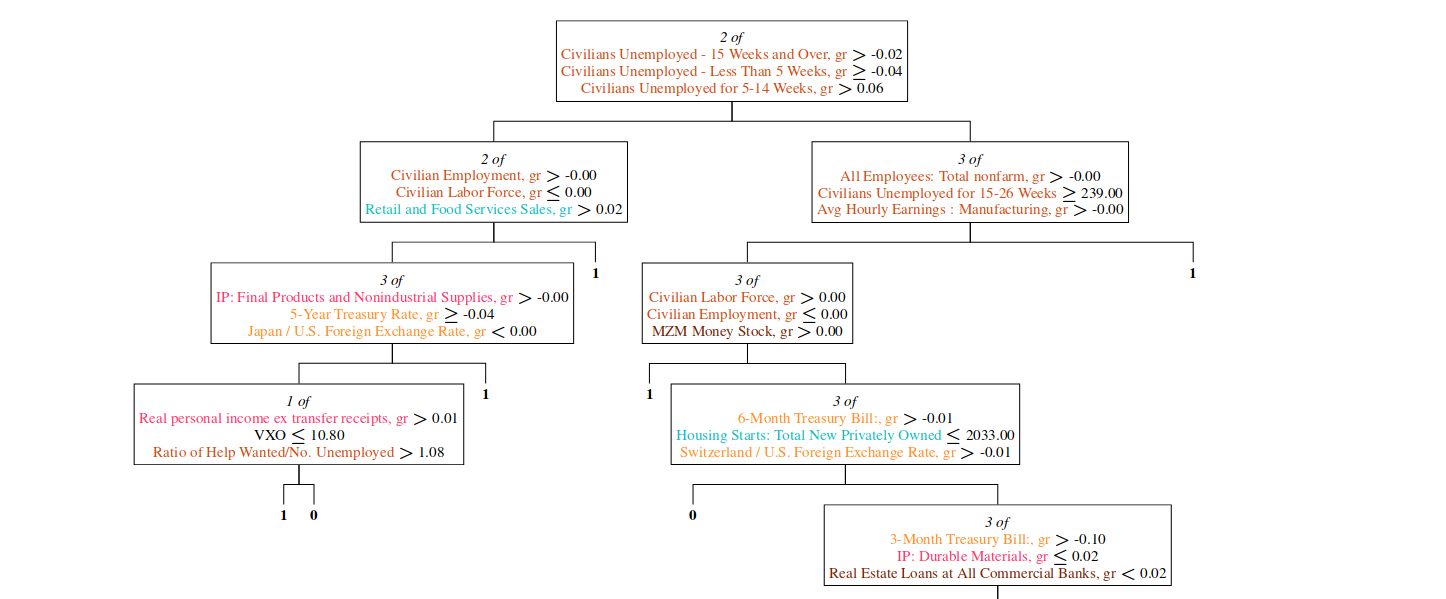

Concept Tree: High-Level Representation of Variables for More Interpretable Surrogate Decision Trees; Xavier Renard, Nicolas Woloszko, Jonathan Aigrain, Marcin Detyniecki; Interpretable surrogates of black-box predictors trained on high-dimensional tabular datasets can struggle to generate comprehensible explanations in the presence of correlated variables. We propose a model-agnostic interpretable surrogate that provides global and local explanations of black-box classifiers to address this issue. We introduce the idea of concepts as intuitive groupings of variables that are either defined by a domain expert or automatically discovered using correlation coefficients. Concepts are embedded in a surrogate decision tree to enhance its comprehensibility.

- The Secrets of Machine Learning: Ten Things You Wish You Had Known Earlier to be More Effective at Data Analysis; Cynthia Rudin, David Carlson; Despite the widespread usage of machine learning throughout organizations, there are some key principles that are commonly missed. In particular: 1) There are at least four main families for supervised learning: logical modeling methods, linear combination methods, case-based reasoning methods, and iterative summarization methods. 2) For many application domains, almost all machine learning methods perform similarly (with some caveats). Deep learning methods, which are the leading technique for computer vision problems, do not maintain an edge over other methods for most problems (and there are reasons why). 3) Neural networks are hard to train and weird stuff often happens when you try to train them. 4) If you don't use an interpretable model, you can make bad mistakes. 5) Explanations can be misleading and you can't trust them. 6) You can pretty much always find an accurate-yet-interpretable model, even for deep neural networks. 7) Special properties such as decision making or robustness must be built in, they don't happen on their own. 8) Causal inference is different than prediction (correlation is not causation). 9) There is a method to the madness of deep neural architectures, but not always. 10) It is a myth that artificial intelligence can do anything.

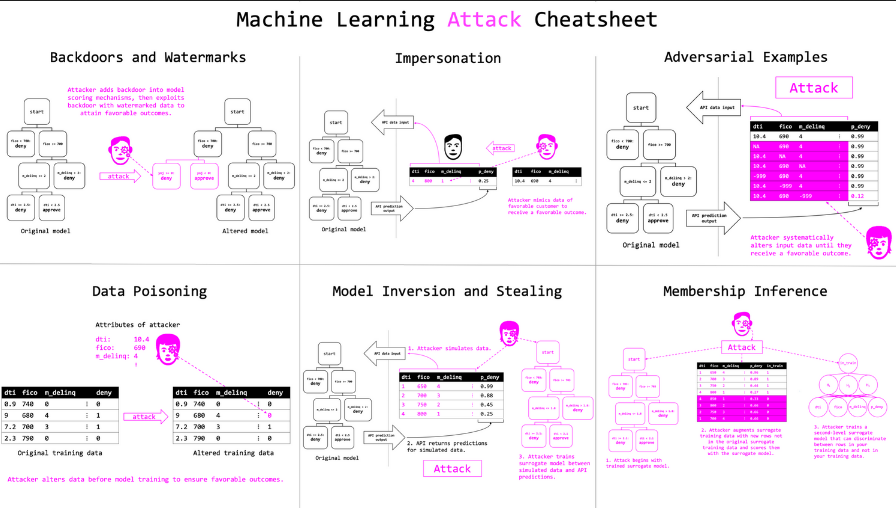

- Proposals for model vulnerability and security; Patrick Hall; Apply fair and private models, white-hat and forensic model debugging, and common sense to protect machine learning models from malicious actors.

- On Explainable Machine Learning Misconceptions and A More Human-Centered Machine Learning; Patrick Hall; Due to obvious community and commercial demand, explainable machine learning (ML) methods have already been implemented in popular open source software and in commercial software. Yet, as someone who has been involved in the implementation of explainable ML software for the past three years, I find a lot of what I read about the topic confusing and detached from my personal, hands-on experiences. This short text presents arguments, proposals, and references to address some observed explainable ML misconceptions.

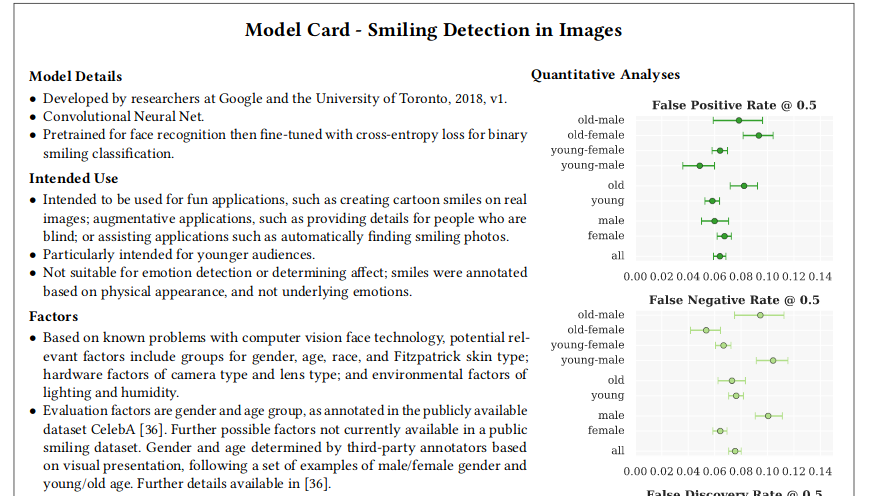

- Model Cards for Model Reporting; Margaret Mitchell, Simone Wu, Andrew Zaldivar, Parker Barnes, Lucy Vasserman, Ben Hutchinson, Elena Spitzer, Inioluwa Deborah Raji, Timnit Gebru; Trained machine learning models are increasingly used to perform high-impact tasks in areas such as law enforcement, medicine, education, and employment. In order to clarify the intended use cases of machine learning models and minimize their usage in contexts for which they are not well suited, we recommend that released models be accompanied by documentation detailing their performance characteristics. In this paper, we propose a framework that we call model cards, to encourage such transparent model reporting. Model cards are short documents accompanying trained machine learning models that provide benchmarked evaluation in a variety of conditions, such as across different cultural, demographic, or phenotypic groups (e.g., race, geographic location, sex, Fitzpatrick skin type) and intersectional groups (e.g., age and race, or sex and Fitzpatrick skin type) that are relevant to the intended application domains. Model cards also disclose the context in which models are intended to be used, details of the performance evaluation procedures, and other relevant information. While we focus primarily on human-centered machine learning models in the application fields of computer vision and natural language processing, this framework can be used to document any trained machine learning model. To solidify the concept, we provide cards for two supervised models: One trained to detect smiling faces in images, and one trained to detect toxic comments in text. We propose model cards as a step towards the responsible democratization of machine learning and related AI technology, increasing transparency into how well AI technology works. We hope this work encourages those releasing trained machine learning models to accompany model releases with similar detailed evaluation numbers and other relevant documentation.

- Unbiased Measurement of Feature Importance in Tree-Based Methods; Zhengze Zhou, Giles Hooker; We propose a modification that corrects for split-improvement variable importance measures in Random Forests and other tree-based methods. These methods have been shown to be biased towards increasing the importance of features with more potential splits. We show that by appropriately incorporating split-improvement as measured on out of sample data, this bias can be corrected yielding better summaries and screening tools.

- Please Stop Permuting Features: An Explanation and Alternatives; Giles Hooker, Lucas Mentch; This paper advocates against permute-and-predict (PaP) methods for interpreting black box functions. Methods such as the variable importance measures proposed for random forests, partial dependence plots, and individual conditional expectation plots remain popular because of their ability to provide model-agnostic measures that depend only on the pre-trained model output. However, numerous studies have found that these tools can produce diagnostics that are highly misleading, particularly when there is strong dependence among features. Rather than simply add to this growing literature by further demonstrating such issues, here we seek to provide an explanation for the observed behavior. In particular, we argue that breaking dependencies between features in hold-out data places undue emphasis on sparse regions of the feature space by forcing the original model to extrapolate to regions where there is little to no data. We explore these effects through various settings where a ground-truth is understood and find support for previous claims in the literature that PaP metrics tend to over-emphasize correlated features both in variable importance and partial dependence plots, even though applying permutation methods to the ground-truth models do not. As an alternative, we recommend more direct approaches that have proven successful in other settings: explicitly removing features, conditional permutations, or model distillation methods.
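The extrapolation argument in the abstract above can be illustrated with a small sketch of permute-and-predict importance on two strongly dependent features. Everything here is an assumption for illustration: the synthetic data, the stand-in `model`, and the helper names are hypothetical and are not taken from the paper.

```python
import random


def permute_column(rows, col, rng):
    """Return a copy of rows with the values of one column shuffled across rows."""
    column = [row[col] for row in rows]
    rng.shuffle(column)
    return [row[:col] + (value,) + row[col + 1:] for row, value in zip(rows, column)]


def mse(model, rows, targets):
    return sum((model(row) - y) ** 2 for row, y in zip(rows, targets)) / len(rows)


def pap_importance(model, rows, targets, col, rng):
    """Permute-and-predict importance: increase in error after shuffling one column."""
    permuted = permute_column(rows, col, rng)
    return mse(model, permuted, targets) - mse(model, rows, targets)


def max_gap(rows):
    """Largest |x1 - x2| in the data; small when the two features move together."""
    return max(abs(x1 - x2) for x1, x2 in rows)


rng = random.Random(0)
x1 = [rng.random() for _ in range(200)]
# x2 is almost a copy of x1, so the observed data lie close to the diagonal.
rows = [(a, a + rng.uniform(-0.05, 0.05)) for a in x1]
targets = x1[:]


def model(row):
    # Stand-in for a fitted model that happens to use only the first feature.
    return row[0]


importance_x1 = pap_importance(model, rows, targets, 0, rng)
importance_x2 = pap_importance(model, rows, targets, 1, rng)
gap_before = max_gap(rows)
gap_after = max_gap(permute_column(rows, 0, rng))
print(importance_x1, importance_x2, gap_before, gap_after)
```

Shuffling one column breaks the dependence between the features, so the permuted rows fall far from the diagonal where all observed data lie. The model is then evaluated in a region it never saw during training, which is the mechanism the paper identifies as the source of misleading diagnostics.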

- Why should you trust my interpretation? Understanding uncertainty in LIME predictions; Hui Fen (Sarah) Tan, Kuangyan Song, Madeleine Udell, Yiming Sun, Yujia Zhang; Methods for interpreting machine learning black-box models increase the outcomes' transparency and in turn generate insight into the reliability and fairness of the algorithms. However, the interpretations themselves could contain significant uncertainty that undermines the trust in the outcomes and raises concern about the model's reliability. Focusing on the method "Local Interpretable Model-agnostic Explanations" (LIME), we demonstrate the presence of two sources of uncertainty, namely the randomness in its sampling procedure and the variation of interpretation quality across different input data points. Such uncertainty is present even in models with high training and test accuracy. We apply LIME to synthetic data and two public data sets, text classification in 20 Newsgroup and recidivism risk-scoring in COMPAS, to support our argument.

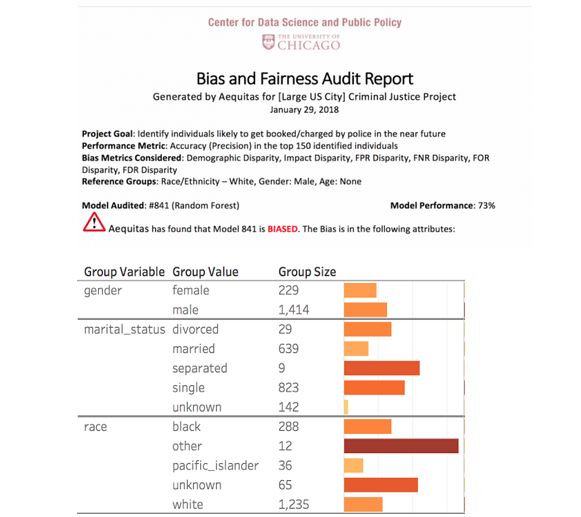

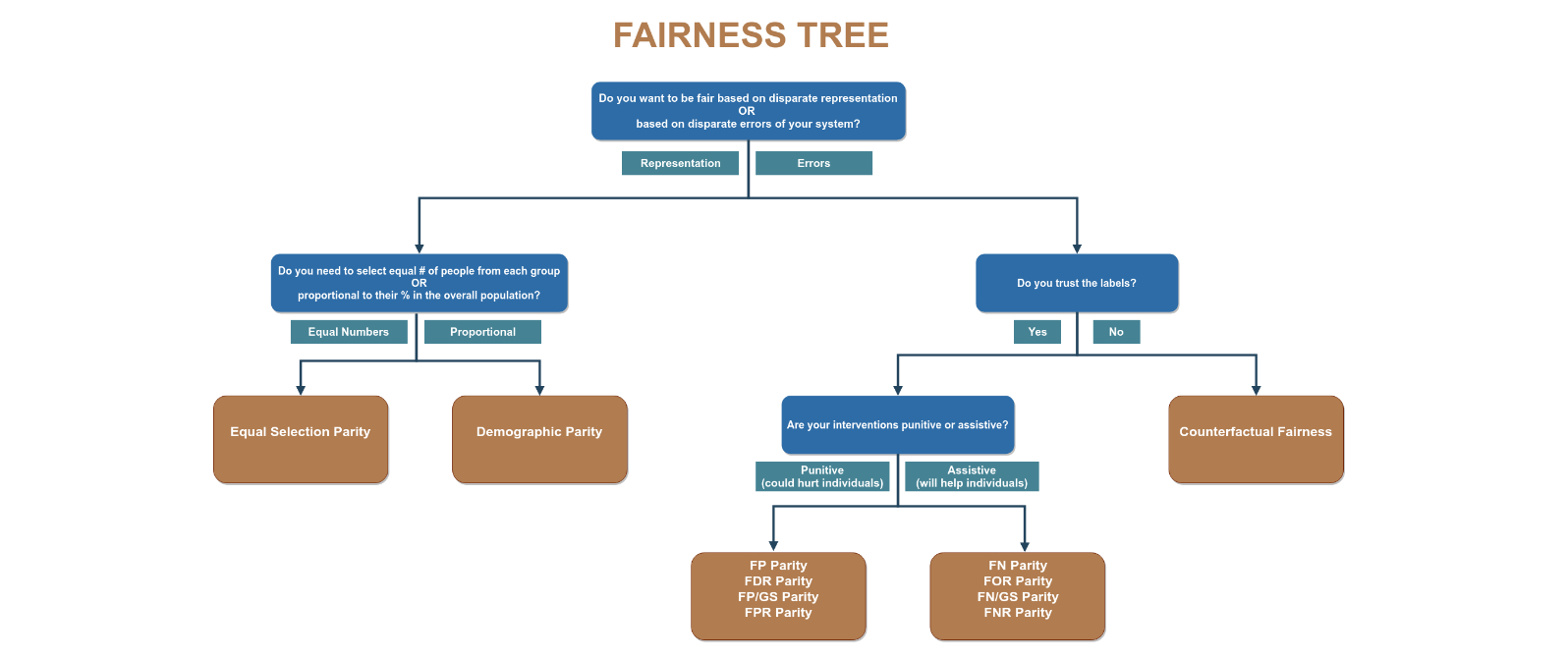

- Aequitas: A Bias and Fairness Audit Toolkit; Pedro Saleiro, Benedict Kuester, Loren Hinkson, Jesse London, Abby Stevens, Ari Anisfeld, Kit T. Rodolfa, Rayid Ghani; Recent work has raised concerns on the risk of unintended bias in AI systems being used nowadays that can affect individuals unfairly based on race, gender or religion, among other possible characteristics. While a lot of bias metrics and fairness definitions have been proposed in recent years, there is no consensus on which metric/definition should be used and there are very few available resources to operationalize them. Therefore, despite recent awareness, auditing for bias and fairness when developing and deploying AI systems is not yet a standard practice. We present Aequitas, an open source bias and fairness audit toolkit that is an intuitive and easy to use addition to the machine learning workflow, enabling users to seamlessly test models for several bias and fairness metrics in relation to multiple population sub-groups. Aequitas facilitates informed and equitable decisions around developing and deploying algorithmic decision making systems for both data scientists, machine learning researchers and policymakers.

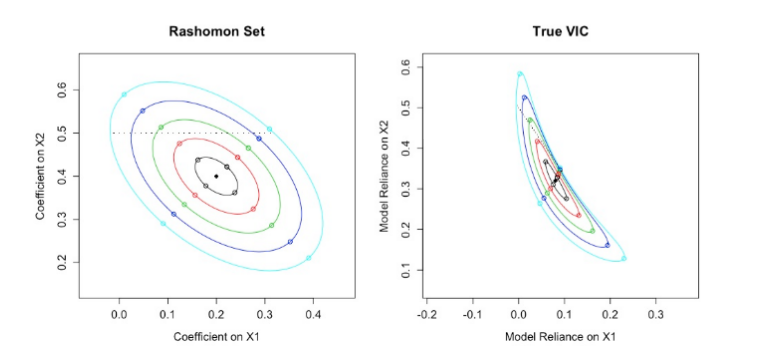

- Variable Importance Clouds: A Way to Explore Variable Importance for the Set of Good Models; Jiayun Dong, Cynthia Rudin; Variable importance is central to scientific studies, including the social sciences and causal inference, healthcare, and in other domains. However, current notions of variable importance are often tied to a specific predictive model. This is problematic: what if there were multiple well-performing predictive models, and a specific variable is important to some of them and not to others? In that case, we may not be able to tell from a single well-performing model whether a variable is always important in predicting the outcome. Rather than depending on variable importance for a single predictive model, we would like to explore variable importance for all approximately-equally-accurate predictive models. This work introduces the concept of a variable importance cloud, which maps every variable to its importance for every good predictive model. We show properties of the variable importance cloud and draw connections to other areas of statistics. We introduce variable importance diagrams as a projection of the variable importance cloud into two dimensions for visualization purposes.

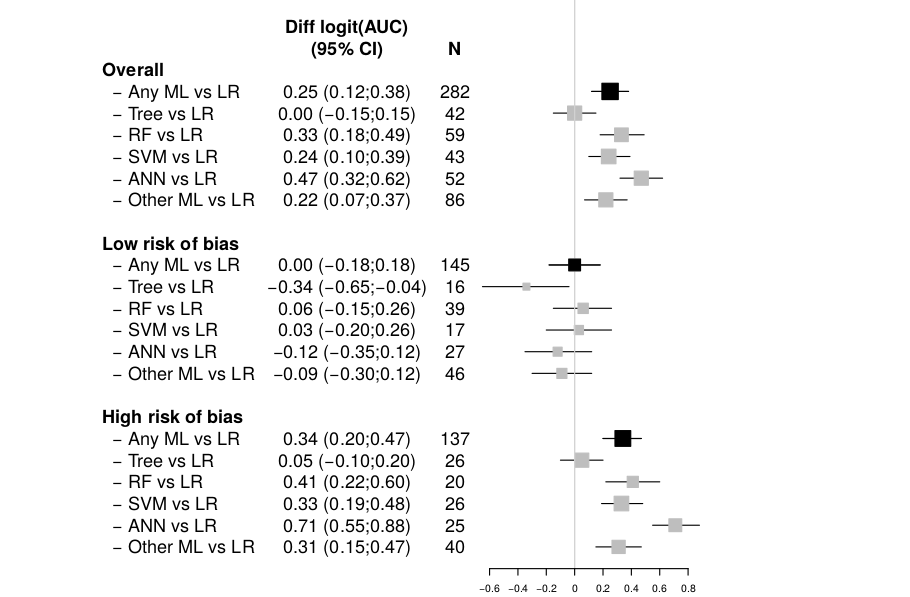

- A systematic review shows no performance benefit of machine learning over logistic regression for clinical prediction models; Evangelia Christodoulou, Jie Ma, Gary Collins, Ewout Steyerberg, Jan Y. Verbakel, Ben Van Calster; Objectives: The objective of this study was to compare performance of logistic regression (LR) with machine learning (ML) for clinical prediction modeling in the literature. Study Design and Setting: We conducted a Medline literature search (1/2016 to 8/2017) and extracted comparisons between LR and ML models for binary outcomes. Results: We included 71 of 927 studies. The median sample size was 1,250 (range 72–3,994,872), with 19 predictors considered (range 5–563) and eight events per predictor (range 0.3–6,697). The most common ML methods were classification trees, random forests, artificial neural networks, and support vector machines. In 48 (68%) studies, we observed potential bias in the validation procedures. Sixty-four (90%) studies used the area under the receiver operating characteristic curve (AUC) to assess discrimination. Calibration was not addressed in 56 (79%) studies. We identified 282 comparisons between an LR and ML model (AUC range, 0.52–0.99). For 145 comparisons at low risk of bias, the difference in logit(AUC) between LR and ML was 0.00 (95% confidence interval, −0.18 to 0.18). For 137 comparisons at high risk of bias, logit(AUC) was 0.34 (0.20–0.47) higher for ML. Conclusion: We found no evidence of superior performance of ML over LR. Improvements in methodology and reporting are needed for studies that compare modeling algorithms.

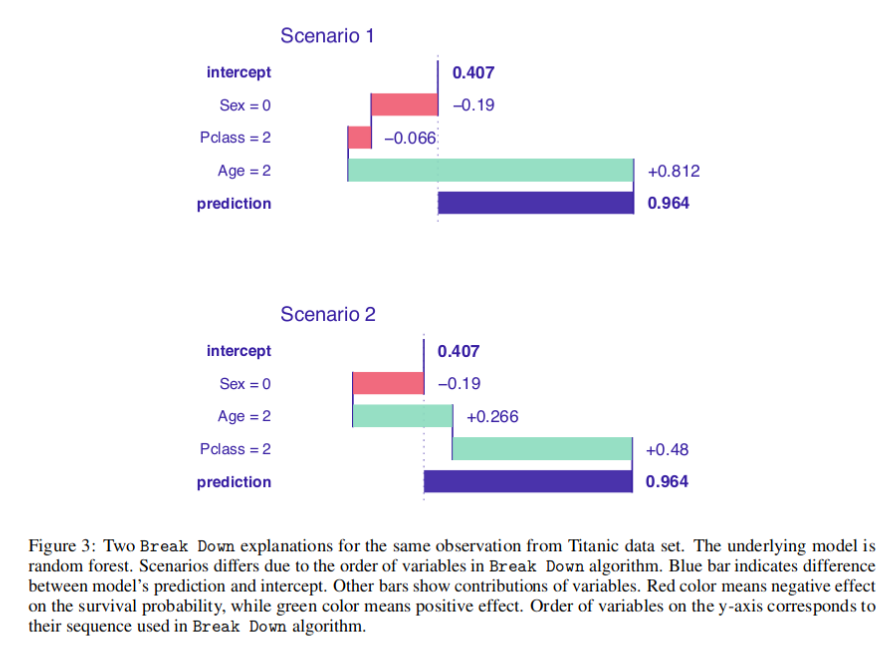

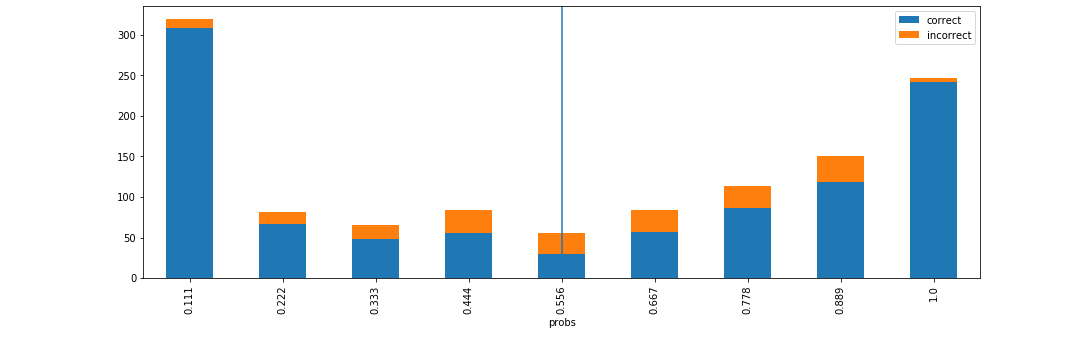

- iBreakDown: Uncertainty of Model Explanations for Non-additive Predictive Models; Alicja Gosiewska, Przemyslaw Biecek; Explainable Artificial Intelligence (XAI) brings a lot of attention recently. Explainability is being presented as a remedy for lack of trust in model predictions. Model agnostic tools such as LIME, SHAP, or Break Down promise instance level interpretability for any complex machine learning model. But how certain are these explanations? Can we rely on additive explanations for non-additive models? In this paper, we examine the behavior of model explainers under the presence of interactions. We define two sources of uncertainty, model level uncertainty, and explanation level uncertainty. We show that adding interactions reduces explanation level uncertainty. We introduce a new method iBreakDown that generates non-additive explanations with local interaction.

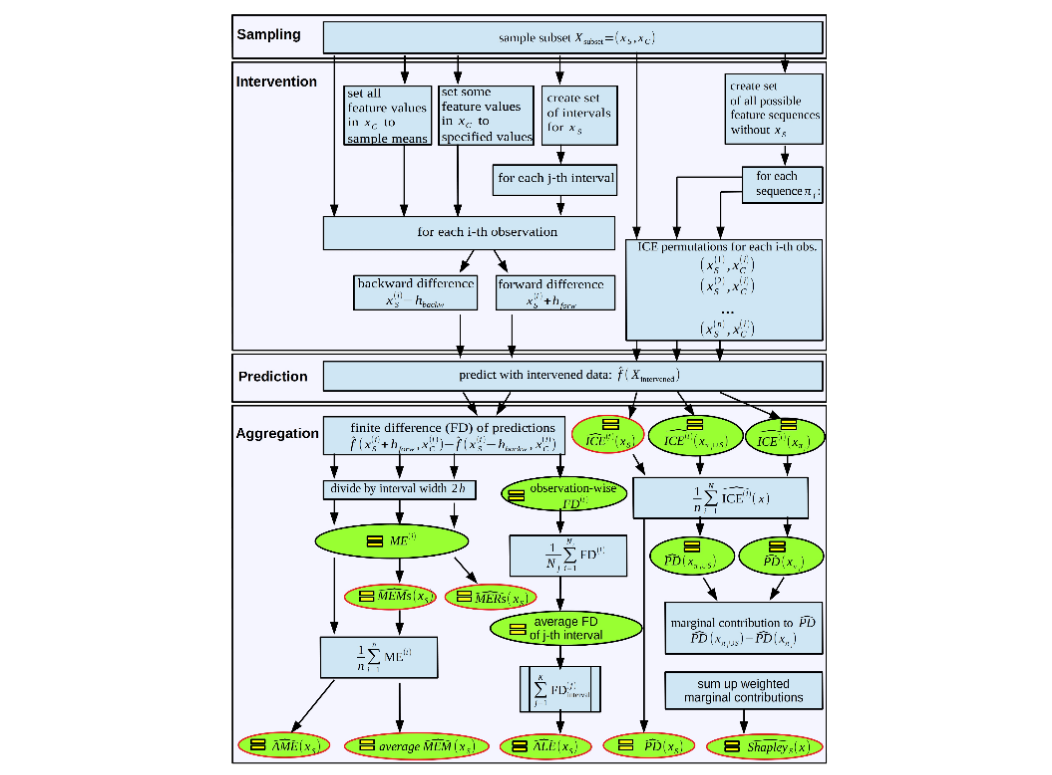

- Sampling, Intervention, Prediction, Aggregation: A Generalized Framework for Model Agnostic Interpretations; Christian A. Scholbeck, Christoph Molnar, Christian Heumann, Bernd Bischl, Giuseppe Casalicchio; Non-linear machine learning models often trade off a great predictive performance for a lack of interpretability. However, model agnostic interpretation techniques now allow us to estimate the effect and importance of features for any predictive model. Different notations and terminology have complicated their understanding and how they are related. A unified view on these methods has been missing. We present the generalized SIPA (Sampling, Intervention, Prediction, Aggregation) framework of work stages for model agnostic interpretation techniques and demonstrate how several prominent methods for feature effects can be embedded into the proposed framework. We also formally introduce pre-existing marginal effects to describe feature effects for black box models. Furthermore, we extend the framework to feature importance computations by pointing out how variance-based and performance-based importance measures are based on the same work stages. The generalized framework may serve as a guideline to conduct model agnostic interpretations in machine learning.

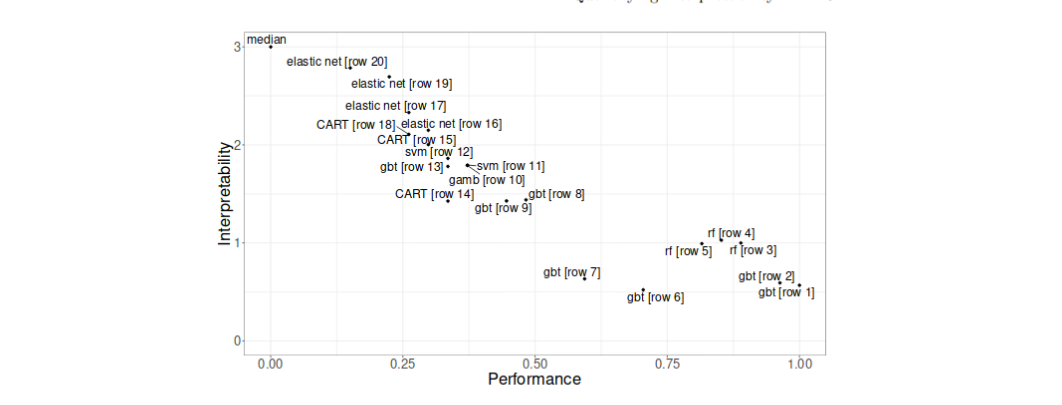

- Quantifying Interpretability of Arbitrary Machine Learning Models Through Functional Decomposition; Christoph Molnar, Giuseppe Casalicchio, Bernd Bischl; To obtain interpretable machine learning models, either interpretable models are constructed from the outset - e.g. shallow decision trees, rule lists, or sparse generalized linear models - or post-hoc interpretation methods - e.g. partial dependence or ALE plots - are employed. Both approaches have disadvantages. While the former can restrict the hypothesis space too conservatively, leading to potentially suboptimal solutions, the latter can produce too verbose or misleading results if the resulting model is too complex, especially w.r.t. feature interactions. We propose to make the compromise between predictive power and interpretability explicit by quantifying the complexity / interpretability of machine learning models. Based on functional decomposition, we propose measures of number of features used, interaction strength and main effect complexity. We show that post-hoc interpretation of models that minimize the three measures becomes more reliable and compact. Furthermore, we demonstrate the application of such measures in a multi-objective optimization approach which considers predictive power and interpretability at the same time.

- One pixel attack for fooling deep neural networks; Jiawei Su, Danilo Vasconcellos Vargas, Sakurai Kouichi; Recent research has revealed that the output of Deep Neural Networks (DNN) can be easily altered by adding relatively small perturbations to the input vector. In this paper, we analyze an attack in an extremely limited scenario where only one pixel can be modified. For that we propose a novel method for generating one-pixel adversarial perturbations based on differential evolution (DE). It requires less adversarial information (a black-box attack) and can fool more types of networks due to the inherent features of DE. The results show that 68.36% of the natural images in CIFAR-10 test dataset and 41.22% of the ImageNet (ILSVRC 2012) validation images can be perturbed to at least one target class by modifying just one pixel with 73.22% and 5.52% confidence on average. Thus, the proposed attack explores a different take on adversarial machine learning in an extreme limited scenario, showing that current DNNs are also vulnerable to such low dimension attacks. Besides, we also illustrate an important application of DE (or broadly speaking, evolutionary computation) in the domain of adversarial machine learning: creating tools that can effectively generate low-cost adversarial attacks against neural networks for evaluating robustness.

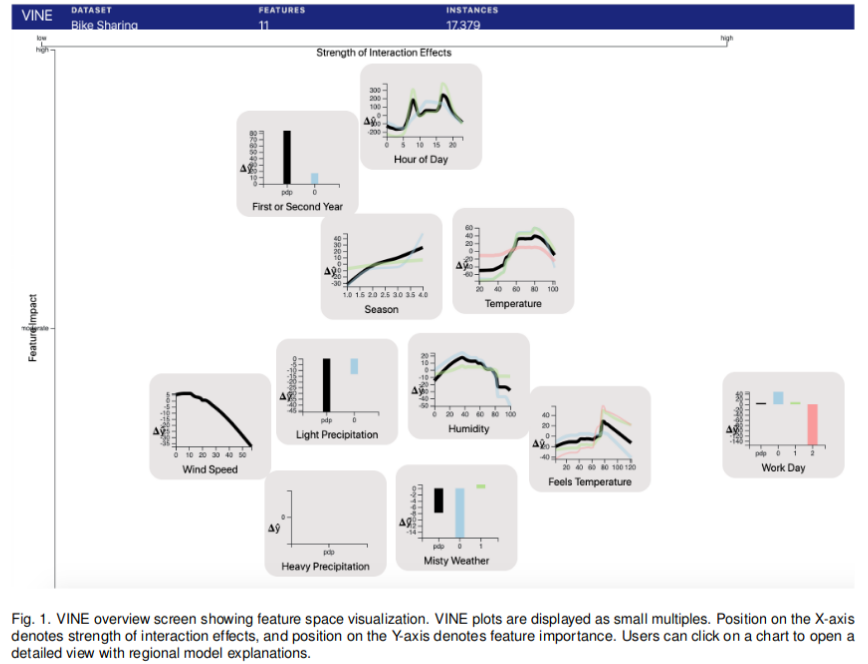

- VINE: Visualizing Statistical Interactions in Black Box Models; Matthew Britton; As machine learning becomes more pervasive, there is an urgent need for interpretable explanations of predictive models. Prior work has developed effective methods for visualizing global model behavior, as well as generating local (instance-specific) explanations. However, relatively little work has addressed regional explanations - how groups of similar instances behave in a complex model, and the related issue of visualizing statistical feature interactions. The lack of utilities available for these analytical needs hinders the development of models that are mission-critical, transparent, and align with social goals. We present VINE (Visual INteraction Effects), a novel algorithm to extract and visualize statistical interaction effects in black box models. We also present a novel evaluation metric for visualizations in the interpretable ML space.

- Clinical applications of machine learning algorithms: beyond the black box; David Watson et al; Machine learning algorithms may radically improve our ability to diagnose and treat disease; For moral, legal, and scientific reasons, it is essential that doctors and patients be able to understand and explain the predictions of these models; Scalable, customisable, and ethical solutions can be achieved by working together with relevant stakeholders, including patients, data scientists, and policy makers

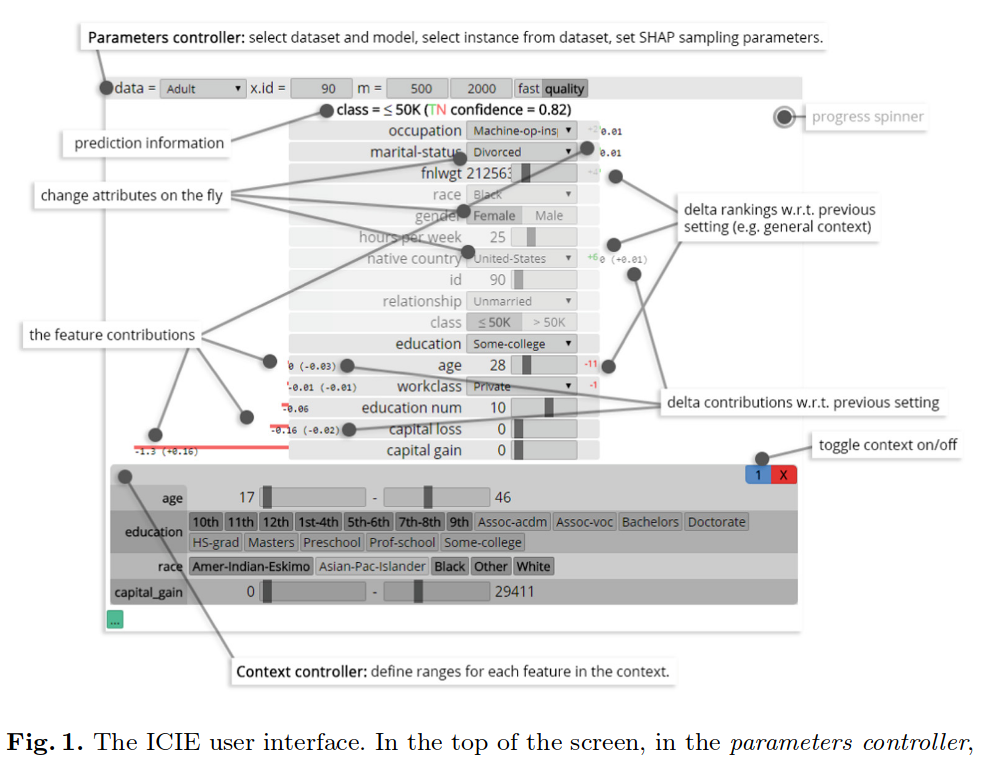

- ICIE 1.0: A Novel Tool for Interactive Contextual Interaction Explanations; Simon B. van der Zon et al; With the rise of new laws around privacy and awareness, explanation of automated decision making becomes increasingly important. Nowadays, machine learning models are used to aid experts in domains such as banking and insurance to find suspicious transactions, approve loans and credit card applications. Companies using such systems have to be able to provide the rationale behind their decisions; blindly relying on the trained model is not sufficient. There are currently a number of methods that provide insights in models and their decisions, but often they are either good at showing global or local behavior. Global behavior is often too complex to visualize or comprehend, so approximations are shown, and visualizing local behavior is often misleading as it is difficult to define what local exactly means (i.e. our methods don’t “know” how easily a feature-value can be changed; which ones are flexible, and which ones are static). We introduce the ICIE framework (Interactive Contextual Interaction Explanations) which enables users to view explanations of individual instances under different contexts. We will see that various contexts for the same case lead to different explanations, revealing different feature interactions.

- Explanation in Human-AI Systems: A Literature Meta-Review, Synopsis of Key Ideas and Publications, and Bibliography for Explainable AI; Shane T. Mueller, Robert R. Hoffman, William Clancey, Abigail Emrey, Gary Klein; This is an integrative review that addresses the question, "What makes for a good explanation?" with reference to AI systems. Pertinent literatures are vast. Thus, this review is necessarily selective. That said, most of the key concepts and issues are expressed in this Report. The Report encapsulates the history of computer science efforts to create systems that explain and instruct (intelligent tutoring systems and expert systems). The Report expresses the explainability issues and challenges in modern AI, and presents capsule views of the leading psychological theories of explanation. Certain articles stand out by virtue of their particular relevance to XAI, and their methods, results, and key points are highlighted.

-

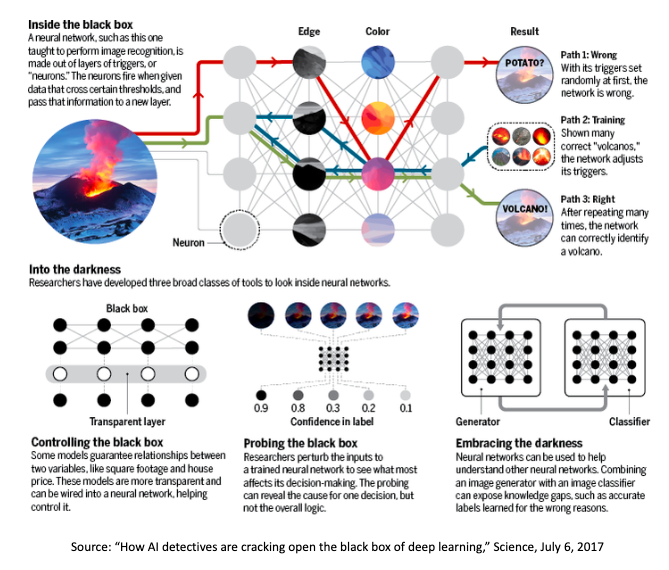

Explaining Explanations: An Overview of Interpretability of Machine Learning; Leilani H. Gilpin, David Bau, Ben Z. Yuan, Ayesha Bajwa, Michael Specter, Lalana Kagal; There has recently been a surge of work in explanatory artificial intelligence (XAI). This research area tackles the important problem that complex machines and algorithms often cannot provide insights into their behavior and thought processes. XAI allows users and parts of the internal system to be more transparent, providing explanations of their decisions in some level of detail. These explanations are important to ensure algorithmic fairness, identify potential bias/problems in the training data, and to ensure that the algorithms perform as expected. However, explanations produced by these systems is neither standardized nor systematically assessed. In an effort to create best practices and identify open challenges, we provide our definition of explainability and show how it can be used to classify existing literature. We discuss why current approaches to explanatory methods especially for deep neural networks are insufficient.

- SAFE ML: Surrogate Assisted Feature Extraction for Model Learning; Alicja Gosiewska, Aleksandra Gacek, Piotr Lubon, Przemyslaw Biecek; Complex black-box predictive models may have high accuracy, but opacity causes problems like lack of trust, lack of stability, sensitivity to concept drift. On the other hand, interpretable models require more work related to feature engineering, which is very time consuming. Can we train interpretable and accurate models, without timeless feature engineering? In this article, we show a method that uses elastic black-boxes as surrogate models to create simpler, less opaque, yet still accurate and interpretable glass-box models. New models are created on newly engineered features extracted/learned with the help of a surrogate model. We show applications of this method for model level explanations and possible extensions for instance level explanations. We also present an example implementation in Python and benchmark this method on a number of tabular data sets.

- Attention is not Explanation; Sarthak Jain, Byron C. Wallace; Attention mechanisms have seen wide adoption in neural NLP models. In addition to improving predictive performance, these are often touted as affording transparency: models equipped with attention provide a distribution over attended-to input units, and this is often presented (at least implicitly) as communicating the relative importance of inputs. However, it is unclear what relationship exists between attention weights and model outputs. In this work, we perform extensive experiments across a variety of NLP tasks that aim to assess the degree to which attention weights provide meaningful explanations for predictions. We find that they largely do not. For example, learned attention weights are frequently uncorrelated with gradient-based measures of feature importance, and one can identify very different attention distributions that nonetheless yield equivalent predictions. Our findings show that standard attention modules do not provide meaningful explanations and should not be treated as though they do.

- Efficient Search for Diverse Coherent Explanations; Chris Russell; This paper proposes new search algorithms for counterfactual explanations based upon mixed integer programming. We are concerned with complex data in which variables may take any value from a contiguous range or an additional set of discrete states. We propose a novel set of constraints that we refer to as a "mixed polytope" and show how this can be used with an integer programming solver to efficiently find coherent counterfactual explanations i.e. solutions that are guaranteed to map back onto the underlying data structure, while avoiding the need for brute-force enumeration. We also look at the problem of diverse explanations and show how these can be generated within our framework.

- Seven Myths in Machine Learning Research; Oscar Chang, Hod Lipson; As deep learning becomes more and more ubiquitous in high stakes applications like medical imaging, it is important to be careful of how we interpret decisions made by neural networks. For example, while it would be nice to have a CNN identify a spot on an MRI image as a malignant cancer-causing tumor, these results should not be trusted if they are based on fragile interpretation methods.

- Towards Aggregating Weighted Feature Attributions; Umang Bhatt, Pradeep Ravikumar, Jose M. F. Moura; Current approaches for explaining machine learning models fall into two distinct classes: antecedent event influence and value attribution. The former leverages training instances to describe how much influence a training point exerts on a test point, while the latter attempts to attribute value to the features most pertinent to a given prediction. In this work, we discuss an algorithm, AVA: Aggregate Valuation of Antecedents, that fuses these two explanation classes to form a new approach to feature attribution that not only retrieves local explanations but also captures global patterns learned by a model.

- An Evaluation of the Human-Interpretability of Explanation; Isaac Lage, Emily Chen, Jeffrey He, Menaka Narayanan, Been Kim, Sam Gershman, Finale Doshi-Velez; What kinds of explanation are truly human-interpretable remains poorly understood. This work advances our understanding of what makes explanations interpretable under three specific tasks that users may perform with machine learning systems: simulation of the response, verification of a suggested response, and determining whether the correctness of a suggested response changes under a change to the inputs. Through carefully controlled human-subject experiments, we identify regularizers that can be used to optimize for the interpretability of machine learning systems. Our results show that the type of complexity matters: cognitive chunks (newly defined concepts) affect performance more than variable repetitions, and these trends are consistent across tasks and domains. This suggests that there may exist some common design principles for explanation systems.

- Interpretable machine learning: definitions, methods, and applications; W. James Murdoch, Chandan Singh, Karl Kumbier, Reza Abbasi-Asl, and Bin Yu; Machine-learning models have demonstrated great success in learning complex patterns that enable them to make predictions about unobserved data. In addition to using models for prediction, the ability to interpret what a model has learned is receiving an increasing amount of attention. However, this increased focus has led to considerable confusion about the notion of interpretability. In particular, it is unclear how the wide array of proposed interpretation methods are related, and what common concepts can be used to evaluate them.

- Learning Optimal and Fair Decision Trees for Non-Discriminative Decision-Making; Sina Aghaei, Mohammad Javad Azizi, Phebe Vayanos; In recent years, automated data-driven decision-making systems have enjoyed a tremendous success in a variety of fields (e.g., to make product recommendations, or to guide the production of entertainment). More recently, these algorithms are increasingly being used to assist socially sensitive decision-making (e.g., to decide who to admit into a degree program or to prioritize individuals for public housing). Yet, these automated tools may result in discriminative decision-making in the sense that they may treat individuals unfairly or unequally based on membership to a category or a minority, resulting in disparate treatment or disparate impact and violating both moral and ethical standards. This may happen when the training dataset is itself biased (e.g., if individuals belonging to a particular group have historically been discriminated against). However, it may also happen when the training dataset is unbiased, if the errors made by the system affect individuals belonging to a category or minority differently (e.g., if misclassification rates for Blacks are higher than for Whites). In this paper, we unify the definitions of unfairness across classification and regression. We propose a versatile mixed-integer optimization framework for learning optimal and fair decision trees and variants thereof to prevent disparate treatment and/or disparate impact as appropriate. This translates to a flexible schema for designing fair and interpretable policies suitable for socially sensitive decision-making. We conduct extensive computational studies that show that our framework improves the state-of-the-art in the field (which typically relies on heuristics) to yield non-discriminative decisions at lower cost to overall accuracy.

- Understanding Individual Decisions of CNNs via Contrastive Backpropagation; Jindong Gu, Yinchong Yang, Volker Tresp; A number of backpropagation-based approaches such as DeConvNets, vanilla Gradient Visualization and Guided Backpropagation have been proposed to better understand individual decisions of deep convolutional neural networks. The saliency maps produced by them are proven to be non-discriminative. Recently, the Layer-wise Relevance Propagation (LRP) approach was proposed to explain the classification decisions of rectifier neural networks. In this work, we evaluate the discriminativeness of the generated explanations and analyze the theoretical foundation of LRP, i.e. Deep Taylor Decomposition. The experiments and analysis conclude that the explanations generated by LRP are not class-discriminative. Based on LRP, we propose Contrastive Layer-wise Relevance Propagation (CLRP), which is capable of producing instance-specific, class-discriminative, pixel-wise explanations. In the experiments, we use the CLRP to explain the decisions and understand the difference between neurons in individual classification decisions. We also evaluate the explanations quantitatively with a Pointing Game and an ablation study. Both qualitative and quantitative evaluations show that the CLRP generates better explanations than the LRP. The code is available.

2018

- Conversational Explanations of Machine Learning Predictions Through Class-contrastive Counterfactual Statements; Kacper Sokol, Peter Flach; Machine learning models have become pervasive in our everyday life; they decide on important matters influencing our education, employment and judicial system. Many of these predictive systems are commercial products protected by trade secrets, hence their decision-making is opaque. Therefore, in our research we address interpretability and explainability of predictions made by machine learning models. Our work draws heavily on human explanation research in social sciences: contrastive and exemplar explanations provided through a dialogue. This user-centric design, focusing on a lay audience rather than domain experts, applied to machine learning allows explainees to drive the explanation to suit their needs instead of being served a precooked template.

-

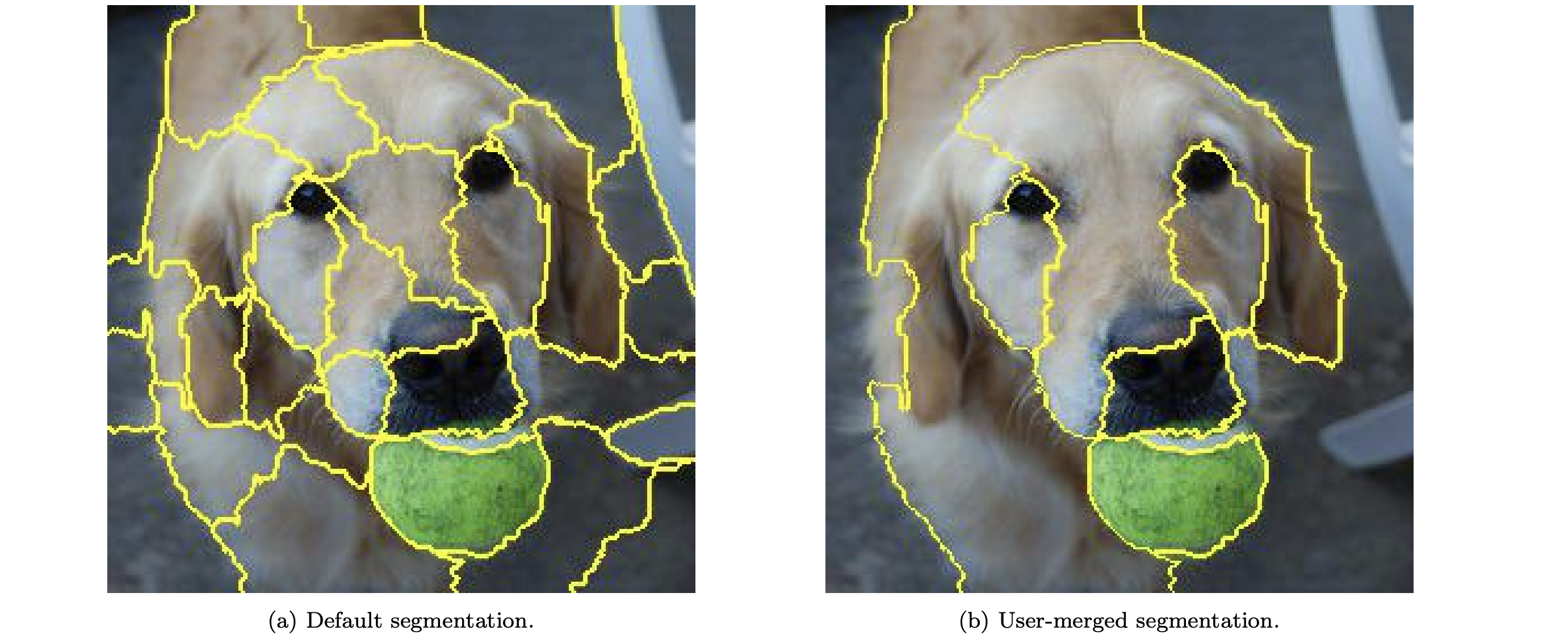

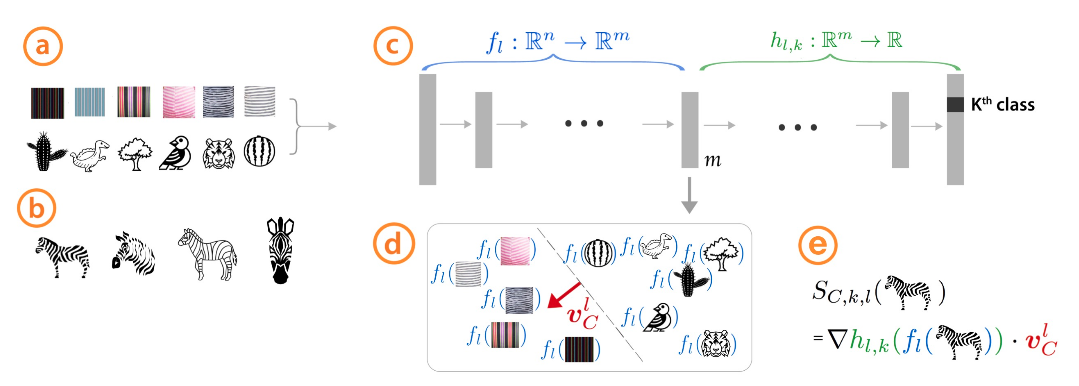

Interpretability Beyond Feature Attribution: Quantitative Testing with Concept Activation Vectors (TCAV); Been Kim, Martin Wattenberg, Justin Gilmer, Carrie Cai, James Wexler, Fernanda Viegas, Rory Sayres; The interpretation of deep learning models is a challenge due to their size, complexity, and often opaque internal state. In addition, many systems, such as image classifiers, operate on low-level features rather than high-level concepts. To address these challenges, we introduce Concept Activation Vectors (CAVs), which provide an interpretation of a neural net's internal state in terms of human-friendly concepts. The key idea is to view the high-dimensional internal state of a neural net as an aid, not an obstacle. We show how to use CAVs as part of a technique, Testing with CAVs (TCAV), that uses directional derivatives to quantify the degree to which a user-defined concept is important to a classification result--for example, how sensitive a prediction of "zebra" is to the presence of stripes. Using the domain of image classification as a testing ground, we describe how CAVs may be used to explore hypotheses and generate insights for a standard image classification network as well as a medical application. TowardsDataScience.

-