alesee / Abstractive Text Summarization

Labels

Projects that are alternatives of or similar to Abstractive Text Summarization

abstractive-text-summarization

This repository and notebook contains code for in-progress implementation/experiments on Abstractive Text Summarization using Sequence-to-sequence RNNs and Beyond paper.

Requirements

- Create conda environment

conda env create -f environment.yml --gpu

conda env create -f environment-cpu.yml --cpu

- Activate environment

source activate abs-sum -- gpu

source activate abs-sum-cpu -- cpu

- Install dependencies (PyTorch, Fastai, etc) via:

pip install -r requirements.txt

- Download

spacyenglish module

python -m spacy download en

Dataset

The dataset used is a subset of the gigaword dataset and can be found here.

It contains 3,803,955 parallel source & target examples for training and 189,649 examples for validation.

After downloading, we created article-title pairs, saved in tabular datset format (.csv) and extracted a sample subset (80,000 for training & 20,000 for validation). This data preparation can be found here.

An example article-title pair looks like this:

source: the algerian cabinet chaired by president abdelaziz bouteflika on sunday adopted the #### finance bill predicated on an oil price of ## dollars a barrel and a growth rate of #.# percent , it was announced here .

target: algeria adopts #### finance bill with oil put at ## dollars a barrel

Experimenting on the complete dataset (3M) would take a really long time (also $$$$). So in order to train and experiment faster we use our sample subset of 80,000.

Current Features

- model architecture supports LSTM & GRU (biLSTM-to-uniLSTM or biGRU-to-uniGRU)

- implements batch data processing

- implements attention mechanism (Bahdanau et al. & Luong et al.(global dot))

- implements scheduled sampling (teacher forcing)

- implements tied embeddings

- initializes encoder-decoder with pretrained vectors (glove.6B.200d)

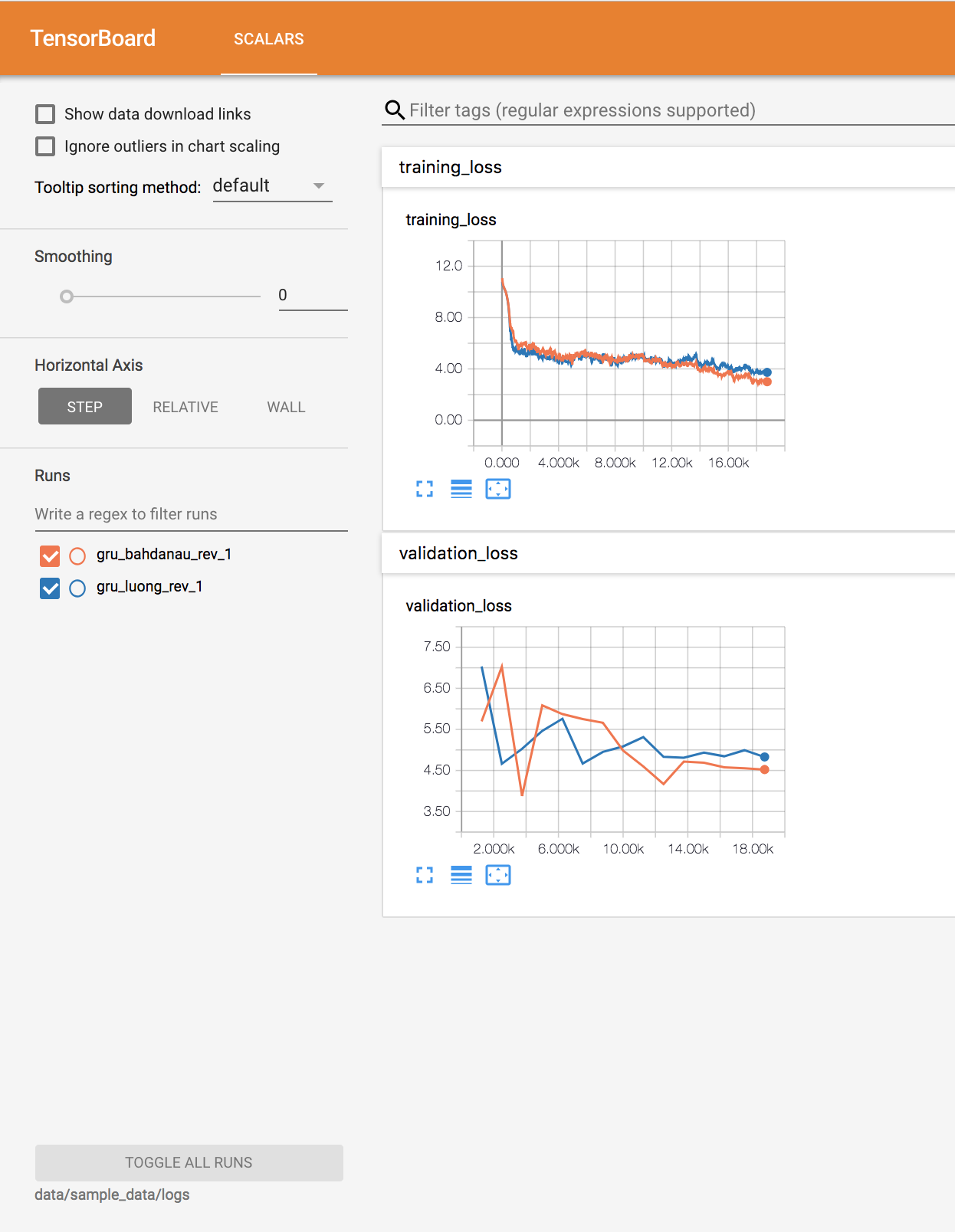

- implements custom training callbacks (tensorboard visualization for PyTorch, save best model & log checkpoint)

- implements attention plots

To-Do

- Implement additional linguistic features embeddings

- Implement generator-pointer switch and replace unknown words by selecting source token with the highest attention score.

- Implement large vocabulary trick

- Implement sentence level attention

- Implement beam search during inference

- implement rouge evaluation