NVIDIA / Aistore


AIStore is a lightweight object storage system with the capability to linearly scale-out with each added storage node and a special focus on petascale deep learning.

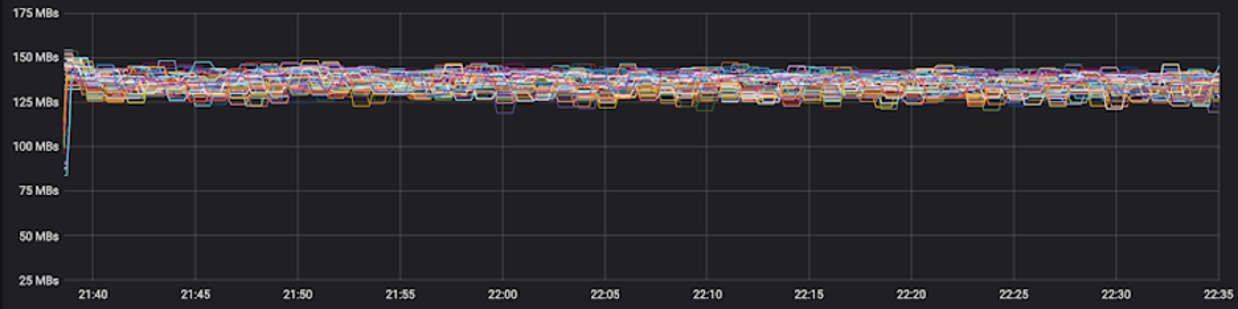

AIStore (AIS for short) is a built from scratch, lightweight storage stack tailored for AI apps. AIS consistently shows balanced I/O distribution and linear scalability across arbitrary numbers of clustered servers, producing performance charts that look as follows:

The picture above comprises 120 HDDs.

The ability to scale linearly with each added disk was, and remains, one of the main incentives behind AIStore. Much of the development is also driven by the idea of offloading dataset transformation and other I/O-intensive stages of ETL pipelines to the storage cluster.
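One generic way to get balanced distribution that stays balanced as nodes are added is rendezvous (highest-random-weight) hashing. The sketch below illustrates that technique only; this README does not describe the placement algorithm AIS actually uses, and the node and object names are hypothetical.

```python
import hashlib
from collections import Counter


def hrw_owner(object_name: str, nodes: list[str]) -> str:
    """Pick the node with the highest hash score for this object."""
    def score(node: str) -> int:
        digest = hashlib.sha256(f"{node}/{object_name}".encode()).digest()
        return int.from_bytes(digest[:8], "big")
    return max(nodes, key=score)


# Hypothetical node and object names, for illustration only.
nodes = [f"target-{i}" for i in range(4)]
objects = [f"shard-{i:05d}.tar" for i in range(10_000)]

before = {name: hrw_owner(name, nodes) for name in objects}
after = {name: hrw_owner(name, nodes + ["target-4"]) for name in objects}
moved = [name for name in objects if before[name] != after[name]]

print(Counter(before.values()))      # roughly equal share per node
print(len(moved) / len(objects))     # roughly 1/5 of the objects relocate

# Objects only ever move to the newly added node, never between old nodes.
assert all(after[name] == "target-4" for name in moved)
```

With this scheme, adding a fifth node relocates only the share of objects that the new node takes over, which keeps rebalancing traffic proportional to the added capacity.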

Features

- scale-out with no downtime and no limitation;

- comprehensive HTTP REST API to GET and PUT objects, create, destroy, list and configure buckets, and more;

- Amazon S3 API to run unmodified S3 apps;

- FUSE client (`aisfs`) to access AIS objects as files;

- arbitrary number of extremely lightweight access points;

- easy-to-use CLI that supports TAB auto-completions;

- automated cluster rebalancing upon: changes in cluster membership, drive failures and attachments, bucket renames;

- N-way mirroring (RAID-1), Reed–Solomon erasure coding, end-to-end data protection;

- ETL offload: running user-defined extract-transform-load workloads on (and by) a performance-optimized storage cluster.
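To put the two data-protection options from the list above side by side, here is a minimal sketch of the generic capacity arithmetic behind mirroring and Reed-Solomon coding. It is not AIS code; the function names and the example parameters (3 copies, 4 data plus 2 parity slices) are chosen purely for illustration.

```python
from fractions import Fraction


def mirror_cost(copies: int) -> tuple[Fraction, int]:
    """Return (raw bytes per user byte, tolerated lost copies) for n-way mirroring."""
    if copies < 1:
        raise ValueError("need at least one copy")
    return Fraction(copies), copies - 1


def erasure_cost(data_slices: int, parity_slices: int) -> tuple[Fraction, int]:
    """Return (raw bytes per user byte, tolerated lost slices) for Reed-Solomon coding."""
    if data_slices < 1 or parity_slices < 0:
        raise ValueError("invalid slice counts")
    return Fraction(data_slices + parity_slices, data_slices), parity_slices


examples = {
    "3-way mirror": mirror_cost(3),
    "RS 4 data + 2 parity": erasure_cost(4, 2),
}
for label, (overhead, losses) in examples.items():
    print(f"{label}: {float(overhead):.2f}x raw capacity, survives {losses} losses")
# 3-way mirror: 3.00x raw capacity, survives 2 losses
# RS 4 data + 2 parity: 1.50x raw capacity, survives 2 losses
```

Both configurations survive two losses, but the erasure-coded one needs half the raw capacity, at the price of encoding and reconstruction work.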

Also, AIStore:

- can be deployed on any commodity hardware;

- supports single-command infrastructure and software deployment on Google Cloud Platform via ais-k8s GitHub repo;

- supports Amazon S3, Google Cloud, and Microsoft Azure backends (and all S3, GCS, and Azure-compliant object storages);

- provides unified global namespace across (ad-hoc) connected AIS clusters;

- can be used as a fast cache for GCS and S3; can be populated on demand and/or via `prefetch` and `download` APIs;

- can be used as a standalone highly-available protected storage;

- includes MapReduce extension for massively parallel resharding of very large datasets;

- supports existing PyTorch and TensorFlow-based training models.
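The difference between on-demand and prefetch-based cache population mentioned in the list above can be shown with a toy model. This is a conceptual sketch, not the AIS implementation: the class, its methods, and the object names are hypothetical, and a plain dictionary stands in for the remote bucket.

```python
class ReadThroughCache:
    """Toy model of a cache in front of a slower remote backend."""

    def __init__(self, backend: dict[str, bytes]):
        self.backend = backend              # stands in for a remote bucket
        self.local: dict[str, bytes] = {}
        self.backend_reads = 0

    def _fetch(self, name: str) -> bytes:
        self.backend_reads += 1
        return self.backend[name]

    def get(self, name: str) -> bytes:
        """On-demand population: a cold read pulls the object in, later reads stay local."""
        if name not in self.local:
            self.local[name] = self._fetch(name)
        return self.local[name]

    def prefetch(self, names: list[str]) -> None:
        """Ahead-of-time population, so that the first read is already warm."""
        for name in names:
            if name not in self.local:
                self.local[name] = self._fetch(name)


remote = {"a.tar": b"alpha", "b.tar": b"beta"}
cache = ReadThroughCache(remote)
cache.get("a.tar")            # cold read: goes to the backend
cache.get("a.tar")            # warm read: served locally
cache.prefetch(["b.tar"])     # populate ahead of time
cache.get("b.tar")            # warm thanks to the prefetch
print(cache.backend_reads)    # 2
```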

AIS runs natively on Kubernetes and features an open format; hence the freedom to copy or move your data from AIS at any time using the familiar Linux tar(1), scp(1), rsync(1), and similar tools.

For the AIStore white paper and design philosophy, an introduction to large-scale deep learning, and the most recently added features, please see AIStore Overview (where you can also find six alternative ways to work with existing datasets). Videos and animated presentations can be found at videos. To get started with AIS, please see Getting Started.

Table of Contents

- Introduction

- Monitoring

- Configuration

- Amazon S3 compatibility

- TensorFlow integration

- Guides and References

- Assorted Tips

- Selected Package READMEs

Introduction

AIStore supports numerous deployment options covering a spectrum from a single laptop to petascale bare-metal clusters of any size. This includes:

| Deployment option | Targeted audience and objective |
|---|---|
| Local playground | AIS developers and development, Linux or Mac OS |
| Minimal production-ready deployment | Uses a preinstalled Docker image; targets first-time users and researchers (who can immediately start training their models on smaller datasets) |
| Easy automated GCP/GKE deployment | Developers, first-time users, AI researchers |
| Large-scale production deployment | Requires Kubernetes and is provided (documented, automated) via a separate repository: ais-k8s |

For detailed information on these and other supported options, and for step-by-step instructions, please refer to Getting Started.

Monitoring

As is usually the case with storage clusters, there are multiple ways to monitor their performance.

AIStore includes `aisloader`, the tool to stress-test and benchmark storage performance. For background, command-line options, and usage, please see Load Generator and How To Benchmark AIStore.

For starters, AIS collects and logs a fairly large and growing number of counters that describe all aspects of its operation, including (but not limited to) those that reflect cluster recovery/rebalancing, all extended long-running operations, and, of course, object storage transactions.

In particular:

- For dSort monitoring, please see dSort

- For Downloader monitoring, please see Internet Downloader

The logging interval is called `stats_time` (default 10s) and is configurable both per node and for the entire cluster.

As for ways to monitor AIS remotely, the two most obvious ones are:

- AIS CLI

- Graphite/Grafana

As for Graphite/Grafana, AIS integrates with these popular backends via StatsD, the daemon for easy but powerful stats aggregation. StatsD can be connected to Graphite, which can then be used as a data source for Grafana to get a visual overview of the statistics and metrics.
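For readers unfamiliar with StatsD, the following minimal sketch shows what a metric looks like on the wire in the plain-text StatsD format and how it could be sent over UDP. The metric names are hypothetical and are not the ones AIS emits; port 8125 is the conventional StatsD default, assumed here.

```python
import socket


def statsd_line(name: str, value: float, kind: str) -> bytes:
    """Encode one metric in the plain-text StatsD format: <name>:<value>|<type>."""
    if kind not in {"c", "g", "ms"}:    # counter, gauge, timer
        raise ValueError(f"unsupported metric type: {kind}")
    return f"{name}:{value}|{kind}".encode("ascii")


def send_metric(line: bytes, host: str = "localhost", port: int = 8125) -> None:
    """Fire-and-forget UDP send; the sender does not wait for any reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(line, (host, port))


# Hypothetical metric names, not the ones AIS actually emits.
print(statsd_line("demo.get.count", 42, "c"))         # b'demo.get.count:42|c'
print(statsd_line("demo.get.latency", 3.5, "ms"))     # b'demo.get.latency:3.5|ms'
```

Calling `send_metric(statsd_line("demo.get.count", 1, "c"))` would hand the counter to a StatsD daemon listening locally, which in turn aggregates and forwards it to Graphite.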

The scripts for easy deployment of both Graphite and Grafana are included (see below).

For local non-containerized deployments, use `./deploy/dev/local/deploy_grafana.sh` to start Graphite and Grafana containers. Local deployment scripts will automatically "notice" the presence of the containers and will send statistics to Graphite.

For local docker-compose based deployments, make sure to use the `-grafana` command-line option. The `./deploy/dev/docker/deploy_docker.sh` script will then spin up Graphite and Grafana containers.

In both of these cases, Grafana will be accessible at localhost:3000.

For information on AIS statistics, please see Statistics, Collected Metrics, Visualization.

Configuration

AIS configuration is consolidated in a single JSON template, where the configuration sections and the knobs within those sections are meant to be self-explanatory, and where most of those knobs (all but a few) have pre-assigned default values. The configuration template serves as a single source for all deployment-specific configurations, examples of which can be found under the folder that consolidates both containerized-development and production deployment scripts.

AIS production deployment, in particular, requires careful consideration of at least some of the configurable aspects. For example, AIS supports 3 (three) logical networks and will, therefore, benefit, performance-wise, if provisioned with up to 3 isolated physical networks or VLANs. The logical networks are:

- user (aka public)

- intra-cluster control

- intra-cluster data

with the corresponding JSON names, respectively:

- `hostname`

- `hostname_intra_control`

- `hostname_intra_data`
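As an illustration of how the three JSON names map to the three logical networks, here is a small sketch that reads a configuration fragment and checks whether each network has its own address. Only the three key names come from the text above; the flat layout of the fragment, the addresses, and the helper functions are assumptions made for the example.

```python
import json

# Hypothetical fragment: the key names come from the text above, while the
# flat layout and the addresses are assumptions made for illustration.
fragment = """
{
  "hostname": "10.0.1.10",
  "hostname_intra_control": "10.0.2.10",
  "hostname_intra_data": "10.0.3.10"
}
"""

NETWORK_KEYS = {
    "user (public)": "hostname",
    "intra-cluster control": "hostname_intra_control",
    "intra-cluster data": "hostname_intra_data",
}


def logical_networks(cfg: dict) -> dict[str, str]:
    """Map each logical network to the address configured for it."""
    return {label: cfg[key] for label, key in NETWORK_KEYS.items()}


def is_fully_isolated(cfg: dict) -> bool:
    """True when all three logical networks use different addresses."""
    return len(set(logical_networks(cfg).values())) == len(NETWORK_KEYS)


cfg = json.loads(fragment)
for label, address in logical_networks(cfg).items():
    print(f"{label}: {address}")
print(is_fully_isolated(cfg))   # True
```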

Assorted Tips

- To enable an optional AIStore authentication server, execute `$ AUTH_ENABLED=true make deploy`. For information on AuthN server, please see AuthN documentation.

- In addition to AIStore (the storage cluster), you can also deploy aisfs to access AIS objects as files, and AIS CLI to monitor, configure, and manage AIS nodes and buckets.

- AIS CLI is an easy-to-use command-line management tool supporting a growing number of commands and options (one of the first ones you may want to try could be `ais show cluster`, which shows the state and status of an AIS cluster). The CLI is documented in the readme; getting started with it boils down to running `make cli` and following the prompts.

- For more testing commands and options, please refer to the testing README.

- For `aisnode` command-line options, see: command-line options.

- For helpful links and/or background on Go, AWS, GCP, and Deep Learning: helpful links.

- And again, run `make help` to find out how to build, run, and test AIStore and tools.

Guides and References

- AIS Overview

- Tutorials

- Videos

- CLI

  - Create, destroy, list, and other operations on buckets

  - GET, PUT, APPEND, PROMOTE, and other operations on objects

  - Cluster and Node management

  - Mountpath (Disk) management

  - Attach, Detach, and monitor remote clusters

  - Start, Stop, and monitor downloads

  - Distributed Sort

  - User account and access management

  - Xaction (Job) management

- ETL with AIStore

- On-Disk Layout

- System Files

- Command line parameters

- AIS Load Generator: integrated benchmark tool

- Batch List and Range Operations: Prefetch, and more

- Object checksums: Brief Theory of Operations

- Configuration

- Traffic patterns

- Highly available control plane

- How to benchmark

- RESTful API

- FUSE with AIStore

- Joining AIS cluster

- Removing a node from AIS cluster

- AIS Buckets: definition, operations, properties

- Statistics, Collected Metrics, Visualization

- Performance: Tuning and Testing

- Rebalance

- Storage Services

- Extended Actions

- Integrated Internet Downloader

- Docker for AIS developers

- Troubleshooting Cluster Operation

Selected Package READMEs

- Package `api`

- Package `cli`

- Package `fuse`

- Package `downloader`

- Package `memsys`

- Package `transport`

- Package `dSort`

License

MIT

Author

Alex Aizman (NVIDIA)