kamalkraj / Albert Tf2.0


ALBERT-TF2.0

ALBERT model fine-tuning using TF 2.0

This repository contains a TensorFlow 2.0 implementation of ALBERT.

Requirements

- python3

- pip install -r requirements.txt

ALBERT Pre-training

Instructions for pre-training ALBERT from scratch and for domain-specific fine-tuning are here.

Download ALBERT TF 2.0 weights

| Version 1 | Version 2 |
|---|---|
| base | base |
| large | large |
| xlarge | xlarge |
| xxlarge | xxlarge |

Unzip the downloaded model inside the repository.

The weights above do not contain the final layer of the original model, so for now they can only be used for fine-tuning on downstream tasks.

For full weight conversion from TF-Hub to TF 2.0, see here.
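The commands in the following sections read config.json, tf2_model.h5 and vocab/30k-clean.model from the unzipped model directory (${ALBERT_DIR}). A minimal sketch of a pre-flight check, assuming exactly that layout; missing_model_files is a hypothetical helper, not part of the repository:

```python
from pathlib import Path

# Files the fine-tuning commands in this README read from ${ALBERT_DIR}
# (assumed layout, taken from the paths passed to the scripts).
EXPECTED_FILES = ("config.json", "tf2_model.h5", "vocab/30k-clean.model")


def missing_model_files(albert_dir):
    """Return the expected files that are absent from albert_dir (hypothetical helper)."""
    root = Path(albert_dir)
    return [name for name in EXPECTED_FILES if not (root / name).is_file()]
```

For example, missing_model_files("large") should return an empty list once the large model has been unzipped into the repository.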

Download GLUE data

Download the data using the command below:

python download_glue_data.py --data_dir glue_data --tasks all

Fine-tuning

To prepare the fine-tuning data for final model training, use the create_finetuning_data.py script. The resulting datasets (in tf_record format) and the training metadata should then be passed to the training or evaluation scripts. The task-specific arguments are described in the following sections:

Creating fine-tuning data

- Example: CoLA

export GLUE_DIR=glue_data/
export ALBERT_DIR=large/
export TASK_NAME=CoLA
export OUTPUT_DIR=cola_processed
mkdir $OUTPUT_DIR

python create_finetuning_data.py \
--input_data_dir=${GLUE_DIR}/ \
--spm_model_file=${ALBERT_DIR}/vocab/30k-clean.model \
--train_data_output_path=${OUTPUT_DIR}/${TASK_NAME}_train.tf_record \
--eval_data_output_path=${OUTPUT_DIR}/${TASK_NAME}_eval.tf_record \
--meta_data_file_path=${OUTPUT_DIR}/${TASK_NAME}_meta_data \
--fine_tuning_task_type=classification --max_seq_length=128 \
--classification_task_name=${TASK_NAME}
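--max_seq_length=128 fixes the length of every example written to the tf_record files. The sketch below shows the packing scheme BERT-style models conventionally use: [CLS], first segment, [SEP], optionally a second segment and [SEP], with the longer segment truncated when a pair is too long, then padding to max_seq_length together with an input mask and segment ids. It illustrates that assumed convention and is not the repository's code: pack_example and truncate_pair are hypothetical names, and the sketch works on token strings rather than SentencePiece ids. CoLA is a single-sentence task, so only the first segment is used there.

```python
def truncate_pair(tokens_a, tokens_b, max_tokens):
    """Trim the longer of two segments, one token at a time, until they fit."""
    tokens_a, tokens_b = list(tokens_a), list(tokens_b)
    while len(tokens_a) + len(tokens_b) > max_tokens:
        if len(tokens_a) > len(tokens_b):
            tokens_a.pop()
        else:
            tokens_b.pop()
    return tokens_a, tokens_b


def pack_example(tokens_a, tokens_b, max_seq_length, pad="<pad>"):
    """Return (tokens, input_mask, segment_ids), each of length max_seq_length.

    Hypothetical helper illustrating BERT-style packing; not the repo's API.
    """
    if tokens_b:
        # Reserve room for [CLS] and two [SEP] tokens.
        tokens_a, tokens_b = truncate_pair(tokens_a, tokens_b, max_seq_length - 3)
    else:
        # Single segment (e.g. CoLA): reserve room for [CLS] and one [SEP].
        tokens_a = list(tokens_a)[: max_seq_length - 2]

    tokens = ["[CLS]"] + tokens_a + ["[SEP]"]
    segment_ids = [0] * len(tokens)
    if tokens_b:
        tokens += tokens_b + ["[SEP]"]
        segment_ids += [1] * (len(tokens_b) + 1)

    # 1 marks real tokens, 0 marks padding.
    input_mask = [1] * len(tokens)
    num_pad = max_seq_length - len(tokens)
    tokens += [pad] * num_pad
    input_mask += [0] * num_pad
    segment_ids += [0] * num_pad
    return tokens, input_mask, segment_ids
```

For example, pack_example(["the", "cat"], None, 6) pads the sentence to ["[CLS]", "the", "cat", "[SEP]", "<pad>", "<pad>"] with input mask [1, 1, 1, 1, 0, 0].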

Running classifier

export MODEL_DIR=CoLA_OUT

python run_classifer.py \
--train_data_path=${OUTPUT_DIR}/${TASK_NAME}_train.tf_record \
--eval_data_path=${OUTPUT_DIR}/${TASK_NAME}_eval.tf_record \
--input_meta_data_path=${OUTPUT_DIR}/${TASK_NAME}_meta_data \
--albert_config_file=${ALBERT_DIR}/config.json \
--task_name=${TASK_NAME} \
--spm_model_file=${ALBERT_DIR}/vocab/30k-clean.model \
--output_dir=${MODEL_DIR} \
--init_checkpoint=${ALBERT_DIR}/tf2_model.h5 \
--do_train \
--do_eval \
--train_batch_size=16 \
--learning_rate=1e-5 \
--custom_training_loop

By default, the classifier runs for 3 epochs and then evaluates on the development set.

The command above should result in a dev-set accuracy of 76.22 on the CoLA task.

The code above was tested on a single TITAN RTX 24 GB GPU.
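The number of optimizer steps follows from the training-set size, the batch size and the 3 default epochs. A minimal sketch, assuming the meta data file is JSON with a train_data_size key and that a linear warmup covers the first 10% of steps, as in the original BERT recipe; neither the key name nor the warmup proportion is confirmed by this README, and schedule_from_meta_data is a hypothetical helper:

```python
import json


def schedule_from_meta_data(meta_data_json, train_batch_size,
                            num_train_epochs=3, warmup_proportion=0.1):
    """Return (steps_per_epoch, total_steps, warmup_steps).

    Assumptions: the meta data is a JSON object with a "train_data_size" key,
    and warmup covers the first warmup_proportion of all steps (BERT recipe).
    """
    meta = json.loads(meta_data_json)
    # Floor division: a partial final batch is not counted as a step (assumption).
    steps_per_epoch = meta["train_data_size"] // train_batch_size
    total_steps = steps_per_epoch * num_train_epochs
    warmup_steps = int(total_steps * warmup_proportion)
    return steps_per_epoch, total_steps, warmup_steps
```

With a hypothetical train_data_size of 8000 and --train_batch_size=16, this gives 500 steps per epoch, 1500 steps in total and 150 warmup steps. To use a real file, pass its contents, e.g. schedule_from_meta_data(open(path).read(), 16).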

SQuAD

Data and Evaluation scripts

Training Data Preparation

export SQUAD_DIR=SQuAD
export SQUAD_VERSION=v1.1
export ALBERT_DIR=large
export OUTPUT_DIR=squad_out_${SQUAD_VERSION}
mkdir $OUTPUT_DIR

python create_finetuning_data.py \
--squad_data_file=${SQUAD_DIR}/train-${SQUAD_VERSION}.json \
--spm_model_file=${ALBERT_DIR}/vocab/30k-clean.model \
--train_data_output_path=${OUTPUT_DIR}/squad_${SQUAD_VERSION}_train.tf_record \
--meta_data_file_path=${OUTPUT_DIR}/squad_${SQUAD_VERSION}_meta_data \
--fine_tuning_task_type=squad \
--max_seq_length=384
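With --max_seq_length=384, a SQuAD context that does not fit next to the question has to be split. BERT's reference preprocessing does this with overlapping windows that advance by a fixed doc stride, so every answer appears in at least one window. The sketch below reproduces that windowing under assumed values (doc stride 128 and question length 64, both BERT defaults, not flags documented in this README); doc_spans and max_tokens_for_doc are hypothetical names:

```python
def max_tokens_for_doc(max_seq_length, query_length):
    """Context tokens that fit next to the question plus [CLS], [SEP], [SEP]."""
    return max_seq_length - query_length - 3


def doc_spans(num_doc_tokens, max_doc_tokens, doc_stride):
    """Split a context into overlapping (start, length) windows.

    Mirrors the sliding-window loop of BERT's SQuAD preprocessing; the
    doc_stride value is an assumption, not a documented flag of this repo.
    """
    spans = []
    start = 0
    while start < num_doc_tokens:
        length = min(num_doc_tokens - start, max_doc_tokens)
        spans.append((start, length))
        if start + length == num_doc_tokens:
            break  # this window reaches the end of the context
        start += min(length, doc_stride)
    return spans
```

For a 700-token context with a 64-token question, max_tokens_for_doc(384, 64) is 317 and doc_spans(700, 317, 128) yields four windows starting at tokens 0, 128, 256 and 384.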

Running Model

python run_squad.py \
--mode=train_and_predict \
--input_meta_data_path=${OUTPUT_DIR}/squad_${SQUAD_VERSION}_meta_data \
--train_data_path=${OUTPUT_DIR}/squad_${SQUAD_VERSION}_train.tf_record \
--predict_file=${SQUAD_DIR}/dev-${SQUAD_VERSION}.json \
--albert_config_file=${ALBERT_DIR}/config.json \
--init_checkpoint=${ALBERT_DIR}/tf2_model.h5 \
--spm_model_file=${ALBERT_DIR}/vocab/30k-clean.model \
--train_batch_size=48 \
--predict_batch_size=48 \
--learning_rate=1e-5 \
--num_train_epochs=3 \
--model_dir=${OUTPUT_DIR} \
--strategy_type=mirror

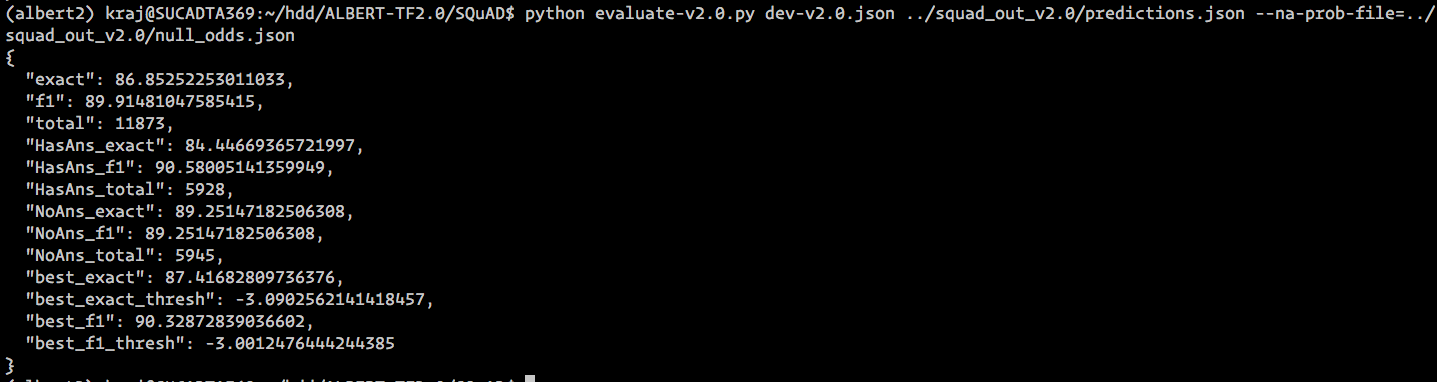
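In predict mode, a SQuAD model outputs a start logit and an end logit for each token, and the answer is the span with the highest combined score. A simplified sketch of that selection, following the usual BERT post-processing (top n_best start and end candidates, end not before start, span length capped at max_answer_length); the defaults 20 and 30 are assumptions, and the real post-processing also discards positions outside the context, which is omitted here. best_span is a hypothetical helper:

```python
def best_span(start_logits, end_logits, max_answer_length=30, n_best=20):
    """Return (start, end, score) of the highest-scoring valid span, or None."""
    def top_indices(logits):
        # Indices of the n_best largest logits, highest first.
        return sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:n_best]

    top_starts = top_indices(start_logits)
    top_ends = top_indices(end_logits)
    best = None
    for s in top_starts:
        for e in top_ends:
            # Skip spans that end before they start or are too long.
            if e < s or e - s + 1 > max_answer_length:
                continue
            score = start_logits[s] + end_logits[e]
            if best is None or score > best[2]:
                best = (s, e, score)
    return best
```

For example, best_span([0.1, 2.0, 0.5, 1.5], [0.0, 0.2, 1.0, 3.0]) returns (1, 3, 5.0); with max_answer_length=2 the three-token span is excluded and (3, 3, 4.5) wins instead.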

Running SQuAD v2.0

export SQUAD_DIR=SQuAD
export SQUAD_VERSION=v2.0
export ALBERT_DIR=xxlarge
export OUTPUT_DIR=squad_out_${SQUAD_VERSION}
mkdir $OUTPUT_DIR

python create_finetuning_data.py \
--squad_data_file=${SQUAD_DIR}/train-${SQUAD_VERSION}.json \
--spm_model_file=${ALBERT_DIR}/vocab/30k-clean.model \
--train_data_output_path=${OUTPUT_DIR}/squad_${SQUAD_VERSION}_train.tf_record \
--meta_data_file_path=${OUTPUT_DIR}/squad_${SQUAD_VERSION}_meta_data \
--fine_tuning_task_type=squad \
--max_seq_length=384

python run_squad.py \
--mode=train_and_predict \
--input_meta_data_path=${OUTPUT_DIR}/squad_${SQUAD_VERSION}_meta_data \
--train_data_path=${OUTPUT_DIR}/squad_${SQUAD_VERSION}_train.tf_record \
--predict_file=${SQUAD_DIR}/dev-${SQUAD_VERSION}.json \
--albert_config_file=${ALBERT_DIR}/config.json \
--init_checkpoint=${ALBERT_DIR}/tf2_model.h5 \
--spm_model_file=${ALBERT_DIR}/vocab/30k-clean.model \
--train_batch_size=24 \
--predict_batch_size=24 \
--learning_rate=1.5e-5 \
--num_train_epochs=3 \
--model_dir=${OUTPUT_DIR} \
--strategy_type=mirror \
--version_2_with_negative \
--max_seq_length=384

This experiment was run on 4 x NVIDIA TITAN RTX 24 GB GPUs.
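--version_2_with_negative lets the model abstain, because SQuAD v2.0 contains unanswerable questions. In BERT's reference post-processing, the "no answer" score is the start logit plus the end logit at position 0 ([CLS]), and the model abstains when that score beats the best non-null span by more than a threshold. A simplified sketch for a single window, assuming that scheme and a threshold of 0.0; predict_v2 is a hypothetical helper, and the threshold is not a flag documented in this README:

```python
def predict_v2(start_logits, end_logits, best_start, best_end, threshold=0.0):
    """Return (best_start, best_end), or None to predict "no answer".

    Assumes position 0 holds [CLS], whose start+end logit is the null score
    (BERT-style convention; simplified to a single window).
    """
    null_score = start_logits[0] + end_logits[0]
    span_score = start_logits[best_start] + end_logits[best_end]
    if null_score - span_score > threshold:
        return None  # abstain: the question is judged unanswerable
    return best_start, best_end
```

For example, with start logits [4.0, 1.0, 0.5], end logits [3.0, 0.5, 2.0] and a best span of (1, 2), the null score 7.0 exceeds the span score 3.0 by 4.0, so the sketch abstains at threshold 0.0 but returns (1, 2) at threshold 5.0.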

Result

Multi-GPU training and XLA

- Use the flag --strategy_type=mirror for multi-GPU training. Currently, all GPUs available in the environment will be used.
- Use the flag --enable-xla to enable XLA. Model training will take longer to start because of JIT compilation.
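With --strategy_type=mirror, each batch is divided among the GPUs. A minimal sketch of the arithmetic, assuming --train_batch_size is the global batch size (the convention tf.distribute.MirroredStrategy uses when it splits a dataset across replicas; this README does not say which convention the repository follows). per_replica_batch_size is a hypothetical helper; in TensorFlow the replica count is available as strategy.num_replicas_in_sync.

```python
def per_replica_batch_size(global_batch_size, num_replicas):
    """Split a global batch evenly across replicas (e.g. GPUs).

    Assumes --train_batch_size is the global batch size. This sketch raises
    when the split is uneven, to keep the arithmetic simple.
    """
    if num_replicas < 1 or global_batch_size % num_replicas:
        raise ValueError(
            f"batch size {global_batch_size} is not divisible by {num_replicas} replicas")
    return global_batch_size // num_replicas
```

Under that assumption, the SQuAD v2.0 run above (--train_batch_size=24 on 4 GPUs) gives 6 examples per GPU.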

Ignore

If you use the Keras model.fit method, the warning below is displayed at the end of each epoch. It comes from an issue with the training-steps calculation when a tf.data dataset is passed to model.fit().

It has no effect on model performance, so it can be ignored. It will most likely be fixed in the next TF2 release. Issue-link

2019-10-31 13:35:48.322897: W tensorflow/core/common_runtime/base_collective_executor.cc:216] BaseCollectiveExecutor::StartAbort Out of range:
End of sequence
[[{{node IteratorGetNext}}]]
[[model_1/albert_model/word_embeddings/Shape/_10]]
2019-10-31 13:36:03.302722: W tensorflow/core/common_runtime/base_collective_executor.cc:216] BaseCollectiveExecutor::StartAbort Out of range:
End of sequence
[[{{node IteratorGetNext}}]]
[[IteratorGetNext/_4]]