nathanhubens / Autoencoders

Licence: mit

Implementation of simple autoencoder networks with Keras

Stars: ✭ 123

Projects that are alternatives to or similar to Autoencoders

Mish

Official Repository for "Mish: A Self Regularized Non-Monotonic Neural Activation Function" [BMVC 2020]

Stars: ✭ 1,072 (+771.54%)

Mutual labels: jupyter-notebook, neural-networks

Math And Ml Notes

Books, papers and links to latest research in ML/AI

Stars: ✭ 76 (-38.21%)

Mutual labels: jupyter-notebook, neural-networks

Convisualize nb

Visualisations for Convolutional Neural Networks in Pytorch

Stars: ✭ 57 (-53.66%)

Mutual labels: jupyter-notebook, neural-networks

Yann

This toolbox is support material for the book on CNN (http://www.convolution.network).

Stars: ✭ 41 (-66.67%)

Mutual labels: jupyter-notebook, neural-networks

Codesearchnet

Datasets, tools, and benchmarks for representation learning of code.

Stars: ✭ 1,378 (+1020.33%)

Mutual labels: jupyter-notebook, neural-networks

Machine Learning From Scratch

Succinct Machine Learning algorithm implementations from scratch in Python, solving real-world problems (Notebooks and Book). Examples of Logistic Regression, Linear Regression, Decision Trees, K-means clustering, Sentiment Analysis, Recommender Systems, Neural Networks and Reinforcement Learning.

Stars: ✭ 42 (-65.85%)

Mutual labels: jupyter-notebook, neural-networks

Mit Deep Learning

Tutorials, assignments, and competitions for MIT Deep Learning related courses.

Stars: ✭ 8,912 (+7145.53%)

Mutual labels: jupyter-notebook, neural-networks

Udacity Deep Learning Nanodegree

This is just a collection of projects that I made during my Deep Learning Nanodegree by Udacity

Stars: ✭ 15 (-87.8%)

Mutual labels: jupyter-notebook, neural-networks

Neural Tangents

Fast and Easy Infinite Neural Networks in Python

Stars: ✭ 1,357 (+1003.25%)

Mutual labels: jupyter-notebook, neural-networks

Knet.jl

Koç University deep learning framework.

Stars: ✭ 1,260 (+924.39%)

Mutual labels: jupyter-notebook, neural-networks

Pytorchnlpbook

Code and data accompanying Natural Language Processing with PyTorch published by O'Reilly Media https://nlproc.info

Stars: ✭ 1,390 (+1030.08%)

Mutual labels: jupyter-notebook, neural-networks

Teacher Student Training

This repository stores the files used for my summer internship's work on "teacher-student learning", an experimental method for training deep neural networks using a trained teacher model.

Stars: ✭ 34 (-72.36%)

Mutual labels: jupyter-notebook, neural-networks

Mckinsey Smartcities Traffic Prediction

Adventure into using multi-attention recurrent neural networks for time series (city traffic) for the 2017-11-18 McKinsey IronMan (24h non-stop) prediction challenge

Stars: ✭ 49 (-60.16%)

Mutual labels: jupyter-notebook, neural-networks

Lovaszsoftmax

Code for the Lovász-Softmax loss (CVPR 2018)

Stars: ✭ 1,148 (+833.33%)

Mutual labels: jupyter-notebook, neural-networks

Sentence Aspect Category Detection

Aspect-Based Sentiment Analysis

Stars: ✭ 24 (-80.49%)

Mutual labels: jupyter-notebook, neural-networks

Traffic Sign Classifier

Udacity Self-Driving Car Engineer Nanodegree. Project: Build a Traffic Sign Recognition Classifier

Stars: ✭ 12 (-90.24%)

Mutual labels: jupyter-notebook, neural-networks

Neural Networks

Brief introduction to Python for neural networks

Stars: ✭ 82 (-33.33%)

Mutual labels: jupyter-notebook, neural-networks

Sigmoidal ai

Python, Data Science, Machine Learning, and Deep Learning tutorials - Sigmoidal

Stars: ✭ 103 (-16.26%)

Mutual labels: jupyter-notebook, neural-networks

Autoencoders

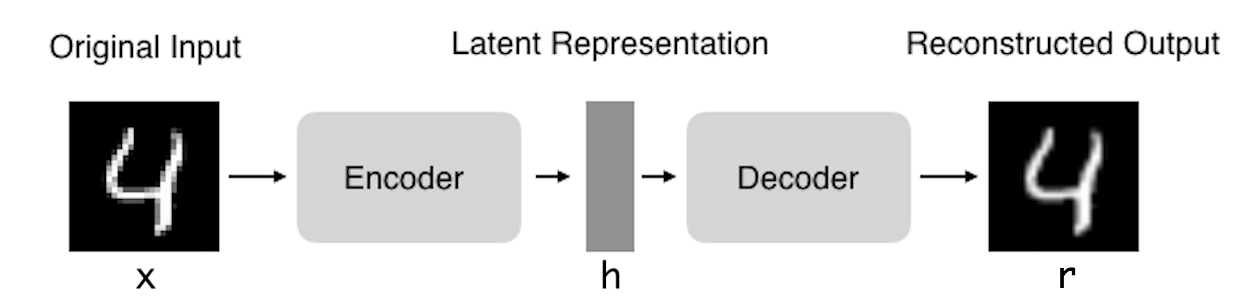

Autoencoders (AE) are neural networks that aim to copy their inputs to their outputs. They work by compressing the input into a latent-space representation and then reconstructing the output from this representation. This kind of network is composed of two parts:

- Encoder: This is the part of the network that compresses the input into a latent-space representation. It can be represented by an encoding function h = f(x).

- Decoder: This part aims to reconstruct the input from the latent-space representation. It can be represented by a decoding function r = g(h).
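To make h = f(x) and r = g(h) concrete, here is a minimal, framework-free NumPy sketch of one encoder layer and one decoder layer, each computing `activation(x @ W + b)` the way a Keras `Dense` layer does. It is not the notebook's Keras code: the sizes (784 inputs, 32 latent units), the ReLU/sigmoid activations, the random weights, and the names `encode`, `decode`, and `reconstruction_loss` are all illustrative assumptions.

```python
import numpy as np

# Hypothetical sizes: a flattened 28x28 image (784 values) squeezed to 32 latent units.
INPUT_DIM, LATENT_DIM = 784, 32

rng = np.random.default_rng(0)
w_enc = rng.normal(0.0, 0.05, size=(INPUT_DIM, LATENT_DIM))
b_enc = np.zeros(LATENT_DIM)
w_dec = rng.normal(0.0, 0.05, size=(LATENT_DIM, INPUT_DIM))
b_dec = np.zeros(INPUT_DIM)

def encode(x):
    """Encoder h = f(x): one dense layer with a ReLU activation."""
    return np.maximum(0.0, x @ w_enc + b_enc)

def decode(h):
    """Decoder r = g(h): one dense layer with a sigmoid, so outputs lie in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-(h @ w_dec + b_dec)))

def reconstruction_loss(x, r):
    """Mean squared error between the inputs and their reconstructions."""
    return float(np.mean((x - r) ** 2))

x = rng.random((16, INPUT_DIM))  # a batch of 16 fake "images" with pixels in [0, 1)
h = encode(x)                    # latent codes, shape (16, 32)
r = decode(h)                    # reconstructions, shape (16, 784)
print(h.shape, r.shape, reconstruction_loss(x, r))
```

The weights here are untrained, so the reconstructions are poor. Training adjusts `w_enc`, `b_enc`, `w_dec`, and `b_dec` to minimize the reconstruction loss, which forces the 32-unit bottleneck to keep the most useful information about x.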

This notebook shows the implementation of five types of autoencoders:

- Vanilla Autoencoder

- Multilayer Autoencoder

- Convolutional Autoencoder

- Regularized Autoencoder

- Variational Autoencoder
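As a rough illustration of how the simplest of these models learns, the sketch below trains a *linear* autoencoder (no activations) with full-batch gradient descent on mean squared error. It is a swapped-in simplification, chosen so the gradients can be written out by hand in NumPy; the notebook's vanilla autoencoder is built with Keras layers instead. The function name `train_linear_autoencoder`, the toy data, and the hyperparameters (`lr=0.1`, `epochs=2000`) are hypothetical.

```python
import numpy as np

def train_linear_autoencoder(x, latent_dim, lr=0.1, epochs=2000, seed=0):
    """Fit r = (x @ w_enc) @ w_dec by full-batch gradient descent on MSE (illustrative)."""
    rng = np.random.default_rng(seed)
    _, d = x.shape
    w_enc = rng.normal(0.0, 0.1, size=(d, latent_dim))
    w_dec = rng.normal(0.0, 0.1, size=(latent_dim, d))
    losses = []
    for _ in range(epochs):
        h = x @ w_enc   # encoder: h = f(x)
        r = h @ w_dec   # decoder: r = g(h)
        err = r - x
        losses.append(float(np.mean(err ** 2)))
        # Backpropagate the mean squared error through both linear maps.
        grad_r = 2.0 * err / err.size
        grad_dec = h.T @ grad_r
        grad_enc = x.T @ (grad_r @ w_dec.T)  # uses w_dec before it is updated
        w_dec -= lr * grad_dec
        w_enc -= lr * grad_enc
    return w_enc, w_dec, losses

# Toy data: 8-D points that actually lie on a 2-D plane, so a 2-unit code suffices.
rng = np.random.default_rng(1)
z = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 8))
x = z @ mixing
w_enc, w_dec, losses = train_linear_autoencoder(x, latent_dim=2)
print(f"MSE: {losses[0]:.3f} -> {losses[-1]:.5f}")
```

A linear autoencoder trained with MSE is known to recover the same subspace as PCA. The other variants in the list differ mainly in the layers used (stacked dense layers, convolutions), in extra penalty terms added to the loss (regularized), or in encoding a distribution rather than a single point (variational).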

An explanation of each type (except the VAE) can be found here.
