odpf / Beast

Licence: apache-2.0

Load data from Kafka to any data warehouse

Stars: ✭ 119

Programming Languages

java


Projects that are alternatives of or similar to Beast

Bireme

Bireme is an incremental synchronization tool for the Greenplum / HashData data warehouse

Stars: ✭ 110 (-7.56%)

Mutual labels: kafka

Spec

The AsyncAPI specification allows you to create machine-readable definitions of your asynchronous APIs.

Stars: ✭ 1,860 (+1463.03%)

Mutual labels: kafka

Myth

Reliable messages resolve distributed transactions

Stars: ✭ 1,470 (+1135.29%)

Mutual labels: kafka

Lenses Docker

❤for real-time DataOps - where the application and data fabric blends - Lenses

Stars: ✭ 115 (-3.36%)

Mutual labels: kafka

Cube.js

📊 Cube — Open-Source Analytics API for Building Data Apps

Stars: ✭ 11,983 (+9969.75%)

Mutual labels: bigquery

Springboot Labs

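A repository covering six series: Spring Boot 2.X, Spring Cloud, Spring Cloud Alibaba, Dubbo, distributed message queues, and distributed transactions. If you find it useful, please give it a Star in the top-right corner. Thank you, 1024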

Stars: ✭ 12,804 (+10659.66%)

Mutual labels: kafka

Pq

a command-line Protobuf parser with Kafka support and JSON output

Stars: ✭ 120 (+0.84%)

Mutual labels: kafka

Fast Data Dev

Kafka Docker for development. Kafka, Zookeeper, Schema Registry, Kafka-Connect, Landoop Tools, 20+ connectors

Stars: ✭ 1,707 (+1334.45%)

Mutual labels: kafka

Mmo Server

Distributed Java game server, including login, gateway, game demo

Stars: ✭ 114 (-4.2%)

Mutual labels: kafka

Kafka Stack Docker Compose

docker compose files to create a fully working kafka stack

Stars: ✭ 1,836 (+1442.86%)

Mutual labels: kafka

Go Kafka Example

Golang Kafka consumer and producer example

Stars: ✭ 108 (-9.24%)

Mutual labels: kafka

Cmak

CMAK is a tool for managing Apache Kafka clusters

Stars: ✭ 10,544 (+8760.5%)

Mutual labels: kafka

Example Spark Kafka

Apache Spark and Apache Kafka integration example

Stars: ✭ 120 (+0.84%)

Mutual labels: kafka

Kafka Avro Course

Learn the Confluent Schema Registry & REST Proxy

Stars: ✭ 120 (+0.84%)

Mutual labels: kafka

Kafka Flow

KafkaFlow is a .NET framework to consume and produce Kafka messages with multi-threading support. It's very simple to use and very extendable. You just need to install, configure, start/stop the bus with your app and create a middleware/handler to process the messages.

Stars: ✭ 118 (-0.84%)

Mutual labels: kafka

Beast

Kafka to BigQuery Sink

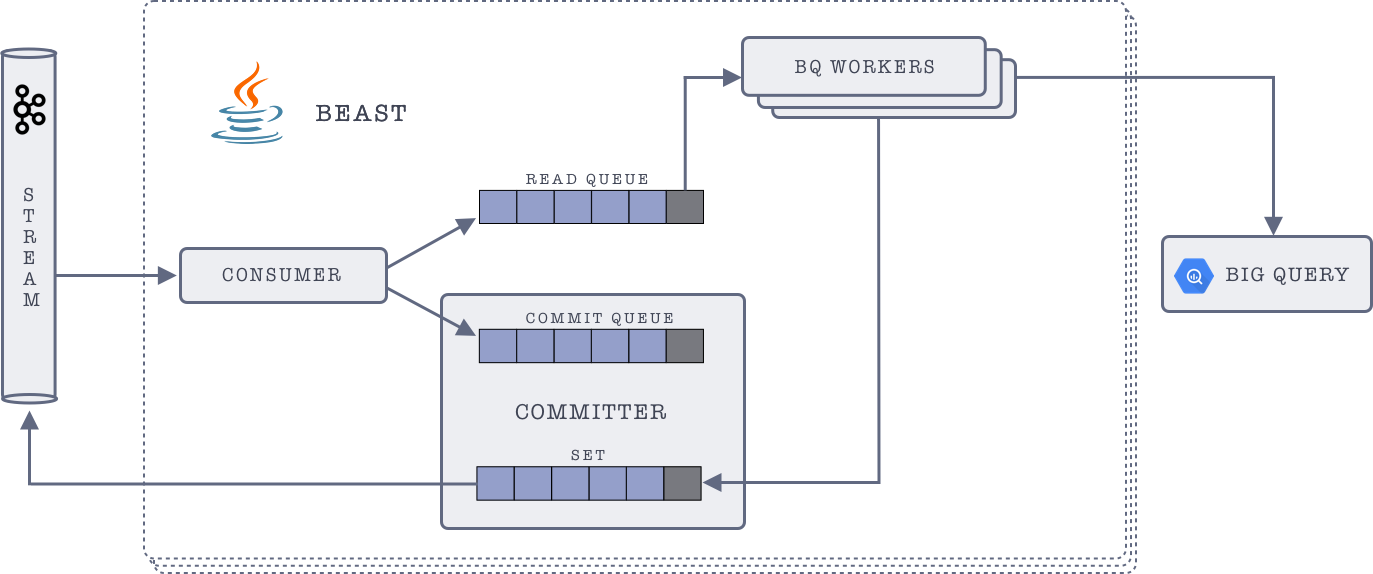

Architecture

- Consumer: Consumes messages from Kafka in batches and pushes each batch to both the read queue and the commit queue. These are blocking queues, i.e., no more messages are consumed while a queue is full. (This is configurable based on poll timeout.)

- BigQuery Worker: Polls message batches from the read queue and pushes them to BigQuery. If the push succeeds, the BQ worker sends an acknowledgement to the Committer.

- Committer: Receives the acknowledgements of successful pushes to BigQuery from the BQ workers and stores them in a set. The Committer polls the commit queue for message batches. If a batch is present in the set, i.e., it has been successfully pushed to BQ, the Committer commits the max offset of that batch back to Kafka and removes the batch from both the commit queue and the set.
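The hand-off between the three components can be sketched in plain Java. This is a minimal illustration under stated assumptions, not Beast's actual code: the class name `PipelineSketch`, the queue capacity of 2, and the use of a bare max offset to stand in for a whole batch are all hypothetical, and the BigQuery insert and Kafka commit are replaced by in-memory stand-ins.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Set;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical sketch of the consumer / BQ worker / committer hand-off.
class PipelineSketch {

    // Each batch is represented only by its (distinct) max offset.
    static List<Long> run(List<Long> batchMaxOffsets) {
        // Bounded queues: put() blocks while a queue is full, which throttles the consumer.
        BlockingQueue<Long> readQueue = new LinkedBlockingQueue<>(2);
        BlockingQueue<Long> commitQueue = new LinkedBlockingQueue<>(2);
        Set<Long> acked = ConcurrentHashMap.newKeySet();
        List<Long> committed = new ArrayList<>();

        // Consumer: pushes every batch to both queues.
        Thread consumer = new Thread(() -> {
            try {
                for (Long batch : batchMaxOffsets) {
                    readQueue.put(batch);
                    commitQueue.put(batch);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        // Worker: takes batches from the read queue and acknowledges them.
        Thread worker = new Thread(() -> {
            try {
                for (int i = 0; i < batchMaxOffsets.size(); i++) {
                    Long batch = readQueue.take();
                    // A real worker would insert the batch into BigQuery here.
                    acked.add(batch);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        consumer.start();
        worker.start();
        try {
            // Committer: only the head of the commit queue is eligible,
            // so offsets are committed in the order they were consumed.
            while (committed.size() < batchMaxOffsets.size()) {
                Long head = commitQueue.peek();
                if (head != null && acked.contains(head)) {
                    committed.add(head); // stand-in for committing the offset to Kafka
                    commitQueue.remove();
                    acked.remove(head);
                } else {
                    Thread.sleep(1);
                }
            }
            consumer.join();
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted", e);
        }
        return committed;
    }

    public static void main(String[] args) {
        System.out.println(run(Arrays.asList(9L, 19L, 29L)));
    }
}
```

Because the committer only ever looks at the head of the commit queue, a batch that was pushed early by a fast worker still waits until every batch before it has been acknowledged.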


Dead Letters:

Beast provides a pluggable GCS (Google Cloud Storage) component to store invalid, out-of-bounds messages that are rejected by BigQuery. Primarily, messages that are partitioned on a timestamp field and contain an out-of-range timestamp on the partition key (year-old data or 6 months in the future) are considered invalid. Without a handler for these messages, Beast stops processing; this is the default behaviour for out-of-range data. The GCS component can be turned on by supplying the environment fields below.

ENABLE_GCS_ERROR_SINK=true

GCS_BUCKET=<google cloud store bucket name>

GCS_PATH_PREFIX=<prefix path under the bucket>

GCS_WRITER_PROJECT_NAME=<google project having bucket>

The handler partitions the invalid messages on GCS based on the message arrival date, in the format <dt=yyyy-MM-dd>. The location of invalid messages on GCS would ideally be <GCS_WRITER_PROJECT_NAME>/<GCS_BUCKET>/<GCS_PATH_PREFIX>/<dt=yyyy-MM-dd>/<topicName>/<random-uuid>, where:

- <topicName> is the topic that has the invalid messages

- <random-uuid> is the name of the file
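To make the dead-letter layout concrete, here is a small Java sketch of the range check and the resulting object path. It is an illustration only: the class and method names are hypothetical, the bounds (older than one year, more than six months ahead) are taken loosely from the description above rather than from Beast's code, and it assumes `<dt=yyyy-MM-dd>` renders as the literal `dt=` followed by the arrival date.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.UUID;

// Hypothetical helper; names and exact bounds are assumptions, not Beast's API.
class DeadLetterSketch {
    private static final DateTimeFormatter DT = DateTimeFormatter.ofPattern("yyyy-MM-dd");

    // True when the partition date is more than a year old or more than 6 months ahead.
    static boolean isOutOfRange(LocalDate partitionDate, LocalDate today) {
        return partitionDate.isBefore(today.minusYears(1))
                || partitionDate.isAfter(today.plusMonths(6));
    }

    // Joins the path components in the order given in the text above.
    static String objectPath(String project, String bucket, String prefix,
                             LocalDate arrivalDate, String topic, String fileName) {
        return String.join("/", project, bucket, prefix,
                "dt=" + DT.format(arrivalDate), topic, fileName);
    }

    public static void main(String[] args) {
        LocalDate today = LocalDate.of(2020, 6, 1);
        System.out.println(isOutOfRange(LocalDate.of(2019, 5, 31), today));
        // Placeholder project, bucket, prefix, and topic names.
        System.out.println(objectPath("my-project", "my-bucket", "beast/errors",
                today, "test-topic", UUID.randomUUID().toString()));
    }
}
```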

Building & Running

Prerequisite

- A Kafka cluster with messages pushed in proto format, which Beast can consume

- A BigQuery project with streaming permission

- A table created for the message proto

- A configuration with the column mapping for the above table, set in the env file

- An env file updated with the BigQuery, Kafka, and application parameters

Run locally:

git clone https://github.com/odpf/beast

export $(cat ./env/sample.properties | xargs -L1) && gradle clean runConsumer

Run with Docker

The image is available in odpf dockerhub.

export TAG=release-0.1.1

docker run --env-file beast.env -v "$(pwd)/local_dir/project-secret.json:/var/bq-secret.json" -it odpf/beast:$TAG

- -v mounts the local secret file project-secret.json at the given path inside the container; GOOGLE_CREDENTIALS should be set to the same path, /var/bq-secret.json, which is used for BQ authentication.

- TAG: you can update the tag if you want the latest image; the tag mentioned above is well tested.

Running on Kubernetes

Create a beast deployment for a topic in kafka, which needs to be pushed to BigQuery.

- A deployment can have multiple instances of Beast

- A Beast container consists of the following threads:

  - A Kafka consumer

  - Multiple BQ workers

  - A committer

- The deployment also includes a telegraf container, which pushes stats metrics

Follow the instructions in the chart for helm deployment.

BQ Setup:

Given a TestMessage proto file, you can create a BigQuery table with the schema

# create new table from schema

bq mk --table <project_name>:dataset_name.test_messages ./docs/test_messages.schema.json

# query total records

bq query --nouse_legacy_sql 'SELECT count(*) FROM `<project_name>.dataset_name.test_messages` LIMIT 10'

# update bq schema from local schema json file

bq update --format=prettyjson <project_name>:dataset_name.test_messages booking.schema

# dump the schema of table to file

bq show --schema --format=prettyjson <project_name>:dataset_name.test_messages > test_messages.schema.json

Produce messages to Kafka

You can generate messages from TestMessage.proto with sample-kafka-producer, which pushes N messages.

Running Stencil Server

- Run the shell script ./run_descriptor_server.sh to build the descriptor in the build directory and start a Python server on :8000

- The stencil URL can be configured to http://localhost:8000/messages.desc, which can be checked with:

curl http://localhost:8000/messages.desc

Contribution

- You can raise issues or help answer questions

- You can raise a PR for any feature or issue

- You can help us with documentation

To run and test locally:

git clone https://github.com/odpf/beast

export $(cat ./env/sample.properties | xargs -L1) && ./gradlew test
