yumath / Bertner

ChineseNER based on BERT, with BiLSTM+CRF layer

Stars: ✭ 195

Programming Languages

python

Projects that are alternatives of or similar to Bertner

Turkish Bert Nlp Pipeline

BERT-base NLP pipeline for Turkish: NER, sentiment analysis, question answering, etc.

Stars: ✭ 85 (-56.41%)

Mutual labels: named-entity-recognition, ner

Persian Ner

A large tagged corpus for Persian named-entity recognition

Stars: ✭ 183 (-6.15%)

Mutual labels: named-entity-recognition, ner

Bi Lstm Crf Ner Tf2.0

Named Entity Recognition (NER) task using a Bi-LSTM-CRF model implemented in TensorFlow 2.0 (tensorflow 2.0+)

Stars: ✭ 93 (-52.31%)

Mutual labels: named-entity-recognition, ner

Kashgari

Kashgari is a production-level NLP Transfer learning framework built on top of tf.keras for text-labeling and text-classification, includes Word2Vec, BERT, and GPT2 Language Embedding.

Stars: ✭ 2,235 (+1046.15%)

Mutual labels: named-entity-recognition, ner

Bert Sklearn

a sklearn wrapper for Google's BERT model

Stars: ✭ 182 (-6.67%)

Mutual labels: named-entity-recognition, ner

Torchcrf

An Implementation of CRF (Conditional Random Fields) in PyTorch 1.0

Stars: ✭ 58 (-70.26%)

Mutual labels: named-entity-recognition, ner

Ner

Named entity recognition (NER): surveys, papers, models, code (BiLSTM-CRF/BERT-CRF), and competition resources, updated continuously

Stars: ✭ 118 (-39.49%)

Mutual labels: named-entity-recognition, ner

Named entity recognition

Chinese named entity recognition, with implementations of several models: HMM, CRF, BiLSTM, and BiLSTM+CRF

Stars: ✭ 995 (+410.26%)

Mutual labels: named-entity-recognition, ner

Ner Evaluation

An implementation of full named-entity evaluation metrics based on SemEval'13 Task 9, scored not at the tag/token level but over all the tokens that make up a named entity

Stars: ✭ 126 (-35.38%)

Mutual labels: named-entity-recognition, ner

Dan Jurafsky Chris Manning Nlp

My solution to the Natural Language Processing course made by Dan Jurafsky, Chris Manning in Winter 2012.

Stars: ✭ 124 (-36.41%)

Mutual labels: named-entity-recognition, ner

Phonlp

PhoNLP: A BERT-based multi-task learning toolkit for part-of-speech tagging, named entity recognition and dependency parsing (NAACL 2021)

Stars: ✭ 56 (-71.28%)

Mutual labels: named-entity-recognition, ner

Ncrfpp

NCRF++, a Neural Sequence Labeling Toolkit. Easy to apply to any sequence labeling task (e.g. NER, POS, Segmentation). It includes character LSTM/CNN, word LSTM/CNN and softmax/CRF components.

Stars: ✭ 1,767 (+806.15%)

Mutual labels: named-entity-recognition, ner

Ner blstm Crf

LSTM-CRF for NER with CoNLL-2002 dataset

Stars: ✭ 51 (-73.85%)

Mutual labels: named-entity-recognition, ner

Sequence tagging

Named Entity Recognition (LSTM + CRF) - Tensorflow

Stars: ✭ 1,889 (+868.72%)

Mutual labels: named-entity-recognition, ner

Jointre

End-to-end neural relation extraction using deep biaffine attention (ECIR 2019)

Stars: ✭ 41 (-78.97%)

Mutual labels: named-entity-recognition, ner

Bond

BOND: BERT-Assisted Open-Domain Named Entity Recognition with Distant Supervision

Stars: ✭ 96 (-50.77%)

Mutual labels: named-entity-recognition, ner

Tf ner

Simple and Efficient Tensorflow implementations of NER models with tf.estimator and tf.data

Stars: ✭ 876 (+349.23%)

Mutual labels: named-entity-recognition, ner

Nlp Experiments In Pytorch

PyTorch repository for text categorization and NER experiments in Turkish and English.

Stars: ✭ 35 (-82.05%)

Mutual labels: named-entity-recognition, ner

Multilstm

keras attentional bi-LSTM-CRF for Joint NLU (slot-filling and intent detection) with ATIS

Stars: ✭ 122 (-37.44%)

Mutual labels: named-entity-recognition, ner

Bnlp

BNLP is a natural language processing toolkit for Bengali Language.

Stars: ✭ 127 (-34.87%)

Mutual labels: named-entity-recognition, ner

Bert-ChineseNER

Introduction

This project fine-tunes Google's open-source pretrained BERT model on the Chinese NER task.

Datasets & Model

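The labeled data used to train this model comes mainly from zjy-ucas's ChineseNER project. This project places a BERT model in front of the original BiLSTM+CRF framework, where it serves as the embedding feature-extraction layer. The pretrained Chinese BERT model and its code come from Google Research's bert repository.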

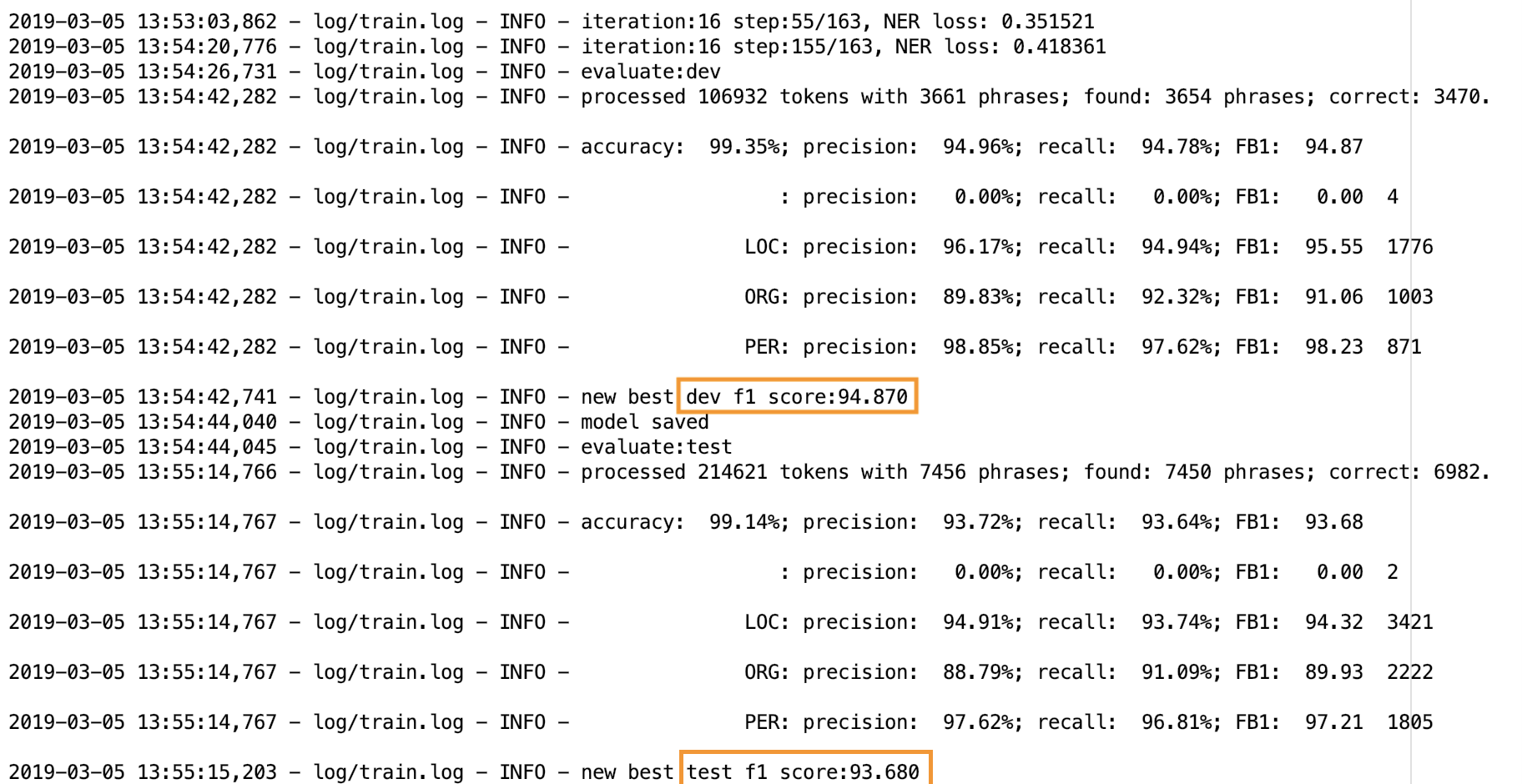

Results

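With BERT added, the F1 score on the validation set reaches 94.87 after 16 epochs of training, and 93.68 on the test set. On this dataset, that is an improvement of more than two percentage points in F1.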
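For readers unfamiliar with how an F1 score for NER is usually computed, the following is a minimal sketch of entity-level, micro-averaged F1. It assumes BIO-style tags such as `B-PER` and `I-PER`; the tag scheme and the function names `extract_entities` and `entity_f1` are illustrative assumptions, not the evaluation script this project actually uses.

```python
from typing import List, Set, Tuple

Entity = Tuple[str, int, int]  # (entity type, start index, inclusive end index)


def extract_entities(tags: List[str]) -> Set[Entity]:
    """Collect (type, start, end) spans from a BIO tag sequence.

    Assumption: an I- tag that does not continue an open entity of the
    same type is ignored rather than treated as a new entity.
    """
    entities: Set[Entity] = set()
    start, ent_type = None, None
    # A trailing "O" sentinel closes any entity still open at the end.
    for i, tag in enumerate(tags + ["O"]):
        continues = tag.startswith("I-") and tag[2:] == ent_type
        if start is not None and not continues:
            entities.add((ent_type, start, i - 1))
            start, ent_type = None, None
        if tag.startswith("B-"):
            start, ent_type = i, tag[2:]
    return entities


def entity_f1(gold: List[List[str]], pred: List[List[str]]) -> float:
    """Micro-averaged entity-level F1 over a list of tagged sentences."""
    n_gold = n_pred = n_correct = 0
    for g_tags, p_tags in zip(gold, pred):
        g, p = extract_entities(g_tags), extract_entities(p_tags)
        n_gold += len(g)
        n_pred += len(p)
        n_correct += len(g & p)  # exact match on type and boundaries
    if n_correct == 0:
        return 0.0
    precision = n_correct / n_pred
    recall = n_correct / n_gold
    return 2 * precision * recall / (precision + recall)


gold = [["B-PER", "I-PER", "O", "B-LOC", "I-LOC"]]
pred = [["B-PER", "I-PER", "O", "B-LOC", "O"]]
print(entity_f1(gold, pred))  # 0.5: one of two entities matches exactly
```

In this sketch an entity counts as correct only if both its type and its boundaries match, which is why the truncated `LOC` span in the example earns no credit.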

Train

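- Download the BERT model code and place it in this project's root directory.

- Download the pretrained Chinese BERT model, unzip it, and place it in this project's root directory.

- Set up the environment: Python 3 with TensorFlow 1.12.

- Run `python3 train.py` to train the model.

- Run `python3 predict.py` to test the model on a single sentence.

After setup, the project directory should look like the one shown in the figure.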
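Because the directory figure is not reproduced here, a small pre-flight check can help confirm that the downloads landed in the project root before training. The sketch below is only an illustration: the entries in `REQUIRED` are assumed names (the usual folder names of Google's BERT code and Chinese checkpoint, plus the two scripts mentioned above) and should be adjusted to the actual layout.

```python
from pathlib import Path
from typing import Iterable, List


def missing_paths(root: str, required: Iterable[str]) -> List[str]:
    """Return the entries of `required` that do not exist under `root`."""
    base = Path(root)
    return [name for name in required if not (base / name).exists()]


# Hypothetical layout (assumption): adjust to match the real project tree.
REQUIRED = ["bert", "chinese_L-12_H-768_A-12", "train.py", "predict.py"]

if __name__ == "__main__":
    missing = missing_paths(".", REQUIRED)
    if missing:
        print("Missing from project root:", ", ".join(missing))
    else:
        print("Layout looks complete.")
```

Run it from the project root; anything it reports as missing should be downloaded or moved before calling `python3 train.py`.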

Conclusion

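As shown above, using BERT improves the model's F1 score by more than two percentage points. Later testing also showed that the NER model trained with BERT generalizes better: for example, it recognizes company names that never appear in the training set. By contrast, a model trained with the BiLSTM+CRF framework on only the training set provided by ChineseNER is essentially unable to handle the OOV (out-of-vocabulary) problem.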

Fine-tune

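The current code uses feature-based transfer. It can be changed to fine-tuning, which should improve results by roughly one more point. To fine-tune, modify the code so that the BERT parameters in the model are trained together with the rest, and lower the learning rate to the order of 1e-5. Whether or not the BiLSTM is included has little effect on the results, so you can decode directly from BERT's output. Adding a CRF layer is still recommended, because it reinforces the transition rules between tags.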
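To illustrate what "reinforcing the transition rules between tags" means, here is a minimal pure-Python sketch of Viterbi decoding over per-token scores plus pairwise transition scores. It is not this project's TensorFlow code; the function name `viterbi_decode`, the toy scores, and the three-tag set are assumptions chosen for the example. Start and end transitions, which a full CRF layer normally also models, are left out for brevity.

```python
from typing import Dict, List, Tuple

NEG_INF = float("-inf")


def viterbi_decode(
    emissions: List[Dict[str, float]],
    transitions: Dict[Tuple[str, str], float],
    tags: List[str],
) -> List[str]:
    """Return the highest-scoring tag path.

    emissions[t][tag] is the per-token score (e.g. from the encoder);
    transitions[(prev, cur)] scores moving from prev to cur, and pairs
    missing from `transitions` score 0.
    """
    if not emissions:
        return []
    # best[t][tag] = best score of any path ending in `tag` at position t
    best = [{tag: emissions[0][tag] for tag in tags}]
    back: List[Dict[str, str]] = []
    for t in range(1, len(emissions)):
        scores: Dict[str, float] = {}
        pointers: Dict[str, str] = {}
        for cur in tags:
            prev_best = max(
                tags, key=lambda p: best[-1][p] + transitions.get((p, cur), 0.0)
            )
            scores[cur] = (
                best[-1][prev_best]
                + transitions.get((prev_best, cur), 0.0)
                + emissions[t][cur]
            )
            pointers[cur] = prev_best
        best.append(scores)
        back.append(pointers)
    # Trace back from the best final tag.
    last = max(tags, key=lambda tag: best[-1][tag])
    path = [last]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    return path[::-1]


tags = ["O", "B-PER", "I-PER"]
emissions = [
    {"O": 1.0, "B-PER": 0.5, "I-PER": 0.0},
    {"O": 0.2, "B-PER": 0.1, "I-PER": 1.0},
]
# Without transition scores, the invalid sequence O -> I-PER wins.
print(viterbi_decode(emissions, {}, tags))
# Forbidding O -> I-PER pushes the decoder to a valid sequence.
print(viterbi_decode(emissions, {("O", "I-PER"): NEG_INF}, tags))
```

With an empty transition table the decoder simply follows the strongest per-token scores and outputs `O, I-PER`, which is not a well-formed BIO sequence. Once the `O -> I-PER` transition is forbidden, the best path becomes `B-PER, I-PER`. A trained CRF layer learns such transition scores from data instead of having them set by hand.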

Reference

(1) https://github.com/zjy-ucas/ChineseNER
