Eugene-Mark / Bigdata File Viewer

License: MIT

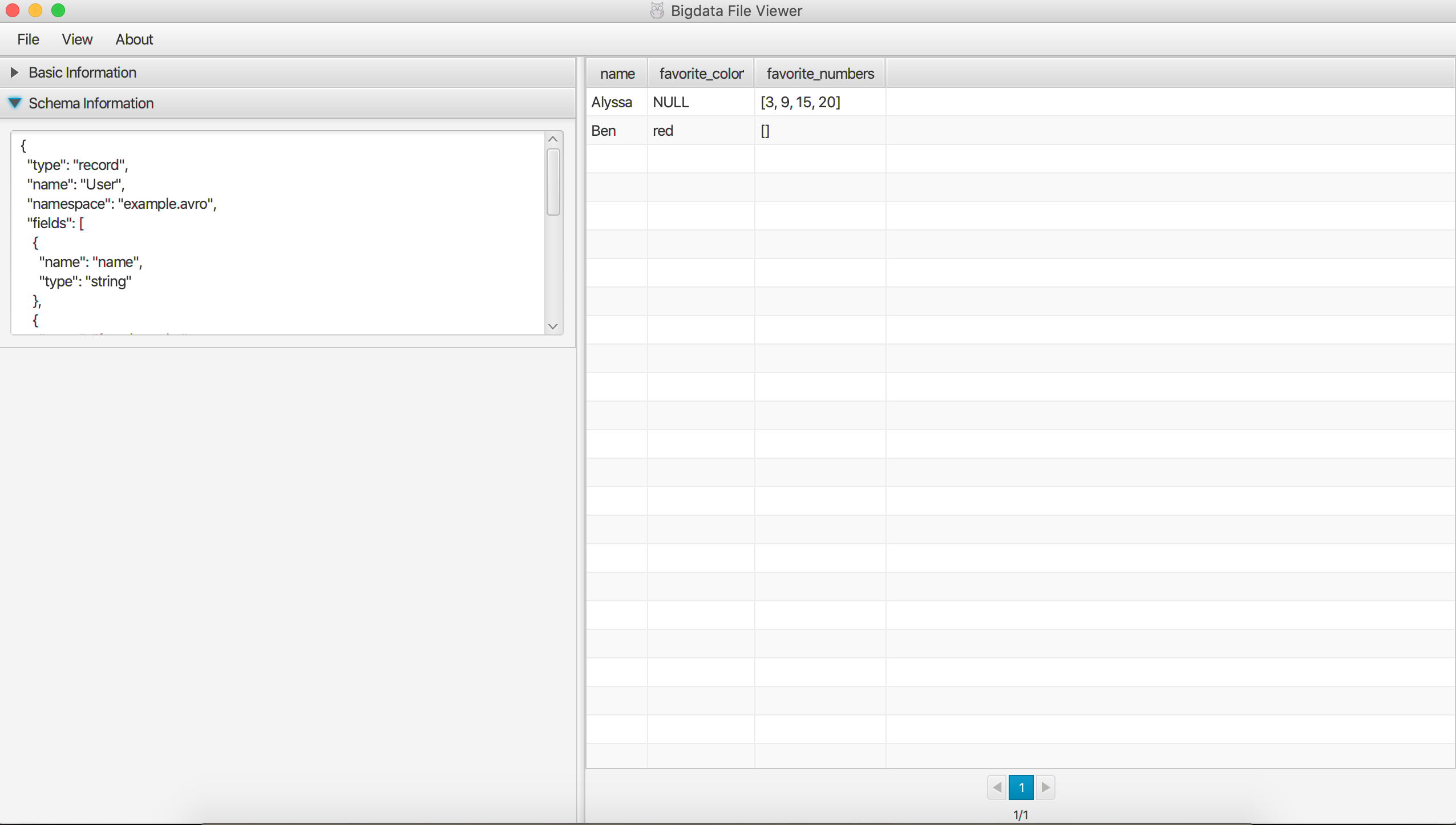

A cross-platform (Windows, Mac, Linux) desktop application for viewing common big data binary formats such as Parquet, ORC, and Avro. Supports the local file system, HDFS, AWS S3, Azure Blob Storage, etc.

Stars: ✭ 86

Programming Languages

java

68154 projects - #9 most used programming language

Projects that are alternatives of or similar to Bigdata File Viewer

columnify

Convert record-oriented data to a columnar format.

Stars: ✭ 28 (-67.44%)

Mutual labels: avro, bigdata, parquet

Devops Python Tools

80+ DevOps & Data CLI Tools - AWS, GCP, GCF Python Cloud Function, Log Anonymizer, Spark, Hadoop, HBase, Hive, Impala, Linux, Docker, Spark Data Converters & Validators (Avro/Parquet/JSON/CSV/INI/XML/YAML), Travis CI, AWS CloudFormation, Elasticsearch, Solr etc.

Stars: ✭ 406 (+372.09%)

Mutual labels: avro, parquet, hdfs

Rumble

⛈️ Rumble 1.11.0 "Banyan Tree"🌳 for Apache Spark | Run queries on your large-scale, messy JSON-like data (JSON, text, CSV, Parquet, ROOT, AVRO, SVM...) | No install required (just a jar to download) | Declarative Machine Learning and more

Stars: ✭ 58 (-32.56%)

Mutual labels: avro, parquet, hdfs

Gcs Tools

GCS support for avro-tools, parquet-tools and protobuf

Stars: ✭ 57 (-33.72%)

Mutual labels: avro, parquet

Ratatool

A tool for data sampling, data generation, and data diffing

Stars: ✭ 279 (+224.42%)

Mutual labels: avro, parquet

leaflet heatmap

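A simple visualization of call data for Huzhou. Assuming the data volume is too large to draw the heatmap directly in the browser, the heatmap rendering step is moved to offline computation and analysis. Apache Spark processes the data in parallel and then renders the heatmap; leafletjs then loads the OpenStreetMap layer and the heatmap layer to give a good interactive experience. The rendering is currently implemented with Apache Spark, but the parallel computation is slower than a single-machine run, perhaps because Apache Spark is not well suited to this kind of computation or because I did not design the algorithm well. The Apache Spark heatmap rendering and computation code is at https://github.com/yuanzhaokang/ParallelizeHeatmap.git .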

Stars: ✭ 13 (-84.88%)

Mutual labels: bigdata, hdfs

Choetl

ETL Framework for .NET / c# (Parser / Writer for CSV, Flat, Xml, JSON, Key-Value, Parquet, Yaml, Avro formatted files)

Stars: ✭ 372 (+332.56%)

Mutual labels: avro, parquet

God Of Bigdata

Focused on big data learning and interviews; start your path to big data mastery. Flink/Spark/Hadoop/Hbase/Hive...

Stars: ✭ 6,008 (+6886.05%)

Mutual labels: bigdata, hdfs

Big Data Engineering Coursera Yandex

Big Data for Data Engineers Coursera Specialization from Yandex

Stars: ✭ 71 (-17.44%)

Mutual labels: bigdata, hdfs

Bigdata Interview

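🎯 🌟[Big data interview questions] Big data interview questions I collected online, together with summaries of my own answers. Currently covers interview questions and knowledge summaries for the Hadoop/Hive/Spark/Flink/Hbase/Kafka/Zookeeper frameworks.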

Stars: ✭ 857 (+896.51%)

Mutual labels: bigdata, hdfs

DaFlow

Apache Spark based Data Flow (ETL) Framework that supports multiple read sources and write destinations of different types, as well as multiple categories of transformation rules.

Stars: ✭ 24 (-72.09%)

Mutual labels: avro, parquet

taller SparkR

SparkR workshop for the Jornadas de Usuarios de R (R Users Conference)

Stars: ✭ 12 (-86.05%)

Mutual labels: bigdata, hdfs

wasp

WASP is a framework to build complex real time big data applications. It relies on a kind of Kappa/Lambda architecture mainly leveraging Kafka and Spark. If you need to ingest huge amount of heterogeneous data and analyze them through complex pipelines, this is the framework for you.

Stars: ✭ 19 (-77.91%)

Mutual labels: hdfs, parquet

parquet-extra

A collection of Apache Parquet add-on modules

Stars: ✭ 30 (-65.12%)

Mutual labels: avro, parquet

Iceberg

Iceberg is a table format for large, slow-moving tabular data

Stars: ✭ 393 (+356.98%)

Mutual labels: avro, parquet

Hadoop For Geoevent

ArcGIS GeoEvent Server sample Hadoop connector for storing GeoEvents in HDFS.

Stars: ✭ 5 (-94.19%)

Mutual labels: bigdata, hdfs

bigdata-file-viewer

A cross-platform (Windows, Mac, Linux) desktop application for viewing common big data binary formats such as Parquet, ORC, and Avro. Supports the local file system, HDFS, AWS S3, etc.

Note: if you only want to view local big data binary files, release v1.1.1 is recommended. It is lightweight and has no dependency on the AWS SDK, Azure SDK, etc. You can also download data files from the AWS or Azure web portal before viewing them with this tool. The cloud storage SDKs are integrated into this tool mainly as a demo of how to use Java to read files from a specific storage system.

Feature List

- Open and view Parquet, ORC, and Avro files in a local directory, HDFS, AWS S3, etc.

- Convert binary format data to a text format such as CSV

- Support complex data types such as array, map, and struct

- Support multiple platforms: Windows, Mac, and Linux

- Code is extensible to support other data formats
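
Complex values such as arrays or maps, once rendered as text, can contain commas or quotes, so a CSV export needs to quote fields. The following is a minimal sketch of RFC 4180 style quoting, not the project's actual export code; the class `CsvRowWriter` and its methods are hypothetical names used only for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical helper for illustration; not taken from the project's source. */
final class CsvRowWriter {

    /** Quotes a field only when it contains a comma, a quote, or a line break. */
    static String escape(String field) {
        if (field == null) {
            return ""; // assumption: nulls are written as empty fields
        }
        boolean needsQuotes = field.contains(",") || field.contains("\"")
                || field.contains("\n") || field.contains("\r");
        if (!needsQuotes) {
            return field;
        }
        // Embedded quotes are doubled, then the whole field is wrapped in quotes.
        return "\"" + field.replace("\"", "\"\"") + "\"";
    }

    /** Joins already-stringified column values into one CSV line. */
    static String toCsvLine(List<String> fields) {
        return fields.stream()
                .map(CsvRowWriter::escape)
                .collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        // A row with a plain value, a list-like value, and a value with quotes.
        System.out.println(toCsvLine(Arrays.asList("1", "[a, b]", "say \"hi\"")));
    }
}
```

Quoting only when necessary keeps simple rows readable while still letting spreadsheet tools parse values that contain separators.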

Usage

- Download the runnable jar from the release page, or follow the Build section to build it from source code.

- Invoke it with `java -jar BigdataFileViewer-1.2-SNAPSHOT-jar-with-dependencies.jar`

- Open a binary format file via "File" -> "Open". Currently, it can open files with the parquet, orc, and avro suffixes. If no suffix is specified, the tool will try to read the file as Parquet.

- Set the maximum number of rows per page via "View" -> "Input maximum row number" -> "Go"

- Set visible properties via "View" -> "Add/Remove Properties"

- Convert to a CSV file via "File" -> "Save as" -> "CSV"

- Check schema information by unfolding the "Schema Information" panel
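
The suffix rule described in the usage steps can be sketched as follows. This is an illustration under stated assumptions, not the tool's real implementation: `FormatDetector` and its `Format` enum are hypothetical names, and treating an unrecognized suffix the same as a missing one is an assumption that the text above does not confirm.

```java
import java.util.Locale;

/** Hypothetical helper for illustration; not taken from the project's source. */
final class FormatDetector {

    enum Format { PARQUET, ORC, AVRO }

    /** Picks a format from the file name's suffix, falling back to PARQUET. */
    static Format detect(String fileName) {
        String name = fileName.toLowerCase(Locale.ROOT);
        int dot = name.lastIndexOf('.');
        String suffix = dot >= 0 ? name.substring(dot + 1) : "";
        switch (suffix) {
            case "orc":
                return Format.ORC;
            case "avro":
                return Format.AVRO;
            default:
                // No suffix: try Parquet, as the usage notes describe.
                // Unknown suffix: also Parquet, which is an assumption.
                return Format.PARQUET;
        }
    }

    public static void main(String[] args) {
        for (String f : new String[] {"events.orc", "users.avro", "part-00000"}) {
            System.out.println(f + " -> " + detect(f));
        }
    }
}
```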

Build

- Use `mvn package` to build an all-in-one runnable jar

- Java 1.8 or higher is required

- Make sure your Java installation includes JavaFX. For example, the OpenJDK 1.8 I installed on Ubuntu 18.04 did not bundle JavaFX, so I installed it separately following guide here.
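
One quick way to check whether a given Java runtime can see JavaFX is to try loading a JavaFX class by name. The sketch below assumes the standard class `javafx.application.Application` is what the viewer needs; the helper `JavaFxCheck` is a hypothetical name and not part of the project.

```java
/** Hypothetical helper for illustration; not part of the project. */
final class JavaFxCheck {

    /** Returns true if the named class can be found without initializing it. */
    static boolean isClassAvailable(String className) {
        try {
            Class.forName(className, false, JavaFxCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        boolean hasJavaFx = isClassAvailable("javafx.application.Application");
        System.out.println(hasJavaFx
                ? "JavaFX found"
                : "JavaFX missing: install OpenJFX or use a JDK that bundles it");
    }
}
```

Compile and run it with the same `java` binary you plan to use for the viewer, since different installed JDKs may give different results.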

Screenshots
