
A Universe of Sorts


Siddharth Bhat


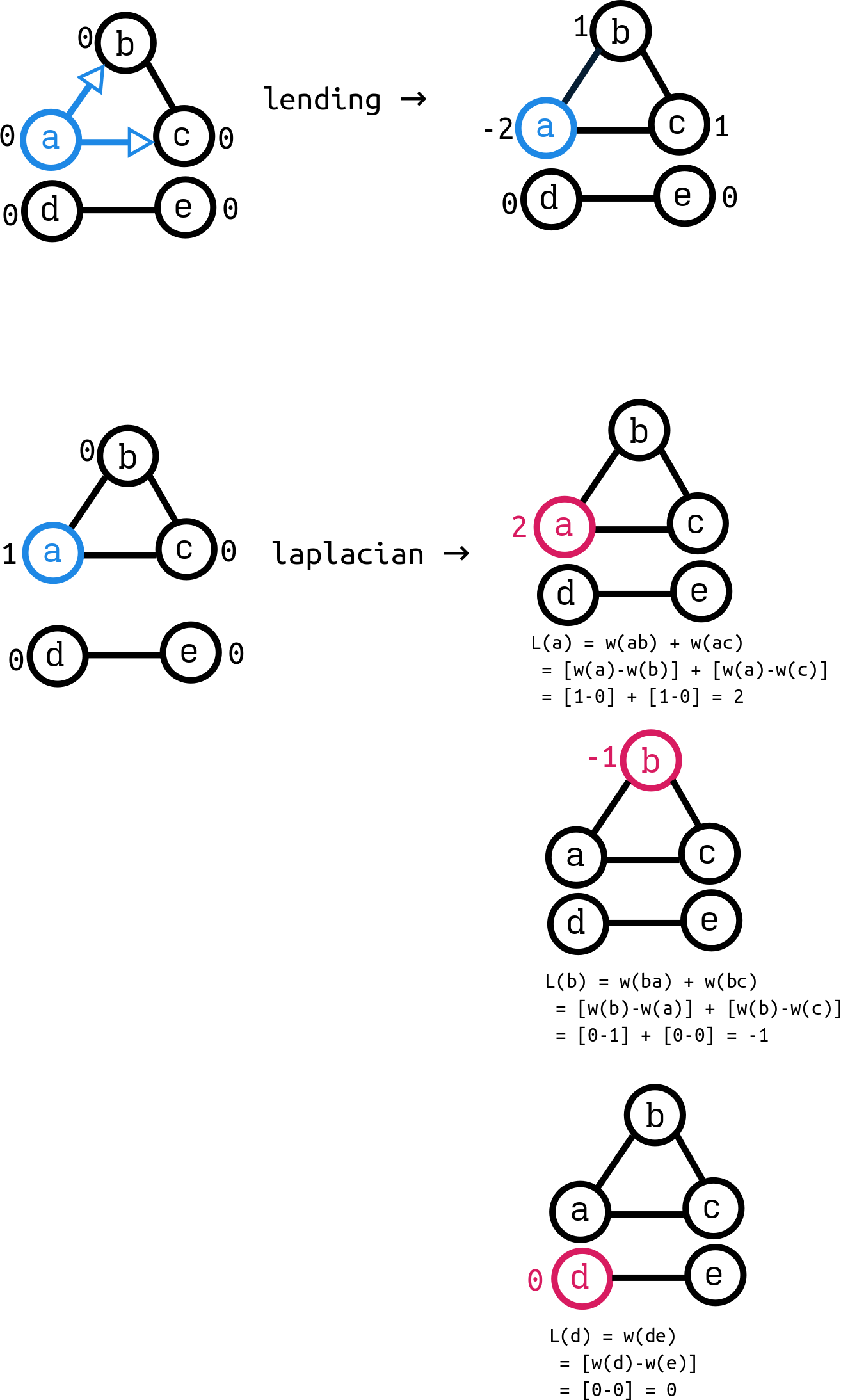

Covariant hom is left exact

Internal versus External semidirect products

Say we have an inner semidirect product. This means we have subgroups $N, K$ of $G$ such that $NK = G$, $N$ is normal in $G$, and $N \cap K = \{ e \}$. Given such conditions, we can realize $G$ as a semidirect product of $N$ and $K$, where the action of $K$ on $N$ is given by conjugation in $G$. So, concretely, let's think of $N$ (as an abstract group) and $K$ (as an abstract group) with $K$ acting on $N$ (by conjugation inside $G$). We write the action of $k$ on $n$ as $n^k \equiv knk^{-1}$. We then have a map $\phi: N \ltimes K \rightarrow G$ given by $\phi((n, k)) = nk$. To check that this is a homomorphism, let's take $s, s' \in N \ltimes K$, with $s \equiv (n, k)$ and $s' \equiv (n', k')$. Then we get:

$$ \begin{aligned} &\phi(ss') \\ &= \phi((n, k) \cdot (n', k')) \\ &\text{definition of semidirect product via conjugation:} \\ &= \phi((n {n'}^k, kk')) \\ &\text{definition of $\phi$:} \\ &= n {n'}^{k} kk' \\ &\text{definition of ${n'}^k = k n' k^{-1}$:} \\ &= n k n' k^{-1} k k' \\ &= n k n' k' \\ &= \phi(s) \phi(s') \end{aligned} $$

So, $\phi$ really is a homomorphism from the external description (given in terms of the conjugation) to the internal description (given in terms of the multiplication).

We can also go in the other direction: start from the internal definition and recover the conjugation. Let $g \equiv nk$ and $g' \equiv n'k'$. We want to multiply them, and show that the product is again of the form $nk$ with $n \in N$, $k \in K$:

$$ \begin{aligned} &gg' \\ &= (n k) (n' k') \\ &= n k n' k' \\ &\text{insert $k^{-1}k$:} \\ &= n k n' k^{-1} k k' \\ &= n (k n' k^{-1}) k k' \\ &\text{$N$ is normal, so $k n' k^{-1}$ is some other element $n'' \in N$:} \\ &= n n'' k k' \in NK \end{aligned} $$

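So the set $NK$ of such products is closed under multiplication, and the rule $(nk)(n'k') = (n \cdot k n' k^{-1})(kk')$ is exactly the conjugation twist of the external semidirect product. We can check that the other group properties hold as well.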
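As a quick sanity check of the external-to-internal correspondence above, here is a minimal Python sketch. It assumes a concrete example that is not in the text: $G = S_3$ (permutations of $\{0, 1, 2\}$ stored as tuples), $N = A_3$, and $K$ generated by one transposition. The helper names (`compose`, `act`, `external_mul`, `phi`) are hypothetical.

```python
from itertools import permutations

def compose(p, q):
    """Group product p*q of permutations stored as tuples: apply q first, then p."""
    return tuple(p[q[i]] for i in range(len(q)))

def inverse(p):
    """Inverse permutation."""
    inv = [0] * len(p)
    for i, image in enumerate(p):
        inv[image] = i
    return tuple(inv)

G = set(permutations(range(3)))
e = (0, 1, 2)
N = {e, (1, 2, 0), (2, 0, 1)}  # identity and the two 3-cycles (A_3), normal in S_3
K = {e, (1, 0, 2)}             # generated by the transposition swapping 0 and 1

def act(k, n):
    """n^k = k n k^{-1}: K acts on N by conjugation inside G."""
    return compose(compose(k, n), inverse(k))

def external_mul(x, y):
    """(n, k) * (n', k') = (n n'^k, k k') in the external semidirect product."""
    (n1, k1), (n2, k2) = x, y
    return (compose(n1, act(k1, n2)), compose(k1, k2))

def phi(x):
    """phi((n, k)) = nk, from the external product into G."""
    n, k = x
    return compose(n, k)

pairs = [(n, k) for n in N for k in K]

# phi is a homomorphism: phi(x * y) == phi(x) phi(y) for all pairs.
is_hom = all(phi(external_mul(x, y)) == compose(phi(x), phi(y))
             for x in pairs for y in pairs)
# phi hits every element of G exactly once, since NK = G and N, K meet only in e.
is_bijective = len({phi(x) for x in pairs}) == len(G) == len(pairs)
print(is_hom, is_bijective)  # True True
```

Swapping in a different normal subgroup and complement only requires changing `N` and `K`.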

Splitting of semidirect products in terms of projections

Say we have an exact sequence that splits:

$$ 0 \rightarrow N \xrightarrow{i} G \xrightarrow{\pi} K \rightarrow 0 $$

with the section given by $s: K \rightarrow G$ such that $\forall k \in K, \pi(s(k)) = k$. Then we can consider the map $\pi_k \equiv s \circ \pi: G \rightarrow G$. See that this first projects down to $K$, and then re-embeds the value in $G$. The cool thing is that this map is idempotent (so it's a projection!). Compute:

$$ \begin{aligned} &\pi_k \circ \pi_k \\ &= (s \circ \pi) \circ (s \circ \pi) \\ &= s \circ (\pi \circ s) \circ \pi \\ &= s \circ id \circ \pi \\ &= s \circ \pi = \pi_k \end{aligned} $$

So this "projects onto the $k$ value". We can then extract the $N$ component as $\pi_n: G \rightarrow G; \pi_n(g) \equiv g \cdot \pi_k(g)^{-1}$.

Tensor is right exact

Consider an exact sequence

$$ 0 \rightarrow A \xrightarrow{f} B \xrightarrow{g} C \rightarrow 0 $$

We wish to consider the operation of tensoring with some ring (or, more generally, module) $R$. A module morphism $h: P \rightarrow Q$ induces a new morphism $R \otimes h: R \otimes P \rightarrow R \otimes Q$ defined by $(R \otimes h)(r \otimes p) \equiv r \otimes h(p)$.

So we wish to contemplate the sequence:

$$ R \otimes A \xrightarrow{R \otimes f} R \otimes B \xrightarrow{R \otimes g} R \otimes C $$

We want to see whether it is left exact, right exact, or both. Consider the classic sequence of modules over $\mathbb Z$:

A detailed example

$$ 0 \rightarrow 2\mathbb Z \xrightarrow{i} \mathbb Z \xrightarrow{\pi} \mathbb Z / 2\mathbb Z \rightarrow 0 $$

Here $i$ is the inclusion and $\pi$ is the projection. This is an exact sequence, since it is of the form kernel $\rightarrow$ ring $\rightarrow$ quotient. We have three natural choices to tensor with: $\mathbb Z$, $2\mathbb Z$, $\mathbb Z/2\mathbb Z$. By analogy with fields, tensoring with the base ring $\mathbb Z$ is unlikely to produce anything of interest (it gives back the same sequence). $2\mathbb Z$ may be more interesting, but see that the map $1 \in \mathbb Z \mapsto 2 \in 2 \mathbb Z$ gives us an isomorphism between the two modules. That leaves us with the final and most interesting choice (the one with torsion), $\mathbb Z / 2\mathbb Z$. So let's tensor with it:

$$ \mathbb Z/2\mathbb Z \otimes 2\mathbb Z \xrightarrow{i'} \mathbb Z/2\mathbb Z \otimes \mathbb Z \xrightarrow{\pi'} \mathbb Z/2\mathbb Z \otimes \mathbb Z / 2\mathbb Z $$

- See that $\mathbb Z/2\mathbb Z \otimes 2 \mathbb Z$ has elements of the form $0 \otimes * = 0$. We might imagine that the whole module collapses, since $1 \otimes 2k = 2(1 \otimes k) = 2 \otimes k = 0$ (because $2 = 0$ in $\mathbb Z/2\mathbb Z$). But this is in fact incorrect! The step $1 \otimes 2k = 2(1 \otimes k)$ is illegal, since $k \notin 2\mathbb Z$ in general. Think of the element $1 \otimes 2$: we cannot factorize it as $2(1 \otimes 1)$, since $1 \notin 2 \mathbb Z$. So the element $1 \otimes 2 \in \mathbb Z/2\mathbb Z \otimes 2 \mathbb Z$ survives; in fact $\mathbb Z/2\mathbb Z \otimes 2\mathbb Z \simeq \mathbb Z/2\mathbb Z$, generated by $1 \otimes 2$.

- See that $\mathbb Z/2\mathbb Z \otimes \mathbb Z \simeq \mathbb Z/2\mathbb Z$: factorize $k \otimes l = l(k \otimes 1) = kl \otimes 1$, so every element is of the form $m \otimes 1$ with $m \in \mathbb Z/2\mathbb Z$.

- Similarly, see that $\mathbb Z/2\mathbb Z \otimes \mathbb Z/2\mathbb Z \simeq \mathbb Z/2\mathbb Z$: the elements $0 \otimes 0, 0 \otimes 1, 1 \otimes 0$ are $0$, and $1 \otimes 1$ corresponds to $1$.

- In general, let's investigate elements $a \otimes b \in \mathbb Z/n\mathbb Z \otimes \mathbb Z/m\mathbb Z$. We can write this as $ab(1 \otimes 1)$. The $1 \otimes 1$ gives us a "machine" to reduce the number by $n$ and by $m$. So if we first reduce by $n$, we are left with $r$ (for remainder), where $ab = \alpha n + r$. We can then reduce $r$ by $m$ to get $ab = \alpha n + \beta m + r'$. So if $r' = 0$, then we get $ab = \alpha n + \beta m$. But every number of the form $\alpha n + \beta m$ is divisible by $\gcd(n, m)$, and conversely (Bézout) $\gcd(n, m)$ itself can be written as $\alpha n + \beta m$. Hence exactly the multiples of $\gcd(n, m)$ are sent to zero, and the rest of the action follows from this. So $\mathbb Z/n\mathbb Z \otimes \mathbb Z/m\mathbb Z \simeq \mathbb Z/\gcd(n, m)\mathbb Z$.

- In fact, we can combine the above with the facts that (1) finitely generated abelian groups are direct sums of cyclic groups, and (2) tensor products distribute over direct sums. This lets us decompose tensor products of all finitely generated abelian groups into cyclic groups.

- This gives us another heuristic argument for why $\mathbb Z \otimes \mathbb Z/2\mathbb Z \simeq \mathbb Z/2 \mathbb Z$. We should think of $\mathbb Z$ as $\mathbb Z/\infty\mathbb Z$, since it has "no torsion", or "torsion at infinity". So the tensor product should be $\mathbb Z/\gcd(2, \infty)\mathbb Z = \mathbb Z/2\mathbb Z$.

- Now see that the first two terms of the tensored sequence give us a map $\mathbb Z/2\mathbb Z \otimes 2\mathbb Z \xrightarrow{i'} \mathbb Z/2\mathbb Z \otimes \mathbb Z$, which sends:

$$ \begin{aligned} &x \otimes 2k \mapsto x \otimes 2k \in \mathbb Z/2\mathbb Z \otimes \mathbb Z \\ &= 2 (x \otimes k) \\ &= (2x \otimes k) \\ &= 0 \otimes k = 0 \end{aligned} $$

- So the induced map $i'$ is identically zero! It is not injective, since it kills everything, including the nonzero element $1 \otimes 2$. Intuitively, the "doubling" that is latent in $2\mathbb Z$ is "freed" when injecting into $\mathbb Z$. This latent energy explodes on contact with $\mathbb Z/2 \mathbb Z$, giving zero. So the sequence is no longer left exact, since the map is not injective!

- Great, let's continue, and inspect the tail end $\mathbb Z/2\mathbb Z \otimes \mathbb Z \xrightarrow{\pi'} \mathbb Z/2\mathbb Z \otimes \mathbb Z / 2\mathbb Z$. Here, we send the element $x \otimes y \mapsto x \otimes (y \bmod 2)$. This clearly hits all the elements: for example, $0 \otimes 0$ is the image of $0 \otimes 2k$, and $1 \otimes 1$ is the image of (predictably) $1 \otimes (2k+1)$. Hence, the map is surjective.
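The gcd rule and the decomposition into cyclic groups from the bullets above can be sketched in Python. Two assumptions here go beyond the text: we use the fact that $\mathbb Z/n\mathbb Z \otimes \mathbb Z/m\mathbb Z$ is cyclic, generated by $1 \otimes 1$, with $d(1 \otimes 1) = 0$ exactly when $d \in n\mathbb Z + m\mathbb Z$; and, in place of the "$\mathbb Z/\infty\mathbb Z$" heuristic, we encode $\mathbb Z$ as $\mathbb Z/0\mathbb Z$, which works because `math.gcd(0, n) == n`. The function names are hypothetical.

```python
from collections import Counter
from math import gcd

def order_of_one_tensor_one(n: int, m: int) -> int:
    """Order of 1 (x) 1 in Z/nZ (x) Z/mZ, for n, m >= 1.

    d(1 (x) 1) = 0 exactly when d = alpha*n + beta*m, so we search for the
    smallest positive such combination. Bezout coefficients can be chosen
    with |alpha| <= m and |beta| <= n, so this search range is enough.
    """
    bound = max(n, m)
    combos = {alpha * n + beta * m
              for alpha in range(-bound, bound + 1)
              for beta in range(-bound, bound + 1)}
    return min(c for c in combos if c > 0)

def tensor_cyclic_decomposition(a, b):
    """Cyclic summands of A (x) B for A = sum_i Z/a_i Z and B = sum_j Z/b_j Z.

    Tensor distributes over direct sums, and Z/p (x) Z/q = Z/gcd(p, q).
    The order 0 encodes Z = Z/0Z, so gcd(0, q) = q gives Z (x) Z/q = Z/q.
    Returns a Counter mapping each order to its multiplicity.
    """
    summands = Counter(gcd(p, q) for p in a for q in b)
    summands.pop(1, None)  # Z/1Z is the zero group, so it contributes nothing
    return summands

print(order_of_one_tensor_one(4, 6))             # 2
print(tensor_cyclic_decomposition([0], [2]))     # Counter({2: 1})
print(tensor_cyclic_decomposition([0, 4], [6]))  # Counter({6: 1, 2: 1})
```

The brute-force search is only there to mirror the "reduce by $n$, then by $m$" machine; in practice one would call `gcd` directly.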

So finally, we have the exact sequence:

$$ \mathbb Z/2\mathbb Z \otimes 2\mathbb Z \xrightarrow{i'} \mathbb Z/2\mathbb Z \otimes \mathbb Z \xrightarrow{\pi'} \mathbb Z/2\mathbb Z \otimes \mathbb Z / 2\mathbb Z \rightarrow 0 $$

We do NOT have the initial $(0 \rightarrow \dots)$, since $i'$ is no longer injective. It fails injectivity as badly as possible, since $i'(x) = 0$ for every $x$. So tensoring is not left exact; it is RIGHT EXACT: it takes right exact sequences to right exact sequences!
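To make the example concrete, here is a minimal Python sketch in coordinates. It assumes the identifications from the bullets above, each of the three modules being $\simeq \mathbb Z/2\mathbb Z$: $x \otimes 2k \mapsto xk \bmod 2$ on $\mathbb Z/2\mathbb Z \otimes 2\mathbb Z$, $x \otimes k \mapsto xk \bmod 2$ on $\mathbb Z/2\mathbb Z \otimes \mathbb Z$, and $x \otimes y \mapsto xy \bmod 2$ on $\mathbb Z/2\mathbb Z \otimes \mathbb Z/2\mathbb Z$. The function names are hypothetical.

```python
def source_coord(x, k):
    """Coordinate of x (x) 2k in Z/2Z (x) 2Z (generated by 1 (x) 2)."""
    return (x * k) % 2

def i_prime(x, k):
    """Coordinate in Z/2Z (x) Z of i'(x (x) 2k) = x (x) 2k."""
    return (x * 2 * k) % 2

def pi_prime(x, k):
    """Coordinate in Z/2Z (x) Z/2Z of pi'(x (x) k) = x (x) (k mod 2)."""
    return (x * (k % 2)) % 2

samples = [(x, k) for x in range(2) for k in range(-4, 5)]

# 1 (x) 2 is a nonzero element of the source ...
print(source_coord(1, 1))                            # 1
# ... yet i' sends every element to zero, so i' is not injective.
print({i_prime(x, k) for x, k in samples})           # {0}
# pi' hits both elements of Z/2Z (x) Z/2Z, so it is surjective.
print(sorted({pi_prime(x, k) for x, k in samples}))  # [0, 1]
```

In these coordinates $\pi'$ is the identity of $\mathbb Z/2\mathbb Z$, so its kernel is $0 = im(i')$, which is exactness in the middle.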

The general proof

Given the sequence:

$$ A \xrightarrow{i} B \xrightarrow{\pi} C \rightarrow 0 $$

We need to show that the following sequence is exact:

$$ R \otimes A \xrightarrow{i'} R \otimes B \xrightarrow{\pi'} R \otimes C \rightarrow 0 $$

- First, to see that $\pi'$ is surjective, consider a pure tensor $r \otimes c \in R \otimes C$ (these generate $R \otimes C$, so it suffices to hit them). Since $\pi$ is surjective, there is some element $b \in B$ such that $\pi(b) = c$. So the element $r \otimes b \in R \otimes B$ maps to $r \otimes c$ under $\pi'$: $\pi'(r \otimes b) = r \otimes \pi(b) = r \otimes c$ (by the definition of $\pi'$ and the choice of $b$). This proves that $R \otimes B \xrightarrow{\pi'} R \otimes C \rightarrow 0$ is exact.

- Next, we need to show that $im(i') = ker(\pi')$.

- To show that $im(i') \subseteq ker(\pi')$, it suffices to consider a pure tensor $r \otimes a \in R \otimes A$, since these generate $R \otimes A$. Now compute:

$$ \begin{aligned} &\pi'(i'(r \otimes a)) \\ &= \pi'(r \otimes i(a)) \\ &= r \otimes \pi(i(a)) \\ &\text{by exactness of $A \xrightarrow{i} B \xrightarrow{\pi} C$, $\pi(i(a)) = 0$:} \\ &= r \otimes 0 \\ &= 0 \end{aligned} $$ So every element of $im(i')$, being a sum of elements $i'(r \otimes a)$, lies in the kernel of $\pi'$.

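Next, let's show $ker(\pi') \subseteq im(i')$. This is the "hard part" of the proof, so let's take a different route. Since $im(i') \subseteq ker(\pi')$, the map $\pi'$ descends to a surjection $coker(i') = (R \otimes B)/im(i') \rightarrow R \otimes C$, whose kernel is $ker(\pi')/im(i')$. So I claim that $im(i') = ker(\pi')$ iff this induced map makes $coker(i') \simeq R \otimes C$. In one direction, this follows because: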

$$ \begin{aligned} &coker(i') = (R \otimes B)/ im(i') \\ &\text{since } im(i') = ker(\pi'): \\ &= (R \otimes B)/ker(\pi') \\ &\text{isomorphism theorem:} \\ &\simeq im(\pi') \\ &\text{$\pi'$ is surjective:} \\ &= R \otimes C \end{aligned} $$

Conversely, if I show that the map induced by $\pi'$ gives $coker(i') \simeq R \otimes C$, then its kernel $ker(\pi')/im(i')$ is trivial, and so $im(i') = ker(\pi')$. So let's prove this:

$$ \begin{aligned} &coker(i') = (R \otimes B)/ im(i') \\ &= (R \otimes B)/i'(R \otimes A) \\ &\text{definition of $i'$:} \\ &= (R \otimes B)/(R \otimes i(A)) \end{aligned} $$

I claim that $(R \otimes B)/(R \otimes i(A)) \simeq R \otimes (B/i(A))$ (informally, "take $R$ common"), where $R \otimes i(A)$ denotes the submodule of $R \otimes B$ generated by the elements $r \otimes i(a)$. Define the quotient map $q: B \rightarrow B/i(A)$. This is a legal quotient map because $i(A) = im(i) = ker(\pi)$ is a submodule of $B$.

$$ \begin{aligned} &q : B \rightarrow B/i(A) \\ &f: R \otimes B \twoheadrightarrow R \otimes (B / i(A)) \\ &f(r \otimes b) = r \otimes q(b) \\ &r \otimes b \in R \otimes B \xrightarrow{f = R \otimes q} r \otimes q(b) \in R \otimes B/i(A) \end{aligned} $$

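Let's now study $ker(f)$. It is tempting to argue that $r \otimes q(b) = 0$ forces $q(b) = 0$, but that need not hold, and a general element of $R \otimes B$ is a sum of pure tensors rather than a single one. Instead, argue as follows. First, $f$ kills $R \otimes i(A)$, since $f(r \otimes i(a)) = r \otimes q(i(a)) = r \otimes 0 = 0$, so $f$ descends to a map $\bar f: (R \otimes B)/(R \otimes i(A)) \rightarrow R \otimes (B/i(A))$. To invert $\bar f$, define $g(r, b + i(A)) \equiv [r \otimes b]$. This is well defined: replacing $b$ by $b + i(a)$ changes $r \otimes b$ by $r \otimes i(a) \in R \otimes i(A)$. It is bilinear, so it induces a map $\bar g: R \otimes (B/i(A)) \rightarrow (R \otimes B)/(R \otimes i(A))$, and $\bar f$ and $\bar g$ are inverse to each other on pure tensors, hence everywhere. In particular, $ker(f) = R \otimes i(A)$. Also, every element $r \otimes (b + i(A)) \in R \otimes (B/i(A))$ has the preimage $r \otimes b \in R \otimes B$, so the map $f$ is surjective, and $im(f) = R \otimes (B/i(A))$. Combining the two facts, we get: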

$$ \begin{aligned} &domain(f)/ker(f) \simeq im(f) \\ &(R \otimes B)/(R \otimes i(A)) \simeq R \otimes (B/i(A)) \\ &coker(i') = (R \otimes B)/(R \otimes i(A)) \simeq R \otimes (B/i(A)) \simeq R \otimes C \end{aligned} $$ where the last step uses $B/i(A) = B/ker(\pi) \simeq C$, since $\pi$ is surjective.

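Hence, $coker(i') \simeq R \otimes C$. Chasing a pure tensor through these isomorphisms gives $[r \otimes b] \mapsto r \otimes q(b) \mapsto r \otimes \pi(b) = \pi'(r \otimes b)$, so this is the map induced by $\pi'$, and therefore $im(i') = ker(\pi')$.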

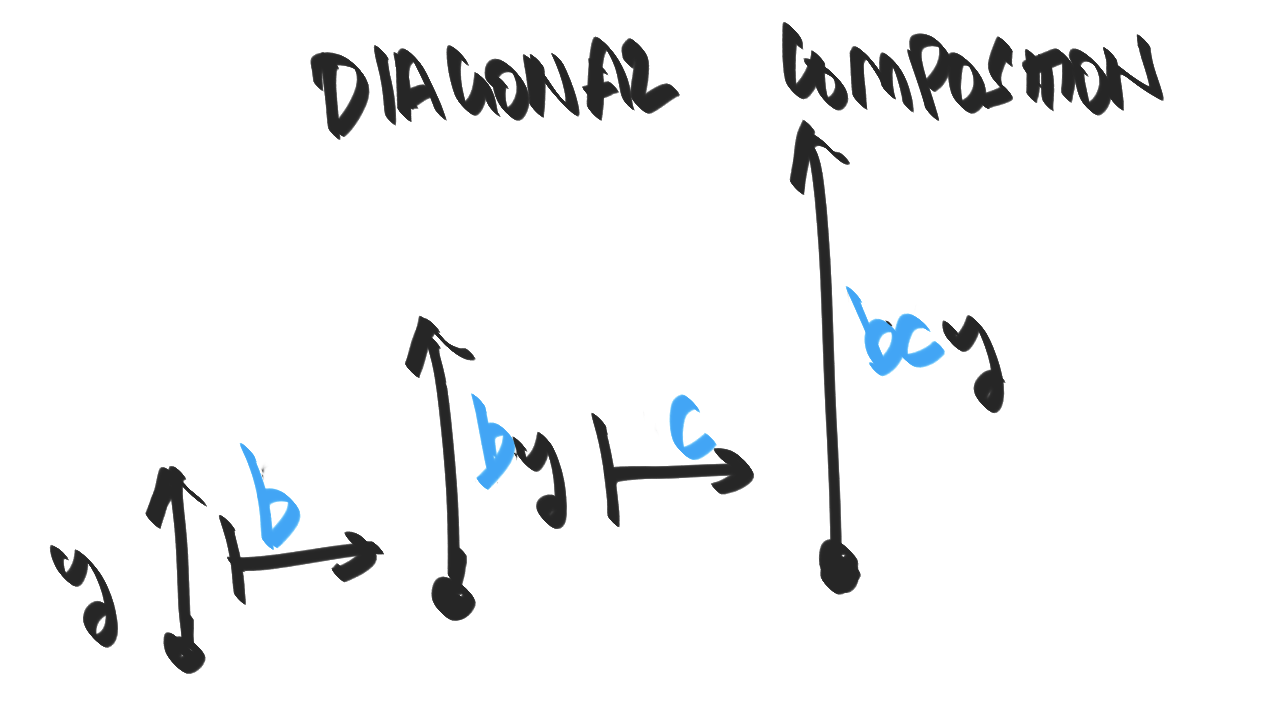

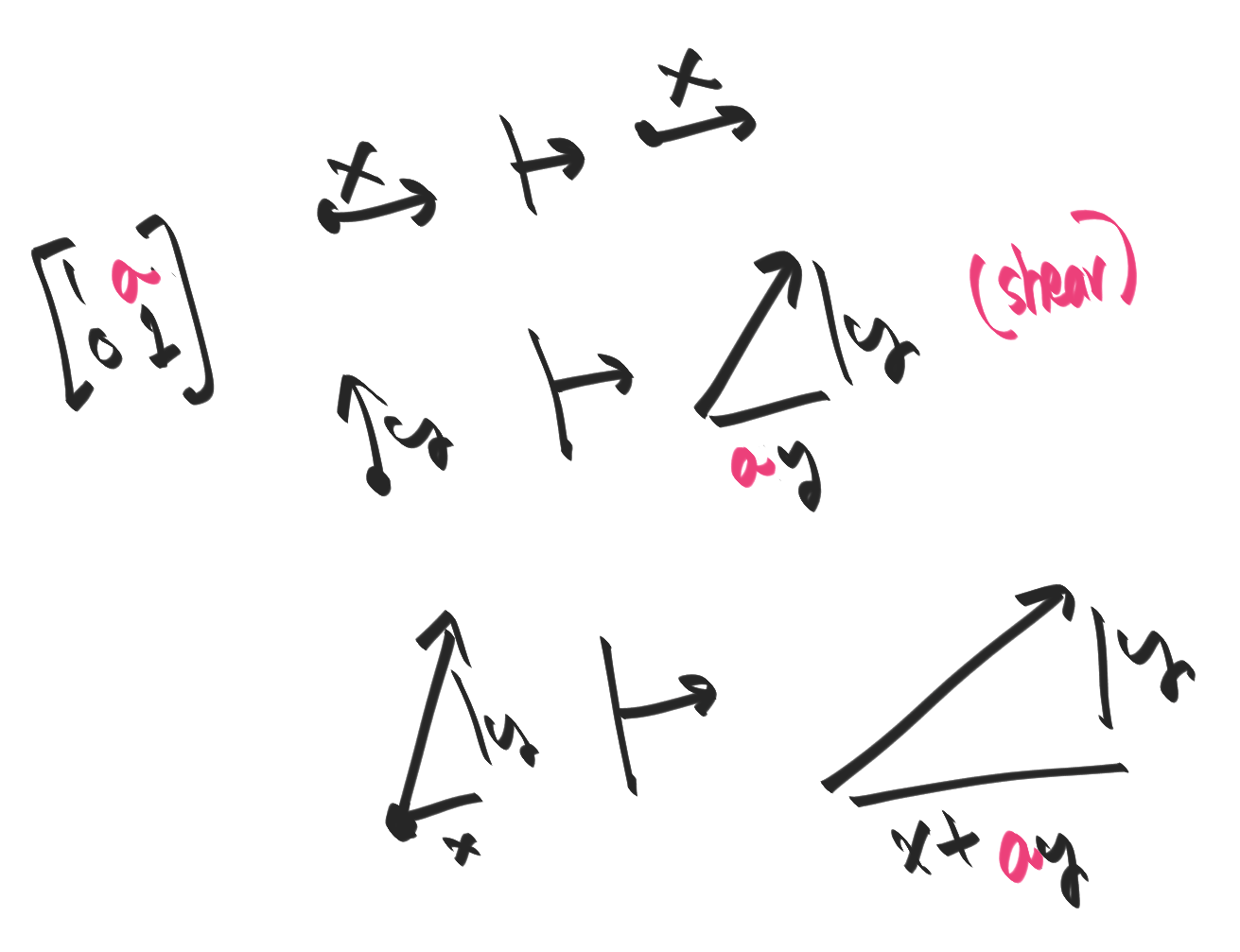

Semidirect product as commuting conditions

Recall that in $N \ltimes K = G$, $N$ is normal. This is from the mnemonic that it looks like $N \triangleleft G$, or from the fact that the acting/twisting subgroup $K$ is a fish that wants to "eat"/act on the normal subgroup $N$.

So, we have $knk^{-1} \in N$ as $N$ is normal, thus $knk^{-1} = n'$. This can be written as $kn = n'k$. So:

- When commuting, the element that gets changed/twisted is the one in the normal subgroup. This is because the normal subgroup has the requisite constraint on it to be twistable.

- The element that remains invariant is the actor.

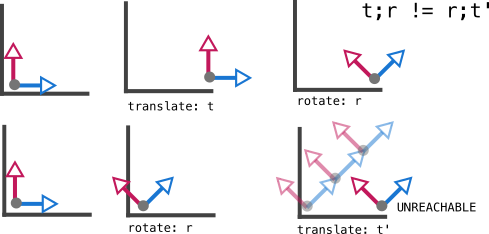

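In the case of translations and rotations, it's the translations that are normal. This can be seen by noticing that conjugating a translation by a rotation gives another translation (by the rotated vector), while conjugating a rotation about the origin by a translation gives a rotation about a different point, so rotations don't "look normal". Alternatively, one can try to consider translate-rotate versus rotate-translate.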

- First rotating by $r$ and then translating by $t$ along the x-axis has the same effect as first translating by $t' = r^{-1}(t)$ (the vector $t$ rotated backwards by $r$; at 45 degrees to the x-axis in the picture), and then rotating by the **same** $r$.


- This raises the question: is there some other translation $t''$ and some other rotation $r''$ such that $t''; r''$ ($t''$ first, $r''$ next) has the same effect as $r; t$ ($r$ first, $t$ next)?

- First let's translate by $t$ along the x-axis and then rotate by $r$. Now let's think: if we wanted to rotate and then translate, what rotation would we start with? It would HAVE TO BE $r$, since there's no other way to get the axis at such an angle in the final state. But if we rotate by $r$ first, the original translation $t$ no longer gets us to the final state we want; we must use the rotated translation $r(t)$ instead. So any rotation;translation pair that mimics our starting translation;rotation keeps the rotation fixed and twists the translation.
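The commuting rule for rotations and translations can be checked numerically. This minimal Python sketch models the plane as complex numbers, rotations about the origin as multiplication by $e^{i\theta}$, and translations as addition; the clockwise 45-degree angle and the helper names (`rotation`, `translation`, `then`, `same_map`) are assumptions for illustration.

```python
import cmath

def rotation(theta):
    """Rotation about the origin by theta radians, acting on points z of the complex plane."""
    w = cmath.exp(1j * theta)
    return lambda z: w * z

def translation(t):
    """Translation by the vector t, written as a complex number."""
    return lambda z: z + t

def then(f, g):
    """The document's `f; g`: do f first, then g."""
    return lambda z: g(f(z))

def same_map(f, g, points, tol=1e-12):
    """Check that two maps agree (up to rounding) on sample points."""
    return all(abs(f(z) - g(z)) < tol for z in points)

theta = -cmath.pi / 4      # assumed: r rotates clockwise by 45 degrees
t = 2 + 0j                 # translation along the x-axis
r, r_inv = rotation(theta), rotation(-theta)
t_prime = r_inv(t)         # t rotated backwards by r

points = [0, 1, 1j, -2 + 3j, 0.5 - 0.25j]

# r; t equals t'; r: the translation gets twisted, the rotation stays the same.
print(same_map(then(r, translation(t)), then(translation(t_prime), r), points))  # True
# t' points at 45 degrees to the x-axis for this choice of r.
print(round(cmath.phase(t_prime) / cmath.pi * 180))  # 45
# Conjugating a translation by a rotation gives the translation by r(t).
print(same_map(then(then(r_inv, translation(t)), r), translation(r(t)), points))  # True
```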

Exact sequences for semidirect products; fiber bundles

Fiber bundles

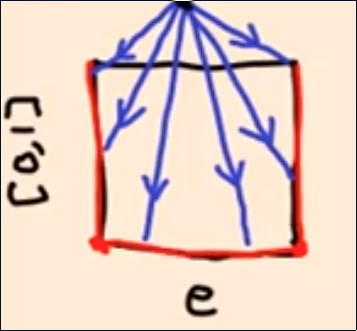

In the case of a bundle, we have a sequence of maps $F \rightarrow E \rightarrow B$ where $F$ is the fiber space (like the tangent space at the identity $T_eM$). $E$ is the total space (the bundle $TM$), and $B$ is the base space (the manifold $M$). We require that the bundle locally splits as a product: every point of $B$ has a neighbourhood $U$ with $\pi^{-1}(U) \simeq U \times F$.

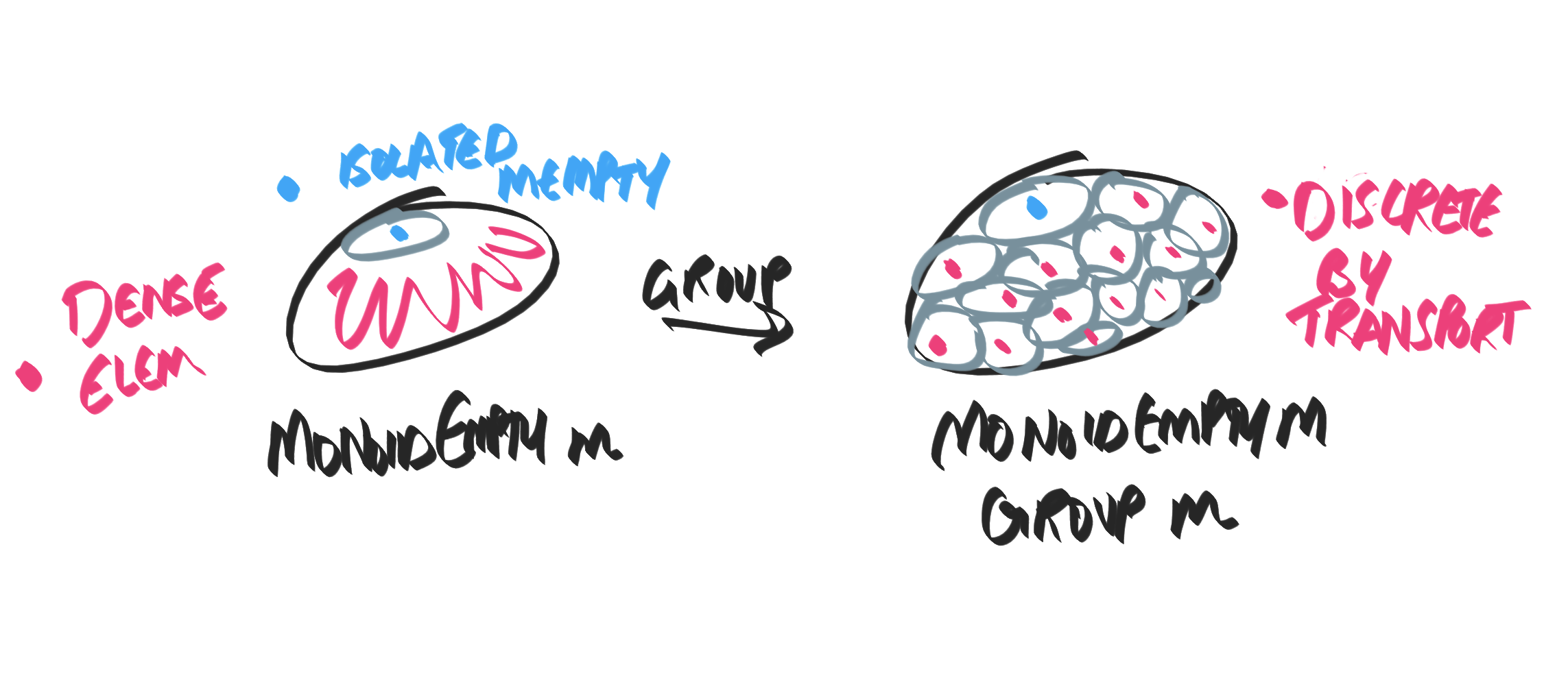

Semidirect products

In a semidirect product $N \ltimes K$, we have that $N$ is normal (because the fish wants to eat the normal subgroup $N$ / the symbol looks like $N \triangleleft G$ which is how we denote normality). Thus, we can only quotient by $N$, leaving us with $K$. This is captured by the SES:

$$ 0 \rightarrow N \rightarrow N \ltimes K \xrightarrow{\pi} K \rightarrow 0 $$

- We imagine this as a bundle, with base space $M=K$, bundle $TM=N \ltimes K$, and fiber space (like, tangent space at the identity, say) $T_e M = N$.

- Furthermore, this exact sequence splits: there is a map $s: K \rightarrow N \ltimes K$ ($s$ for "section/split") such that $\forall k, \pi(s(k)) = k$. To see that this is true, define $s(k) \equiv (e, k)$. Since every $k \in K$ acts on $N$ by an automorphism, it fixes the identity $e \in N$, so $s(k)s(k') = (e, k) (e, k') = (e \cdot e^k, kk') = (e, kk') = s(kk')$, and $s$ is a homomorphism. To see that $\pi$ is a left inverse of $s$, just apply $\pi$: $\pi(s(k)) = \pi(e, k) = k$.
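The external construction and its section can be sketched in Python. This is a minimal example under assumptions that are not in the text: $N = \mathbb Z/5\mathbb Z$ (written additively, identity $0$), $K = \mathbb Z/2\mathbb Z$, with the nontrivial element of $K$ acting on $N$ by negation; `N_MOD`, `act`, `mul`, `pi` and `s` are hypothetical names.

```python
N_MOD = 5  # assumed example: N = Z/5Z, K = Z/2Z

def act(k, n):
    """k in Z/2Z acts on n in Z/5Z by n -> (-1)^k n, an automorphism of N."""
    return (-n) % N_MOD if k % 2 else n % N_MOD

def mul(x, y):
    """(n, k)(n', k') = (n + k.n', k + k') in N ⋉ K, with N written additively."""
    (n1, k1), (n2, k2) = x, y
    return ((n1 + act(k1, n2)) % N_MOD, (k1 + k2) % 2)

def pi(x):
    """Projection N ⋉ K -> K; its kernel {(n, 0)} is a copy of N."""
    return x[1]

def s(k):
    """Section s(k) = (e, k), where e = 0 is the identity of N."""
    return (0, k)

elements = [(n, k) for n in range(N_MOD) for k in range(2)]

section_ok = all(pi(s(k)) == k for k in range(2))
s_is_hom = all(mul(s(a), s(b)) == s((a + b) % 2) for a in range(2) for b in range(2))
pi_is_hom = all(pi(mul(x, y)) == (pi(x) + pi(y)) % 2 for x in elements for y in elements)
associative = all(mul(mul(x, y), z) == mul(x, mul(y, z))
                  for x in elements for y in elements for z in elements)
# The product is genuinely twisted: (1, 0)(0, 1) differs from (0, 1)(1, 0).
print(mul((1, 0), (0, 1)), mul((0, 1), (1, 0)))  # (1, 1) (4, 1)
```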

Relationship to gauges

Let $X$ be the space of all states. Let $O$ be a group action whose orbits identify equivalent states. So the space of "physical states" (classes of states that describe the same physical scenario) is the orbit space $X/O$. Now, the physical space $X/O$ is acted upon by some group $G$. If we want to "undo the quotienting" to have $G$ act on all of $X$, then we need to construct $G \ltimes O$. $G$ is normal here because $O$ already knows how to act on the whole space; $G$ does not, so $O$ needs to "guide" the action of $G$ by acting on it. The data needed to construct $G \ltimes O$ is a connection. Topologically, we have $X \rightarrow X/O$ and $G \curvearrowright X/O$. We want to extend this to $(G \ltimes O) \curvearrowright X$. We imagine this as follows (the pictures write $H$ for the group $O$):

*1| #1 | @1 X

*2| #2 | @2

*3| #3 | @3

| | |

| v |

* | # | @ X/H

where $H$ moves points around within each of the fibers over *, #, @ (each fiber is one $H$-orbit). Next, we have an action of $G$ on $X/H$:

*1| #1 | @1 X

*2| #2 | @2

*3| #3 | @3

| | |

| v |

* | # | @ [X/H] --G--> # | @ | *

We need to lift this action of $G$ to the $H$-orbits. This is precisely the data a connection gives us (why?). I guess the intuition is that the orbits of $X$ are like the tangent spaces, where $X \rightarrow X/O$ is the projection from the bundle into the base space, and $G$ is like a curve that tells us the "next point" we want to travel to from the current point. The connection allows us to "lift" this to the "next tangent vector". That's quite beautiful.

We want the final picture to be:

*1| #1 | @1 X #2| @2|

*2| #2 | @2 --G--> #1| |

*3| #3 | @3 #3| |

| | | | |

| v | | |

* | # | @ [X/H] --G--> # | @ | *

If sequence splits then semidirect product

Consider the exact sequence

$$ 0 \rightarrow N \xrightarrow{\alpha} G \xrightarrow{\pi} K \rightarrow 0 $$

- We want to show that if there exists a homomorphism $s: K \rightarrow G$ such that $\forall k, \pi(s(k)) = k$ (ie, $\pi \circ s = id$), then $G \simeq N \ltimes K$. So the splitting of the exact sequence decomposes $G$ into a semidirect product.

- The idea is that elements of $G$ have an $N$ part and a $K$ part. We can get the $K$ part by first pushing into $K$ using $\pi$ and then pulling back using $s$. So define $k: G \rightarrow G; k(g) \equiv s(\pi(g))$. This gives us the "$K$ part"; it is a homomorphism, being a composite of homomorphisms. To get the $N$ part, multiply by the inverse of the "$K$ part" to annihilate it from $g$. So define a map $n: G \rightarrow G; n(g) \equiv g k(g)^{-1} = g k(g^{-1})$.

- See that the image of $n$ lies entirely in the kernel of $\pi$, or the image of $n$ indeed lies in $N$. This is a check:

$$ \begin{aligned} &\pi(n(g)) \\ &= \pi(g k(g^{-1})) \\ &= \pi(g) \pi(k(g^{-1})) \\ &= \pi(g) \pi(s(\pi(g^{-1}))) \\ &\text{$\pi(s(x)) = x$:} \\ &= \pi(g) \pi(g^{-1}) = e \end{aligned} $$

- Hence, the image of $n$ lies entirely in the kernel of $\pi$. By exactness, the kernel of $\pi$ is $\alpha(N) \simeq N$, so the image of $n$ lies in (a copy of) $N$. So we've managed to decompose an element of $G$ into a $K$ part and an $N$ part: $g = n(g) k(g)$.

- Write $G$ as $N \ltimes K$, by the map $\phi: G \rightarrow N \ltimes K; \phi(g) = (n(g), k(g))$. Let's discover the composition law.

$$ \begin{aligned} &\phi(gh) \overset{?}{=} \phi(g) \phi(h) \\ &(n(gh), k(gh)) \overset{?}{=} (n(g), k(g)) (n(h), k(h)) \\ &(gh\,k((gh)^{-1}), k(gh)) \overset{?}{=} (g\,k(g^{-1}), k(g)) (h\,k(h^{-1}), k(h)) \end{aligned} $$

In the second component we need $k(gh) = k(g) k(h)$, which holds since $k = s \circ \pi$ is a homomorphism, so that component composes in an entirely straightforward fashion. For the other component, we need:

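$$ \begin{aligned} &gh\,k((gh)^{-1}) \overset{?}{=} g\,k(g^{-1}) \cdot h\,k(h^{-1}) \quad \text{(naive product)} \\ &gh\,k((gh)^{-1}) \overset{?}{=} g\,k(g^{-1})\, k(g) \cdot h\,k(h^{-1})\, k(g^{-1}) \quad \text{(conjugate $n(h)$ by $k(g)$)} \\ &gh\,k((gh)^{-1}) \overset{?}{=} g\, [k(g^{-1})\, k(g)] \cdot h\, [k(h^{-1})\, k(g^{-1})] \\ &gh\,k((gh)^{-1}) \overset{?}{=} g \cdot h\, k((gh)^{-1}) \\ &gh\,k((gh)^{-1}) = gh\, k((gh)^{-1}) \end{aligned} $$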

So we need the $n$ of $h$ to be twisted by the $k$ component of $g$ by a conjugation. So we define the semidirect structure as:

$$ \begin{aligned} (n(g), k(g)) \cdot (n(h), k(h)) &\equiv (n(g) k(g) n(h) k(g)^{-1}, k(g) k(h)) \\ &= (n(g) n(h)^{k(g)}, k(g) k(h)) \end{aligned} $$

We've checked that this is compatible with the group structure, so $\phi: G \rightarrow N \ltimes K$ is a homomorphism. We need to check that it's an isomorphism, so we need to make sure that it has full image and trivial kernel.

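- Full image: Let $(n, k) \in N \ltimes K$. Create the element $g = \alpha(n) s(k) \in G$. We get $\pi(g) = \pi(\alpha(n)s(k)) = \pi(\alpha(n)) \pi(s(k)) = e k = k$. We get $k(g) = s(\pi(g)) = s(k)$, and $n(g) = g\,k(g)^{-1} = \alpha(n) s(k) s(k)^{-1} = \alpha(n)$, so $\phi(g)$ is $(n, k)$ (identifying $\alpha(n)$ with $n$ and $s(k)$ with $k$).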
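The splitting argument can be checked on a small example. This minimal Python sketch assumes $G = S_4$, $K = \{\pm 1\}$ with $\pi$ the sign map (so $N = ker(\pi) = A_4$), and a section $s$ sending $-1$ to a fixed transposition; the names `k_part`, `n_part`, `twisted_mul` and `phi` are hypothetical stand-ins for $k$, $n$, the twisted product and $\phi$. It also checks that $k = s \circ \pi$ is idempotent, as in the projection section earlier.

```python
from itertools import permutations

def compose(p, q):
    """Group product p*q of permutations stored as tuples: apply q first, then p."""
    return tuple(p[q[i]] for i in range(len(q)))

def inverse(p):
    """Inverse permutation."""
    inv = [0] * len(p)
    for i, image in enumerate(p):
        inv[image] = i
    return tuple(inv)

def sign(p):
    """pi: G -> K = {+1, -1}, computed by counting inversions."""
    inversions = sum(1 for i in range(len(p)) for j in range(i + 1, len(p)) if p[i] > p[j])
    return -1 if inversions % 2 else 1

G = list(permutations(range(4)))
e = (0, 1, 2, 3)
swap = (1, 0, 2, 3)  # a transposition; swap * swap = e, so s below is a homomorphism

def s(k):
    """Section K -> G with pi(s(k)) = k."""
    return e if k == 1 else swap

def k_part(g):
    """k(g) = s(pi(g))."""
    return s(sign(g))

def n_part(g):
    """n(g) = g k(g)^{-1}, which lands in N = ker(pi)."""
    return compose(g, inverse(k_part(g)))

def twisted_mul(x, y):
    """(n, k)(n', k') = (n k n' k^{-1}, k k'), with k, k' taken inside s(K)."""
    (n1, k1), (n2, k2) = x, y
    return (compose(compose(compose(n1, k1), n2), inverse(k1)), compose(k1, k2))

def phi(g):
    return (n_part(g), k_part(g))

idempotent = all(k_part(k_part(g)) == k_part(g) for g in G)
n_in_kernel = all(sign(n_part(g)) == 1 for g in G)
is_hom = all(phi(compose(g, h)) == twisted_mul(phi(g), phi(h)) for g in G for h in G)
is_injective = len({phi(g) for g in G}) == len(G)
print(idempotent, n_in_kernel, is_hom, is_injective)  # True True True True
```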

Intro to topological quantum field theory

- Once again, watching a video for shits and giggles.

- Geometrically, we cut and paste topological indices / defects.

- QFT in dimensions n+1 (n space, 1 time)

- Manifold: $X^n$. We can associate to it a Hilbert space of states $H_X$.

- Space of wave functions on field space.

- Axioms for the Hilbert spaces: (1) if there is no space, the Hilbert space $H_\emptyset$ for it is the complex numbers. (2) If we reverse the orientation of the space, the Hilbert space becomes the dual, $H_{-X} = H_X^\star$. (3) The Hilbert space of a disjoint union is the tensor product: $H_{X \sqcup Y} = H_X \otimes H_Y$.

- We want arbitrary spacetime topology. We start at space $X$, and we end at a space $Y$. The space $X$ is given positive orientation to mark "beginning" and $Y$ is given negative orientation to mark "end". We will have a time-evolution operator $\Phi: H_X \rightarrow H_Y$.

- We have a composition law of gluing: Going from $X$ to $Y$ and then from $Y$ to $Z$ is the same as going from $X$ to $Z$. $\phi_{N \circ M} = \phi_N \circ \phi_M$.

- If we start and end at empty space, then we get a linear map $\Phi: H_\emptyset \rightarrow H_\emptyset$ which is a linear map $\Phi: \mathbb C \rightarrow \mathbb C$, which is a fancy way to talk about a complex number (scaling)

- If we start with an empty set and end at $Y$, then we get a linear map $\Phi: H_\emptyset \rightarrow H_Y$, that is, $\Phi: \mathbb C \rightarrow H_Y$. But this is the same as picking a state, namely $\Phi(1) \in H_Y$ [everything else is determined by this choice, by linearity].

- If a manifold has two sections $X$ and $-X$, we can glue $X$ to $-X$ to get the trace.

- Quantum mechanics is a 0 + 1 TQFT (!)

- TQFT of 1+1 dimensions.

- Take a circle and assign it a Hilbert space: $S^1 \mapsto H$. Let $H$ be finite dimensional.

- A half-sphere has a circle as boundary. So it's like $H_\emptyset \rightarrow H_{S^1}$. This is the ket $|0\rangle$.

- This is quite a lot like a string diagram...

- Frobenius algebra

- Video: IAS PiTP 2015

Spectral norm of Hermitian matrix equals largest absolute eigenvalue (WIP)

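Define $||A|| \equiv \max \{ ||Ax|| : ||x|| = 1 \}$. Let $A$ be Hermitian. We wish to show that $||A||$ is equal to the largest absolute value of an eigenvalue (for example, $diag(-3, 1)$ has norm $3$, not $1$). The proof idea is to take an orthonormal basis of eigenvectors $v[i]$ with eigenvalues $\lambda[i]$ (which exists since $A$ is Hermitian), let $v^\star$ be an eigenvector whose eigenvalue $\lambda^\star$ has the largest absolute value, and claim that $||Av^\star|| = |\lambda^\star|$ is maximal. Indeed, writing a unit vector as $x = \sum_i c[i] v[i]$, we get $||Ax||^2 = \sum_i |\lambda[i]|^2 |c[i]|^2 \leq |\lambda^\star|^2 \sum_i |c[i]|^2 = |\lambda^\star|^2$.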
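A quick numerical check of the claim, including the absolute value. This minimal Python sketch restricts to real symmetric $2 \times 2$ matrices (an assumption made to keep it dependency-free): it computes the eigenvalues in closed form and approximates $||A||$ by sampling unit vectors. The helper names are hypothetical.

```python
import math

def eigenvalues_sym_2x2(a, b, d):
    """Eigenvalues of the real symmetric (hence Hermitian) matrix [[a, b], [b, d]]."""
    mean = (a + d) / 2
    radius = math.hypot((a - d) / 2, b)
    return (mean - radius, mean + radius)

def operator_norm_sampled(a, b, d, steps=20000):
    """Approximate max ||Ax|| over unit vectors x = (cos s, sin s), s in [0, pi].

    Angles in [0, pi] cover every unit vector up to sign, and ||A(-x)|| = ||Ax||.
    """
    best = 0.0
    for i in range(steps + 1):
        s = math.pi * i / steps
        x, y = math.cos(s), math.sin(s)
        best = max(best, math.hypot(a * x + b * y, b * x + d * y))
    return best

for a, b, d in [(-3, 0, 1), (2, 1, -4), (1, 2, 1)]:
    lams = eigenvalues_sym_2x2(a, b, d)
    # The sampled norm matches max |lambda|, not max lambda (see the first matrix).
    print(lams, max(abs(l) for l in lams), operator_norm_sampled(a, b, d))
```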

Non examples of algebraic varieties

It's always good to have a stock of non-examples.

Line with hole: Algebraic proof

The set of points $V \equiv \{ (t, t) : t \neq 42, t \in \mathbb R \} \subseteq \mathbb R^2$ is not a variety. To prove this, assume it is a variety defined by equations $I(V)$. Let $f(x, y) \in I(V) \subseteq \mathbb R[x, y]$. Since $f$ vanishes on $V$, we must have $f(a, a) = 0$ for all $a \neq 42$ (since $(a, a) \in V$ for all $a \neq 42$). So create a new function $g: \mathbb R \rightarrow \mathbb R^2$, $g(a) \equiv (a, a)$. Now $f \circ g: \mathbb R \rightarrow \mathbb R$, $(f \circ g)(a) = f(a, a)$, is a polynomial in one variable (it is a composition of polynomials), and it vanishes at every $a \neq 42$. It has infinitely many zeroes, and is thus identically zero. So $f(a, a) = 0$ for all $a$. In particular, $f(42, 42) = 0$ for all equations $f$ that define $V$, hence $(42, 42)$ lies in the zero set of $I(V)$. So the zero set of $I(V)$ is strictly larger than $V$, since $(42, 42) \notin V$. Hence $V$ is not a variety.

Line with hole: Analytic proof

The set of points $V \equiv \{ (t, t) : t \neq 42, t \in \mathbb R \} \subseteq \mathbb R^2$ is not a variety. To prove this, assume it is a variety defined by equations $I(V)$. Let $f(x, y) \in I(V) \subseteq \mathbb R[x, y]$. Since $f$ vanishes on $V$, we must have $f(a, a) = 0$ for all $a \neq 42$ (since $(a, a) \in V$ for all $a \neq 42$). Since $f$ is continuous, $f$ preserves limits. Thus, $\lim_{x \to 42} f(x, x) = f(\lim_{x \to 42} (x, x)) = f(42, 42)$. The left hand side is zero (since $f(x, x) = 0$ for every $x \neq 42$), hence the right hand side must be zero. Thus, $f(42, 42) = 0$ for every $f \in I(V)$, so $(42, 42)$ lies in the zero set of $I(V)$. But this can't be, because $(42, 42) \notin V$.

$\mathbb Z$

The set $\mathbb Z \subseteq \mathbb R$ is not an algebraic variety. Suppose it is, and is the zero set of a collection of polynomials $\{ f_i \}$. Then each $f_i$ must vanish on at least all of $\mathbb Z$, and maybe more. This means that $f_i(z) = 0$ for all $z \in \mathbb Z$. But a polynomial of degree $n$ can have at most $n$ roots, unless it is the zero polynomial. Since $f_i$ has infinitely many roots, $f_i = 0$. Thus, all the polynomials are identically zero, and so their zero set is not $\mathbb Z$; it is all of $\mathbb R$.

The general story

In general, we are using the combinatorial fact that a nonzero polynomial of degree $n$ has at most $n$ roots. In some cases, we could have used analytic facts about continuity of polynomials, but it suffices to simply use combinatorial data, which I find interesting.

Nilradical is intersection of all prime ideals

Nilradical is contained in intersection of all prime ideals

Let $x \in \sqrt 0$. We must show that it is contained in all prime ideals. Since $x$ is in the nilradical, $x$ is nilpotent, hence $x^n = 0$ for some $n$. Let $p$ be an arbitrary prime ideal. Since $0 \in p$, we have $x^n = 0 \in p$. This means that $x^n = x \cdot x^{n-1} \in p$, and hence, since $p$ is prime, $x \in p \lor x^{n-1} \in p$. If $x \in p$ we are done. If $x^{n-1} \in p$, recurse to get $x \in p$ eventually.

Proof 1: Intersection of all prime ideals is contained in the Nilradical

Let $f$ be in the intersection of all prime ideals. We wish to show that $f$ is contained in the nilradical (that is, $f$ is nilpotent). We know that $R_f$ ($R$ localized at $f$) collapses to the zero ring iff $f$ is nilpotent. So we wish to show that the sequence:

$$ \begin{aligned} 0 \rightarrow R_f \rightarrow 0 \end{aligned} $$

is exact. But exactness is a local property, so it suffices to check it after localizing at each maximal ideal $m$, that is, to check $(R_f)_m$. Since $(R_f)_m = (R_m)_f$ (localizations commute), let's reason about $(R_m)_f$. We know that $R_m$ is a local ring, since $m$ is prime (it is maximal), and its prime ideals are the $pR_m$ for the primes $p \subseteq m$. Since $f$ lies in every prime ideal of $R$ (it lives in their intersection), $f$ lies in every prime ideal of $R_m$. Localizing at $f$ blows up every prime ideal that contains $f$, which here is all of them, so $(R_m)_f$ has no prime ideals, and hence no maximal ideals. A ring with no maximal ideals is the zero ring. Thus, for each maximal ideal $m$, we have that:

$$ \begin{aligned} 0 \rightarrow (R_f)_m \rightarrow 0 \end{aligned} $$

is exact. Thus, $0 \rightarrow R_f \rightarrow 0$ is exact. Hence, $f$ is nilpotent, or $f$ belongs to the nilradical.

Proof 2: Intersection of all prime ideals is contained in the Nilradical

- Quotient the ring $R$ by the nilradical $N$.

- The statement in $R/N$ becomes "in a ring with no nilpotents, the intersection of all primes is zero".

- This means that every nonzero element lies outside some prime ideal. Pick some arbitrary element $f \neq 0 \in R/N$. We know $f$ is not nilpotent, so we naturally consider $S_f \equiv \{ f^i : i \in \mathbb N \}$.

- The only thing one can do with a multiplicative subset like that is to localize. So we localize the ring $R/N$ at $S_f$.

- Suppose, for contradiction, that all prime ideals contain $f$. Then localizing at $f$ destroys all prime ideals, and in particular blows up all maximal ideals, thereby collapsing the ring into the zero ring (the localized ring has no maximal ideals, so it is the zero ring).

- Since $S_f^{-1} (R/N) = 0$, the lemma below gives $0 \in S_f$. So some $f^i = 0$. This contradicts the fact that no nonzero element of $R/N$ is nilpotent. Thus we are done.
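Both inclusions can be checked by brute force in the rings $\mathbb Z/n\mathbb Z$. This minimal Python sketch is an illustration under that assumption, not a proof: it uses the standard fact that the prime ideals of $\mathbb Z/n\mathbb Z$ are the $p\mathbb Z/n\mathbb Z$ for primes $p \mid n$, and the helper names are hypothetical.

```python
def prime_divisors(n):
    """Primes dividing n, by trial division."""
    primes, p = [], 2
    while p * p <= n:
        if n % p == 0:
            primes.append(p)
            while n % p == 0:
                n //= p
        p += 1
    if n > 1:
        primes.append(n)
    return primes

def nilradical(n):
    """Nilpotent elements of Z/nZ.

    If x is nilpotent, every prime p | n divides x, so x^e = 0 where e is the
    largest prime exponent in n; since e <= n, testing x^n = 0 (mod n) suffices.
    """
    return {x for x in range(n) if pow(x, n, n) == 0}

def intersection_of_primes(n):
    """Intersection of the prime ideals pZ/nZ of Z/nZ, for the primes p | n."""
    ps = prime_divisors(n)
    return {x for x in range(n) if all(x % p == 0 for p in ps)}

print(sorted(nilradical(12)), sorted(intersection_of_primes(12)))  # [0, 6] [0, 6]
```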

Lemma: $S$ contains zero iff $S^{-1} R = 0$

- (Forward): Let $S$ contain zero. Then we must show that $S^{-1} R = 0$. Consider some element $x/s \in S^{-1} R$. We claim that $x/s = 0/1$. To show this, we need to show that there exists an $s' \in S$ such that $s'(x \cdot 1 - 0 \cdot s) = 0$. Choose $s' = 0$ and we are done. Thus every element in $S^{-1}R$ is zero if $S$ contains zero.

- (Backward): Let $S^{-1} R = 0$. We need to show that $S$ contains zero. Consider $1/1 \in S^{-1} R$. We have that $1/1 = 0/1$. This means that there is an $s' \in S$ such that $s'(1 \cdot 1 - 0 \cdot 1) = 0$. That is, $s' \cdot 1 = 0$, or $s' = 0$. Thus, the element $s'$ must be zero for $1$ to be equal to zero. Hence, for the ring to collapse, we must have $0 = s' \in S$. So, if $S^{-1}R = 0$, then $S$ contains zero.

Exactness of modules is local

We wish to show that for some ring $R$ and modules $K, L, M$ a sequence $K \rightarrow L \rightarrow M$ is exact iff $K_m \rightarrow L_m \rightarrow M_m$ is exact for every maximal ideal $m \subset R$. This tells us that exactness is local.

Quotient by maximal ideal gives a field

Quick proof

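Use the correspondence theorem: ideals of $R/m$ correspond to ideals of $R$ containing $m$, and by maximality these are only $m$ and $R$. So $R/m$ has only two ideals, the zero ideal and the whole ring, and a (nonzero) commutative ring with only these two ideals is a field.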

Element based proof

Let $x + m \neq 0$ be an element in $R/m$. Since $x + m \neq 0$, we have $x \notin m$. Consider the ideal $(x) + m$. It strictly contains $m$, so by maximality of $m$, $(x) + m = R$. Hence there exist elements $a \in R$ and $b \in m$ such that $xa + b = 1$. Modulo $m$, this reads $xa \equiv 1 \pmod m$. Thus $a + m$ is an inverse to $x + m$, hence every nonzero element is invertible.
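In $R = \mathbb Z$ with $m = p\mathbb Z$ for a prime $p$, the element-based proof is exactly the extended Euclidean algorithm: it produces $a, b$ with $xa + pb = 1$. A minimal Python sketch under that assumption (the function names are hypothetical):

```python
def extended_gcd(a, b):
    """Return (g, u, v) with a*u + b*v == g == gcd(a, b), for a, b >= 0."""
    if b == 0:
        return (a, 1, 0)
    g, u, v = extended_gcd(b, a % b)
    # b*u + (a % b)*v == g and a % b == a - (a // b)*b
    return (g, v, u - (a // b) * v)

def inverse_mod(x, p):
    """Inverse of x + pZ in Z/pZ, found from x*a + p*b = 1 as in the proof."""
    g, a, b = extended_gcd(x % p, p)
    if g != 1:
        raise ValueError("x + pZ is not invertible")
    return a % p

print(inverse_mod(3, 7))  # 5, since 3 * 5 = 15 = 2 * 7 + 1
```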

Ring of power series with infinite positive and negative terms

If we allow a ring with elements $x^i$ for all $-\infty < i < \infty$, then for notation's sake, let's call it $R[[[x]]]$. Unfortunately, this is a badly behaved ring. Define $S \equiv \sum_{i = -\infty}^\infty x^i$. See that $xS = S$, since multiplying by $x$ shifts powers by 1, and since we are summing over all of $\mathbb Z$, the shift $i \mapsto i + 1$ is a bijection. Rearranging gives $(x - 1)S = 0$. If we want our ring to be an integral domain, we are forced to accept that $S = 0$. In the Barvinok theory of polyhedral point counting, we accept that $S = 0$ and exploit this in our theory.

Mean value theorem and Taylor's theorem. (TODO)

I realise that there are many theorems that I learnt during my preparation for JEE that I simply don't know how to prove. This is one of them. Here I exhibit the proof of Taylor's theorem from Tu's An Introduction to Manifolds.

Taylor's theorem: Let $f: \mathbb R \rightarrow \mathbb R$ be a smooth function, and let $n \in \mathbb N$ be an "approximation cutoff". Then for all $x_0 \in \mathbb R$ there exists a smooth function $r \in C^{\infty}(\mathbb R)$ such that: $$ f(x) = f(x_0) + (x - x_0) \frac{f'(x_0)}{1!} + (x - x_0)^2 \frac{f''(x_0)}{2!} + \dots + (x - x_0)^n \frac{f^{(n)}(x_0)}{n!} + (x - x_0)^{n+1} r(x) $$

We prove this by induction on $n$. For $n = 0$, we need to show that there exists a smooth $r$ such that $f(x) = f(x_0) + (x - x_0) r(x)$. We begin by parametrising the path from $x_0$ to $x$ as $p(t) \equiv (1 - t) x_0 + tx$. Then we consider $(f \circ p)'$:

$$ \begin{aligned} &\frac{d f(p(t))}{dt} = \frac{d}{dt} f((1 - t) x_0 + tx) \\ &= (x - x_0) f'((1 - t)x_0 + tx) \end{aligned} $$

Integrating on both sides with limits $t=0, t=1$ yields:

$$ \begin{aligned} &\int_0^1 \frac{d f(p(t))}{dt} dt = \int_0^1 (x - x_0) f'((1 - t)x_0 + tx)\, dt \\ &f(p(1)) - f(p(0)) = (x - x_0) \int_0^1 f'((1 - t)x_0 + tx)\, dt \\ &f(x) - f(x_0) = (x - x_0) g_1(x) \end{aligned} $$

where we define $g_1(x) \equiv \int_0^1 f'((1 - t)x_0 + tx)\, dt$; the subscript $1$ witnesses that we have the first derivative of $f$ in its expression. Since we can differentiate under the integral sign, $g_1$ is smooth. By rearranging, we get:

$$ \begin{aligned} &f(x) - f(x_0) = (x - x_0) g_1(x) \\ &f(x) = f(x_0) + (x - x_0) g_1(x) \end{aligned} $$

which is the case $n = 0$ with $r = g_1$.

If we want higher derivatives, then we simply notice that $g_1$ is itself a smooth function of the form:

$$ g_1(x) = \int_0^1 f'((1 - t)x_0 + tx)\, dt $$

so we can apply the induction hypothesis to $g_1$. Differentiating under the integral sign gives $g_1^{(k)}(x_0) = \int_0^1 t^k f^{(k+1)}(x_0)\, dt = f^{(k+1)}(x_0)/(k+1)$, which turns the coefficients $g_1^{(k)}(x_0)/k!$ into $f^{(k+1)}(x_0)/(k+1)!$ once we multiply through by $(x - x_0)$.
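The $n = 0$ step, $f(x) = f(x_0) + (x - x_0) g_1(x)$, can be checked numerically. This minimal Python sketch approximates the integral defining $g_1$ with composite Simpson's rule (a choice of quadrature made here, not part of the proof) and tests it on $f = \sin$ and $f = \exp$; the helper names are hypothetical.

```python
import math

def simpson(func, lo, hi, intervals=1000):
    """Composite Simpson's rule on [lo, hi]; `intervals` must be even."""
    h = (hi - lo) / intervals
    total = func(lo) + func(hi)
    for i in range(1, intervals):
        total += (4 if i % 2 else 2) * func(lo + i * h)
    return total * h / 3

def g1(f_prime, x0, x):
    """g_1(x) = integral over t in [0, 1] of f'((1 - t) x0 + t x)."""
    return simpson(lambda t: f_prime((1 - t) * x0 + t * x), 0.0, 1.0)

x0, x = 0.3, 1.7
for f, f_prime in [(math.sin, math.cos), (math.exp, math.exp)]:
    reconstructed = f(x0) + (x - x0) * g1(f_prime, x0, x)
    # f(x) is recovered, and g_1(x0) equals f'(x0), as used in the induction step.
    print(abs(f(x) - reconstructed) < 1e-10, abs(g1(f_prime, x0, x0) - f_prime(x0)) < 1e-12)
```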

Cayley Hamilton

I find the theorem spectacular, because while naively the vector space $M_n(F)$ has dimension $n^2$, Cayley-Hamilton tells us that the $n + 1$ powers $M^0, M^1, \dots, M^n$ are already enough to get linear dependence. However, I've never known a proof of Cayley-Hamilton that sticks with me; I think I've found one now.

For any matrix $A$, the adjugate has the property:

$$ A adj(A) = det(A) I $$

Using this, consider the matrix $P_A \equiv xI - A$, which lives in $End(V)[x]$, and its corresponding determinant $p_A(x) \equiv det(P_A) = det(xI - A)$.

We have that

$$ \begin{aligned} &P_A\, adj(P_A) = det(P_A) I \\ &(xI - A)\, adj(xI - A) = det(xI - A) I = p_A(x) I \end{aligned} $$

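If we view this as an equation in $End(V)[x]$, it says that $p_A(x) I$ has a factor $xI - A$. This means that $x = A$ is a zero of $p_A(x)$. Some care is needed, since $End(V)[x]$ is not commutative: write $adj(xI - A) = \sum_i B_i x^i$ with $B_i \in End(V)$, and substitute $x = A$ with powers of $A$ placed to the left of each coefficient. The left side becomes $\sum_i (A^{i+1} B_i - A^i A B_i) = 0$, while the right side becomes $p_A(A)$. Thus $A$ satisfies $p_A(x)$, hence $A$ satisfies its own characteristic polynomial!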

The key is of course the adjugate matrix equation that relates the adjugate matrix to the determinant of $A$.
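Here is a minimal Python check of Cayley-Hamilton for $3 \times 3$ integer matrices. It assumes the standard expansion $det(xI - A) = x^3 - tr(A) x^2 + c_1 x - det(A)$, where $c_1$ is the sum of the principal $2 \times 2$ minors (the trace of $adj(A)$), and evaluates $p_A(A)$ with Horner's rule; the helper names are hypothetical.

```python
def det2(a, b, c, d):
    """Determinant of [[a, b], [c, d]]."""
    return a * d - b * c

def char_poly_3x3(A):
    """Coefficients [1, -tr(A), c1, -det(A)] of p_A(x) = det(xI - A), highest degree first."""
    tr = A[0][0] + A[1][1] + A[2][2]
    # c1: sum of the principal 2x2 minors (equivalently, the trace of adj(A)).
    c1 = (det2(A[1][1], A[1][2], A[2][1], A[2][2])
          + det2(A[0][0], A[0][2], A[2][0], A[2][2])
          + det2(A[0][0], A[0][1], A[1][0], A[1][1]))
    det = (A[0][0] * det2(A[1][1], A[1][2], A[2][1], A[2][2])
           - A[0][1] * det2(A[1][0], A[1][2], A[2][0], A[2][2])
           + A[0][2] * det2(A[1][0], A[1][1], A[2][0], A[2][1]))
    return [1, -tr, c1, -det]

def matmul(X, Y):
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def evaluate_at_matrix(coeffs, A):
    """Horner's rule: p(A) = (((c0 A + c1 I) A + c2 I) A + ...), with I the identity."""
    n = len(A)
    result = [[0] * n for _ in range(n)]
    for c in coeffs:
        result = matmul(result, A)
        for i in range(n):
            result[i][i] += c
    return result

A = [[2, 1, 0], [0, 3, 4], [5, -1, 1]]
print(char_poly_3x3(A))                         # [1, -6, 15, -34]
print(evaluate_at_matrix(char_poly_3x3(A), A))  # [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
```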

Adjugate matrix equation

- Let $A'(i, j)$ be the matrix $A$ with the $i$th row and $j$th column removed.

- Let $C[i, j] \equiv (-1)^{i+j} det(A'(i, j))$ be the $(i, j)$ cofactor of $A$, the signed determinant of the $A'(i, j)$ matrix.

- Define $adj(A) \equiv C^T$ to be the transpose of the cofactor matrix. That is, $adj(A)[i, j] = C[j, i] = (-1)^{i+j} det(A'(j, i))$.

- Call $D \equiv A\, adj(A)$. We will now compute the entries of $D$ when $i = j$ and when $i \neq j$. We call it $D$ for diagonal, since we will show that $D$ is a diagonal matrix with entry $det(A)$ on the diagonal.

- First, compute $D[i, i]$. I use the Einstein summation convention, where repeated indices are implicitly summed over:

$$ \begin{aligned} D[i, i] &= (A\, adj(A))[i, i] \\ &= A[i, k]\, adj(A)[k, i] \\ &= A[i, k] (-1)^{i+k} det(A'(i, k)) \\ &= det(A) \end{aligned} $$

The expression $A[i, k] (-1)^{i+k} det(A'(i, k))$, summed over $k$, is the Laplace expansion of $det(A)$ along row $i$.

Next, let's compute $D[i, j]$ when $i \neq j$:

$$ \begin{aligned} D[i, j] &= (A\, adj(A))[i, j] \\ &= A[i, k]\, adj(A)[k, j] \\ &= A[i, k] (-1)^{j+k} det(A'(j, k)) \\ &= det(Z) \end{aligned} $$

This is the determinant of a new matrix $Z$ (for zero), obtained from $A$ by replacing its $j$th row with its $i$th row. More explicitly:

$$ Z[l, :] \equiv \begin{cases} A[l, :] & l \neq j \\ A[i, :] & l = j \end{cases} $$

Since $Z$ differs from $A$ only in the $j$th row, we have $Z'(j, k) = A'(j, k)$ for every $k$, because deleting row $j$ removes the only row where they differ.

If we compute $det(Z)$ by expanding along the $j$th row, we get:

$$ \begin{aligned} det(Z) &= (-1)^{j+k} Z[j, k]\, det(Z'(j, k)) \\ &= (-1)^{j+k} A[i, k]\, det(Z'(j, k)) \\ &= (-1)^{j+k} A[i, k]\, det(A'(j, k)) \\ &= D[i, j] \end{aligned} $$

But $Z$ has a repeated row: $Z[j, :] = Z[i, :] = A[i, :]$ with $i \neq j$. Hence $det(Z) = 0$, so $D[i, j] = 0$ when $i \neq j$.

Hence, this means that $A adj(A) = det(A) I$.

- We can rapidly derive other properties from this equation. For example, $det(A\, adj(A)) = det(det(A) I) = det(A)^n$. Hence $det(A) det(adj(A)) = det(A)^n$. When $det(A) \neq 0$, dividing by $det(A)$ gives $det(adj(A)) = det(A)^{n-1}$.

- Also, if $det(A) \neq 0$, dividing $A\, adj(A) = det(A) I$ by $det(A)$ gives $A (adj(A)/det(A)) = I$, or $adj(A)/det(A) = A^{-1}$.
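The cofactor definitions translate directly into a small sketch. It builds $adj(A)$ from $A'(j, i)$ exactly as defined above, then checks $A\, adj(A) = det(A) I$, $det(adj(A)) = det(A)^{n-1}$ and $A^{-1} = adj(A)/det(A)$ on an example matrix. The helper names and the recursive determinant (first-row Laplace expansion) are assumptions made for illustration.

```python
from fractions import Fraction

def minor(A, i, j):
    """A'(i, j): the matrix A with row i and column j removed."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]

def det(A):
    """Laplace expansion along the first row (fine for small matrices)."""
    if not A:
        return 1
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))

def adj(A):
    """adj(A)[i][j] = C[j][i] = (-1)^(i+j) det(A'(j, i))."""
    n = len(A)
    return [[(-1) ** (i + j) * det(minor(A, j, i)) for j in range(n)] for i in range(n)]

def matmul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

A = [[2, 1, 0], [0, 3, 4], [5, 0, 1]]
print(adj(A))                        # [[3, -1, 4], [20, 2, -8], [-15, 5, 6]]
print(matmul(A, adj(A)))             # det(A) I, with det(A) = 26
print(det(adj(A)) == det(A) ** 2)    # det(adj(A)) = det(A)^(n-1) for n = 3
inverse = [[Fraction(v, det(A)) for v in row] for row in adj(A)]
print(matmul(A, inverse))            # the identity matrix, as Fractions
```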

Determinant in terms of exterior algebra

For a vector space $V$ of dimension $n$ and a linear map $T: V \rightarrow V$, define a map $\Lambda T: \Lambda^n V \rightarrow \Lambda^n V$ such that $\Lambda T(v_1 \wedge v_2 \wedge \dots \wedge v_n) \equiv T v_1 \wedge T v_2 \wedge \dots \wedge T v_n$. Since the space $\Lambda^n V$ is one-dimensional, $\Lambda T$ is determined by a single scalar $k$: $\Lambda T(v_1 \wedge \dots \wedge v_n) = k\, v_1 \wedge \dots \wedge v_n$. It is either a theorem or a definition (depending on how one starts this process) that $k = det(T)$.

If we choose this as a definition, then let's try to compute the value. Pick a basis $v[i]$. Let $w[i] \equiv T v[i]$ (to write $T$ as a matrix), and define the matrix of $T$ by the equation $w[i] = T[i][j] v[j]$. If we now evaluate $\Lambda T$, we get:

$$ \begin{aligned} & \Lambda T(v[1] \wedge \dots \wedge v[n]) \\ &= T(v[1]) \wedge T(v[2]) \wedge \dots \wedge T(v[n]) \\ &= w[1] \wedge w[2] \wedge \dots \wedge w[n] \\ &= (T[1][j_1] v[j_1]) \wedge (T[2][j_2] v[j_2]) \wedge \dots \wedge (T[n][j_n] v[j_n]) \\ &= \Big(\sum_{\sigma \in S_n} sgn(\sigma) \prod_i T[i][\sigma(i)]\Big)\, v[1] \wedge v[2] \wedge \dots \wedge v[n] \end{aligned} $$

Where the last equality is because:

- (1) A wedge containing a repeated basis vector vanishes, since $v \wedge v = 0$. So in every surviving term the indices $j_1, j_2, \dots, j_n$ must be distinct.

- Consider a wedge of the form $T[1][j_1] v[j_1] \wedge T[2][j_2] v[j_2] \wedge \dots \wedge T[n][j_n] v[j_n]$, where all the $j_i$ are distinct (see (1)). It can be rearranged by the permutation that sends $v[j_i] \mapsto v[i]$. Formally, apply the permutation $\tau(j_i) \equiv i$. This reorganizes the wedge into $sgn(\tau)\, T[1][j_1] T[2][j_2] \dots T[n][j_n]\, v[1] \wedge v[2] \wedge \dots \wedge v[n]$, where the sign is picked up by the rearrangement.

- Now, write the indexing into $T[i][j_i]$ in terms of a permutation $\sigma(i) \equiv j_i$. The term becomes $sgn(\tau) \prod_i T[i][\sigma(i)]\, v[1] \wedge v[2] \wedge \dots \wedge v[n]$.

- We have two permutations $\sigma, \tau$ in the formula. But $\sigma = \tau^{-1}$, and hence $sgn(\sigma) = sgn(\tau)$. So the term is $sgn(\sigma) \prod_i T[i][\sigma(i)]\, v[1] \wedge v[2] \wedge \dots \wedge v[n]$. Summing over all $\sigma \in S_n$ gives the last line.

- Thus, we have recovered the "classical determinant formula".
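The formula we just recovered (usually called the Leibniz formula) can be transcribed directly. In this sketch, each permutation $\sigma$ indexes one surviving wedge term, and $sgn(\sigma)$ is computed by counting inversions. It runs in exponential time in $n$ and is only meant to mirror the derivation. The function names are illustrative.

```python
from itertools import permutations
from math import prod

def sgn(perm):
    """Sign of a permutation of 0..n-1: (-1) ** (number of inversions)."""
    n = len(perm)
    inversions = sum(1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j])
    return -1 if inversions % 2 else 1

def det_leibniz(T):
    """det(T) = sum over sigma in S_n of sgn(sigma) * prod_i T[i][sigma(i)]."""
    n = len(T)
    return sum(sgn(s) * prod(T[i][s[i]] for i in range(n)) for s in permutations(range(n)))

print(det_leibniz([[1, 2], [3, 4]]))                   # 1*4 - 2*3 = -2
print(det_leibniz([[2, 1, 0], [0, 3, 4], [5, 0, 1]]))  # 26
```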

Laplace expansion of determinant

From the above algebraic encoding of the determinant of $T[i][j]$ as $\sum_{\sigma \in S_n} sgn(\sigma) \prod_i T[i][\sigma(i)]$, we can recover the Laplace expansion rule. It asks us to pick a row $r$ and then compute the expression:

$$ L_r(T) \equiv \sum_c T[r, c] (-1)^{r+c} det(T'(r, c)) $$

Where $T'(r, c)$ is the matrix $T$ with row $r$ and column $c$ deleted. I'll derive this concretely using the determinant definition for the 3 by 3 case. The general case follows immediately. I prefer being explicit in a small case as it lets me see what's going on.

Let's pick a basis for $V$, called $b[1], b[2], b[3]$, and set $v[i] \equiv T b[i]$. We want to find the scalar $k$ with $v[1] \wedge v[2] \wedge v[3] = k\, b[1] \wedge b[2] \wedge b[3]$. First grab a basis expansion of $v[i]$ as $v[i] = c[i][j] b[j]$. These uniquely define the coefficients $c[i][j]$. Next, expand the wedge:

$$ \begin{aligned} & v[1] \wedge v[2] \wedge v[3] \\ &= (c[1][1]b[1] + c[1][2]b[2] + c[1][3]b[3]) \wedge (c[2][1]b[1] + c[2][2]b[2] + c[2][3]b[3]) \wedge (c[3][1]b[1] + c[3][2]b[2] + c[3][3]b[3]) \end{aligned} $$

I now expand out only the first factor, leaving terms of the form $c[1][1]b[1] \wedge (\cdot) + c[1][2]b[2] \wedge (\cdot) + c[1][3]b[3] \wedge (\cdot)$. This corresponds to "expanding along and deleting the row" in a Laplace expansion when finding the determinant. Let's identify the $(\cdot)$ and see what remains:

$$ \begin{aligned} & v[1] \wedge v[2] \wedge v[3] \\ &= c[1][1]b[1] \wedge (c[2][1]b[1] + c[2][2]b[2] + c[2][3]b[3]) \wedge (c[3][1]b[1] + c[3][2]b[2] + c[3][3]b[3]) \\ &+ c[1][2]b[2] \wedge (c[2][1]b[1] + c[2][2]b[2] + c[2][3]b[3]) \wedge (c[3][1]b[1] + c[3][2]b[2] + c[3][3]b[3]) \\ &+ c[1][3]b[3] \wedge (c[2][1]b[1] + c[2][2]b[2] + c[2][3]b[3]) \wedge (c[3][1]b[1] + c[3][2]b[2] + c[3][3]b[3]) \end{aligned} $$

Now, for example, in the first term $c[1][1]b[1] \wedge (\cdot)$, we lose anything inside that contains a $b[1]$, as the wedge will give us $b[1] \wedge b[1] = 0$ (this corresponds to "deleting the column" when considering the submatrix). Similar considerations have us remove all terms that contain $b[2]$ in the brackets of $c[1][2]b[2]$, and terms that contain $b[3]$ in the brackets of $c[1][3]b[3]$. This leaves us with:

$$ \begin{aligned} & v[1] \wedge v[2] \wedge v[3] \\ &= c[1][1]b[1] \wedge (c[2][2]b[2] + c[2][3]b[3]) \wedge (c[3][2]b[2] + c[3][3]b[3]) \\ &+ c[1][2]b[2] \wedge (c[2][1]b[1] + c[2][3]b[3]) \wedge (c[3][1]b[1] + c[3][3]b[3]) \\ &+ c[1][3]b[3] \wedge (c[2][1]b[1] + c[2][2]b[2]) \wedge (c[3][1]b[1] + c[3][2]b[2]) \end{aligned} $$

We are now left with calculating terms like $(c[2][2]b[2] + c[2][3]b[3]) \wedge (c[3][2]b[2] + c[3][3]b[3])$ which we can solve by recursion (that is the determinant of the 2x2 submatrix). So if we now write the "by recursion" terms down, we will get something like:

$$ \begin{aligned} & v[1] \wedge v[2] \wedge v[3] \\ &= c[1][1]b[1] \wedge (k[1]\, b[2] \wedge b[3]) \\ &+ c[1][2]b[2] \wedge (k[2]\, b[1] \wedge b[3]) \\ &+ c[1][3]b[3] \wedge (k[3]\, b[1] \wedge b[2]) \end{aligned} $$

Where the $k[i]$ are the values produced by the recursion, and we assume that the recursion will give us the coefficients of the wedges "in order": so we always have $b[2] \wedge b[3]$ for example, not $b[3] \wedge b[2]$. So, we need to ensure that the final answer we spit out corresponds to $b[1] \wedge b[2] \wedge b[3]$. If we simplify the current step we are at, we will get:

$$ \begin{aligned} & v[1] \wedge v[2] \wedge v[3] \\ &= k[1] c[1][1]\, b[1] \wedge b[2] \wedge b[3] \\ &+ k[2] c[1][2]\, b[2] \wedge b[1] \wedge b[3] \\ &+ k[3] c[1][3]\, b[3] \wedge b[1] \wedge b[2] \end{aligned} $$

We need to rearrange each term into $b[1] \wedge b[2] \wedge b[3]$ times some constant. Putting $b[2] \wedge b[1] \wedge b[3]$ into standard form takes one swap, giving a sign of $-1$. Putting $b[3] \wedge b[1] \wedge b[2]$ into standard form takes two swaps, giving a sign of $+1$. So we pick up the sign factors:

$$ \begin{aligned} & v[1] \wedge v[2] \wedge v[3] \\ &= k[1] c[1][1]\, b[1] \wedge b[2] \wedge b[3] - k[2] c[1][2]\, b[1] \wedge b[2] \wedge b[3] + k[3] c[1][3]\, b[1] \wedge b[2] \wedge b[3] \\ &= (c[1][1]k[1] - c[1][2]k[2] + c[1][3]k[3])\, (b[1] \wedge b[2] \wedge b[3]) \end{aligned} $$

We clearly see that for each $c[1][i]$, the factor is $(-1)^{1+i} k[i]$. Here $k[i]$ is the determinant of the sub-expression obtained by deleting the vector $b[i]$ (ignoring column $i$) and ignoring the entire first row (the coefficients $c[1][\cdot]$, which we have already used up). This is the Laplace expansion of the coefficient matrix $c$ along its first row, so this proves the Laplace expansion by exterior algebra.
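As a sketch, the recursion can be checked against the permutation formula. `laplace(T, r)` computes $L_r(T)$ and recurses on the minors $T'(r, c)$. `leibniz` is the sum over $S_n$, included so the block is self-contained. Both names and the random test matrices are illustrative assumptions.

```python
import random
from itertools import permutations
from math import prod

def delete(T, r, c):
    """T'(r, c): T with row r and column c removed."""
    return [row[:c] + row[c + 1:] for i, row in enumerate(T) if i != r]

def laplace(T, r=0):
    """L_r(T) = sum_c T[r][c] (-1)^(r + c) det(T'(r, c)), recursing on the minors."""
    if not T:
        return 1
    return sum(T[r][c] * (-1) ** (r + c) * laplace(delete(T, r, c)) for c in range(len(T)))

def leibniz(T):
    """Reference value: sum over permutations of sgn(sigma) * prod_i T[i][sigma(i)]."""
    n = len(T)
    def sgn(p):
        return (-1) ** sum(p[i] > p[j] for i in range(n) for j in range(i + 1, n))
    return sum(sgn(p) * prod(T[i][p[i]] for i in range(n)) for p in permutations(range(n)))

rng = random.Random(1)
T = [[rng.randint(-5, 5) for _ in range(4)] for _ in range(4)]
print([laplace(T, r) for r in range(4)], leibniz(T))  # expanding along any row agrees
```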

Deriving Cayley Hamilton for rings from $\mathbb Z$

I'll sketch how to prove Cayley-Hamilton for an arbitrary commutative ring $R$, given that we know it for $\mathbb Z$. I describe it for 2x2 matrices; the general version follows the same pattern. Pick a matrix of variables and write down its characteristic polynomial. So if:

$$ M = \begin{bmatrix} a & b \\ c & d \end{bmatrix} $$

then the characteristic polynomial is:

$$ ch = |M - xI| = \begin{vmatrix} a - x & b \\ c & d - x \end{vmatrix} $$

that's $ch(a, b, c, d, x) \equiv (a-x)(d-x) - bc = x^2 - x(a + d) + ad - bc$. This equation has $a, b, c, d, x \in R$ for some commutative ring $R$. Now, we know that if we set $x = M$, this equation will be satisfied. But what does it mean to set $x = M$? Well, we need to let $x$ be an arbitrary matrix:

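$$ x = \begin{bmatrix} p & q \\ r & s \end{bmatrix} $$

And thus we compute $x^2$ to be:

$$ x^2 = \begin{bmatrix} p & q \\ r & s \end{bmatrix} \begin{bmatrix} p & q \\ r & s \end{bmatrix} = \begin{bmatrix} p^2 + qr & pq + qs \\ rp + sr & rq + s^2 \end{bmatrix} $$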

So now expanding out $ch(a, b, c, d, x)$ in terms of $x$ on substituting for $x$ the matrix we get the system:

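$$ \begin{bmatrix} p^2 + qr & pq + qs \\ rp + sr & rq + s^2 \end{bmatrix} - (a + d) \begin{bmatrix} p & q \\ r & s \end{bmatrix} + (ad - bc) \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} = \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix} $$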

We know that these equations hold when $x = M$, because the Cayley-Hamilton theorem tells us that $ch(M) = 0$! So substituting $p = a$, $q = b$, $r = c$, $s = d$, we get a system of four equations, one per matrix entry. Each equation is a polynomial in the four indeterminates $a, b, c, d$ with integer coefficients. We know that these polynomials vanish at every point of $\mathbb Z^4$. But a polynomial in $\mathbb Z[a, b, c, d]$ that vanishes on all of $\mathbb Z^4$ must be identically zero. Thus each entry of $ch(M)$ is the zero polynomial, and so $ch(M) = 0$ for matrices over any commutative ring $R$.

This might seem to depend on the ring being infinite. Imagine we send $\mathbb Z$ to $\mathbb Z/10\mathbb Z$: since there are only finitely many elements, why should the polynomial be zero? One might imagine that this needs Zariski-like arguments to be handled. In fact the finiteness is harmless. The vanishing happens in $\mathbb Z[a, b, c, d]$ as a formal polynomial, and the unique ring homomorphism $\mathbb Z \rightarrow R$ sends the zero polynomial to the zero polynomial in $R[a, b, c, d]$.
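This is an illustration, not a proof. The point of the argument is that each entry of $ch(M)$ is identically zero as a polynomial, so it is zero in every commutative ring, finite ones included. This sketch watches that happen over $\mathbb Z/10\mathbb Z$ by brute force over all $10^4$ matrices. The function name `ch_residual_mod` is hypothetical.

```python
def ch_residual_mod(a, b, c, d, m):
    """Entries of ch(M) = M^2 - (a + d) M + (ad - bc) I for M = [[a, b], [c, d]], mod m."""
    tr, dt = a + d, a * d - b * c
    # M^2 = [[a^2 + bc, ab + bd], [ca + dc, cb + d^2]] (p = a, q = b, r = c, s = d above)
    return (
        (a * a + b * c - tr * a + dt) % m,
        (a * b + b * d - tr * b) % m,
        (c * a + d * c - tr * c) % m,
        (c * b + d * d - tr * d + dt) % m,
    )

m = 10
failures = [
    (a, b, c, d)
    for a in range(m) for b in range(m) for c in range(m) for d in range(m)
    if ch_residual_mod(a, b, c, d, m) != (0, 0, 0, 0)
]
print(len(failures))  # 0: every 2x2 matrix over Z/10Z satisfies ch(M) = 0
```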

Cramer's rules

We can get Cramer's rule through some handwavy manipulation, or make that manipulation rigorous using geometric algebra.

Say we have a system of equations:

$$ \begin{aligned} a[1] x + b[1] y &= c[1] \\ a[2] x + b[2] y &= c[2] \end{aligned} $$

We can write this as:

$$ \vec a x + \vec b y = \vec c $$

where $\vec a \equiv (a[1], a[2])$ and so on. To solve the system, we wedge with $\vec a$ and $\vec b$:

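$$ \begin{aligned} \vec a \wedge (\vec a x + \vec b y) &= \vec a \wedge \vec c \\ (\vec a \wedge \vec b)\, y &= \vec a \wedge \vec c \\ y &= \frac{\vec a \wedge \vec c}{\vec a \wedge \vec b} = \frac{\begin{vmatrix} a[1] & c[1] \\ a[2] & c[2] \end{vmatrix}}{\begin{vmatrix} a[1] & b[1] \\ a[2] & b[2] \end{vmatrix}} \\ (\vec a x + \vec b y) \wedge \vec b &= \vec c \wedge \vec b \\ x\, (\vec a \wedge \vec b) &= \vec c \wedge \vec b \\ x &= \frac{\vec c \wedge \vec b}{\vec a \wedge \vec b} = \frac{\begin{vmatrix} c[1] & b[1] \\ c[2] & b[2] \end{vmatrix}}{\begin{vmatrix} a[1] & b[1] \\ a[2] & b[2] \end{vmatrix}} \end{aligned} $$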

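Both wedges live in the one-dimensional space $\Lambda^2 \mathbb R^2$, so each quotient is a scalar. This is exactly Cramer's rule.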
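A minimal sketch of the wedge computation. In $\mathbb R^2$, $\vec u \wedge \vec v$ is $u[1] v[2] - u[2] v[1]$ times $e_1 \wedge e_2$, so we store just that coefficient. We assume integer coefficients so that `Fraction` keeps the answer exact. The names `wedge` and `cramer_2x2` are hypothetical.

```python
from fractions import Fraction

def wedge(u, v):
    """Coefficient of e1 ^ e2 in u ^ v, for u, v in R^2."""
    return u[0] * v[1] - u[1] * v[0]

def cramer_2x2(a, b, c):
    """Solve a*x + b*y = c for column vectors a, b, c with integer entries."""
    denom = wedge(a, b)
    if denom == 0:
        raise ValueError("a and b are parallel; no unique solution")
    x = Fraction(wedge(c, b), denom)  # wedge the equation with b on the right
    y = Fraction(wedge(a, c), denom)  # wedge the equation with a on the left
    return x, y

# 2x + 3y = 8 and x - y = -1, written column-wise
print(cramer_2x2((2, 1), (3, -1), (8, -1)))  # (Fraction(1, 1), Fraction(2, 1))
```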

The formula for the adjugate matrix from Cramer's rule (TODO)

References

Nakayama's lemma (WIP)

Geometric applications of Jacobson radical

vector fields over the 2 sphere (WIP)

We assume that we already know the hairy ball theorem: there is no continuous, nowhere vanishing vector field on $S^2$. Using this, we wish to deduce two things. First, the module of vector fields over $S^2$ is not free. Second, as an explicit version of what the Serre-Swan theorem tells us, this module is projective.

1. Vector fields over the 2-sphere are projective

Embed the 2-sphere as a subset of $\mathbb R^3$. So at each point, we have a tangent plane, and a normal vector that is perpendicular to the sphere: for the point $p \in S^2$, we have the vector $p$ as being normal to $T_p S^2$ at $p$. So the normal bundle is of the form:

$$ \mathfrak N \equiv \{ (s, \lambda s) : s \in S^2, \lambda \in \mathbb R \} $$

- The trivial line bundle is of the form $Tr \equiv S^2 \times \mathbb R = \{ (s, r) : s \in S^2, r \in \mathbb R \}$.

- We want to show an isomorphism between $\mathfrak N$ and $Tr$.

- Consider a map $f: \mathfrak N \rightarrow Tr$ such that $f((s, n)) \equiv (s, \langle s, n \rangle)$. Since $|s| = 1$, this sends $(s, \lambda s) \mapsto (s, \lambda)$. The inverse is $g: Tr \rightarrow \mathfrak N$ given by $g((s, r)) \equiv (s, r \cdot s)$. It's easy to check that these are inverses, so we at least have a bijection.

- To show that it's a vector bundle morphism, TODO.

- (This is hopelessly broken, I can't treat the bundle as a product. I can locally I guess by taking charts; I'm not sure how I ought to treat it globally!)

2. Vector fields over the sphere are not free

- (1) Given two bundles $E, F$ over any manifold $M$, a module isomorphism $f: \mathfrak X(E) \rightarrow \mathfrak X(F)$ between their spaces of sections, as $C^\infty(M)$-modules, is induced by a smooth isomorphism of vector bundles $\Phi: E \rightarrow F$.

- (2) The module $\mathfrak X(M)$ is finitely generated as a $C^\infty(M)$-module.

- Now, assume that $\mathfrak X(S^2)$ is a free module, so we get that $\mathfrak X(S^2) \simeq \oplus_i C^\infty(S^2)$.

- By (2), we know that this must be a finite direct sum for some finite $N$: $\mathfrak X(S^2) \simeq \bigoplus_{i=1}^N C^\infty(S^2)$.

- But an element of $\bigoplus_{i=1}^N C^\infty(S^2)$ is a tuple of $N$ smooth functions on $S^2$. That is the same as clubbing them all together into a vector of $N$ values at each point of $S^2$.

- So we get a smooth function $S^2 \rightarrow \mathbb R^N$, AKA a section of the trivial bundle $\underline{\mathbb R^N} \equiv S^2 \times \mathbb R^N$.

- So if vector fields over $S^2$ formed a free module, then by (1) the tangent bundle of $S^2$ would be isomorphic to the trivial bundle $\underline{\mathbb R^N}$. We would have trivialized the tangent bundle of the sphere.

- Now, pick the constant section $s \mapsto (1, 1, \dots, 1)$ of $S^2 \times \mathbb R^N$. It vanishes nowhere, and a bundle isomorphism preserves this, so it corresponds to a nowhere vanishing vector field over $S^2$. But such an object cannot exist, and hence vector fields over the sphere cannot be free.

References

- Smooth vector fields over $S^2$ is not a free module

- Smooth vector fields over $S^2$ is a projective module

Learning to talk with your hands

I was intrigued by this HN thread about learning to talk with your hands. I guess I'm going to try and do this more often now.

Lovecraftisms

I recently binged a lot of Lovecraftian horror to get a sense of his writing style. Here's a big list of my favourite quotes:

His madness held no affinity to any sort recorded in even the latest and most exhaustive of treatises, and was conjoined to a mental force which would have made him a genius or a leader had it not been twisted into strange and grotesque forms.

seething vortex of time

Snatches of what I read and wrote would linger in my memory. There were horrible annals of other worlds and other universes, and of stirrings of formless life outside of all universes

clinging pathetically to the cold planet and burrowing to its horror-filled core, before the utter end.

appalled at the measureless age of the fragments

fitted darkly into certain Papuan and Polynesian legends of infinite antiquity

The condition and scattering of the blocks told mutely of vertiginous cycles of time and geologic upheavals of cosmic savagery.

uttermost horrors of the aeon-old legendry

The moon, slightly past full, shone from a clear sky, and drenched the ancient sands with a white, leprous radiance which seemed to me somehow infinitely evil.

with the bloated, fungoid moon sinking in the west

how preserved through aeons of geologic convulsion I could not then and cannot now even attempt to guess.

gently bred families of the town

could not escape the sensation of being watched from ambush on every hand by sly, staring eyes that never shut.

and there was that constant, terrifying impression of other sounds--perhaps from regions beyond life--trembling on the very brink of audibility.

little garden oasis of village-like antiquity where huge, friendly cats sunned themselves atop a convenient shed.

Better it be left alone for the years to topple, lest things be stirred that ought to rest forever in the black abyss

It clearly belonged to some settled technique of infinite maturity and perfection, yet that technique was utterly remote from any--Eastern or Western, ancient or modern--which I had ever heard of or seen exemplified. It was as if the workmanship were that of another planet.

intricate arabesques roused into a kind of ophidian animation.

never was an organic brain nearer to utter annihilation in the chaos that transcends form and force and symmetry.

away outside the galaxy and possibly beyond the last curved rim of space.

It was like the drone of some loathsome, gigantic insect ponderously shaped into the articulate speech of an alien species

In time the ruts of custom and economic interest became so deeply cut in approved places that there was no longer any reason for going outside them, and the haunted hills were left deserted by accident rather than by design

There were, too, certain caves of problematical depth in the sides of the hills; with mouths closed by boulders in a manner scarcely accidental, and with more than an average quota of the queer prints leading both toward and away from them

he fortified himself with the mass lore of cryptography

As before, the sides of the road showed a bruising indicative of the blasphemously stupendous bulk of the horror

The dogs slavered and crouched close to the feet of the fear-numbed family.

an added element of furtiveness in the clouded brain which subtly transformed him from an object to a subject of fear

fireflies come out in abnormal profusion to dance to the raucous, creepily insistent rhythms of stridently piping bull-frogs.

a seemingly limitless legion of whippoorwills that cried their endless message in repetitions timed diabolically to the wheezing gasps of the dying man

pandemoniac cachinnation which filled all the countryside

the left-hand one of which, in the Latin version, contained such monstrous threats to the peace and sanity of the world

Their arrangement was odd, and seemed to follow the symmetries of some cosmic geometry unknown to earth or the solar system

faint miasmal odour which clings about houses that have stood too long

I do not believe I would like to visit that country by night--at least not when the sinister stars are out

there was much breathless talk of new elements, bizarre optical properties, and other things which puzzled men of science are wont to say when faced by the unknown.

stealthy bitterness and sickishness, so that even the smallest bites induced a lasting disgust

plants of that kind ought never to sprout in a healthy world.

everywhere were those hectic and prismatic variants of some diseased, underlying primary tone without a place among the known tints of earth.

In her raving there was not a single specific noun, but only verbs and pronouns.

great bare trees clawing up at the grey November sky with a studied malevolence

There are things which cannot be mentioned, and what is done in common humanity is sometimes cruelly judged by the law.

monstrous constellation of unnatural light, like a glutted swarm of corpse-fed fireflies dancing hellish sarabands over an accursed marsh,

No traveler has ever escaped a sense of strangeness in those deep ravines, and artists shiver as they paint thick woods whose mystery is as much of the spirits as of the eye.

We live on a placid island of ignorance in the midst of black seas of infinity, and it was not meant that we should voyage far. The sciences, each straining in its own direction, have hitherto harmed us little; but some day the piecing together of dissociated knowledge will open up such terrifying vistas of reality, and of our frightful position therein, that we shall either go mad from the revelation...

form which only a diseased fancy could conceive

... dreams are older than brooding Tyre, or the contemplative Sphinx, or garden-girdled Babylon

iridescent flecks and striations resembled nothing familiar to geology or mineralogy.

miserable huddle of hut

Only poetry or madness could do justice to the noises heard by Legrasse's men as they ploughed on through the black morass.

In his house at R'lyeh dead Cthulhu waits dreaming

all the earth would flame with a holocaust of ecstasy and freedom.

The aperture was black with a darkness almost material.

Hairy ball theorem from Sperner's Lemma (WIP)

- Let $\Delta$ be an n-dimensional simplex with vertices $v_0, v_1, \dots, v_n$.

- Let $\Delta_i$ be the face opposite to vertex $v_i$. That is, $\Delta_i$ is the face with all vertices except $v_i$.

- The boundary $\partial \Delta$ is the union of the $n+1$ faces $\Delta_i$ ($i$ ranges from $0$ to $n$).

- Let $\Delta$ be subdivided into smaller simplices forming a simplicial complex $S$.

- Sperner's lemma: Let the vertices of $S$ be labelled by $\phi: S \rightarrow \Delta$ (that is, $\phi$ maps every vertex of the simplicial complex $S$ to one of the vertices of the simplex $\Delta$), such that $v \in \Delta_i \implies \phi(v) \neq v_i$. Then there is at least one $n$-dimensional simplex of $S$ whose image is all of $\Delta$. That is, there is at least one $n$-dimensional sub-simplex $T \subseteq S$ whose vertices are mapped onto $\{v_0, v_1, \dots, v_n\}$. More strongly, the number of such sub-simplices is odd.

- We can see that the map $\phi$ looks like some sort of retract that maps the complex $S$ to its boundary $\Delta$. Then Sperner's lemma tells us that there is one "region" $T \subseteq S$ that gets mapped onto $\Delta$.

1D proof of Sperner's: Proof by cohomology

- For 1D, assume we have a line with vertex set $V$ and edges $E$. Let the vertex at the beginning be $v_0$ and the vertex at the end be $v_1$. That is, $\Delta \equiv \{v_0, v_1\}$ and $S \equiv (V, E)$ is a subdivision of $\Delta$: it splits the line $\Delta$ into smaller portions. Let $\phi: S \rightarrow \Delta$ be the labelling function, and write $A$ for the label $v_0$ and $B$ for the label $v_1$.

- Create a function $f: \Delta \rightarrow \mathbb F_2$ that assigns $0$ to $v_0$ and $1$ to $v_1$: $f(v_0) \equiv 0; f(v_1) \equiv 1$. Use this to generate a function on the full complex $F: S \rightarrow \mathbb F_2; F(v) \equiv f(\phi(v))$.

- From $F$, generate a function on the edges $dF: E \rightarrow \mathbb F_2; dF(\overline{vw}) = F(w) + F(v)$. This scores edges as $dF(AB) = 1$, $dF(BA) = 1$, $dF(AA) = dF(BB) = 0$ (recall that the arithmetic is over $\mathbb F_2$). So, $dF$ adds a one every time we switch from $A$ to $B$ or from $B$ to $A$.

- However, $dF$ is generated from a "potential function" $F$, so its sum telescopes. By the labelling condition, the first vertex is labelled $A$ and the last is labelled $B$. Hence $\sum_{e \in E} dF(e) = f(v_1) - f(v_0) = 1 - 0 = 1$, so we must have switched labels an odd number of times.

- Every switch happens across an edge labelled $AB$ or $BA$, so the number of fully labelled edges is odd.
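Here is a small simulation of the 1D argument, as a sketch. Labels are encoded as 0 for $A = v_0$ and 1 for $B = v_1$, so $F$ is just the label, and $dF$ on an edge is the sum of its endpoint labels mod 2. The helper names and the random labelling are illustrative assumptions.

```python
import random

def sperner_path(num_interior, rng):
    """Labels along a subdivided segment. The Sperner condition forces the endpoints
    to 0 (= v_0) and 1 (= v_1); interior labels are arbitrary."""
    return [0] + [rng.choice((0, 1)) for _ in range(num_interior)] + [1]

def dF(labels):
    """dF on each edge over F_2, where F(v) = f(phi(v)) is the label itself."""
    return [(u + v) % 2 for u, v in zip(labels, labels[1:])]

rng = random.Random(0)
labels = sperner_path(10, rng)
print(labels)
print(sum(dF(labels)) % 2)   # telescopes to f(v_1) - f(v_0) = 1 in F_2
print(sum(dF(labels)))       # number of fully labelled edges, always odd
```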

2D proof of Sperner's: Proof by search

- Start from an edge $ef$ on the bottom side, labelled $AB$. We are looking for a triangle labelled $ABC$.

- To start: pick the vertex above $ef$ that completes its triangle, say $g$. If this is labelled $C$, we are done. If not, say it is labelled $B$. So we get the triangle $efg = ABB$. Launch our search procedure from this triangle $efg$.

- Find the triangle adjacent to $efg$ along the edge $eg = AB$ (the other $AB$ edge, not the one we started with). If this adjacent triangle $egh$ has $h = C$, we are done. If not, move to the triangle $egh$.

- See that we will either find a triangle labelled $ABC$, or keep running into triangles labelled $ABB$ or $AAB$.

- We cannot ever repeat a triangle in our path; to repeat a triangle is to start with some edge $xy$ and then to pick a vertex $z$ such that $xyz=efg$ where $efg$ was already picked. This must mean that the edge $ef$ was already picked. [WIP]
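The search argument above is still WIP. As a complementary sketch, this brute-force check does not implement the path-following search. It checks the parity claim of Sperner's lemma instead. It subdivides a triangle into $n^2$ small triangles on a grid, labels the grid vertices at random subject to the Sperner condition, and counts small triangles labelled $\{0, 1, 2\}$. The grid triangulation, the helper name `sperner_count`, and the random labelling are assumptions for illustration.

```python
import random

def sperner_count(n, rng):
    """Count fully labelled small triangles in a random Sperner labelling of an n-grid."""
    label = {}
    for i in range(n + 1):
        for j in range(n + 1 - i):
            weights = (n - i - j, i, j)  # barycentric weights for corners 0, 1, 2
            # Sperner condition: a vertex on the face opposite corner l (weight 0) avoids label l.
            label[(i, j)] = rng.choice([l for l, w in enumerate(weights) if w > 0])
    count = 0
    for i in range(n):
        for j in range(n - i):
            up = {label[(i, j)], label[(i + 1, j)], label[(i, j + 1)]}
            count += up == {0, 1, 2}
            if i + j <= n - 2:
                down = {label[(i + 1, j)], label[(i, j + 1)], label[(i + 1, j + 1)]}
                count += down == {0, 1, 2}
    return count

rng = random.Random(0)
print([sperner_count(n, rng) for n in range(1, 9)])  # every entry is odd
```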

Proof of hairy ball by Sperner's lemma [WIP]

Why hairy ball is interesting: Projective modules

The reason I care about the hairy ball theorem has to do with vector fields. The idea is to first think of smooth vector fields over a smooth manifold. What algebraic structure do they have? Indeed, they are a vector space over $\mathbb R$. However, it is difficult to exhibit a basis. Naively, for each point $p \in M$, we would need to pick a basis $T_p B \subset T_p M$. This brings in issues of smoothness, etc. Regardless, the dimension would be uncountable.

On the other hand, let's say we allow ourselves to consider vector fields as modules over the ring of smooth functions on a manifold. That is, we can scale the vector field by a different value at each point.

We can hope the "dimension" of the module is much smaller. For example, take $\mathbb R^2$ and some vector field $V \equiv v_x \hat x + v_y \hat y$: the functions $v_x$ and $v_y$ let us write it in a basis! Create the vector fields $V_x \equiv \hat x$ and $V_y \equiv \hat y$. Then any vector field $V$ can be written as $V = v_x V_x + v_y V_y$ for functions $v_x, v_y$ in a unique way!

However, as we know, not all modules are free. A geometric example of such a phenomenon is the module of vector fields on the sphere. By the hairy ball theorem, any continuous vector field on $S^2$ must vanish at at least one point. Suppose we try to build a vector field pointing "rightwards" (analogous to $\hat x$) and one pointing "upwards" (analogous to $\hat y$). These cannot be valid smooth vector fields, because they vanish nowhere! So, we will be forced to take more than two vector fields. But when we do that, we will lose uniqueness of representation. However, all is not lost. The Serre-Swan theorem tells us that any such module of vector fields will be a projective module. The sphere gives us a module that is not free. I'm not sure how to show that it's projective.

Simple example of projective module that is not free.

- Let $K$ be a field. Consider $R \equiv K \times K$ as a ring, and let $M \equiv K$ be a module over $R$, where $(a, b) \in R$ acts on $m \in M$ by $(a, b) \cdot m \equiv am$.

- $M$ is a projective module because $M \oplus K \simeq R$, where this second $K$ is an $R$-module via $(a, b) \cdot k \equiv bk$. That is, we can direct sum something onto $M$ to get a free module $\oplus_i R$.

- On the other hand, $M$ itself is not free, because $M \not\simeq \oplus_i R$ for any $i$. Intuitively, $M$ is "half an $R$", as $M \simeq K$ while $R \simeq K \times K$.

- The geometric picture is that we have a space with two points $\{p, q\}$. We have a bundle on top of it, with $M$ sitting on $p$ and $0$ (the trivial module) sitting on top of $q$. When we restrict to $p$, we have a good bundle $M$.

- But over the whole space, we can't write the bundle as $M \times \{p, q\}$, because the fibers have different dimensions! The dimension over $p$ is $dim(M) = 1$, while over $q$ it is $dim(0) = 0$.

- What we can do is to "complete" the bundle by adding a copy of $M$ over $q$, so that we can then trivialise the bundle to write $M \times \{p, q\}$.

- So, a projective module corresponds to a vector bundle because it is locally like a vector space, but may not be trivialisable due to a difference in dimension, or compatibility, or some such.
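To make the "half an $R$" intuition concrete, here is a finite sketch under the assumption $K = \mathbb F_2$, so $R = \mathbb F_2 \times \mathbb F_2$ has 4 elements. Let $N$ be the copy of $K$ on which $(a, b)$ acts by $b$. The sketch checks that $(m, n) \mapsto (m, n)$ is an $R$-linear bijection $M \oplus N \rightarrow R$. It also checks that $M$ cannot be free, because a free module $R^k$ has $4^k$ elements while $|M| = 2$. All helper names are hypothetical.

```python
from itertools import product

K = (0, 1)                    # the field F_2
R = list(product(K, K))       # the ring F_2 x F_2, with componentwise operations mod 2

def mul(r, s):
    """Multiplication in R."""
    return ((r[0] * s[0]) % 2, (r[1] * s[1]) % 2)

def act_M(r, m):
    """M = K, with (a, b) . m = a m."""
    return (r[0] * m) % 2

def act_N(r, n):
    """N = K, with (a, b) . n = b n."""
    return (r[1] * n) % 2

def phi(m, n):
    """The candidate isomorphism M (+) N -> R."""
    return (m, n)

# phi hits every element of R exactly once ...
bijective = sorted(phi(m, n) for m in K for n in K) == sorted(R)
# ... and commutes with the R-action: phi(r . (m, n)) == r * phi(m, n).
linear = all(phi(act_M(r, m), act_N(r, n)) == mul(r, phi(m, n))
             for r in R for m in K for n in K)
# A free module R^k has 4**k elements, but M has only 2, so M is not free.
free_sizes = {4 ** k for k in range(10)}
print(bijective, linear, len(K) in free_sizes)   # True True False
```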

Sperner's lemma, Brouwer's fixed point theorem, and cohomology

CS and type theory: Talks by Voevodsky

- Talk 1: Computer Science and Homotopy Theory

- Think of ZFC sets as rooted trees. Have two axioms:

- (1) The subtrees hanging off any vertex are pairwise non-isomorphic (otherwise a set would have two copies of the same element).

- (2) Each leaf must be at finite depth from the root.

- This is horrible to work with, so type theory!

- Talk 2: What if foundations of math is inconsistent?

- We "know" that first order math is consistent. Yet we can prove that first order math cannot prove its own consistency!

- Choice 1: If we "know" FOL is consistent, then we should be able to transform this knowledge into a proof, then 2nd incompleteness is false.

- Choice 2: Admit "transcendental" part of math, write dubious philosophy.

- Choice 3: Admit that our sensation that FOL +arithmetic is consistent is an illusion and admit that FOL arithmetic is inconsistent.

- Time to consider Choice 3 seriously?

First order arithmetic

First order arithmetic is a mathematical object belonging to the class of objects called formal theories. A formal theory has four pieces of data:

- Special symbols, names of variables.

- Syntactic rules.

- Deduction rules: Construct new closed formulas from old closed formula.

- Axioms: collection of closed formulas.

Anything that is obtainable from these deduction rules is called a theorem.

First order logic has the symbols ∀, ∃, ⇒, ! (not), and so on. A first order theory is inconsistent if there is a closed formula $A$ such that both $A$ and $!A$ are theorems.

- Free variables describe subsets. For example, the formula $\exists n.\ n^2 = m$ describes the set $\{ m : \exists n.\ n^2 = m \}$.

- It's possible to construct subsets (formulae with one free variable) whose membership is undecidable. So you can prove that it is impossible to say anything whatsoever about these subsets.

Gentzen's proof and problems with it

Gentzen reasons about trees of deductions. He shows that proofs correspond to combinatorial objects, and that an inconsistency would correspond to an infinite decreasing sequence that never terminates. Then he says that it is "self evident" that this cannot happen. But it is not self evident!

What would inconsistency of FOL mean for mathematicians?

- Inconsistency of FOL implies inconsistency of many other systems (eg. set theory).

- Inconsistency of FOL implies inconsistency of constructive (intuitionistic) mathematics! (WTF?) This was shown by Gödel in 1933: take a proof of a contradiction in classical arithmetic and translate it so that it no longer uses LEM.

- We need foundations that can create reliable proofs despite being inconsistent!

- Have systems that react to inconsistency in less drastic ways. One possible candidate is constructive type theories. A proof of a formula in such a system is itself a formula in the system. There are no deduction rules, only syntactic rules. So a proof is an object that can be studied in the system. If one has a proof of contradiction, then such a proof can be detected --- they have certain properties that can be detected by an algorithm (what properties?)

New workflow

- Formalize a problem.

- Construct creative solution.

- Submit proof to a "reliable" verifier. If the verifier terminates, we are done. If the verifier does not terminate, we need to look for other proofs that can terminate.

- our abstract thinking cancels out by normalisation :P

Summary

- Correct interpretation of 2nd incompleteness is a step of proof of inconsistency of FOL (Conjecture).

- In math, we need to learn how to use inconsistent theories to obtain reliable proofs. Can lead to more freedom in mathematical workflow.

Univalent Foundations: New Foundations of Mathematics

- Talk 3: Univalent foundations --- New Foundations of Mathematics

- Was uncertain about the future when working on 2-categories and higher math. There was no way to ground oneself by doing "computations" (numerical experiments). To make it worse, the existing set-theoretic foundations are bad for these types of objects.

- Selected papers on Automath.

- Moving past category theory as the new foundations was very difficult for Voevodsky.

- Categories are "higher dimensional sets"? NO! Categories are "posets in the next dimension". The correct version of "sets in the next dimension" is groupoids (WHY?) MathOverflow question

- Grothendieck went from isomorphisms to all morphisms; this prevented him from gravitating towards groupoids.

- Univalent foundations is a complete foundational system.

Voevodsky's univalence principle --- Joyal

-

Univanent type theory is arrived at by adding univalence to MLTT.

-

Goal of univalent foundations is to apply UTT to foundations.

- Univalence is to type theory what the induction principle is to Peano arithmetic.

- Univalence implies descent. Descent implies the Blakers-Massey theorem, which implies Goodwillie calculus.

- The syntactic system of type theory is a tribe.

- A clan is a category equipped with a class of carrable maps called fibrations. A map is carrable if we can pull it back along any other map.

- A clan is a category along with maps called "fibrations", such that (1) every isomorphism is a fibration, (2) fibrations are closed under composition, (3) fibrations are carrable, (4) the base change of a fibration is a fibration, (5) the category has a terminal object, and every map into the terminal object is a fibration.

- A map $u: A \rightarrow B$ is anodyne if it has the left lifting property with respect to every fibration.

- A tribe is a clan such that (1) the base change of an anodyne map along a fibration is anodyne, (2) every map factorizes as an anodyne map followed by a fibration.

- Kan complexes form a tribe. The fibrations are the Kan fibrations. A map is anodyne here if it is a monomorphism and a homotopy equivalence.

- Given a tribe $E$, we can build a new tribe by slicing, $E/A$ (this is apparently very similar to things people do with Quillen model categories).

- A tribe is like a commutative ring. Just as we extend a ring by adding new variables to get polynomial rings, we can extend a tribe. An elementary extension extends the tribe by adding a new element.

- If $E$ is a tribe, an object of $E$ is a type. We write $E \vdash A : \mathsf{Type}$.

- If we have a map $a: 1 \rightarrow A$, we regard it as an element of $A$: $E \vdash a : A$.

- A fibration is a family of objects. This is a dependent type $x : A \vdash E(x) : \mathsf{Type}$, where $E(x)$ is the fiber of $p: E \rightarrow A$ at a variable element $x : A$.

- A section of a fibration gives an element of the fibration. We write this as $x : A \vdash s(x) : E(x)$, where $s(x)$ denotes the value of $s: A \rightarrow E$ at a variable element $x : A$. (Inhabitance is being able to take a section of a fiber bundle?!)

- Change of parameters / homomorphism is substitution. For $f : A \rightarrow B$:

$$ \frac{y : B \vdash E(y) : \mathsf{Type}}{x : A \vdash E(f(x)) : \mathsf{Type}} $$

This is pulling back a fibration along $f$.

- Elementary extensions $E \rightarrow E(A)$ are called context extensions. They let a type in the empty context be used in the context $x : A$:

$$ \frac{\vdash B : \mathsf{Type}}{x : A \vdash B : \mathsf{Type}} $$

- A map between types is a variable element $f(x) : B$ indexed by $x : A$:

$$ x : A \vdash f(x) : B $$

- Sigma formation rule: the total space of the family is the sum of all fibers(?)

$$ \frac{x : A \vdash E(x) : \mathsf{Type}}{\vdash \sum_{x : A} E(x) : \mathsf{Type}} $$

$$ \frac{x : A \vdash E(x) : \mathsf{Type}}{y : B \vdash \sum_{x : f^{-1}(y)} E(x) : \mathsf{Type}} $$

- The path object for $A$ is obtained by factoring the diagonal map $\mathrm{diag}: A \rightarrow A \times A$, $a \mapsto (a, a)$, as an anodyne map $r: A \rightarrow PA$ followed by a fibration $(s, t) : PA \rightarrow A \times A$.

- A homotopy $h: f \sim g$ between two maps $f, g : A \rightarrow B$ is a map $h: A \rightarrow PB$ such that $sh = f$ and $th = g$. The homotopy relation is a congruence.

- $x : A, y : A \vdash \mathrm{Id}_A(x, y) : \mathsf{Type}$ is called the identity type of $A$.

- An element $p : \mathrm{Id}_A(x, y)$ is a proof that $x =_A y$.

- The reflexivity term $x : A \vdash r(x) : \mathrm{Id}_A(x, x)$ proves $x =_A x$.

- The identity type is a path object.

- $\gamma(x, y): \mathrm{Id}_A(x, y) \rightarrow Eq(E(x), E(y))$. $\gamma$ is some kind of connection: given a path from $x$ to $y$, it lets us transport $E(x)$ to $E(y)$, where $Eq(E(x), E(y))$ is the type of equivalences between the fibers (is this the distortion from the curvature?)
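As a rough sketch, the judgement forms above can be mirrored in Lean 4: a dependent type $x : A \vdash E(x) : \mathsf{Type}$ becomes a function `E : A → Type`, substitution is precomposition, $\sum$ is `Sigma`, and $\mathrm{Id}_A(x, y)$ is played by `x = y`. This is only an analogy: Lean's `=` is a proof-irrelevant proposition, which is incompatible with univalence, so nothing here models the univalent or tribe-theoretic semantics. The names `substitute`, `Total`, `refl_term` and `transport` are hypothetical.

```lean
-- A dependent type x : A ⊢ E x : Type is modelled as a function E : A → Type.

-- Change of parameters is substitution:
-- from y : B ⊢ E y : Type we get x : A ⊢ E (f x) : Type.
def substitute {A B : Type} (E : B → Type) (f : A → B) : A → Type :=
  fun x => E (f x)

-- Sigma formation: the total space of the family E over A.
def Total {A : Type} (E : A → Type) : Type :=
  Sigma E

-- Reflexivity term: x : A ⊢ r(x) : Id_A(x, x).
theorem refl_term {A : Type} (x : A) : x = x :=
  rfl

-- Transport: a proof p : x = y turns an element of the fiber E x
-- into an element of the fiber E y.
def transport {A : Type} (E : A → Type) {x y : A} (p : x = y) : E x → E y :=
  fun e => cast (congrArg E p) e
```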

Hilbert basis theorem for polynomial rings over fields (WIP)

Theorem: Every ideal $I$ of $k[x_1, \dots, x_n]$ is finitely generated.

First we need a lemma:

Lemma:

Let $I \subseteq k[x_1, \dots, x_n]$ be an ideal. (1) $(LT(I)) \equiv (LT(f) : f \in I)$ is a monomial ideal. An ideal $I$ is a monomial ideal if there is a subset $A \subseteq \mathbb Z^n_{\geq 0}$ (possibly infinite) such that $I = (x^a : a \in A)$. That is, $I$ is generated by monomials of the form $x^a$. Recall that since we have
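Monomial ideals have a concrete membership test, a standard fact not stated above: a monomial $x^b$ lies in $(x^a : a \in A)$ exactly when some generator $x^a$ divides $x^b$, i.e. $a \leq b$ componentwise. A minimal Python sketch, assuming a finite generating set $A$ and monomials stored as exponent tuples; the names `divides` and `in_monomial_ideal` are hypothetical.

```python
# A monomial x^b = x_1^{b_1} ... x_n^{b_n} is represented by its exponent tuple b.
Exponent = tuple[int, ...]


def divides(a: Exponent, b: Exponent) -> bool:
    """x^a divides x^b iff a_i <= b_i for every i."""
    return all(ai <= bi for ai, bi in zip(a, b))


def in_monomial_ideal(b: Exponent, generators: list[Exponent]) -> bool:
    """x^b lies in the monomial ideal (x^a : a in A) iff some generator x^a divides x^b."""
    return any(divides(a, b) for a in generators)


# Example in k[x, y]: the monomial ideal I = (x^2 y, x y^3), so A = {(2, 1), (1, 3)}.
A = [(2, 1), (1, 3)]
print(in_monomial_ideal((3, 1), A))  # True: x^3 y = x * (x^2 y)
print(in_monomial_ideal((1, 2), A))  # False: neither generator divides x y^2
```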

Proof of Hilbert basis theorem

- We wish to show that every ideal $I$ of $k[x_1, \dots, x_n]$ is finitely generated.

- If $I = \{ 0 \}$ then take $I = (0)$ and we are done.

- Pick polynomials $g_i$ such that $(LT(I)) = (LT(g_1), LT(g_2), \dots, LT(g_t))$. This is always possible from our lemma. We claim that $I = (g_1, g_2, \dots, g_t)$.

- Since each $g_i \in I$, it is clear that $(g_1, \dots, g_t) \subseteq I$.

- Conversely, let $f \in I$ be a polynomial.

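- Divide $f$ by $g_1, \dots, g_t$ to get $f = \sum_i a_i g_i + r$ where no term of $r$ is divisible by any of $LT(g_1), \dots, LT(g_t)$. We claim that $r = 0$. Indeed, $r = f - \sum_i a_i g_i \in I$, so if $r \neq 0$ then $LT(r) \in (LT(I)) = (LT(g_1), \dots, LT(g_t))$. A monomial lies in a monomial ideal only if it is divisible by one of the generators, so $LT(r)$ is divisible by some $LT(g_i)$, a contradiction. Hence $f = \sum_i a_i g_i \in (g_1, \dots, g_t)$.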
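The division step can be made concrete. Below is a minimal sketch of the multivariate division algorithm in the style of Cox, Little, O'Shea, assuming lex order with $x_1 > x_2 > \dots > x_n$, nonzero divisors $g_i$, and exact rational coefficients. The dictionary representation and the names `leading`, `add_term`, `divide` are hypothetical choices for illustration. Python compares tuples lexicographically, so `max` over exponent tuples picks the lex-leading monomial.

```python
from fractions import Fraction

Monomial = tuple[int, ...]
Poly = dict[Monomial, Fraction]  # exponent tuple -> nonzero coefficient


def leading(p: Poly) -> tuple[Monomial, Fraction]:
    """Leading monomial and coefficient of a nonzero p under lex order."""
    m = max(p)
    return m, p[m]


def add_term(p: Poly, m: Monomial, c: Fraction) -> None:
    """In place: p += c * x^m, dropping coefficients that become zero."""
    new = p.get(m, Fraction(0)) + c
    if new == 0:
        p.pop(m, None)
    else:
        p[m] = new


def divide(f: Poly, gs: list[Poly]) -> tuple[list[Poly], Poly]:
    """Return (a_1..a_t, r) with f = sum a_i g_i + r and no term of r divisible by any LT(g_i)."""
    p = dict(f)
    quotients: list[Poly] = [{} for _ in gs]
    r: Poly = {}
    while p:
        m, c = leading(p)
        for q, g in zip(quotients, gs):
            gm, gc = leading(g)
            if all(x >= y for x, y in zip(m, gm)):
                # LT(p) / LT(g) = (c / gc) x^(m - gm); subtract that multiple of g from p.
                tm = tuple(x - y for x, y in zip(m, gm))
                tc = c / gc
                add_term(q, tm, tc)
                for hm, hc in g.items():
                    add_term(p, tuple(x + y for x, y in zip(tm, hm)), -tc * hc)
                break
        else:
            # LT(p) is divisible by no LT(g_i): move it into the remainder.
            add_term(r, m, c)
            del p[m]
    return quotients, r


F = Fraction
# Divide f = x^2 y + x y^2 + y^2 by g1 = x y - 1 and g2 = y^2 - 1 (lex, x > y).
f = {(2, 1): F(1), (1, 2): F(1), (0, 2): F(1)}
g1 = {(1, 1): F(1), (0, 0): F(-1)}
g2 = {(0, 2): F(1), (0, 0): F(-1)}
(a1, a2), r = divide(f, [g1, g2])
print(a1, a2, r)  # a1 = x + y, a2 = 1, r = x + y + 1
```

In general $r$ depends on the order in which the $g_i$ are listed; the proof above only uses the property that no term of $r$ is divisible by any $LT(g_i)$.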

References

- Cox, Little, O'Shea: Ideals, Varieties, and Algorithms (computational algebraic geometry).

Covering spaces (WIP)

Covering spaces: Intuition

- Consider the map $p(z) = z^2 : \mathbb C^\times \rightarrow \mathbb C^\times$. This is a 2-to-1 map. We can try to define an inverse regardless.

- We can define a "square root" if we want. Cut out the half-line $B \equiv (-\infty, 0]$, called a branch cut. We get two functions $q_+, q_-: \mathbb C \setminus B \rightarrow \mathbb C^\times$ such that $p(q_\pm(z)) = z$. Here, we have $q_- = - q_+$.

- The point of taking the branch cut is to get a simply connected domain. $\mathbb C^\times$ is not simply connected, while $\mathbb C \setminus B$ is simply connected! (This seems so crucial, why has no one told me this before?!)

- Eg 2: the exponential $\exp: \mathbb C \rightarrow \mathbb C^\times$. This is surjective, and infinite-to-1: $e^{z + 2 \pi i n} = e^{z}$ for every $n \in \mathbb Z$.

- Again, on $\mathbb C \setminus B$, we have $q_n \equiv \log + 2 \pi i n$ (with $\log$ the principal branch), such that $\exp(q_n(z)) = z$.

- A covering map is, roughly speaking, something like the above: a map that's $n$-to-1 and has $n$ local inverses defined on simply connected subsets of the target.

- So if we have $p: Y \rightarrow X$, we have $q: U \rightarrow Y$ (for $U \subseteq X$) such that $p(q(z)) = z, \forall z \in U$.
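Both examples can be checked numerically. A minimal sketch, assuming Python's `cmath` principal branches, whose branch cut is the negative real axis and so matches $B$ above; the function names simply reuse the symbols from the text.

```python
import cmath


def p(z: complex) -> complex:
    """The 2-to-1 map p(z) = z^2 on C^x."""
    return z * z


def q_plus(z: complex) -> complex:
    """Principal square root. cmath.sqrt has its branch cut on the negative real axis,
    so q_plus is continuous on C minus B, with B = (-inf, 0]."""
    return cmath.sqrt(z)


def q_minus(z: complex) -> complex:
    """The other branch: q_- = -q_+."""
    return -q_plus(z)


def q_n(z: complex, n: int) -> complex:
    """The n-th branch of log on C minus B: the principal log plus 2*pi*i*n."""
    return cmath.log(z) + 2j * cmath.pi * n


z = 3 - 4j
print(p(q_plus(z)), p(q_minus(z)))  # both give back z, up to rounding
print(cmath.exp(q_n(z, 5)))         # gives back z for every n, up to rounding

# Across the cut the branch does not glue: just above -1 q_plus is about +i,
# just below -1 it is about -i.
print(q_plus(-1 + 1e-12j), q_plus(-1 - 1e-12j))
```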

Covering spaces: Definition

- An open subset $U \subseteq X$ is called an elementary neighbourhood if there is a discrete set $F$ and a homeomorphism $h: p^{-1}(U) \rightarrow U \times F$ such that $p|_{p^{-1}(U)}(y) = fst(h(y))$, i.e. $p|_{p^{-1}(U)} = pr_1 \circ h$.

- Alternative definition: an open subset $U \subseteq X$ is called evenly covered (an elementary neighbourhood) if $p^{-1}(U) = \bigsqcup_\alpha V_\alpha$ where the $V_\alpha$ are disjoint and open, and $p|_{V_\alpha} : V_\alpha \rightarrow U$ is a homeomorphism for all $\alpha$.

- An elementary neighbourhood is the region where we have the local inverses (the complement of a branch cut).

- For each $i \in F$ we get a local inverse $q_i : U \rightarrow p^{-1}(U)$, $q_i(x) \equiv h^{-1}(x, i)$: first send $x \mapsto (x, i) \in U \times F$, then map back along $h^{-1}$.

- We say $p$ is a covering map if $X$ is covered by elementary neighbourhoods.

- We say $V \subseteq Y$ is an elementary sheet if it is path connected and $p(V)$ is an elementary neighbourhood.

- So, consider $p(x) = e^{ix}: \mathbb R \rightarrow S^1$. If we cut the circle at the point $(1, 0)$, then we have the elementary neighbourhood $S^1 \setminus \{(1, 0)\}$ and the elementary sheets $(2 \pi k, 2 \pi (k+1))$ for $k \in \mathbb Z$.

- The point is that $p^{-1}$ takes $U$ to something homeomorphic to $U \times F$: a local product! So even though the global covering space $\mathbb R$ does not look like a product of the circle with a discrete set, it locally does. So it's some sort of fiber bundle? (Yes: a fiber bundle with discrete fiber $F$.)
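For $p(x) = e^{ix}$ the local inverses $q_k$ and the trivialisation $h$ can be written out explicitly. A sketch, assuming $U = S^1 \setminus \{(1, 0)\}$ and the discrete set $F = \mathbb Z$ indexing the sheets; `q`, `h` and `TWO_PI` are hypothetical names standing for the maps in the text.

```python
import cmath
import math

TWO_PI = 2 * math.pi


def p(x: float) -> complex:
    """The covering map p(x) = e^{ix} : R -> S^1."""
    return cmath.exp(1j * x)


def q(k: int, z: complex) -> float:
    """Local inverse on U = S^1 - {1}, landing in the sheet (2*pi*k, 2*pi*(k+1)).
    Assumes |z| = 1 and z != 1."""
    theta = cmath.phase(z) % TWO_PI  # phase() is in [-pi, pi]; shift into [0, 2*pi)
    return theta + TWO_PI * k


def h(x: float) -> tuple[complex, int]:
    """Trivialisation p^{-1}(U) -> U x Z: a point of R - 2*pi*Z goes to
    (its image in U, the index k of the sheet containing it)."""
    return p(x), math.floor(x / TWO_PI)


z = p(1.0)
for k in range(-2, 3):
    x = q(k, z)
    # p(q_k(z)) recovers z, and h reports that q_k landed in sheet k.
    print(k, x, abs(p(x) - z) < 1e-12, h(x)[1] == k)
```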

Slogan: Covering space is locally disjoint copies of the original space.

Path lifting and Monodromy

- Monodromy is the behaviour induced in the covering space when we move around a loop in the base.

- Etymology: Mono --- single, drome --- running. So running in a single loop / running around a single time.

- Holonomy is a type of monodromy that occurs due to parallel transport around a loop; it detects curvature.

- Loop on the base is an element of $\pi_1(X)$.

- Pick some point $x \in X$. Consider $F \equiv p^{-1}(x)$ ($F$ for fiber).

- Now move in a small loop on the base, $\gamma$. The local movement will cause movement of the elements of the fiber.

- Since $\gamma(1) = \gamma(0)$, at the end of the movement the elements of the fiber again make up the original set $F$.

- So moving in a loop induces a permutation of the elements of the fiber $F$.

- Every element of $\pi_1(X)$ induces a permutation of elements of the fiber $F$.

- This lets us detect non-triviality of $\pi_1(X)$. The action of $\pi_1(X)$ on the fiber lets us "detect" what $\pi_1(X)$ is.

- We will define what it means to "move the fiber along the path".

Path lifting lemma

Theorem: Suppose $p: Y \rightarrow X$ is a covering map. Let $\delta: [0, 1] \rightarrow X$ be a path such that $\delta(0) = x$, and let $y \in p^{-1}(x)$ [$y$ is in the fiber of $x$]. Then there is a unique path $\gamma: [0,1] \rightarrow Y$ which "lifts" $\delta$ and starts at $y$. That is, $p \circ \gamma = \delta$ and $\gamma(0) = y$.

Slogan: Paths can be lifted. Given how to begin the lift, can be extended all the way.

- Let $N$ be a collection of elementary neighbourhoods covering $X$.

- $\{ \delta^{-1}(U) : U \in N \}$ is an open cover (in the compactness sense) of $[0, 1]$.

- By compactness (via the Lebesgue number lemma), divide the interval into subintervals $0 = t_0 < t_1 < \dots < t_n = 1$ such that $\delta_k \equiv \delta|_{[t_k, t_{k+1}]}$ lands in $U_k$, an elementary neighbourhood.

- Build $\gamma$ by induction on $k$.

- We know that $\gamma(0)$ should be $y$.

- Since $U_0$ is an elementary neighbourhood, there are elementary sheets living over $U_0$, indexed by some discrete set $F$. $y$ lives in one of these sheets. We have local inverses $q_m$; one of them lands on the sheet of $y$, call it $q$. So we get a map $q: U_0 \rightarrow Y$ such that $q(x) = y$.

- Define $\gamma(0) \equiv q(\delta(0)) = q(x) = y$.

- Extend $\gamma$ up to $t_1$ by $\gamma(t) \equiv q(\delta(t))$ for $0 \leq t \leq t_1$.

- Continue all the way up to $t_k$.

- To get $\gamma$ on $[t_k, t_{k+1}]$: there exists a local inverse $q_k: U_k \rightarrow Y$ such that $q_k(\delta(t_k)) = \gamma(t_k)$. Define $\gamma(t) \equiv q_k(\delta(t))$ for $t_k \leq t \leq t_{k+1}$.

- This is continuous: $\delta$ is continuous by definition, each $q_k$ is continuous since it is a local inverse over an elementary neighbourhood, and the pieces of $\gamma$ agree at the endpoints $t_k$, so the glued map $\gamma$ is continuous.

- We can check this is a lift! On $[t_k, t_{k+1}]$ we get $p \circ \gamma = p \circ q_k \circ \delta_k$. Since $q_k$ is a local inverse of $p$, we get $p \circ \gamma = \delta_k$ there.
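The inductive construction can be imitated numerically for $p(x) = e^{ix}: \mathbb R \rightarrow S^1$. A minimal sketch, assuming the path is sampled finely enough that consecutive samples are less than $\pi$ apart in angle, which stands in for the subdivision into elementary neighbourhoods. At each step we use the local inverse on the current sheet, which amounts to adding the small angle between consecutive samples. `lift_path` and `twice_around` are hypothetical names.

```python
import cmath
import math
from typing import Callable


def lift_path(delta: Callable[[float], complex], y0: float, steps: int = 1000) -> list[float]:
    """Lift delta: [0, 1] -> S^1 through p(x) = e^{ix}, starting at y0 with p(y0) = delta(0).

    Assumes `steps` is large enough that consecutive samples are less than pi apart in
    angle; this plays the role of the subdivision 0 = t_0 < ... < t_n = 1."""
    gamma = [y0]
    for j in range(steps):
        a, b = delta(j / steps), delta((j + 1) / steps)
        # The local inverse on the sheet containing gamma(t_j) moves by the small
        # angle from a to b; phase() returns that angle in [-pi, pi].
        gamma.append(gamma[-1] + cmath.phase(b / a))
    return gamma


def twice_around(t: float) -> complex:
    """A loop winding twice around S^1, based at 1."""
    return cmath.exp(4j * math.pi * t)


gamma = lift_path(twice_around, y0=0.0)
print(gamma[-1] / (2 * math.pi))  # about 2.0: the lift ends at 4*pi
```

The lift of this loop ends at $4\pi$ rather than at $0$: a closed loop downstairs need not lift to a closed path upstairs, and keeping track of where it ends is exactly what monodromy does.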

7.03: Path lifting: uniqueness

If we have a space $X$ and a covering space $Y$, then for a path $\gamma$ that starts at $x$, we can find a path $\gamma'$ which starts at $y \in p^{-1}(x)$ and projects down to $\gamma$: $\gamma(t) = p(\gamma'(t))$. We want to show that this lift is unique.

Lemma

Let $p: Y \rightarrow X$ be a covering space. Let $T$ be a connected space. Let $F: T \rightarrow X$ be a continuous map (for us, $T \simeq [0, 1]$). Let $F_1, F_2: T \rightarrow Y$ be lifts of $F$ ($p \circ F_1 = F$, $p \circ F_2 = F$). We will show that $F_1 = F_2$ iff the lifts are equal at some $t \in T$.

Slogan: Lifts of paths are unique: if they agree at one point, they agree at all points!

- We just need to show that if $F_1$ and $F_2$ agree at some point of $T$, they agree everywhere. It is clear that if they agree everywhere, they must agree somewhere.

- To show this, pick the set $S$ where $F_1, F_2$ agree in $Y$: $S \equiv \{ t \in T : F_1(t) = F_2(t) \}$.

- We will show that $S$ is open and closed. Since $T$ is connected, $S$ must be either the full space or the empty set. Since $S$ is assumed to be non-empty, $S = T$ and the two functions agree everywhere.

- (Intuition: if both $S$ and $S^c$ are open, then we can build a function that colors $T = S \cup S^c$ in two colors continuously; i.e., we can partition it continuously; i.e., $T$ would be disconnected. Since $T$ is connected, we cannot allow that to happen, hence $S = \emptyset$ or $S = T$.)

- Let $t \in T$. Let $U$ be an evenly covered neighbourhood/elementary neighbourhood of $F(t)$ downstairs (in $X$). Then we have $p^{-1}(U) = \bigsqcup_\alpha V_\alpha$ such that each $p|_{V_\alpha}: V_\alpha \rightarrow U$ is a homeomorphism.

- Let $V_1, V_2$ be the sheets (among the open sets $V_\alpha$) containing $F_1(t), F_2(t)$ upstairs (mirroring $U$ containing $F(t)$ downstairs).

- Since $F_1, F_2$ are continuous, the pre-images $T_1 \equiv F_1^{-1}(V_1)$ and $T_2 \equiv F_2^{-1}(V_2)$ are open subsets of $T$ containing $t$.

- Define $T^* \equiv T_1 \cap T_2$, an open neighbourhood of $t$. If $F_1(t) \neq F_2(t)$, then $V_1 \neq V_2$ (both points lie over $F(t)$, and $p$ is injective on each sheet), so $V_1 \cap V_2 = \emptyset$ and thus $F_1 \neq F_2$ on all of $T^*$. So $T^* \subseteq S^c$, and hence $S^c$ is open.

- If $F_1(t) = F_2(t)$, then $V_1 = V_2$, and thus $F_1 = F_2$ on $T^*$ (since $p \circ F_1 = F = p \circ F_2$, and $p$ is injective on the sheet $V_1 = V_2$). So $T^* \subseteq S$, and hence $S$ is open.

- Hence we are done, as $S$ is non-empty and clopen and is thus equal to $T$. Thus, the two functions agree on all of $T$.

Homotopy lifting, Monodromy

- Given a loop $\gamma$ in $X$ based at $x$, the monodromy around $\gamma$ is a permutation $\sigma_\gamma : p^{-1}(x) \rightarrow p^{-1}(x)$, where $\sigma_{\gamma}(y) \equiv \gamma^y(1)$ and $\gamma^y$ is the unique lift of $\gamma$ starting at $y$. We have that $\sigma_{\gamma} \in Perm(p^{-1}(x))$.

- Claim: if $\gamma_1 \simeq \gamma_2$ (homotopic relative to the basepoint) then $\sigma_{\gamma_1} = \sigma_{\gamma_2}$.
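Monodromy can be computed numerically for the covering $p(w) = w^n : \mathbb C^\times \rightarrow \mathbb C^\times$ from the intuition section. A sketch, assuming loops based at $1$ (so the fiber is the set of $n$-th roots of unity) and fine sampling. The lift is built step by step with local inverses, as in the path lifting proof, and $\sigma_\gamma(y) = \gamma^y(1)$ is read off by matching the endpoint to a fiber point. The loops `circle`, `wobbly_circle`, `small_loop` and the helper names are hypothetical examples. They also illustrate, without proving, the claim above: a loop homotopic to the circle gives the same permutation.

```python
import cmath
import math
from typing import Callable

Path = Callable[[float], complex]


def lift_endpoint(delta: Path, w0: complex, n: int, steps: int = 2000) -> complex:
    """Endpoint of the lift of delta through p(w) = w^n starting at w0 (so w0^n = delta(0)).

    Assumes consecutive samples are close, so each ratio delta(t_{j+1}) / delta(t_j) is
    near 1 and its principal n-th root selects the local inverse on the current sheet."""
    w = w0
    for j in range(steps):
        ratio = delta((j + 1) / steps) / delta(j / steps)
        w *= cmath.exp(cmath.log(ratio) / n)  # principal n-th root of a number near 1
    return w


def monodromy(delta: Path, n: int) -> dict[int, int]:
    """sigma_delta on the fiber p^{-1}(1) = {e^{2 pi i k / n}}, as a permutation of indices k.
    Assumes delta is a loop in C^x based at 1."""
    fiber = [cmath.exp(2j * math.pi * k / n) for k in range(n)]
    sigma = {}
    for k, y in enumerate(fiber):
        endpoint = lift_endpoint(delta, y, n)
        # The lift ends on the fiber again; find which fiber point it is.
        sigma[k] = min(range(n), key=lambda m: abs(fiber[m] - endpoint))
    return sigma


def circle(t: float) -> complex:
    """Go once around the origin, based at 1."""
    return cmath.exp(2j * math.pi * t)


def wobbly_circle(t: float) -> complex:
    """A loop homotopic to `circle` in C^x, relative to the basepoint 1."""
    return (1 + 0.5 * math.sin(2 * math.pi * t)) * circle(t)


def small_loop(t: float) -> complex:
    """A loop based at 1 that does not wind around the origin."""
    return 1.5 - 0.5 * circle(t)


print(monodromy(circle, 2))         # {0: 1, 1: 0}: the two square roots get swapped
print(monodromy(wobbly_circle, 2))  # {0: 1, 1: 0}: same permutation as the circle
print(monodromy(small_loop, 2))     # {0: 0, 1: 1}: trivial monodromy
print(monodromy(circle, 3))         # {0: 1, 1: 2, 2: 0}: a 3-cycle
```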