bottom-up-attention.pytorch

This repository contains a PyTorch reimplementation of the bottom-up-attention project based on Caffe.

We use Detectron2 as the backend to provide complete functionality, including training, testing, and feature extraction. Furthermore, we migrate the pre-trained Caffe-based model from the original repository; the migrated model extracts the same visual features as the original model (with deviation < 0.01).
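To make the "deviation < 0.01" claim concrete, here is a minimal sketch of how such a check could be done. It assumes that "deviation" means the largest element-wise absolute difference between two feature matrices; the README does not define the metric, and the function name and toy values below are illustrative only.

```python
def max_abs_deviation(feats_a, feats_b):
    """Largest element-wise absolute difference between two feature matrices.

    Each matrix is a list of rows, one row per image region.
    """
    if len(feats_a) != len(feats_b):
        raise ValueError("different number of regions")
    worst = 0.0
    for row_a, row_b in zip(feats_a, feats_b):
        if len(row_a) != len(row_b):
            raise ValueError("different feature dimensions")
        for a, b in zip(row_a, row_b):
            worst = max(worst, abs(a - b))
    return worst


# Toy stand-ins for features from the Caffe model and the converted model
# (made-up numbers, not real model outputs).
caffe_feats = [[0.120, 1.530, 0.000], [2.400, 0.007, 0.910]]
torch_feats = [[0.123, 1.528, 0.000], [2.396, 0.010, 0.912]]

deviation = max_abs_deviation(caffe_feats, torch_feats)
print("max deviation: {:.4f}".format(deviation),
      "OK" if deviation < 0.01 else "MISMATCH")
```

In practice the same comparison would be run on features extracted from the same image by both models.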

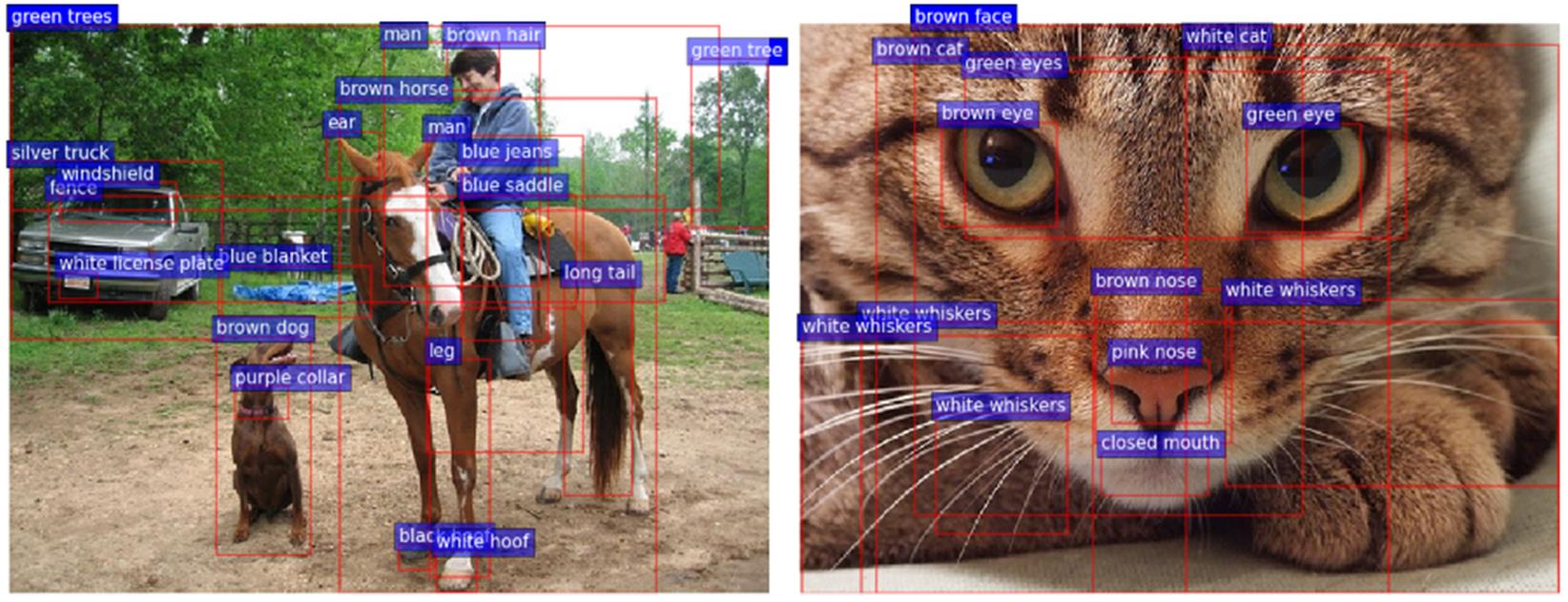

Some example object and attribute predictions for salient image regions are illustrated below. The script to obtain the following visualizations can be found here

Prerequisites

Requirements

- Python >= 3.6

- PyTorch >= 1.4

- CUDA >= 9.2 and cuDNN

- Apex

- Detectron2

- Ray

- OpenCV

- Pycocotools

Note that most of the requirements above are needed for Detectron2.
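A quick way to check the version requirements before installing is sketched below. The helper names are hypothetical and the version parsing is deliberately simple (it ignores local suffixes such as `+cu92`); it is a convenience sketch, not part of this repository.

```python
import re
import sys


def version_tuple(version):
    """Turn '1.5.0+cu92' into (1, 5, 0); stop at the first non-numeric part."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """True if the installed version is at least the minimum version."""
    return version_tuple(installed) >= version_tuple(minimum)


python_version = "{}.{}".format(*sys.version_info[:2])
print("Python", python_version,
      "ok" if meets_minimum(python_version, "3.6") else "too old")

try:
    import torch  # only checked if PyTorch is already installed
    print("PyTorch", torch.__version__,
          "ok" if meets_minimum(torch.__version__, "1.4") else "too old")
except ImportError:
    print("PyTorch is not installed yet")
```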

Installation

1. Clone the project, including the required version (v0.2.1) of Detectron2:

       # clone the repository including Detectron2 (@be792b9)
       $ git clone --recursive https://github.com/MILVLG/bottom-up-attention.pytorch

2. Install Detectron2:

       $ cd detectron2
       $ pip install -e .
       $ cd ..

   We recommend using Detectron2 v0.2.1 (@be792b9) as the backend for this project, which has been cloned in step 1. We believe newer Detectron2 versions are also compatible with this project unless their interfaces have changed (we have tested v0.3 with PyTorch 1.5).

3. Compile the remaining tools using the following script:

       # install apex
       $ git clone https://github.com/NVIDIA/apex.git
       $ cd apex
       $ python setup.py install
       $ cd ..
       # install the remaining modules
       $ python setup.py build develop
       $ pip install ray

Setup

If you want to train or test the model, you need to download the images and annotation files of the Visual Genome (VG) dataset. If you only need to extract visual features using the pre-trained model, you can skip this part.

The original VG images (part1 and part2) are to be downloaded and unzipped to the datasets folder.

The annotation files generated by the original repository need to be converted to the COCO data format required by Detectron2. The preprocessed annotation files can be downloaded here and unzipped to the datasets folder.
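For readers unfamiliar with the COCO data format, the sketch below shows the general shape of such a conversion. The input record layout (`image_id`, `file_name`, `objects`, corner-style `box`) is a hypothetical stand-in, not the format used by the original repository, and the preprocessed files provided here may contain additional fields.

```python
def to_coco(records, class_names):
    """Convert simple per-image records into a COCO-style dictionary.

    `records` uses a hypothetical layout with [x1, y1, x2, y2] boxes;
    COCO stores boxes as [x, y, width, height].
    """
    category_ids = {name: i + 1 for i, name in enumerate(class_names)}
    coco = {
        "images": [],
        "annotations": [],
        "categories": [{"id": cid, "name": name}
                       for name, cid in category_ids.items()],
    }
    next_ann_id = 1
    for rec in records:
        coco["images"].append({
            "id": rec["image_id"],
            "file_name": rec["file_name"],
            "width": rec["width"],
            "height": rec["height"],
        })
        for obj in rec["objects"]:
            x1, y1, x2, y2 = obj["box"]
            width, height = x2 - x1, y2 - y1
            coco["annotations"].append({
                "id": next_ann_id,
                "image_id": rec["image_id"],
                "category_id": category_ids[obj["name"]],
                "bbox": [x1, y1, width, height],
                "area": width * height,
                "iscrowd": 0,
            })
            next_ann_id += 1
    return coco


example = to_coco(
    [{"image_id": 2, "file_name": "VG_100K/2.jpg", "width": 800, "height": 600,
      "objects": [{"name": "dog", "box": [10, 20, 110, 220]}]}],
    class_names=["cat", "dog"],
)
print(example["annotations"][0]["bbox"])
```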

Finally, the datasets folder will have the following structure:

    |-- datasets
       |-- vg
       |  |-- images
       |  |  |-- VG_100K
       |  |  |  |-- 2.jpg
       |  |  |  |-- ...
       |  |  |-- VG_100K_2
       |  |  |  |-- 1.jpg
       |  |  |  |-- ...
       |  |-- annotations
       |  |  |-- train.json
       |  |  |-- val.json
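The layout above can be verified before training with a few lines of Python. This is a convenience sketch, not part of the repository; it only checks that the folders and annotation files named in the tree exist under a given datasets root.

```python
from pathlib import Path

# Entries taken from the directory tree above, relative to the datasets folder.
EXPECTED = [
    "vg/images/VG_100K",
    "vg/images/VG_100K_2",
    "vg/annotations/train.json",
    "vg/annotations/val.json",
]


def missing_entries(datasets_root):
    """Return the expected paths that do not exist under `datasets_root`."""
    root = Path(datasets_root)
    return [rel for rel in EXPECTED if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_entries("datasets")
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("Dataset layout looks complete.")
```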

Training

The following script will train a bottom-up-attention model on the train split of VG. We are still working on this part to reproduce the same results as the Caffe version.

    $ python3 train_net.py --mode detectron2 \
             --config-file configs/bua-caffe/train-bua-caffe-r101.yaml \
             --resume

- mode = {'caffe', 'detectron2'} refers to the mode used. Training is supported only in the detectron2 mode, since we think it is unnecessary to train a new model in the caffe mode.

- config-file refers to the file holding all the configurations of the model.

- resume is a flag to set if you want to resume training from a specific checkpoint.

Testing

Given the trained model, the following script will test the performance on the val split of VG:

    $ python3 train_net.py --mode caffe \
             --config-file configs/bua-caffe/test-bua-caffe-r101.yaml \
             --eval-only

- mode = {'caffe', 'detectron2'} refers to the mode used. For the model converted from Caffe, use the caffe mode. For other models trained with Detectron2, use the detectron2 mode.

- config-file refers to the file holding all the configurations of the model, including the path of the model weights.

- eval-only is a flag that declares the testing phase.

Feature Extraction

With highly optimized multi-process parallelism, the following script extracts the bottom-up-attention visual features quickly (about 7 imgs/s on a workstation with 4 Titan-V GPUs and 32 CPU cores).

We also provide a faster version of the feature extraction script, which runs at about 16 imgs/s on a workstation with 4 Titan-V GPUs and 32 CPU cores. Its drawback is that it can leak memory when the computing capabilities of the GPUs and CPUs are mismatched (more details and some matched examples can be found here).
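As a rough illustration of what these rates mean in practice, the sketch below estimates the wall-clock time for a full pass over Visual Genome. The image count of 108,077 is an assumption about the dataset size, and real throughput depends on the hardware.

```python
# Assumption: approximate number of images in Visual Genome.
VG_IMAGE_COUNT = 108077


def hours_needed(num_images, images_per_second):
    """Estimated extraction time in hours at a constant throughput."""
    return num_images / images_per_second / 3600.0


# Rates quoted above for a workstation with 4 Titan-V GPUs and 32 CPU cores.
for script, rate in [("extract_features.py", 7.0),
                     ("extract_features_faster.py", 16.0)]:
    print("{}: about {:.1f} h".format(script, hours_needed(VG_IMAGE_COUNT, rate)))
```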

To use this faster version, replace 'extract_features.py' with 'extract_features_faster.py' in the following script. MAKE SURE YOU HAVE ENOUGH CPU CORES.

    $ python3 extract_features.py --mode caffe \
             --num-cpus 32 --gpus '0,1,2,3' \
             --extract-mode roi_feats \
             --min-max-boxes '10,100' \
             --config-file configs/bua-caffe/extract-bua-caffe-r101.yaml \
             --image-dir <image_dir> --bbox-dir <out_dir> --out-dir <out_dir>

- mode = {'caffe', 'detectron2'} refers to the mode used. For the model converted from Caffe, use the caffe mode. For other models trained with Detectron2, use the detectron2 mode. 'caffe' is the default value.

- num-cpus refers to the number of CPU cores used to accelerate the CPU computation. 0 means using all available CPUs, and 1 is the default value.

- gpus refers to the ids of the GPUs to use. '0' is the default value.

- config-file refers to the file holding all the configurations of the model, including the path of the model weights.

- extract-mode refers to the mode for feature extraction, one of {'roi_feats', 'bboxes', 'bbox_feats'}.

- min-max-boxes refers to the minimum and maximum number of features (boxes) to be extracted.

- image-dir refers to the input image directory.

- bbox-dir refers to the directory of pre-extracted bboxes. Only used if extract-mode is set to 'bbox_feats'.

- out-dir refers to the output feature directory.
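The options above can be summarized as an `argparse` sketch. This is an illustration of the documented flags and defaults, not the parser used by `extract_features.py`; which flags are required, and the rule that `bbox_feats` needs a bbox directory, are assumptions made for the example.

```python
import argparse


def parse_min_max(text):
    """Parse a 'min,max' string such as '10,100' into a pair of ints."""
    low, high = (int(part) for part in text.split(","))
    if not 0 < low <= high:
        raise argparse.ArgumentTypeError("expected 0 < min <= max")
    return low, high


def build_parser():
    parser = argparse.ArgumentParser(description="Sketch of the documented flags")
    parser.add_argument("--mode", choices=["caffe", "detectron2"], default="caffe")
    parser.add_argument("--num-cpus", type=int, default=1)  # 0 = all CPUs
    parser.add_argument("--gpus", default="0")              # e.g. '0,1,2,3'
    parser.add_argument("--config-file")
    parser.add_argument("--extract-mode", required=True,    # assumption: required
                        choices=["roi_feats", "bboxes", "bbox_feats"])
    parser.add_argument("--min-max-boxes", type=parse_min_max, default=None)
    parser.add_argument("--image-dir", required=True)       # assumption: required
    parser.add_argument("--bbox-dir")
    parser.add_argument("--out-dir", required=True)         # assumption: required
    return parser


def needs_bbox_dir(args):
    """bbox-dir is only used when extract-mode is 'bbox_feats'."""
    return args.extract_mode == "bbox_feats" and args.bbox_dir is None


args = build_parser().parse_args([
    "--gpus", "0,1,2,3", "--extract-mode", "roi_feats",
    "--min-max-boxes", "10,100",
    "--image-dir", "images", "--out-dir", "features",
])
gpu_ids = [int(g) for g in args.gpus.split(",")]
print(args.mode, args.num_cpus, gpu_ids, args.min_max_boxes)
```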

Using the same pre-trained model, we provide an alternative two-stage strategy for extracting visual features, which results in (slightly) more accurate bboxes and visual features:

    # extract bboxes only:
    $ python3 extract_features.py --mode caffe \
             --num-cpus 32 --gpus '0,1,2,3' \
             --extract-mode bboxes \
             --config-file configs/bua-caffe/extract-bua-caffe-r101.yaml \
             --image-dir <image_dir> --out-dir <out_dir> --resume

    # extract visual features with the pre-extracted bboxes:
    $ python3 extract_features.py --mode caffe \
             --num-cpus 32 --gpus '0,1,2,3' \
             --extract-mode bbox_feats \
             --config-file configs/bua-caffe/extract-bua-caffe-r101.yaml \
             --image-dir <image_dir> --bbox-dir <bbox_dir> --out-dir <out_dir> --resume
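If you want to drive both stages from Python, the commands can be assembled as argument lists. The sketch below only uses the flags documented above (with `--gpus` as named in the option list); the helper name and directory names are hypothetical, and it assumes that the output directory of the first stage is passed as the bbox directory of the second stage.

```python
import shlex

# Flags shared by both stages, copied from the commands above.
COMMON = [
    "python3", "extract_features.py", "--mode", "caffe",
    "--num-cpus", "32", "--gpus", "0,1,2,3",
    "--config-file", "configs/bua-caffe/extract-bua-caffe-r101.yaml",
]


def two_stage_commands(image_dir, bbox_dir, feat_dir):
    """Build the bbox-only command and the bbox-to-feature command."""
    stage1 = COMMON + [
        "--extract-mode", "bboxes",
        "--image-dir", image_dir, "--out-dir", bbox_dir, "--resume",
    ]
    stage2 = COMMON + [
        "--extract-mode", "bbox_feats",
        "--image-dir", image_dir, "--bbox-dir", bbox_dir,
        "--out-dir", feat_dir, "--resume",
    ]
    return stage1, stage2


for command in two_stage_commands("images", "bboxes", "features"):
    # Print a copy-pasteable command line; to execute it instead, pass the
    # list to subprocess.run(command, check=True).
    print(" ".join(shlex.quote(part) for part in command))
```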

Pre-trained models

We provide the following pre-trained models, including the models converted from the original Caffe repo (the standard dynamic 10-100 model and the alternative fix36 model). The evaluation metrics are exactly the same as those in the original Caffe project.

| Model | Mode | Backbone | Objects mAP@0.5 | Objects weighted mAP@0.5 | Download |
|---|---|---|---|---|---|
| Faster R-CNN-k36 | Caffe | ResNet-101 | 9.3% | 14.0% | model |
| Faster R-CNN-k10-100 | Caffe | ResNet-101 | 10.2% | 15.1% | model |
| Faster R-CNN | Caffe | ResNet-152 | 11.1% | 15.7% | model |
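The difference between the dynamic 10-100 setting and the fixed 36 setting can be illustrated with a small box-selection sketch. It assumes that boxes are kept when their confidence passes a threshold and that the count is then clamped to the min-max range; the threshold value of 0.2 and the function name are assumptions for illustration, not values taken from this repository.

```python
def select_boxes(scores, min_boxes, max_boxes, conf_thresh=0.2):
    """Return indices of the boxes to keep, highest score first.

    Boxes scoring at least `conf_thresh` are kept, then the count is
    clamped to [min_boxes, max_boxes] by ranking on score.
    """
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    kept = [i for i in ranked if scores[i] >= conf_thresh]
    if len(kept) < min_boxes:
        kept = ranked[:min_boxes]   # too few confident boxes: top up by rank
    elif len(kept) > max_boxes:
        kept = ranked[:max_boxes]   # too many: keep only the best ones
    return kept


scores = [0.9, 0.1, 0.5, 0.3, 0.05]
print(select_boxes(scores, min_boxes=1, max_boxes=2))  # dynamic range
print(select_boxes(scores, min_boxes=3, max_boxes=3))  # fixed count
```

With equal minimum and maximum (as in a fixed 36 setting), every image yields the same number of boxes, which gives feature tensors of a uniform shape.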

License

This project is released under the Apache 2.0 license.

Contact

This repo is currently maintained by Zhou Yu (@yuzcccc), Tongan Luo (@Zoroaster97), and Jing Li (@J1mL3e_).

Citation

If this repository is helpful for your research or you want to refer to the provided pre-trained models, please cite the work using the following BibTeX entry:

    @misc{yu2020buapt,
      author = {Yu, Zhou and Li, Jing and Luo, Tongan and Yu, Jun},
      title = {A PyTorch Implementation of Bottom-Up-Attention},
      howpublished = {\url{https://github.com/MILVLG/bottom-up-attention.pytorch}},
      year = {2020}
    }