sjchoi86 / Choicenet

License: MIT

Implementation of ChoiceNet

Stars: ✭ 125

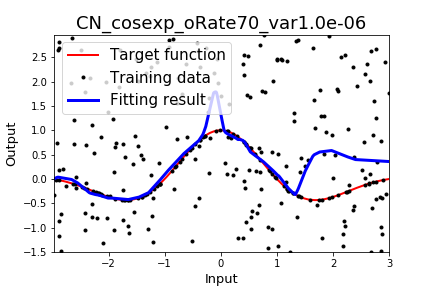

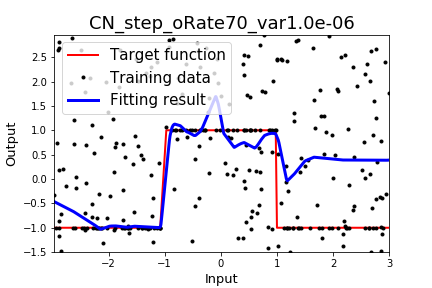

ChoiceNet

TensorFlow Implementation of ChoiceNet on regression tasks.

Summarized result:

Paper: arxiv

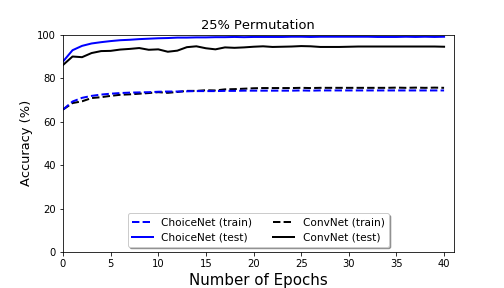

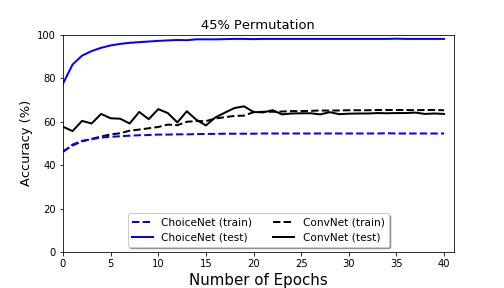

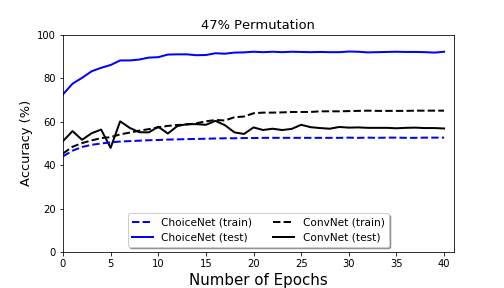

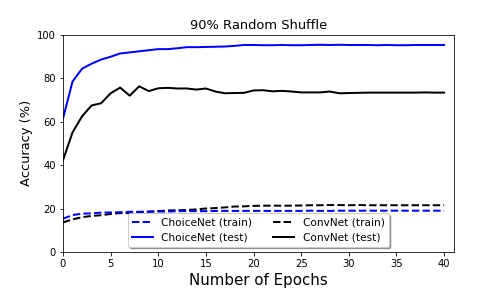

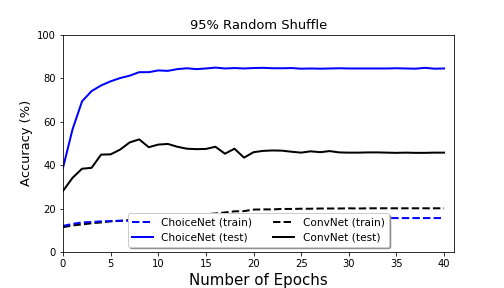

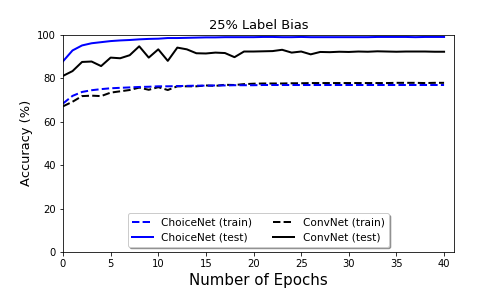

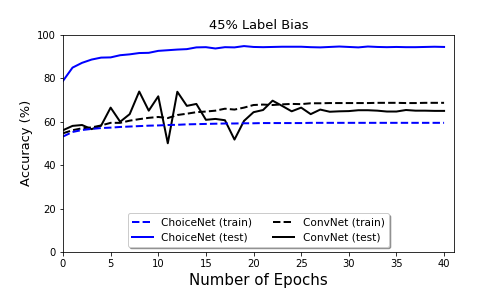

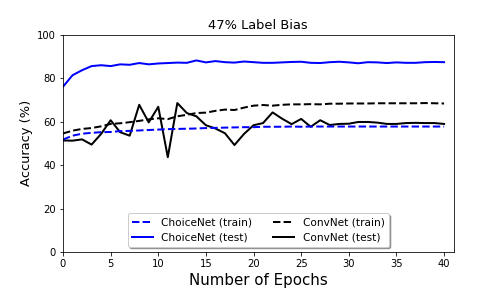

Classification (MNIST) Result

| name | Result |
|---|---|
| Outlier Rate: 25.0% |  |
| Outlier Rate: 45.0% |  |
| Outlier Rate: 47.5% |  |

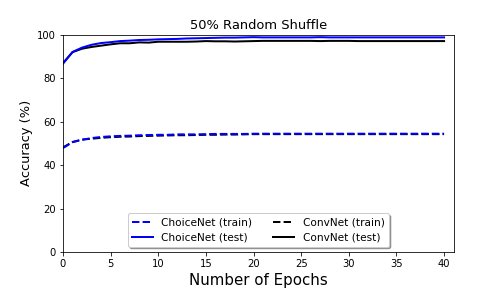

| name | Result |
|---|---|
| Outlier Rate: 50.0% |  |
| Outlier Rate: 90.0% |  |
| Outlier Rate: 95.0% |  |

| name | Result |
|---|---|
| Outlier Rate: 25.0% |  |
| Outlier Rate: 45.0% |  |
| Outlier Rate: 47.5% |  |

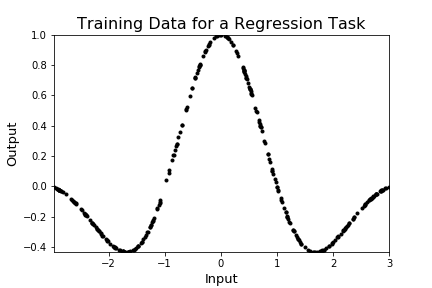

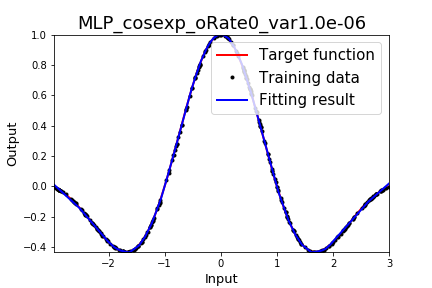

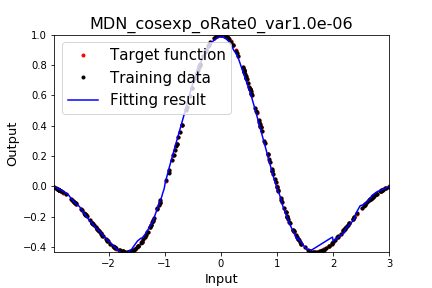

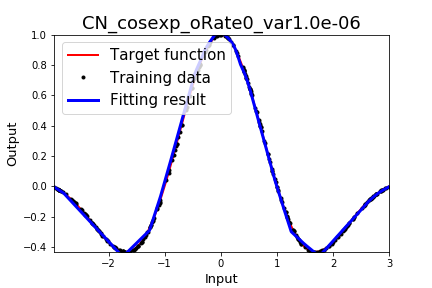

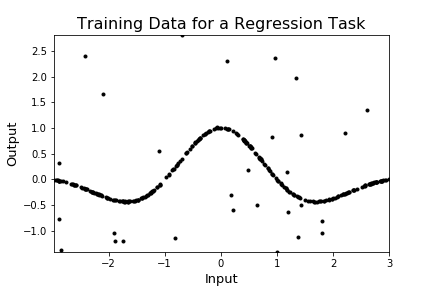

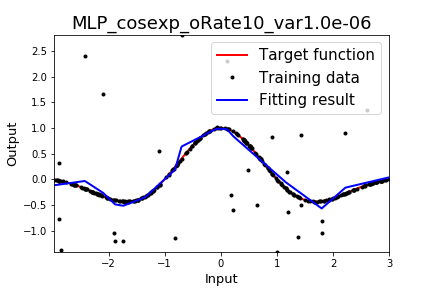

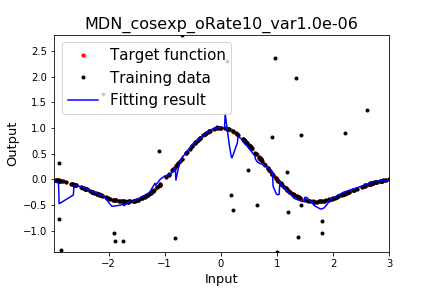

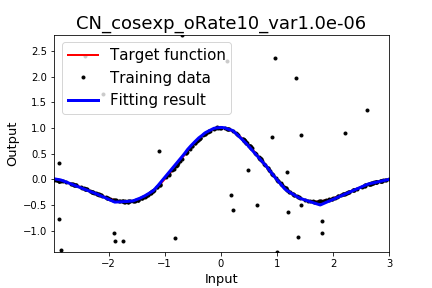

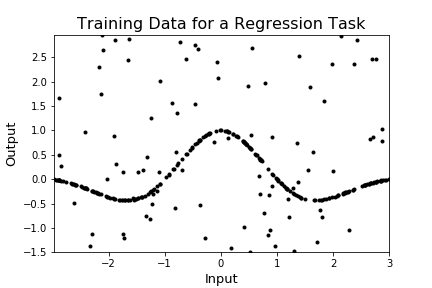

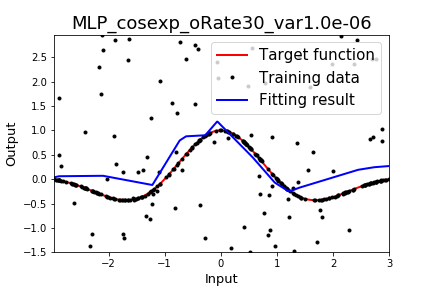

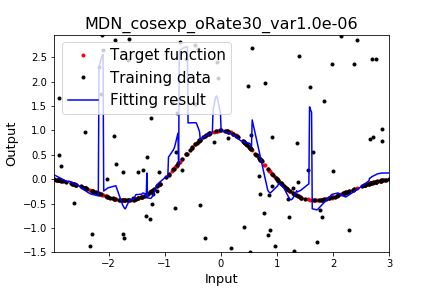

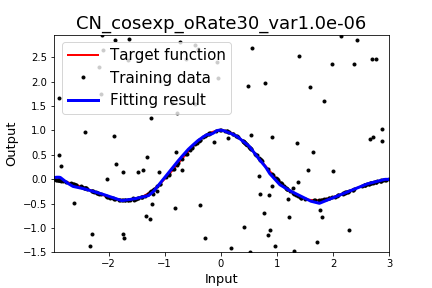

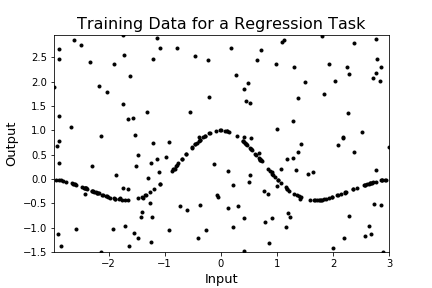

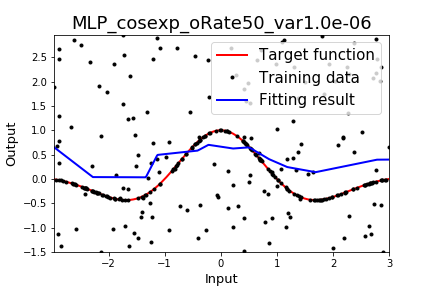

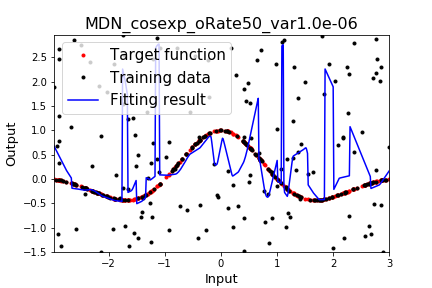

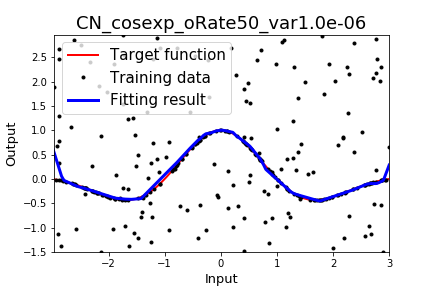

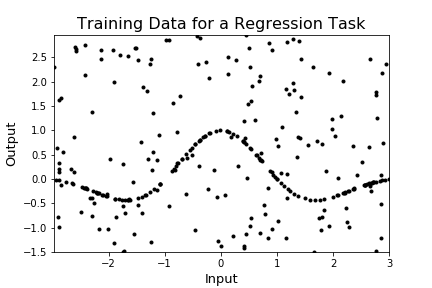

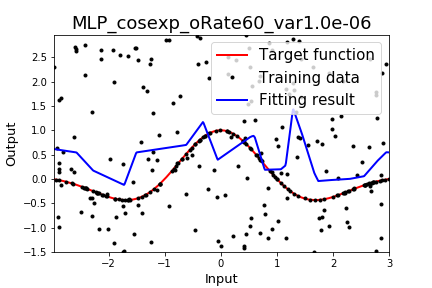

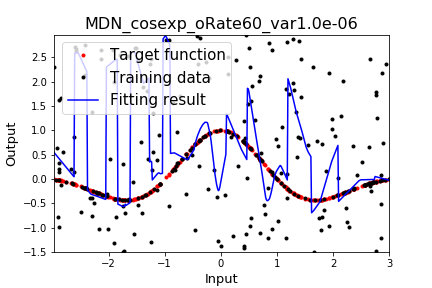

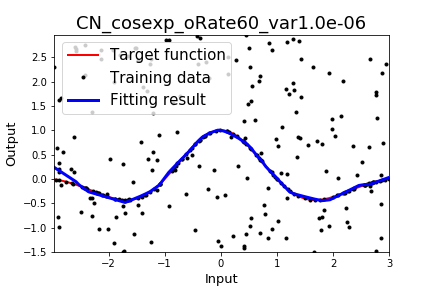

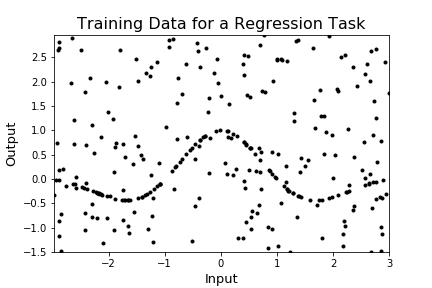

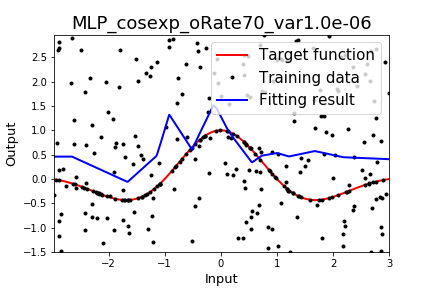

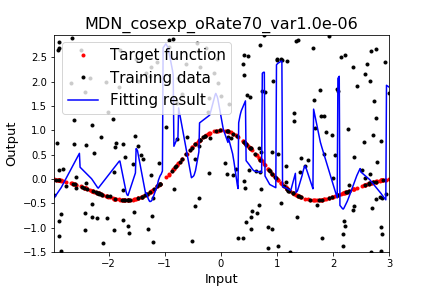

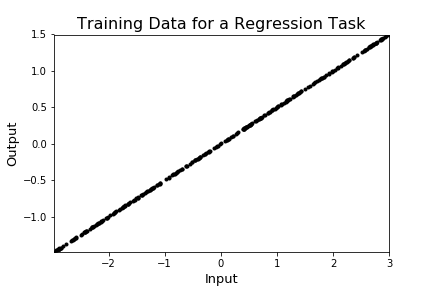

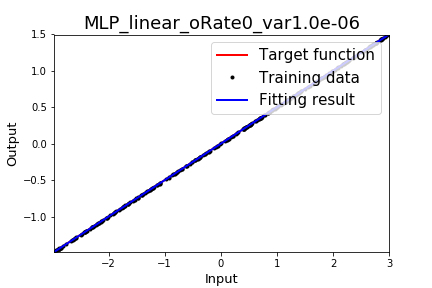

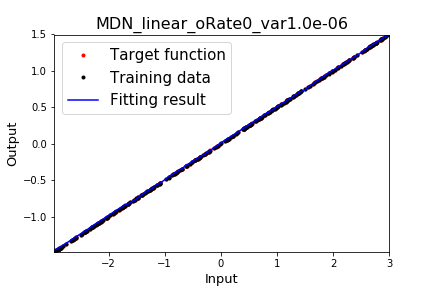

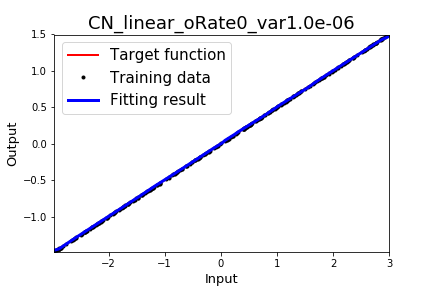

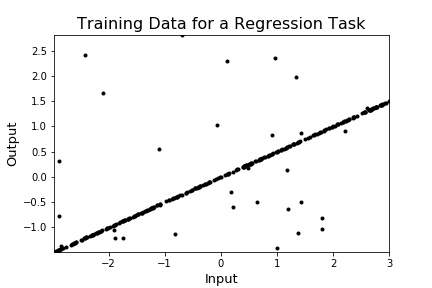

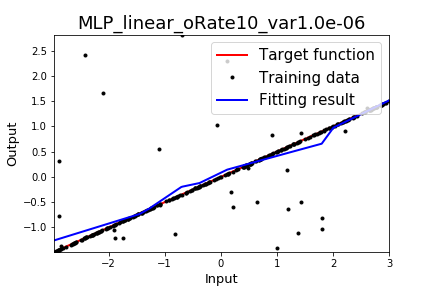

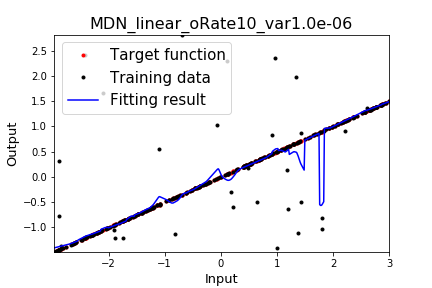

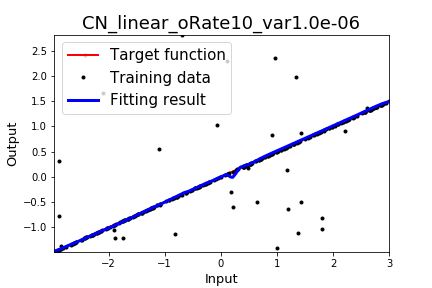

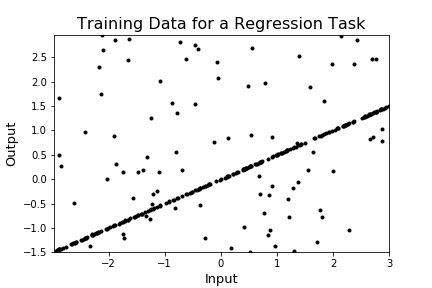

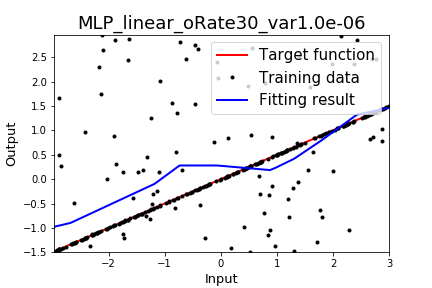

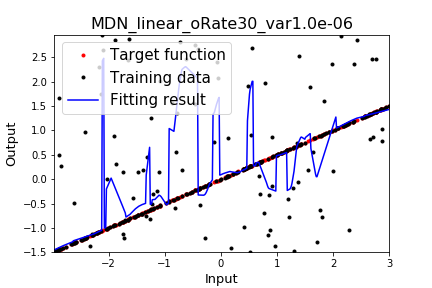

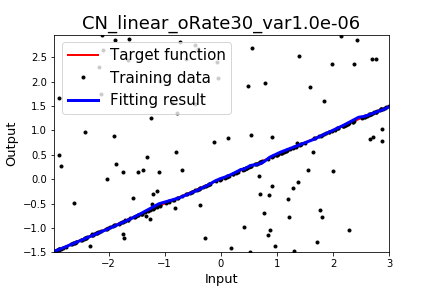

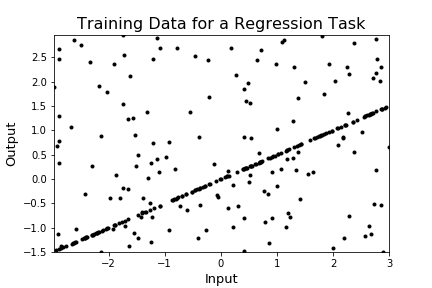

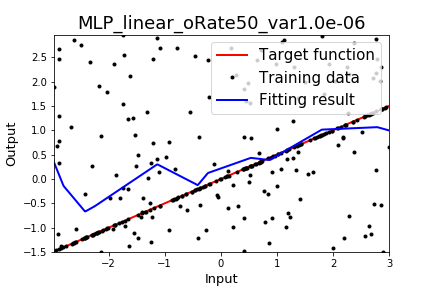

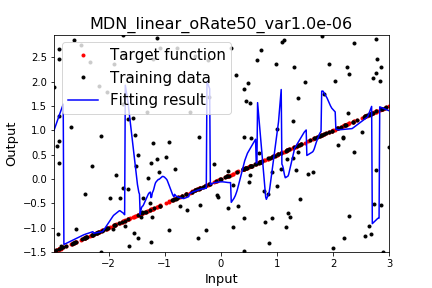

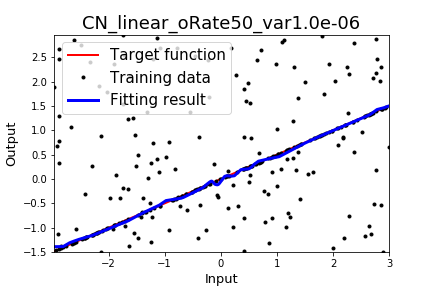

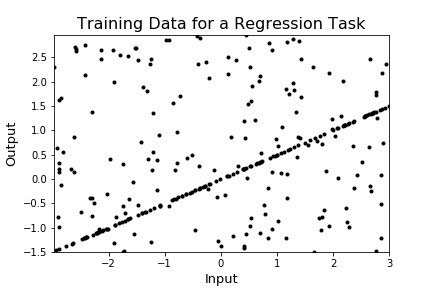

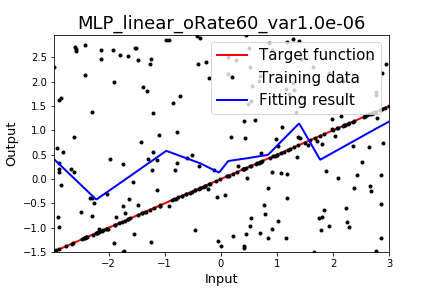

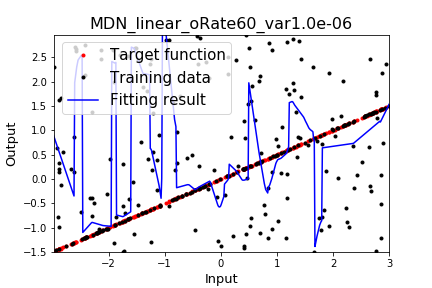

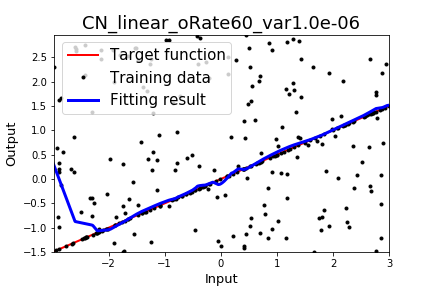

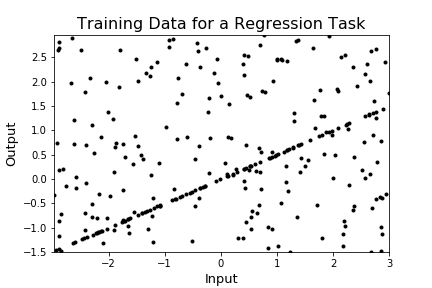

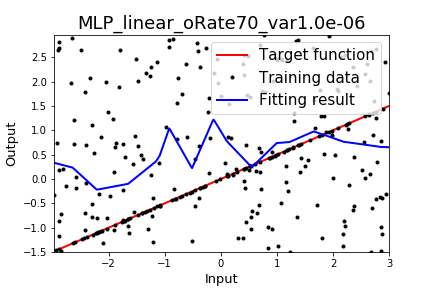

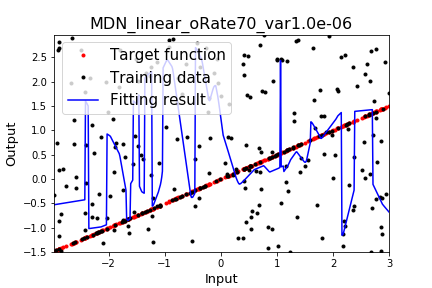

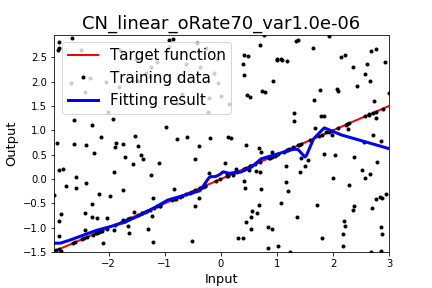

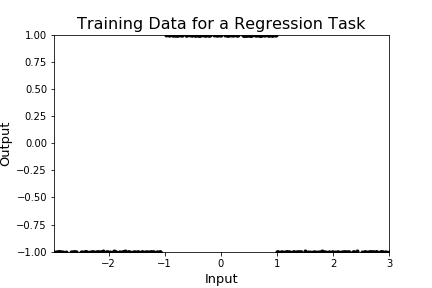

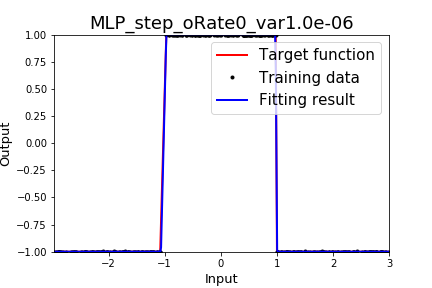

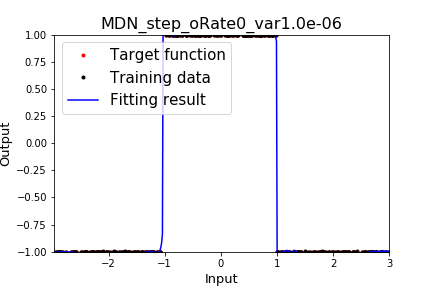

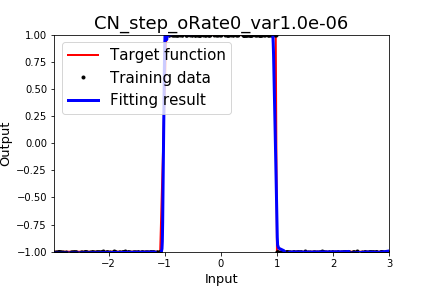

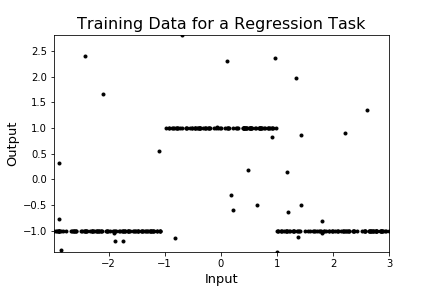

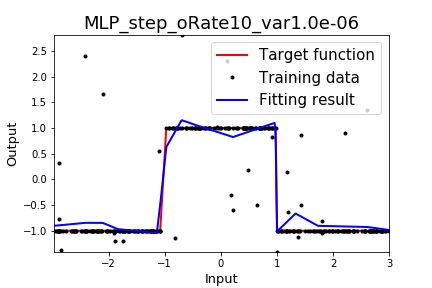

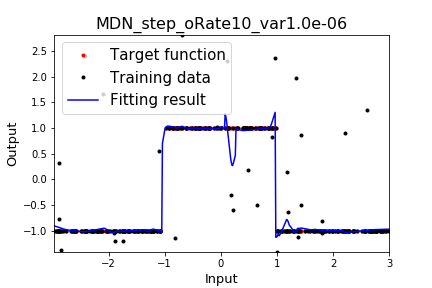

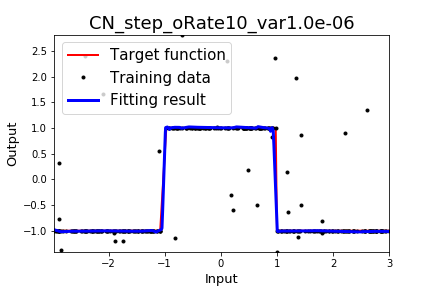

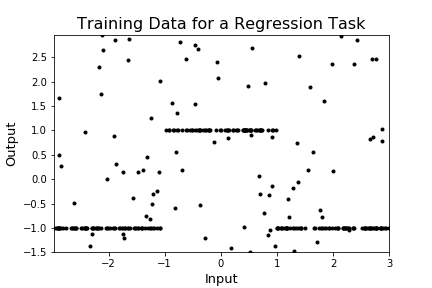

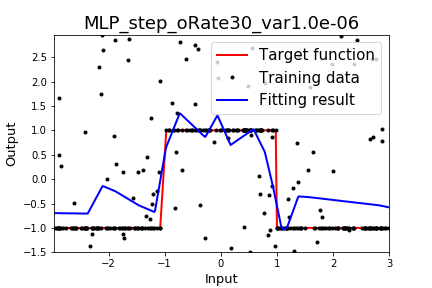

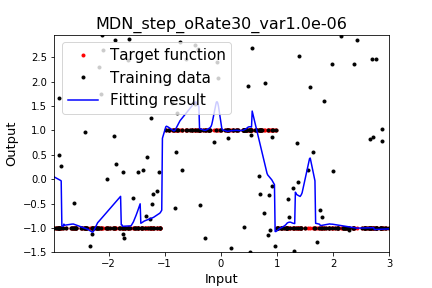

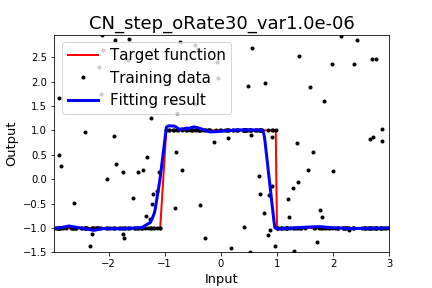

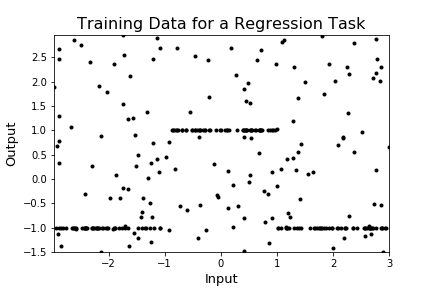

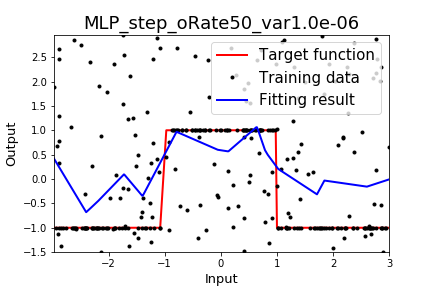

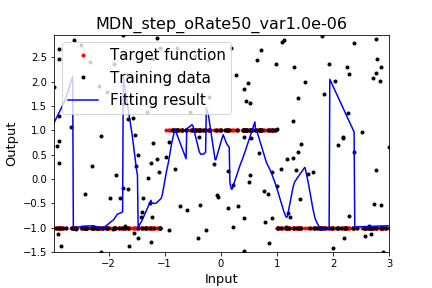

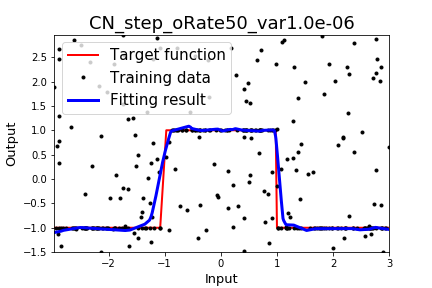

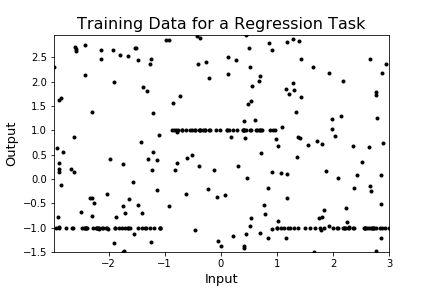

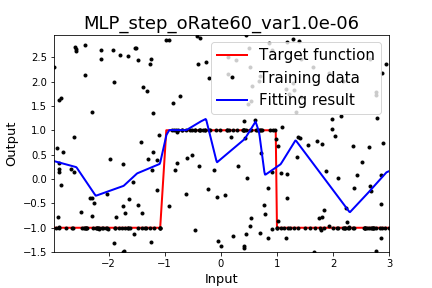

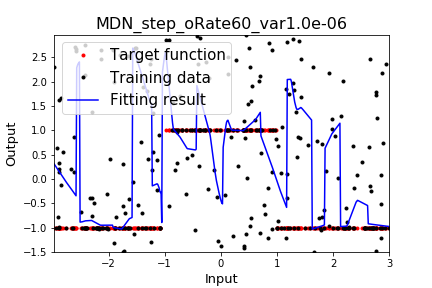

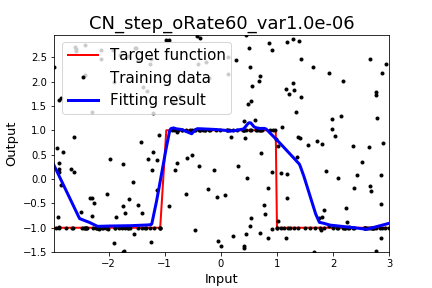

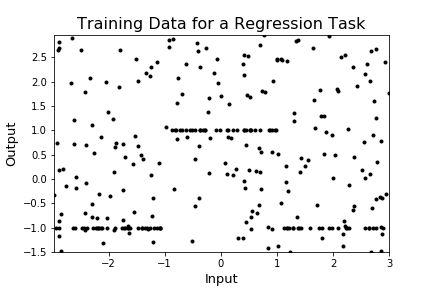

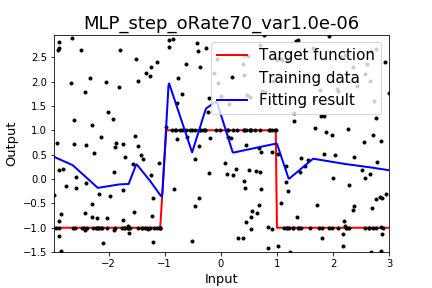

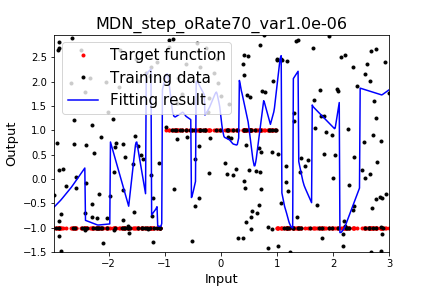

Regression Result

| name | Training Data | Multi-Layer Perceptron | Mixture Density Network | ChoiceNet |
|---|---|---|---|---|
| oRate: 0.0% |  |  |  |  |
| oRate: 10.0% |  |  |  |  |
| oRate: 30.0% |  |  |  |  |
| oRate: 50.0% |  |  |  |  |
| oRate: 60.0% |  |  |  |  |
| oRate: 70.0% |  |  |  |  |

| name | Training Data | Multi-Layer Perceptron | Mixture Density Network | ChoiceNet |
|---|---|---|---|---|
| oRate: 0.0% |  |  |  |  |
| oRate: 10.0% |  |  |  |  |
| oRate: 30.0% |  |  |  |  |
| oRate: 50.0% |  |  |  |  |
| oRate: 60.0% |  |  |  |  |
| oRate: 70.0% |  |  |  |  |

| name | Training Data | Multi-Layer Perceptron | Mixture Density Network | ChoiceNet |
|---|---|---|---|---|
| oRate: 0.0% |  |  |  |  |
| oRate: 10.0% |  |  |  |  |
| oRate: 30.0% |  |  |  |  |
| oRate: 50.0% |  |  |  |  |
| oRate: 60.0% |  |  |  |  |
| oRate: 70.0% |  |  |  |  |
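The regression tables are indexed by `oRate`, which reads as the fraction of training targets that are outliers. The README does not say how the outliers are generated, so the following is only a minimal sketch under stated assumptions: the reference function (`math.cos`), the input range, the uniform noise used for corrupted targets, and the helper name `make_noisy_regression_data` are all hypothetical and are not taken from the repository.

```python
import math
import random


def make_noisy_regression_data(n, o_rate, seed=0):
    """Return (xs, ys, is_outlier) for a 1-D toy regression problem.

    Hypothetical helper for illustration; not part of the ChoiceNet code.
    """
    rng = random.Random(seed)
    xs = [rng.uniform(-3.0, 3.0) for _ in range(n)]
    # Clean targets come from an arbitrary reference function (assumption).
    ys = [math.cos(x) for x in xs]
    # Corrupt exactly round(n * o_rate) samples, chosen without replacement.
    n_out = int(round(n * o_rate))
    outlier_idx = set(rng.sample(range(n), n_out))
    for i in outlier_idx:
        # Replace the target with a value that does not depend on x.
        ys[i] = rng.uniform(-2.0, 2.0)
    is_outlier = [i in outlier_idx for i in range(n)]
    return xs, ys, is_outlier


xs, ys, is_outlier = make_noisy_regression_data(n=1000, o_rate=0.3)
print("outliers:", sum(is_outlier), "of", len(xs))
```

Keeping the `is_outlier` mask alongside the data makes it possible to evaluate a fitted model on the clean samples only, which is one way to compare methods across outlier rates.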

HowTo?

- Run code/main_reg_run.ipynb.

- Adjust the following settings to match your working environment:

nWorker = 16

maxGPU = 8

- (I was using 16 CPUs / 8 Tesla P40 GPUs / 96 GB RAM.)
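One possible way to pick values for `nWorker` and `maxGPU` on a different machine is sketched below. It assumes that `nWorker` should not exceed the number of CPU cores and that `maxGPU` should not exceed the number of GPUs listed in `CUDA_VISIBLE_DEVICES`; how the notebook actually uses the two settings is not described in the README. The helper `suggest_settings` and its cap parameters are hypothetical.

```python
import os


def suggest_settings(cpu_count=None, visible_devices=None,
                     worker_cap=16, gpu_cap=8):
    """Suggest (nWorker, maxGPU) values for the current machine.

    Hypothetical helper for illustration; not part of the ChoiceNet code.
    """
    # Fall back to what the OS reports when no CPU count is given.
    if cpu_count is None:
        cpu_count = os.cpu_count() or 1
    # Read CUDA_VISIBLE_DEVICES when no device string is given.
    if visible_devices is None:
        visible_devices = os.environ.get("CUDA_VISIBLE_DEVICES", "")
    # Count the comma-separated device IDs, ignoring empty entries.
    gpu_ids = [d for d in visible_devices.split(",") if d.strip()]
    n_worker = max(1, min(worker_cap, cpu_count))
    max_gpu = min(gpu_cap, len(gpu_ids))
    return n_worker, max_gpu


nWorker, maxGPU = suggest_settings()
print("nWorker =", nWorker)
print("maxGPU =", maxGPU)
```

If `CUDA_VISIBLE_DEVICES` is unset, this sketch reports zero GPUs even when GPUs are present, so either set the variable or pass the device string explicitly, for example `suggest_settings(visible_devices="0,1")`.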

Requirements

- Python3

- TensorFlow >= 1.4
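The requirements above can be checked before opening the notebook. The sketch below is a minimal version check under stated assumptions: the helper `meets_minimum` is hypothetical, and the TensorFlow lines are left commented out so the snippet runs even where TensorFlow is not installed. The README does not say whether TensorFlow 2.x is supported, so passing this check does not guarantee that the notebook runs.

```python
import sys


def meets_minimum(version, minimum=(1, 4)):
    """Return True if a version string such as '1.12.0' is at least `minimum`."""
    parts = []
    for piece in version.split(".")[:len(minimum)]:
        # Keep only the leading digits so suffixes like '0-rc1' still parse.
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    # Pad short versions such as '2' with zeros before comparing.
    while len(parts) < len(minimum):
        parts.append(0)
    return tuple(parts) >= tuple(minimum)


print("Python 3:", sys.version_info[0] >= 3)
# With TensorFlow installed, the same check applies to its version string:
# import tensorflow as tf
# print("TensorFlow >= 1.4:", meets_minimum(tf.__version__))
```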

Contact

This work was done at Kakao Brain.
