ksanjeevan / Crnn Audio Classification

Licence: MIT

UrbanSound classification using Convolutional Recurrent Networks in PyTorch

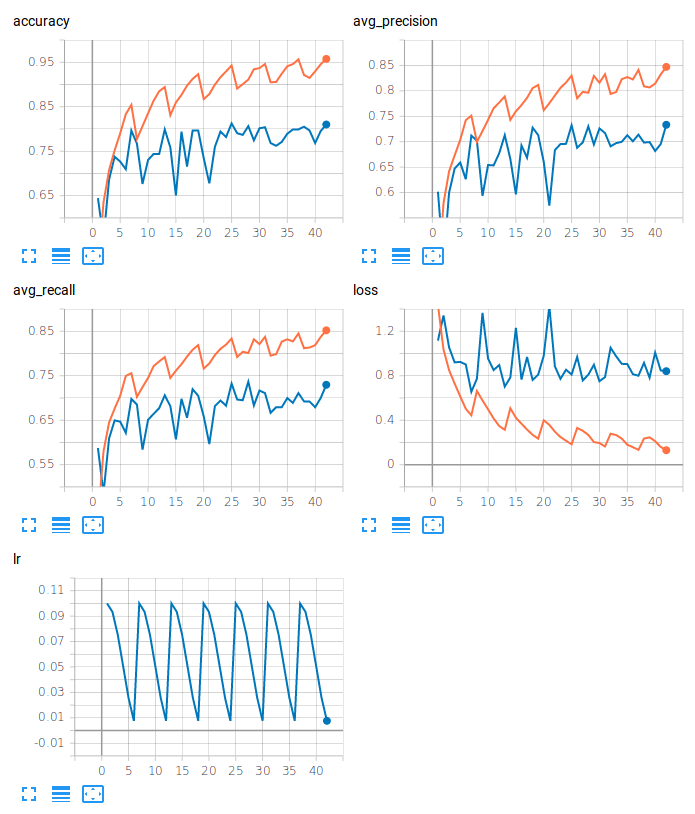

PyTorch Audio Classification: Urban Sounds

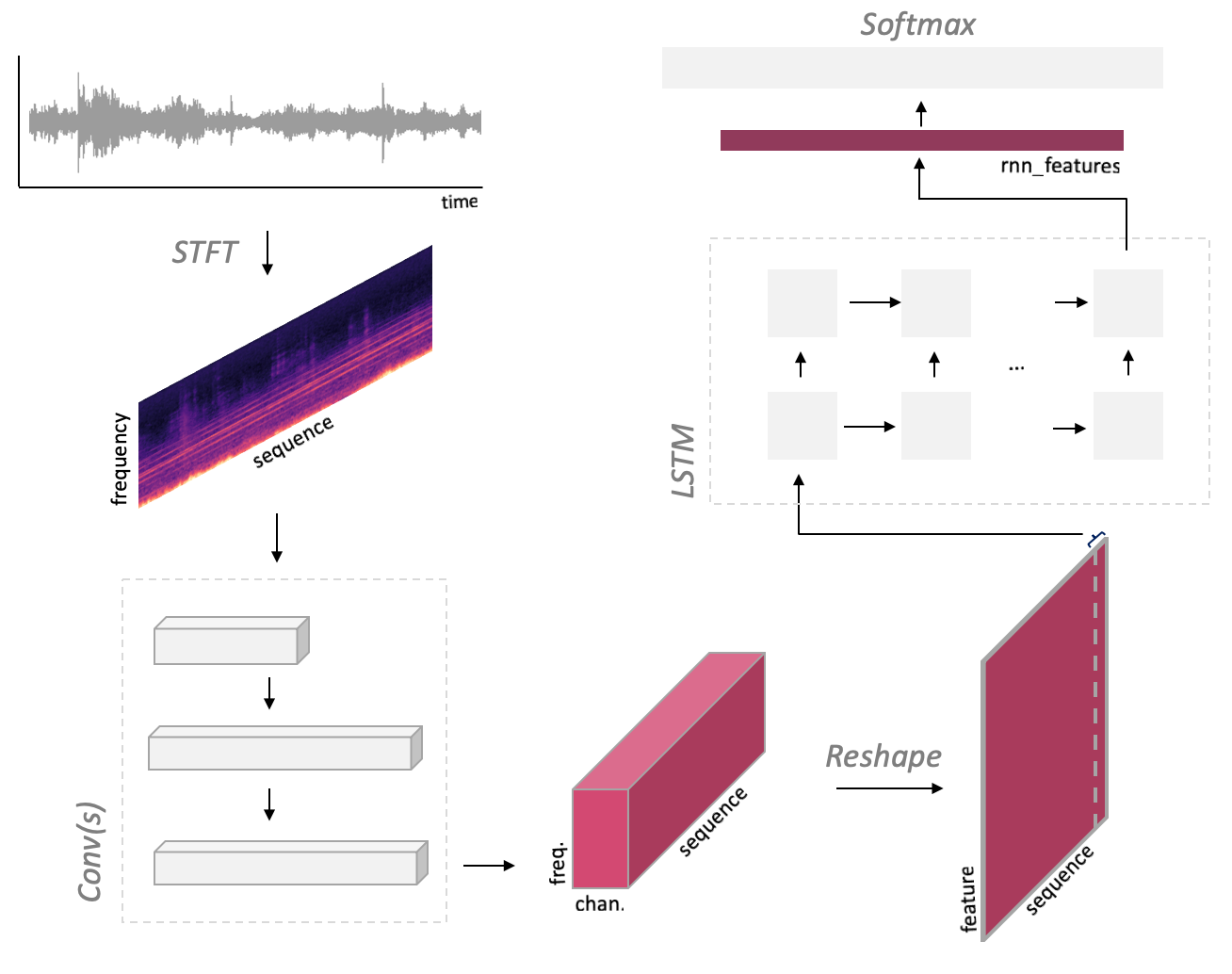

Classification of audio with variable length using a CNN + LSTM architecture on the UrbanSound8K dataset.
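One common way to feed clips of different lengths to an LSTM in PyTorch is to zero-pad them to a common length and pack them, so the recurrent layer stops at each clip's true end. The sketch below illustrates that general technique and is not taken from this repository; the sequence lengths and the `clip_embedding` name are made up for the example, and `batch_first=True` is an assumption.

```python
import torch
from torch import nn
from torch.nn.utils.rnn import pack_padded_sequence, pad_sequence

# Three hypothetical feature sequences of different lengths: (time, features).
seqs = [torch.randn(t, 128) for t in (9, 5, 3)]
lengths = torch.tensor([s.shape[0] for s in seqs])

# Zero-pad to a common length: (batch, max_time, features).
padded = pad_sequence(seqs, batch_first=True)

# Packing records where each sequence really ends, so padding is never processed.
packed = pack_padded_sequence(padded, lengths, batch_first=True, enforce_sorted=False)

# Same sizes as the LSTM in the printed model; batch_first is an assumption here.
lstm = nn.LSTM(input_size=128, hidden_size=64, num_layers=2, batch_first=True)
_, (h_n, _) = lstm(packed)

# h_n[-1] holds the top layer's hidden state at each sequence's last valid step.
clip_embedding = h_n[-1]
print(clip_embedding.shape)  # torch.Size([3, 64])
```

Each clip ends up as one fixed-size vector regardless of its duration, which is what a dense classification head needs.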

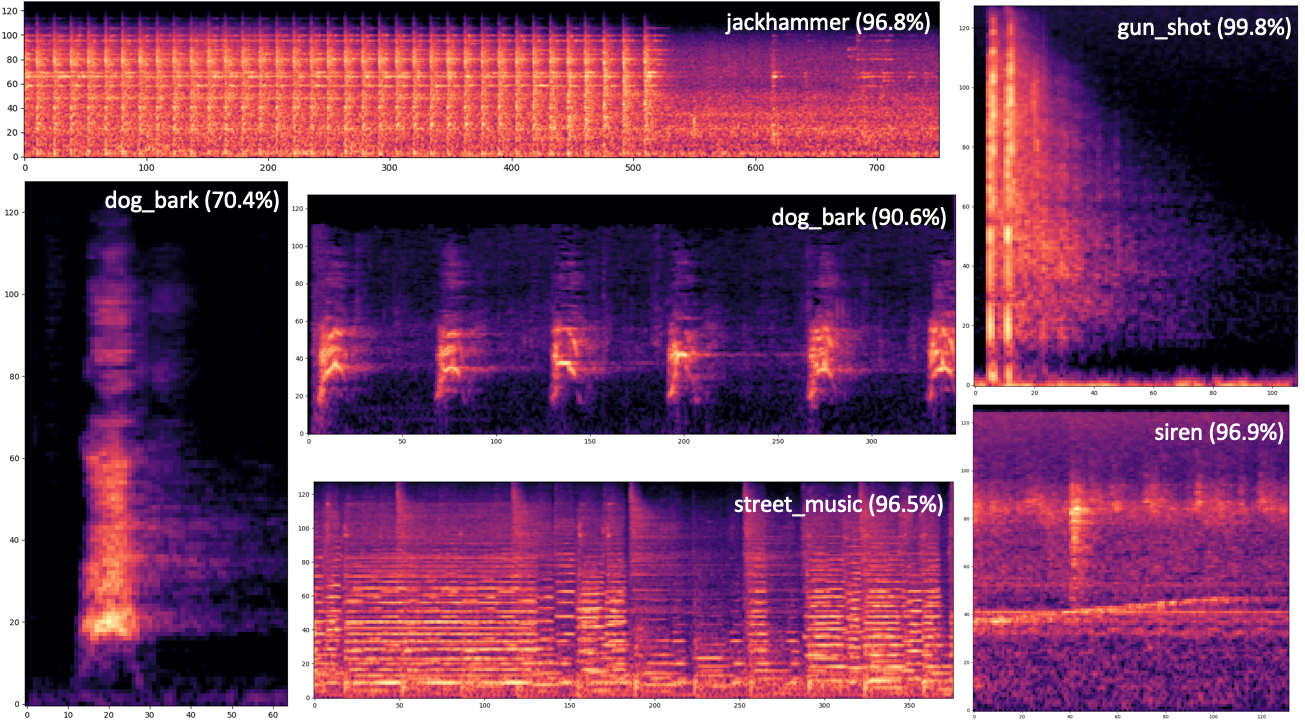

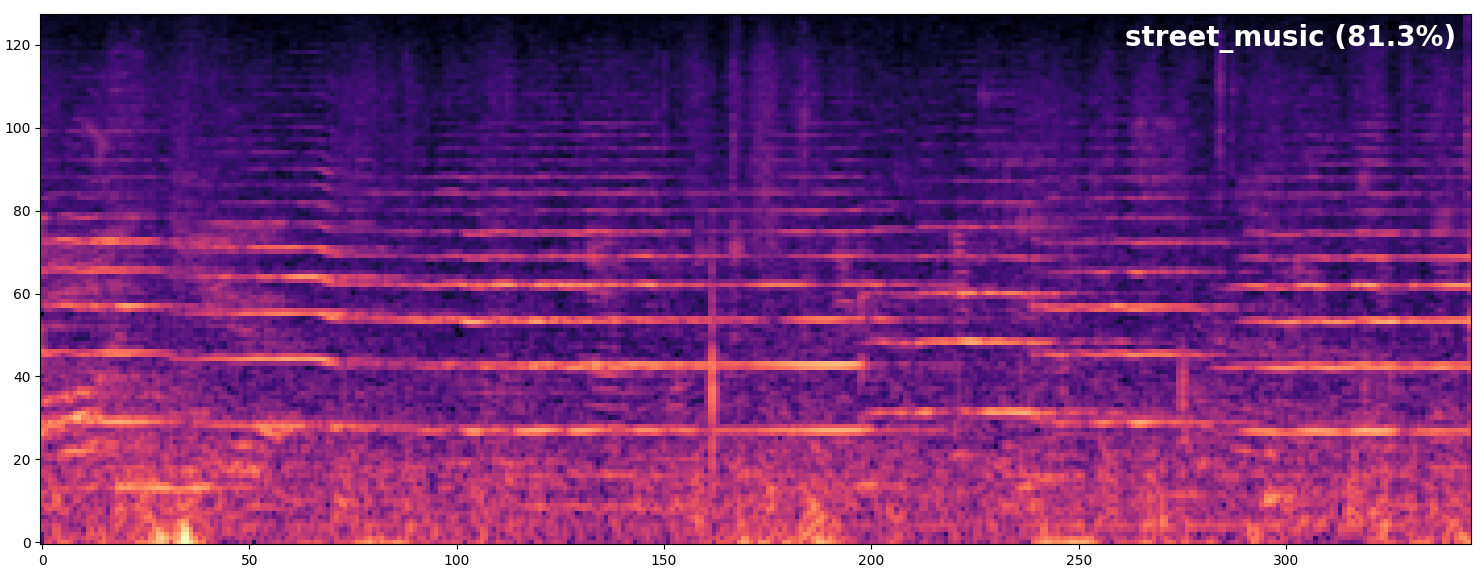

Example results:

Dependencies

- soundfile: audio loading

- torchparse: easy model definition from .cfg files

- pytorch/audio: audio transforms

Features

- Easily define CRNN in .cfg format

- Spectrogram computation on GPU

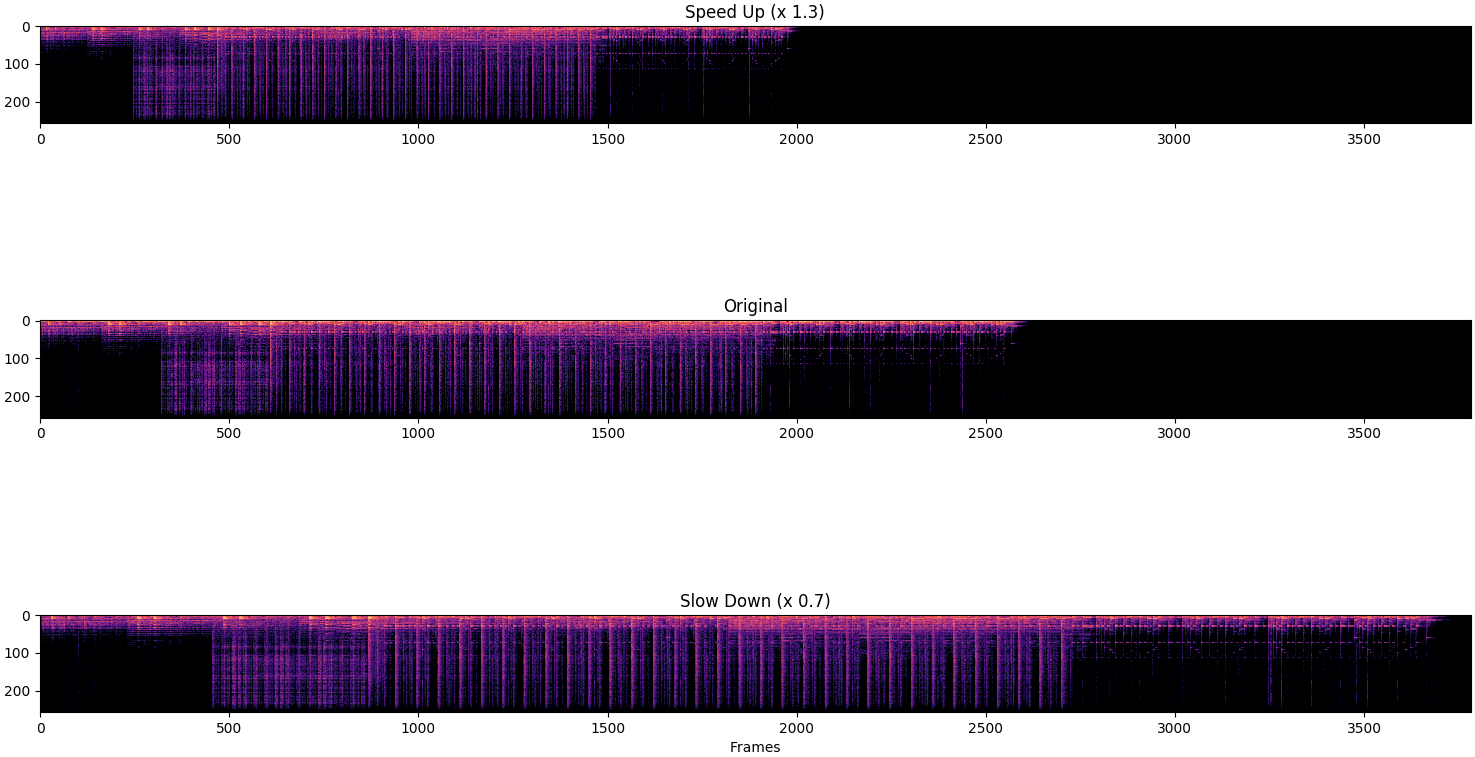

- Audio data augmentation: Cropping, White Noise, Time Stretching (using phase vocoder on GPU!)

Models

CRNN architecture:

Printing model defined with torchparse:

    AudioCRNN(
      (spec): MelspectrogramStretch(num_bands=128, fft_len=2048, norm=spec_whiten, stretch_param=[0.4, 0.4])
      (net): ModuleDict(
        (convs): Sequential(
          (conv2d_0): Conv2d(1, 32, kernel_size=(3, 3), stride=(1, 1), padding=[0, 0])
          (batchnorm2d_0): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (elu_0): ELU(alpha=1.0)
          (maxpool2d_0): MaxPool2d(kernel_size=3, stride=3, padding=0, dilation=1, ceil_mode=False)
          (dropout_0): Dropout(p=0.1)
          (conv2d_1): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=[0, 0])
          (batchnorm2d_1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (elu_1): ELU(alpha=1.0)
          (maxpool2d_1): MaxPool2d(kernel_size=4, stride=4, padding=0, dilation=1, ceil_mode=False)
          (dropout_1): Dropout(p=0.1)
          (conv2d_2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=[0, 0])
          (batchnorm2d_2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (elu_2): ELU(alpha=1.0)
          (maxpool2d_2): MaxPool2d(kernel_size=4, stride=4, padding=0, dilation=1, ceil_mode=False)
          (dropout_2): Dropout(p=0.1)
        )
        (recur): LSTM(128, 64, num_layers=2)
        (dense): Sequential(
          (dropout_3): Dropout(p=0.3)
          (batchnorm1d_0): BatchNorm1d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (linear_0): Linear(in_features=64, out_features=10, bias=True)
        )
      )
    )
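The LSTM input size of 128 in the printed model can be traced through the convolutional stack. The sketch below follows the frequency axis of a 128-band mel spectrogram through the three conv/pool blocks using the standard output-size formula. It assumes the channel and frequency axes are flattened into the LSTM feature dimension, which is consistent with `LSTM(128, 64, ...)` but is not shown in the printout; the helper name `conv_out` is made up for illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length along one axis for a convolution or pooling layer (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1

# (conv kernel, pool kernel) per block, read off the printed model;
# each pooling layer uses a stride equal to its kernel size.
blocks = [(3, 3), (3, 4), (3, 4)]

freq = 128  # num_bands of the mel spectrogram
for conv_k, pool_k in blocks:
    freq = conv_out(freq, conv_k)                 # Conv2d, stride 1, no padding
    freq = conv_out(freq, pool_k, stride=pool_k)  # MaxPool2d, ceil_mode=False

channels = 64  # output channels of conv2d_2
lstm_input = channels * freq  # assumed flattening of (channels, freq)
print(freq, lstm_input)  # 2 128
```

The time axis shrinks by the same rule, so very short clips can drop below one frame after the last pooling layer.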

Trainable parameters: 139786
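The total can be checked by hand from the layer shapes in the printed model. The sketch below uses the standard parameter formulas for each layer type and assumes the spectrogram layer contributes no trainable parameters; the helper names are illustrative only.

```python
def conv2d_params(c_in, c_out, k):
    return c_in * c_out * k * k + c_out  # kernel weights + bias

def batchnorm_params(features):
    return 2 * features  # learnable scale and shift

def lstm_params(input_size, hidden, num_layers):
    total = 0
    for layer in range(num_layers):
        in_size = input_size if layer == 0 else hidden
        # 4 gates, each with input weights, recurrent weights, and two bias vectors
        total += 4 * hidden * (in_size + hidden) + 2 * 4 * hidden
    return total

def linear_params(n_in, n_out):
    return n_in * n_out + n_out  # weights + bias

total = (
    conv2d_params(1, 32, 3) + batchnorm_params(32)
    + conv2d_params(32, 64, 3) + batchnorm_params(64)
    + conv2d_params(64, 64, 3) + batchnorm_params(64)
    + lstm_params(128, 64, 2)
    + batchnorm_params(64)   # batchnorm1d_0
    + linear_params(64, 10)
)
print(total)  # 139786
```

The two-layer LSTM accounts for 82,944 of these, well over half of the model.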

Usage

Inference

Run inference on an audio file:

./run.py /path/to/audio/file.wav -r path/to/saved/model.pth

Training

./run.py train -c config.json --cfg arch.cfg

Augmentation

Dataset transforms:

    Compose(
        ProcessChannels(mode=avg)
        AdditiveNoise(prob=0.3, sig=0.001, dist_type=normal)
        RandomCropLength(prob=0.4, sig=0.25, dist_type=half)
        ToTensorAudio()
    )
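To make the transform chain concrete, here is a minimal NumPy sketch of the first three steps: channel averaging, probabilistic additive Gaussian noise, and probabilistic random cropping. The function names and the `max_fraction` parameter are hypothetical and this is not the repository's code. In particular, the crop length is drawn uniformly as a stand-in, since the exact meaning of `sig` and `dist_type=half` in `RandomCropLength` is not shown above.

```python
import numpy as np

rng = np.random.default_rng(0)

def average_channels(audio):
    """Collapse (samples, channels) audio to mono by averaging; mono passes through."""
    return audio.mean(axis=1) if audio.ndim == 2 else audio

def additive_noise(audio, prob=0.3, sig=0.001):
    """With probability `prob`, add zero-mean Gaussian noise with std `sig`."""
    if rng.random() >= prob:
        return audio
    return audio + rng.normal(0.0, sig, size=audio.shape)

def random_crop(audio, prob=0.4, max_fraction=0.25):
    """With probability `prob`, keep a random window that drops up to `max_fraction`."""
    if rng.random() >= prob:
        return audio
    n = audio.shape[0]
    keep = n - int(rng.uniform(0.0, max_fraction) * n)  # uniform draw: an assumption
    start = rng.integers(0, n - keep + 1)
    return audio[start:start + keep]

# One second of synthetic stereo audio at 22050 Hz.
stereo = rng.normal(0.0, 0.1, size=(22050, 2))
mono = average_channels(stereo)
augmented = random_crop(additive_noise(mono))
print(mono.shape, augmented.shape)
```

Because cropping changes the clip length, the model downstream has to accept variable-length input.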

As well as time stretching:

TensorboardX

Evaluation

./run.py eval -r /path/to/saved/model.pth

Then obtain defined metrics:

100%|█████████████████████████████████████████████████████████████████████████████████████████████████| 34/34 [00:03<00:00, 12.68it/s]

{'avg_precision': '0.725', 'avg_recall': '0.719', 'accuracy': '0.804'}
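For reference, macro-averaged precision and recall weight every class equally, whatever its size. The sketch below computes the three reported quantities from a confusion matrix. It assumes `avg_precision` and `avg_recall` are macro averages, as the cross-validation tables label them; the `macro_metrics` function and the toy matrix are illustrative and not from the repository.

```python
def macro_metrics(confusion):
    """confusion[i][j] = number of clips with true class i predicted as class j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    precisions, recalls = [], []
    for k in range(n):
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        precisions.append(confusion[k][k] / predicted_k if predicted_k else 0.0)
        recalls.append(confusion[k][k] / actual_k if actual_k else 0.0)
    return {
        "avg_precision": sum(precisions) / n,  # unweighted mean over classes
        "avg_recall": sum(recalls) / n,
        "accuracy": correct / total,
    }

# Toy 3-class confusion matrix with 10 clips per true class.
toy = [
    [8, 2, 0],
    [1, 5, 4],
    [0, 0, 10],
]
metrics = macro_metrics(toy)
print({name: f"{value:.3f}" for name, value in metrics.items()})
```

With unbalanced classes, accuracy can sit well above the macro averages, as it does in the output above.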

10-Fold Cross Validation

| Arch | Accuracy | AvgPrecision(macro) | AvgRecall(macro) |
|---|---|---|---|
| CNN | 71.0% | 63.4% | 63.5% |
| CRNN | 72.3% | 64.3% | 65.0% |
| CRNN(Bidirectional, Dropout) | 73.5% | 65.5% | 65.8% |
| CRNN(Dropout) | 73.0% | 65.5% | 65.7% |
| CRNN(Bidirectional) | 72.8% | 64.3% | 65.2% |

Per-fold metrics for CRNN(Bidirectional, Dropout):

| Fold | Accuracy | AvgPrecision(macro) | AvgRecall(macro) |
|---|---|---|---|
| 1 | 73.1% | 65.1% | 66.1% |
| 2 | 80.7% | 69.2% | 68.9% |
| 3 | 62.8% | 57.3% | 57.5% |
| 4 | 73.6% | 65.2% | 64.9% |
| 5 | 78.4% | 70.3% | 71.5% |
| 6 | 73.5% | 65.5% | 65.9% |
| 7 | 74.6% | 67.0% | 66.6% |
| 8 | 66.7% | 62.3% | 61.7% |
| 9 | 71.7% | 60.7% | 62.7% |
| 10 | 79.9% | 72.2% | 71.8% |
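The summary row for CRNN(Bidirectional, Dropout) appears to be the unweighted mean over the ten folds. A quick check with the per-fold values copied from the table:

```python
# (accuracy, macro precision, macro recall) per fold, in percent
folds = [
    (73.1, 65.1, 66.1),
    (80.7, 69.2, 68.9),
    (62.8, 57.3, 57.5),
    (73.6, 65.2, 64.9),
    (78.4, 70.3, 71.5),
    (73.5, 65.5, 65.9),
    (74.6, 67.0, 66.6),
    (66.7, 62.3, 61.7),
    (71.7, 60.7, 62.7),
    (79.9, 72.2, 71.8),
]

# Average each column across folds and round to one decimal place.
means = [round(sum(column) / len(folds), 1) for column in zip(*folds)]
print(means)  # [73.5, 65.5, 65.8]
```

Accuracy ranges from 62.8% (fold 3) to 80.7% (fold 2), so a single-fold result can differ from the cross-validated figure by several points.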

To Do

- [ ] commit jupyter notebook dataset exploration

- [x] Switch over to using pytorch/audio

- [x] use torchaudio-contrib for STFT transforms

- [x] CRNN entirely defined in .cfg

- [x] Some bug in 'infer'

- [x] Run 10-fold Cross Validation

- [x] Switch over to pytorch/audio since the merge

- [ ] Comment things
