yizt / Crnn.pytorch

Licence: apache-2.0

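A CRNN implementation for horizontal and vertical Chinese text recognition. It provides pretrained horizontal and vertical recognition models trained on more than 30,000 Chinese characters. You are welcome to follow the project, try it out, and report issues.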

Stars: ✭ 145

Programming Languages

python


Projects that are alternatives of or similar to Crnn.pytorch

Tr

Free Offline OCR: an offline SDK for Chinese text detection and recognition

Stars: ✭ 598 (+312.41%)

Mutual labels: crnn, ocr, text-recognition

Ocr.pytorch

A pure pytorch implemented ocr project including text detection and recognition

Stars: ✭ 196 (+35.17%)

Mutual labels: crnn, ocr, text-recognition

Crnn With Stn

implement CRNN in Keras with Spatial Transformer Network

Stars: ✭ 83 (-42.76%)

Mutual labels: crnn, ocr, text-recognition

insightocr

MXNet OCR implementation. Including text recognition and detection.

Stars: ✭ 100 (-31.03%)

Mutual labels: ocr, text-recognition, crnn

Deep Text Recognition Benchmark

Text recognition (optical character recognition) with deep learning methods.

Stars: ✭ 2,665 (+1737.93%)

Mutual labels: crnn, ocr, text-recognition

Sightseq

Computer vision tools for fairseq, containing PyTorch implementation of text recognition and object detection

Stars: ✭ 116 (-20%)

Mutual labels: crnn, ocr, text-recognition

Image Text Localization Recognition

A general list of papers, resources, and implementations for image text localization and recognition

Stars: ✭ 788 (+443.45%)

Mutual labels: ocr, text-recognition

Easyocr

Ready-to-use OCR with 80+ supported languages and all popular writing scripts including Latin, Chinese, Arabic, Devanagari, Cyrillic and etc.

Stars: ✭ 13,379 (+9126.9%)

Mutual labels: crnn, ocr

Crnn

Convolutional recurrent neural network for scene text recognition or OCR in Keras

Stars: ✭ 68 (-53.1%)

Mutual labels: ocr, text-recognition

Awesome Ocr Resources

A collection of resources (including the papers and datasets) of OCR (Optical Character Recognition).

Stars: ✭ 335 (+131.03%)

Mutual labels: ocr, text-recognition

Text renderer

Generate text images for training deep learning ocr model

Stars: ✭ 931 (+542.07%)

Mutual labels: crnn, ocr

Textrecognitiondatagenerator

A synthetic data generator for text recognition

Stars: ✭ 2,075 (+1331.03%)

Mutual labels: ocr, text-recognition

Cnn lstm ctc ocr

Tensorflow-based CNN+LSTM trained with CTC-loss for OCR

Stars: ✭ 464 (+220%)

Mutual labels: ocr, text-recognition

Attention Ocr Chinese Version

Attention OCR Based On Tensorflow

Stars: ✭ 421 (+190.34%)

Mutual labels: crnn, text-recognition

React Native Tesseract Ocr

Tesseract OCR wrapper for React Native

Stars: ✭ 384 (+164.83%)

Mutual labels: ocr, text-recognition

Php Apache Tika

Apache Tika bindings for PHP: extract text and metadata from documents, images and other formats

Stars: ✭ 76 (-47.59%)

Mutual labels: ocr, text-recognition

Node Tesseract Ocr

A Node.js wrapper for the Tesseract OCR API

Stars: ✭ 92 (-36.55%)

Mutual labels: ocr, text-recognition

Crnn attention ocr chinese

CRNN with attention for OCR, with added Chinese recognition

Stars: ✭ 315 (+117.24%)

Mutual labels: crnn, ocr

crnn.pytorch

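This project trains a CRNN model for text recognition on randomly generated horizontal and vertical images. More than 10 different fonts are used, and the character set contains 30,656 entries in total (digits, letters, and Simplified and Traditional Chinese characters); see all_words.txt for the full list.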
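As a minimal sketch of how a character list such as all_words.txt could be turned into label indices, the snippet below builds a two-way mapping between characters and integers. The layout of all_words.txt and the convention of reserving index 0 for a blank symbol are assumptions, not details confirmed by this README, and `build_vocab`, `encode`, and `decode` are hypothetical helper names.

```python
from typing import Dict, List, Tuple

BLANK_INDEX = 0  # assumption: index 0 is reserved for a blank symbol


def build_vocab(chars: str) -> Tuple[Dict[str, int], List[str]]:
    """Map each distinct non-whitespace character to an index, starting at 1."""
    index_to_char: List[str] = [""]  # position 0 stands for the blank
    char_to_index: Dict[str, int] = {}
    for ch in chars:
        if ch.isspace() or ch in char_to_index:
            continue  # skip separators and duplicates
        char_to_index[ch] = len(index_to_char)
        index_to_char.append(ch)
    return char_to_index, index_to_char


def encode(text: str, char_to_index: Dict[str, int]) -> List[int]:
    """Convert a label string to a list of indices."""
    return [char_to_index[ch] for ch in text]


def decode(indices: List[int], index_to_char: List[str]) -> str:
    """Convert indices back to a string, dropping blanks."""
    return "".join(index_to_char[i] for i in indices if i != BLANK_INDEX)


if __name__ == "__main__":
    # A tiny stand-in for the real 30,656-character list.
    c2i, i2c = build_vocab("0123abc厘鳃銎")
    labels = encode("a厘1", c2i)
    print(labels)
    print(decode(labels, i2c))
```

With the real character list, the mapping would have 30,656 entries plus the blank, which in this sketch would also be the size of the model's output layer.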

Prediction

Direct prediction

Pretrained model downloads: horizontal model crnn.horizontal.061.pth (extraction code: fguu); vertical model crnn.vertical.090.pth (extraction code: ygx7).

a) Run the following command to predict a single image

# Horizontal

python demo.py --weight-path /path/to/chk.pth --image-path /path/to/image --direction horizontal

# Vertical

python demo.py --weight-path /path/to/chk.pth --image-path /path/to/image --direction vertical

b) Run the following command to predict every image in a directory

# Horizontal

python demo.py --weight-path /path/to/chk.pth --image-dir /path/to/image/dir --direction horizontal

# Vertical

python demo.py --weight-path /path/to/chk.pth --image-dir /path/to/image/dir --direction vertical
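The four commands above share one set of flags. The following is a sketch of how such a command line could be declared with `argparse`; it is not the project's actual demo.py. The flag names come from the commands above, while the mutual exclusion between `--image-path` and `--image-dir`, the default direction, and the `build_parser` name are assumptions made for illustration.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Declare the flags used in the prediction commands (illustrative only)."""
    parser = argparse.ArgumentParser(description="CRNN prediction demo (sketch)")
    parser.add_argument("--weight-path", required=True,
                        help="path to a .pth checkpoint")
    # Assumption: exactly one of a single image or a directory is given.
    source = parser.add_mutually_exclusive_group(required=True)
    source.add_argument("--image-path", help="predict a single image")
    source.add_argument("--image-dir", help="predict every image in a directory")
    # Assumption: horizontal is the default when the flag is omitted.
    parser.add_argument("--direction", choices=["horizontal", "vertical"],
                        default="horizontal")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args([
        "--weight-path", "/path/to/chk.pth",
        "--image-dir", "/path/to/image/dir",
        "--direction", "vertical",
    ])
    print(args.weight_path, args.image_dir, args.direction)
```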

Prediction via the RESTful service

a) Start the RESTful service

python rest.py -l /path/to/crnn.horizontal.061.pth -v /path/to/crnn.vertical.090.pth -d cuda

b) Predict with the following code (see rest_test.py)

import base64

import requests

# Read the image and base64-encode it so it can be sent as JSON
img_path = './images/horizontal-002.jpg'
with open(img_path, 'rb') as fp:
    img_bytes = fp.read()

img = base64.b64encode(img_bytes).decode()
data = {'img': img}

# POST to the service started in step a) and print the recognized text
r = requests.post("http://localhost:5000/crnn", json=data)
print(r.json()['text'])

The result is:

厘鳃 銎 萛闿 檭車 垰銰 陀 婬2 蠶
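The client code above sends the image as a base64 string inside a JSON body. The sketch below shows that encoding and a hypothetical server-side counterpart that recovers the raw bytes, so the round trip can be checked without a running service. How rest.py actually parses the request is not shown in this README; `build_payload` and `read_payload` are illustrative names.

```python
import base64
import json


def build_payload(img_bytes: bytes) -> str:
    """Serialize image bytes the same way the client code does."""
    img = base64.b64encode(img_bytes).decode()
    return json.dumps({"img": img})


def read_payload(body: str) -> bytes:
    """Hypothetical server-side counterpart: recover the raw image bytes."""
    data = json.loads(body)
    return base64.b64decode(data["img"])


if __name__ == "__main__":
    # Stand-in bytes; a real request would carry the contents of an image file.
    fake_image = bytes([0xFF, 0xD8, 0xFF, 0xE0]) + b"not a real jpeg"
    body = build_payload(fake_image)
    assert read_payload(body) == fake_image
    print(len(fake_image), "bytes round-tripped")
```

Base64 is used here because JSON cannot carry raw binary data; the cost is a payload roughly one third larger than the original file.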

Model results

The images below were all randomly generated by the generator; you can also test with your own images.

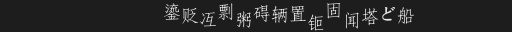

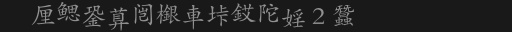

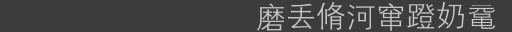

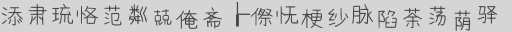

Horizontal

| Image | Recognition result |
|---|---|
| | 鎏贬冱剽粥碍辆置钷固闻塔ど船 |
| | 厘鳃銎萛闿檭車垰銰陀婬2蠶 |
| | 磨丢河窜蹬奶鼋 |
| | 添肃琉恪范粼兢俺斋┟傺怃梗纱脉陷荼荡荫驿 |
| | 荼反霎吕娟斑恃畀貅引铥哳断替碱嘏 |
| | 汨鑅譜軥嶰細挓 |
| | 讵居世鄄钷橄鸠乩嗓犷魄芈丝 |
| | 憎豼蕖蚷願巇廾尖瞚寣眗媝页锧荰瞿睔 |
| | 休衷餐郄俐徂煅黢让咣 |
| | 桃顸噢伯臣 |

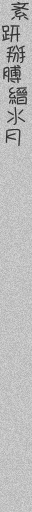

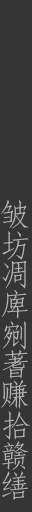

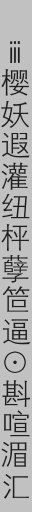

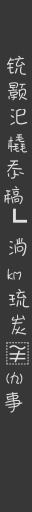

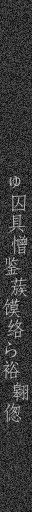

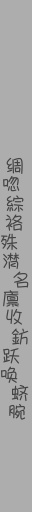

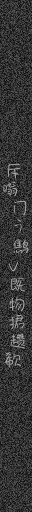

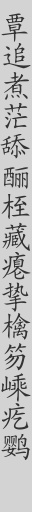

Vertical

| Image1 | Image2 | Image3 | Image4 | Image5 | Image6 | Image7 | Image8 | Image9 | Image10 |
|---|---|---|---|---|---|---|---|---|---|
| | | | | | | | | | |

Recognition results, from left to right:

蟒销咔侉糌圻

醵姹探里坌葺神赵漓

紊趼掰膊縉氺月

皱坊凋庳剜蓍赚拾赣缮

ⅲ樱妖遐灌纽枰孽笸逼⊙斟喧湄汇

铳颢汜橇忝稿┗淌㎞琉炭盛㈨事

ゆ囚具憎鉴蔟馍络ら裕翱偬

绸唿綜袼殊潸名廪收鈁跃唤蛴腕

斥嗡门彳鹪Ⅴ戝物据趱欹

覃追煮茫舔酾桎藏瘪挚檎笏嵊疙鹦
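Each result string above is read off a sequence of per-step predictions. CRNN models are commonly paired with CTC, where repeated predictions are collapsed and a blank symbol is dropped. This README does not describe the project's decoding step, so the following is only a sketch of greedy CTC decoding under that assumption, with index 0 assumed to be the blank.

```python
from typing import List, Sequence

BLANK = 0  # assumption: index 0 is the CTC blank


def ctc_greedy_decode(step_indices: Sequence[int], blank: int = BLANK) -> List[int]:
    """Collapse consecutive repeats, then drop blanks."""
    decoded: List[int] = []
    previous = blank
    for idx in step_indices:
        # Keep an index only when it is not blank and differs from the
        # prediction at the previous time step.
        if idx != blank and idx != previous:
            decoded.append(idx)
        previous = idx
    return decoded


if __name__ == "__main__":
    # Per-step argmax indices; a blank between the two 5s keeps both of them.
    steps = [0, 5, 5, 0, 5, 8, 8, 0]
    print(ctc_greedy_decode(steps))
```

The blank between repeated indices is what lets a CTC model emit the same character twice in a row.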

Evaluation

a) Horizontal

python eval.py --weight-path /path/to/crnn.horizontal.061.pth \
    --direction horizontal --device cuda

Output: acc: 0.893

b) Vertical

python eval.py --weight-path /path/to/crnn.vertical.090.pth \
    --direction vertical --device cuda

Output: acc: 0.523
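The README does not state how `acc` is computed. One common choice for text recognition is exact-match accuracy over whole strings, sketched below; treating `acc` as this metric is an assumption, and `sequence_accuracy` is a hypothetical helper rather than a function from eval.py.

```python
from typing import Sequence


def sequence_accuracy(predictions: Sequence[str], targets: Sequence[str]) -> float:
    """Fraction of samples whose predicted string equals the target exactly."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not targets:
        return 0.0  # avoid dividing by zero on an empty evaluation set
    correct = sum(p == t for p, t in zip(predictions, targets))
    return correct / len(targets)


if __name__ == "__main__":
    preds = ["桃顸噢伯臣", "磨丢河窜蹬奶鼋", "汨鑅譜軥嶰細"]
    truth = ["桃顸噢伯臣", "磨丢河窜蹬奶鼋", "汨鑅譜軥嶰細挓"]
    print(f"acc: {sequence_accuracy(preds, truth):.3f}")
```

Under this metric a single wrong or missing character makes the whole sample count as incorrect, so longer strings are harder to score on.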

Training

a) Single machine, multiple GPUs

export CUDA_DEVICE_ORDER="PCI_BUS_ID"

export CUDA_VISIBLE_DEVICES=1,2,3,4

python -m torch.distributed.launch --nproc_per_node 4 train.py --device cuda --direction vertical

b) Multiple machines, multiple GPUs

# First host

export NCCL_SOCKET_IFNAME=eth0

export NCCL_IB_DISABLE=1

export CUDA_DEVICE_ORDER="PCI_BUS_ID"

export CUDA_VISIBLE_DEVICES=1,2,3

python -m torch.distributed.launch --nproc_per_node 3 --nnodes=2 --node_rank=0 \
    --master_port=6066 --master_addr="192.168.0.1" \
    train.py --device cuda --direction vertical

# Second host

export NCCL_SOCKET_IFNAME=eth0

export NCCL_IB_DISABLE=1

export CUDA_DEVICE_ORDER="PCI_BUS_ID"

export CUDA_VISIBLE_DEVICES=1,2,3

python -m torch.distributed.launch --nproc_per_node 3 --nnodes=2 --node_rank=1 \
    --master_port=6066 --master_addr="192.168.0.1" \
    train.py --device cuda --direction vertical
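In the multi-machine commands above, the two hosts differ only in `--node_rank`. The launcher starts `--nproc_per_node` processes on each host and gives each a global rank derived from the node rank and the local process index. The sketch below reproduces that arithmetic for the 2-host, 3-GPU setup; the function names are illustrative and nothing here calls PyTorch.

```python
def world_size(nnodes: int, nproc_per_node: int) -> int:
    """Total number of training processes across all hosts."""
    return nnodes * nproc_per_node


def global_rank(node_rank: int, nproc_per_node: int, local_rank: int) -> int:
    """Global rank of one process, given its host and its index on that host."""
    return node_rank * nproc_per_node + local_rank


if __name__ == "__main__":
    nnodes, nproc_per_node = 2, 3  # values from the commands above
    print("world size:", world_size(nnodes, nproc_per_node))
    for node in range(nnodes):
        for local in range(nproc_per_node):
            rank = global_rank(node, nproc_per_node, local)
            print(f"node_rank={node} local_rank={local} -> rank={rank}")
```

The process with global rank 0 runs on the first host, which is why `--master_addr` points at that machine on both hosts.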

Known issue: multi-machine training is much slower than single-machine training; this has not been resolved yet.
