ajbosco / Dag Factory

Licence: MIT

Dynamically generate Apache Airflow DAGs from YAML configuration files

dag-factory

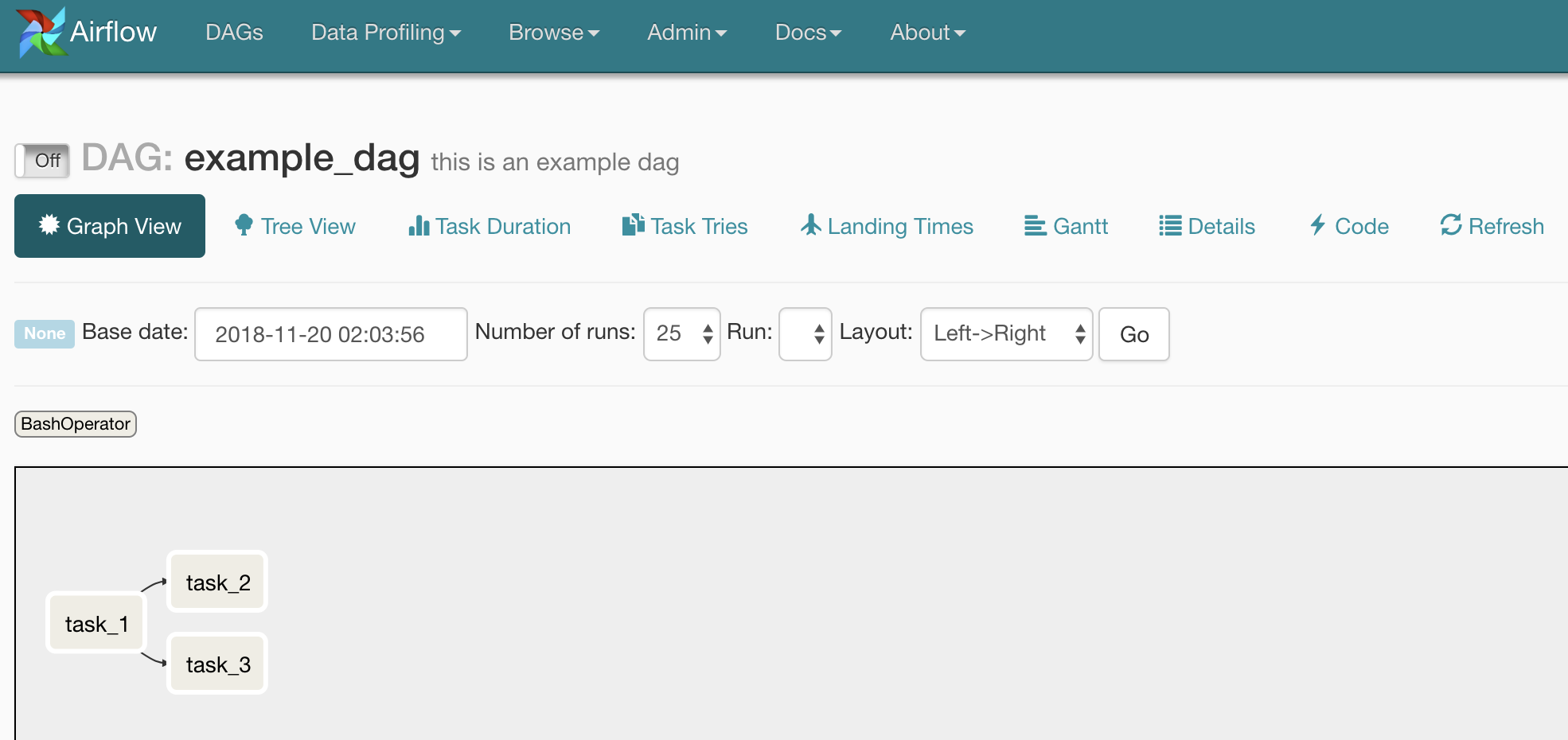

dag-factory is a library for dynamically generating Apache Airflow DAGs from YAML configuration files.

Installation

To install dag-factory, run `pip install dag-factory`. It requires Python 3.6.0+ and Apache Airflow 1.10+.

Usage

After installing dag-factory in your Airflow environment, there are two steps to creating DAGs. First, we need to create a YAML configuration file. For example:

    example_dag1:
      default_args:
        owner: 'example_owner'
        start_date: 2018-01-01  # or '2 days'
        end_date: 2018-01-05
        retries: 1
        retry_delay_sec: 300
      schedule_interval: '0 3 * * *'
      concurrency: 1
      max_active_runs: 1
      dagrun_timeout_sec: 60
      default_view: 'tree'  # or 'graph', 'duration', 'gantt', 'landing_times'
      orientation: 'LR'  # or 'TB', 'RL', 'BT'
      description: 'this is an example dag!'
      on_success_callback_name: print_hello
      on_success_callback_file: /usr/local/airflow/dags/print_hello.py
      on_failure_callback_name: print_hello
      on_failure_callback_file: /usr/local/airflow/dags/print_hello.py
      tasks:
        task_1:
          operator: airflow.operators.bash_operator.BashOperator
          bash_command: 'echo 1'
        task_2:
          operator: airflow.operators.bash_operator.BashOperator
          bash_command: 'echo 2'
          dependencies: [task_1]
        task_3:
          operator: airflow.operators.bash_operator.BashOperator
          bash_command: 'echo 3'
          dependencies: [task_1]
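The `tasks` section of this configuration describes a small dependency graph: `task_2` and `task_3` each list `task_1` under `dependencies`, so both run after it. As a rough illustration only (this is not how dag-factory itself is implemented), the sketch below copies the task names and dependencies into a plain Python dict and uses the standard-library `graphlib` module (Python 3.9+) to compute an order that respects them. The variable names `tasks`, `upstream`, and `order` are chosen for this example.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# The `tasks` section of example_dag1, written out as a plain dict.
tasks = {
    "task_1": {"bash_command": "echo 1"},
    "task_2": {"bash_command": "echo 2", "dependencies": ["task_1"]},
    "task_3": {"bash_command": "echo 3", "dependencies": ["task_1"]},
}

# Map each task to the set of tasks it depends on (its upstream tasks).
upstream = {
    name: set(conf.get("dependencies", []))
    for name, conf in tasks.items()
}

# static_order() yields each task only after all of its dependencies.
order = list(TopologicalSorter(upstream).static_order())
print(order)
```

`task_1` always comes first. `task_2` and `task_3` do not depend on each other, so their relative order is not significant.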

Then, in the DAGs folder of your Airflow environment, create a Python file like this:

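    from airflow import DAG
    import dagfactory

    # Point the factory at the YAML configuration file.
    dag_factory = dagfactory.DagFactory("/path/to/dags/config_file.yml")

    # Remove previously generated DAGs that are no longer in the config,
    # then register the generated DAGs in this module's namespace.
    dag_factory.clean_dags(globals())
    dag_factory.generate_dags(globals())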

And this DAG will be generated and ready to run in Airflow!
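The example configuration points `on_success_callback_name` and `on_failure_callback_name` at a function called `print_hello` in `/usr/local/airflow/dags/print_hello.py`, but the contents of that file are not shown. A minimal sketch of what it might contain follows. The function body is an assumption. The `context` parameter reflects the fact that Airflow passes a context dictionary to callbacks, and it is given a default here only so the function can also be called directly.

```python
# Hypothetical contents of /usr/local/airflow/dags/print_hello.py


def print_hello(context=None):
    """Print a greeting when the DAG run succeeds or fails.

    `context` is the dictionary Airflow hands to callbacks; this
    sketch does not use it.
    """
    print("hello")
```

Because the same function is named for both the success and the failure callback, it runs in either case. In practice you would likely point the two keys at different functions.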

Benefits

- Construct DAGs without knowing Python

- Construct DAGs without learning Airflow primitives

- Avoid duplicative code

- Everyone loves YAML! ;)

Contributing

Contributions are welcome! Just submit a Pull Request or GitHub Issue.
