escorciav / Daps

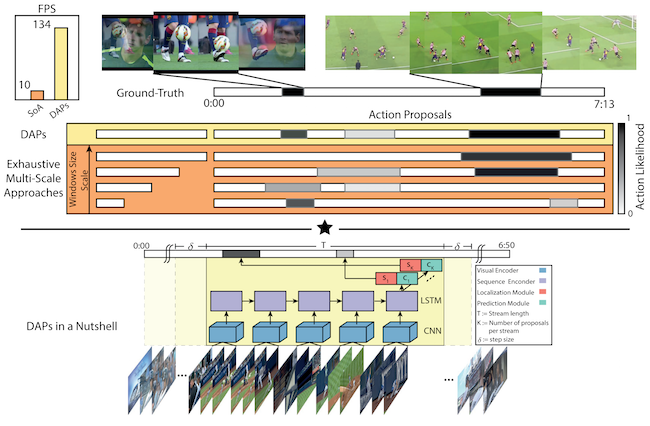

Deep Action Proposals for Videos

Temporal Action Proposals for long untrimmed videos.

The DAPs architecture quickly retrieves, with high recall, the segments of long videos that are likely to contain actions.

Welcome

Welcome to our repo! This project hosts a simple, handy interface to generate segments where it is likely to find actions in your videos.

If you find any piece of code valuable for your research please cite this work:

@Inbook{Escorcia2016,
  author="Escorcia, Victor and Caba Heilbron, Fabian and Niebles, Juan Carlos and Ghanem, Bernard",
  editor="Leibe, Bastian and Matas, Jiri and Sebe, Nicu and Welling, Max",
  title="DAPs: Deep Action Proposals for Action Understanding",
  bookTitle="Computer Vision -- ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part III",
  year="2016",
  publisher="Springer International Publishing",
  address="Cham",
  pages="768--784",
  isbn="978-3-319-46487-9",
  doi="10.1007/978-3-319-46487-9_47",
  url="http://dx.doi.org/10.1007/978-3-319-46487-9_47"
}

If you like this project, give us a ⭐️ in the GitHub banner 😉.

Installation

- Ensure that you have gcc, conda, CUDA and CUDNN (optional).

- Clone our repo: `git clone https://github.com/escorciav/daps/`.

- Go to our project folder and type `bash install.sh`.
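Before running the install script, it can help to confirm that the prerequisites are visible on your `PATH`. The following is a minimal sketch written for Python 3, not part of the DAPs code base: the function name `check_prerequisites` is hypothetical, and it assumes that a CUDA installation exposes the `nvcc` compiler driver on `PATH`. CUDNN is optional and is not checked.

```python
import shutil


def check_prerequisites(tools=("gcc", "conda", "nvcc")):
    """Map each tool name to its resolved path, or None if it is not on PATH."""
    return {tool: shutil.which(tool) for tool in tools}


if __name__ == "__main__":
    # Print one line per tool so missing prerequisites are easy to spot.
    for tool, path in check_prerequisites().items():
        print("{}: {}".format(tool, path if path else "NOT FOUND"))
```

A tool reported as `NOT FOUND` may still be installed in a location that is not on your `PATH`, so treat the output as a hint rather than a verdict.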

Notes

- Our implementation uses Theano. It is tested with gcc, but as long as Theano supports your desired compiler, go ahead.

- In case you don't want to use conda, our python dependencies are here. A complete list of dependencies is here.

- Do you like environment-modules? We provide bash scripts to activate or deactivate the environment. Personalize them 😉.

What can you find?

- Pre-trained models. Our generalization experiment suggests that you may expect decent results for other kinds of action classes with similar lengths. Check out the models trained on the validation set of THUMOS14.

- Pre-computed action proposals. Take a look at our results if you are interested in comparisons or in building cool algorithms on top of our outputs.

- Code for retrieving proposals in new videos. Check out our program to retrieve proposals from your video.
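If you want to compare proposals against annotated action segments, a common measure for temporal proposals is the temporal intersection over union (tIoU), from which recall at a given threshold follows. The sketch below is written for Python 3 and is an illustration only: it is not necessarily the evaluation protocol used for the results in this repo, the function names are hypothetical, and it assumes each segment is a `(start, end)` pair in a common unit (frames or seconds). The file format of the pre-computed proposals is not covered here.

```python
def temporal_iou(segment_a, segment_b):
    """Temporal intersection over union of two (start, end) segments."""
    start_a, end_a = segment_a
    start_b, end_b = segment_b
    # Overlap is zero when the segments do not intersect.
    intersection = max(0.0, min(end_a, end_b) - max(start_a, start_b))
    union = (end_a - start_a) + (end_b - start_b) - intersection
    return intersection / union if union > 0 else 0.0


def recall_at_tiou(ground_truth, proposals, threshold=0.5):
    """Fraction of ground-truth segments matched by at least one proposal."""
    if not ground_truth:
        return 0.0
    hits = sum(
        any(temporal_iou(gt, proposal) >= threshold for proposal in proposals)
        for gt in ground_truth
    )
    return hits / len(ground_truth)
```

For example, with ground truth `[(10.0, 20.0), (40.0, 50.0)]` and proposals `[(12.0, 21.0), (70.0, 80.0)]`, only the first annotated segment is matched at a threshold of 0.5, so `recall_at_tiou` returns 0.5.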

Do you want to try?

- Download the C3D representation of a couple of videos from here.

- Download our model.

- Go to our project folder.

- Activate our conda environment. We reduce your choices to one of the following:

  - Execute `source activate daps-eccv16`, for conda users.

  - Execute `./activate.sh`, for conda and environment-modules users.

  - Ensure that our package is in your `PYTHONPATH`, for python users.

  Note for new environment-modules users: You must personalize the script to activate the environment, otherwise it will fail.

- Execute: `tools/generate_proposals.py -iv video_test_0000541 -ic3d [path-to-c3d-of-videos] -imd [path-our-model]`
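To run the last step for several videos, the command can be assembled programmatically. This is a minimal sketch, assuming it is run from the project folder with the environment already activated. The helper names `build_command` and `generate_for_videos` are hypothetical; only the script path and the `-iv`, `-ic3d` and `-imd` flags come from the command above.

```python
import subprocess


def build_command(video_id, c3d_path, model_path,
                  script="tools/generate_proposals.py"):
    """Return the argument list for one call to the proposal script."""
    return [script, "-iv", video_id, "-ic3d", c3d_path, "-imd", model_path]


def generate_for_videos(video_ids, c3d_path, model_path, dry_run=True):
    """Build one command per video; print them, or run them if dry_run is False."""
    commands = [build_command(v, c3d_path, model_path) for v in video_ids]
    for command in commands:
        if dry_run:
            # Show the command without executing anything.
            print(" ".join(command))
        else:
            # Raises CalledProcessError if the script exits with an error.
            subprocess.check_call(command)
    return commands
```

Calling `generate_for_videos(["video_test_0000541"], "[path-to-c3d-of-videos]", "[path-our-model]")` prints the same command as in the step above. Replace the bracketed placeholders with your own paths and pass `dry_run=False` to actually execute it.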

Questions

Please visit our FAQs if you have any questions. If your question is not answered there, send us an email.