Mercari Dataflow Template

The Mercari Dataflow Template lets you run a variety of pipelines without writing any code: you simply define a configuration file.

Mercari Dataflow Template is implemented as a FlexTemplate for Cloud Dataflow. A pipeline is assembled from the configuration file you define and executed as a Cloud Dataflow job.

See the documentation for usage details.

Usage Example

Write the following JSON file and upload it to GCS (this example assumes it is uploaded to gs://example/config.json).

This configuration writes the results of a BigQuery query to the specified Spanner table.

```
{
  "sources": [
    {
      "name": "bigquery",
      "module": "bigquery",
      "parameters": {
        "query": "SELECT * FROM `myproject.mydataset.mytable`"
      }
    }
  ],
  "sinks": [
    {
      "name": "spanner",
      "module": "spanner",
      "input": "bigquery",
      "parameters": {
        "projectId": "myproject",
        "instanceId": "myinstance",
        "databaseId": "mydatabase",
        "table": "mytable"
      }
    }
  ]
}
```

Assuming you have deployed the Mercari Dataflow Template to gs://example/template, run the following command:

gcloud dataflow flex-template run bigquery-to-spanner \

--template-file-gcs-location=gs://example/template \

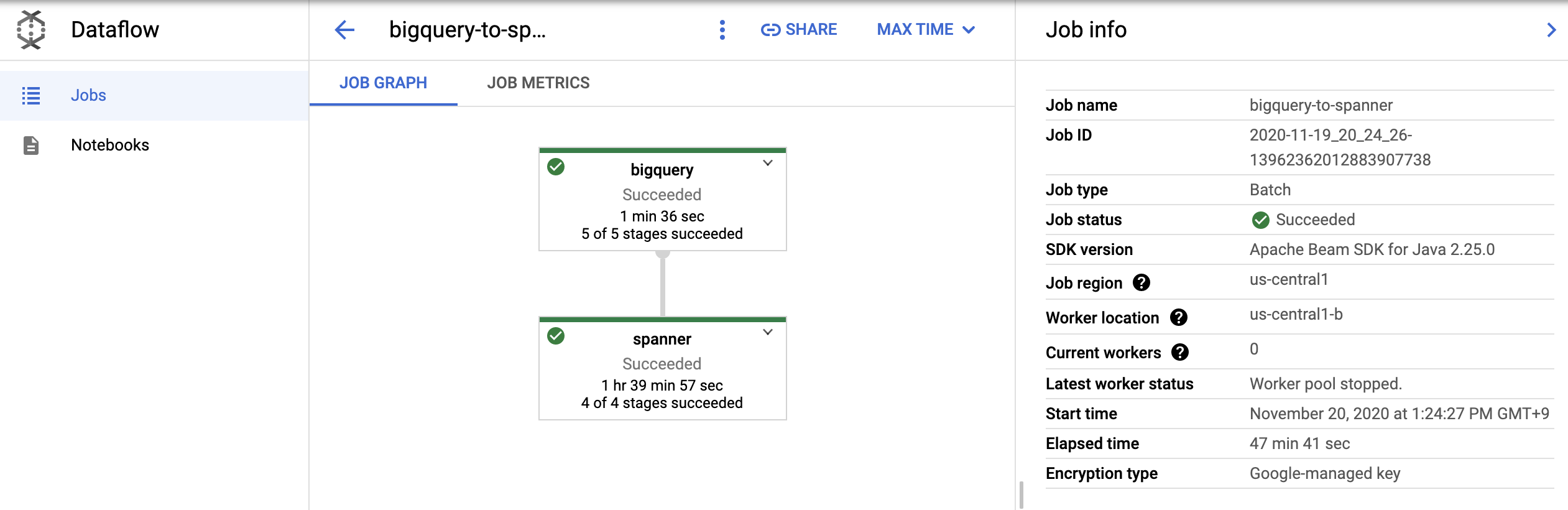

--parameters=config=gs://example/config.jsonThe Dataflow job will be started, and you can check the execution status of the job in the console screen.
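Besides the console, you can list running jobs from the command line. The following is a minimal sketch: `check_dataflow_jobs` is a hypothetical helper name (not part of the template), and it assumes you pass the region in which the job was launched. The `gcloud dataflow jobs list` command and its `--region` and `--status` flags are standard gcloud.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Mercari Dataflow Template):
# lists the currently active Dataflow jobs in the given region.
check_dataflow_jobs() {
  local region="$1"
  if [[ -z "$region" ]]; then
    echo "usage: check_dataflow_jobs REGION" >&2
    return 1
  fi
  # --status accepts active, terminated or all.
  gcloud dataflow jobs list --region="$region" --status=active
}
```

For example, `check_dataflow_jobs us-central1` lists the active jobs in us-central1; `gcloud dataflow jobs describe {job_id} --region={region}` shows the details of a single job.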

Deploy Template

Mercari Dataflow Template runs as a FlexTemplate, so it must be deployed by following the FlexTemplate creation steps.

Requirements

- Java 11

- Maven 3

- gcloud command-line tool
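Before building, you may want to confirm that these tools are installed. A minimal sketch, assuming a bash shell: `check_requirements` is a hypothetical helper (not part of the project). It only checks that `java`, `mvn` and `gcloud` are on the PATH and that the Java major version is 11; it does not check the Maven version.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of Mercari Dataflow Template).
# Verifies that java, mvn and gcloud are on the PATH and warns when the
# Java major version is not 11. Prints "ok" on stdout when all tools exist.
check_requirements() {
  local tool missing=0
  for tool in java mvn gcloud; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  if [[ "$missing" -ne 0 ]]; then
    return 1
  fi

  # `java -version` writes to stderr, e.g. 'openjdk version "11.0.20" 2023-07-18'.
  # The optional "1." prefix handles old-style versions such as "1.8.0".
  local java_major
  java_major="$(java -version 2>&1 | grep -m 1 'version "' | sed -E 's/.*version "(1\.)?([0-9]+).*/\2/')"
  if [[ "$java_major" != "11" ]]; then
    echo "warning: expected Java 11, found major version '${java_major}'" >&2
  fi
  echo "ok"
}
```

Run `check_requirements` once before the build steps; it exits non-zero if a tool is missing.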

Push Template Container Image to Container Registry

The first step is to build the source code and register it as a container image in Container Registry. The following command builds a FlexTemplate container image from the source code and uploads it to Container Registry:

```
mvn clean package -DskipTests -Dimage=gcr.io/{deploy_project}/{template_repo_name}
```

Upload template file

The next step is to generate a template file that launches a Dataflow job from the container image, and upload it to GCS. Use the following command:

```
gcloud dataflow flex-template build gs://{path/to/template_file} \
  --image "gcr.io/{deploy_project}/{template_repo_name}" \
  --sdk-language "JAVA"
```

Run Dataflow job from template file

You can launch a Dataflow job from the template file in either of the following ways.

- gcloud command

Upload the config file to GCS, then run the template, specifying the GCS path of the uploaded config file:

```
gsutil cp config.json gs://{path/to/config.json}

gcloud dataflow flex-template run {job_name} \
  --template-file-gcs-location=gs://{path/to/template_file} \
  --parameters=config=gs://{path/to/config.json}
```

- REST API

You can also run the template through the REST API:

```
PROJECT_ID=[PROJECT_ID]
REGION=[REGION]
CONFIG="$(cat examples/xxx.json)"

curl -X POST \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $(gcloud auth print-access-token)" \
  "https://dataflow.googleapis.com/v1b3/projects/${PROJECT_ID}/locations/${REGION}/flexTemplates:launch" \
  -d "{
  'launchParameter': {
    'jobName': 'myJobName',
    'containerSpecGcsPath': 'gs://{path/to/template_file}',
    'parameters': {
      'config': '$(echo "$CONFIG")',
      'stagingLocation': 'gs://{path/to/staging}'
    },
    'environment': {
      'tempLocation': 'gs://{path/to/temp}'
    }
  }
}"
```

(The tempLocation and stagingLocation options are optional. If they are not specified, a bucket named dataflow-staging-{region}-{project_no} is created automatically and used.)
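Because the REST example splices the raw config text into a hand-written, single-quoted body, a config that itself contains a single quote (for example, a SQL string literal in a query) can break the request. One way to avoid this, assuming python3 is available, is to let Python's json module build the body so the config is escaped as a proper JSON string. `build_launch_body` is a hypothetical helper; the field names (`launchParameter`, `jobName`, `containerSpecGcsPath`, `parameters`) are taken from the request above, and the optional staging and temp locations are omitted.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Mercari Dataflow Template): prints a
# flexTemplates:launch request body with the config file content embedded
# as a JSON string. json.dumps escapes quotes, newlines and backslashes.
build_launch_body() {
  local job_name="$1" template_path="$2" config_file="$3"
  python3 - "$job_name" "$template_path" "$config_file" <<'PY'
import json
import sys

job_name, template_path, config_file = sys.argv[1:4]
with open(config_file, encoding="utf-8") as f:
    config = f.read()

body = {
    "launchParameter": {
        "jobName": job_name,
        "containerSpecGcsPath": template_path,
        "parameters": {"config": config},
    }
}
print(json.dumps(body))
PY
}

# Example (placeholders as in the request above); curl reads the body from stdin:
#   build_launch_body myJobName gs://{path/to/template_file} examples/xxx.json \
#     | curl -X POST -H "Content-Type: application/json" \
#         -H "Authorization: Bearer $(gcloud auth print-access-token)" \
#         -d @- \
#         "https://dataflow.googleapis.com/v1b3/projects/${PROJECT_ID}/locations/${REGION}/flexTemplates:launch"
```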

Run Template in streaming mode

To run the template in streaming mode, add streaming=true to the parameters:

```
gcloud dataflow flex-template run {job_name} \
  --template-file-gcs-location=gs://{path/to/template_file} \
  --parameters=config=gs://{path/to/config.json} \
  --parameters=streaming=true
```

Deploy Docker image for local pipeline

You can also run a pipeline locally, which is useful when you want to process a small amount of data quickly.

First, build the container image for local execution:

```
# Generate the MDT jar file.
mvn clean package -DskipTests -Dimage=gcr.io/{deploy_project}/{template_repo_name}

# Create a Docker image for local runs.
docker build --tag=gcr.io/{deploy_project}/{repo_name_local} .

# If you need to push the image to Container Registry,
# you can do so with the following commands.
gcloud auth configure-docker
docker push gcr.io/{deploy_project}/{repo_name_local}
```

Run Pipeline locally

For local execution, run the following command to set up the Application Default Credentials that the pipeline uses:

```
gcloud auth application-default login
```

The following is an example of running the pipeline locally.

The gcloud credentials directory and the working directory containing the config file are mounted so that the container can access them.

The other arguments (such as project and config) are the same as for normal execution.

If you want to run in streaming mode, specify streaming=true in the arguments, as you would in normal execution.

macOS

```
docker run \
  -v ~/.config/gcloud:/mnt/gcloud:ro \
  -v /{your_work_dir}:/mnt/config:ro \
  --rm gcr.io/{deploy_project}/{repo_name_local} \
  --project={project} \
  --config=/mnt/config/{my_config}.json
```

Windows

```
docker run ^
  -v C:\Users\{YourUserName}\AppData\Roaming\gcloud:/mnt/gcloud:ro ^
  -v C:\Users\{YourWorkingDirPath}\:/mnt/config:ro ^
  --rm gcr.io/{deploy_project}/{repo_name_local} ^
  --project={project} ^
  --config=/mnt/config/{MyConfig}.json
```

Note: If you use the BigQuery module locally, you must also specify the tempLocation argument (for example, --tempLocation=gs://{path/to/temp}).
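The macOS command can be wrapped in a small function that adds tempLocation only when it is needed. This is a sketch, not part of the project: `run_local_pipeline` is a hypothetical name, and it assumes bash on macOS or Linux with gcloud credentials under ~/.config/gcloud. It passes tempLocation as `--tempLocation=...`, in the same way as the other pipeline arguments.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper (not part of Mercari Dataflow Template) around the
# macOS docker run command. Mounts the gcloud credentials and the directory
# containing the config file, and appends --tempLocation only when a fourth
# argument is given (e.g. when the config uses the BigQuery module).
run_local_pipeline() {
  local image="$1" project="$2" config_path="$3" temp_location="${4:-}"
  local config_dir config_name
  config_dir="$(cd "$(dirname "$config_path")" && pwd)"
  config_name="$(basename "$config_path")"

  local args=(
    --project="$project"
    --config="/mnt/config/$config_name"
  )
  if [[ -n "$temp_location" ]]; then
    args+=(--tempLocation="$temp_location")
  fi

  docker run \
    -v "$HOME/.config/gcloud:/mnt/gcloud:ro" \
    -v "$config_dir:/mnt/config:ro" \
    --rm "$image" \
    "${args[@]}"
}
```

For example: `run_local_pipeline gcr.io/{deploy_project}/{repo_name_local} {project} ./{my_config}.json gs://{path/to/temp}`.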

Committers

- Yoichi Nagai (@orfeon)

Contribution

Please read the CLA carefully before submitting your contribution to Mercari. Under any circumstances, by submitting your contribution, you are deemed to accept and agree to be bound by the terms and conditions of the CLA.

License

Copyright 2022 Mercari, Inc.

Licensed under the MIT License.