
Detail-Preserving Pooling in Torch

This repository contains the code for Detail-Preserving Pooling (DPP), introduced in the following paper:

Detail-Preserving Pooling in Deep Networks (CVPR 2018)

Faraz Saeedan¹, Nicolas Weber¹,²*, Michael Goesele¹,³*, and Stefan Roth¹

¹TU Darmstadt (VISINF & GCC), ²NEC Laboratories Europe, ³Oculus Research

*Work carried out while at TU Darmstadt

Citation

If you find DPP useful in your research, please cite:

@inproceedings{saeedan2018dpp,
  title={Detail-preserving pooling in deep networks},
  author={Saeedan, Faraz and Weber, Nicolas and Goesele, Michael and Roth, Stefan},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  year={2018}
}

Requirements

- This code is built on fb.resnet.torch. Check that repository for requirements and preparation steps.

- In addition, you need the nnlr package installed in Torch.

Install

- Install the visinf package first. This package gives you access to all the models used in the paper: symmetric, asymmetric, full, and lite, each with and without stochasticity. Please refer to the paper for a description of these variants.

- Clone the repository:

  git clone https://github.com/visinf/dpp.git

- Follow one of the recipes below or try your own.

Usage

For training, simply run main.lua. By default, the script trains ResNet-110 with DPP_sym_lite pooling on CIFAR10 using 1 GPU and 2 data-loader threads. To run the models used in the CIFAR10 experiments, try:

th main.lua -data [imagenet-folder with train and val folders] -netType resnetdpp -poolingType DPP_sym_lite -save [folder to save results] -stochasticity 'false' -manualSeed xyz

th main.lua -data [imagenet-folder with train and val folders] -netType resnetdpp -poolingType DPP_asym_lite -save [folder to save results] -stochasticity 'false' -manualSeed xyz

th main.lua -data [imagenet-folder with train and val folders] -netType resnetdpp -poolingType DPP_sym_full -save [folder to save results] -stochasticity 'false' -manualSeed xyz

th main.lua -data [imagenet-folder with train and val folders] -netType resnetdpp -poolingType DPP_asym_full -save [folder to save results] -stochasticity 'false' -manualSeed xyz

th main.lua -data [imagenet-folder with train and val folders] -netType resnetdpp -poolingType DPP_sym_lite -save [folder to save results] -stochasticity 'true' -manualSeed xyz

Replace xyz with your desired random number generator seed.
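If you want to run all five CIFAR10 configurations in one go, the Python sketch below builds the same command lines for a single seed. The flags and pooling types are copied from the commands above; the per-run `-save` folder naming and the placeholder data path are our own hypothetical choices. The quotes around 'false' and 'true' in the original commands are removed by the shell, so passing the bare strings is equivalent.

```python
import shlex

# (poolingType, stochasticity) pairs copied from the CIFAR10 commands above;
# DPP_sym_lite appears twice, once with stochasticity enabled.
CIFAR_RUNS = [
    ("DPP_sym_lite", "false"),
    ("DPP_asym_lite", "false"),
    ("DPP_sym_full", "false"),
    ("DPP_asym_full", "false"),
    ("DPP_sym_lite", "true"),
]


def build_commands(data_dir, save_root, seed):
    """Return one argv list per CIFAR10 run, mirroring the flags above."""
    commands = []
    for pooling, stochastic in CIFAR_RUNS:
        # Hypothetical naming scheme: one results folder per run.
        save_dir = f"{save_root}/{pooling}_stoch-{stochastic}_seed{seed}"
        commands.append([
            "th", "main.lua",
            "-data", data_dir,
            "-netType", "resnetdpp",
            "-poolingType", pooling,
            "-save", save_dir,
            "-stochasticity", stochastic,
            "-manualSeed", str(seed),
        ])
    return commands


if __name__ == "__main__":
    # Only prints the commands; nothing is launched.
    for argv in build_commands("/path/to/data", "results", seed=1):
        print(shlex.join(argv))
```

The sketch only prints the commands. Passing each argument list to `subprocess.run(argv, check=True)` would launch the runs one after another.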

To train ResNets on ImageNet try:

th main.lua -depth 50 -batchSize 85 -nGPU 4 -nThreads 8 -shareGradInput true -data [imagenet-folder] -dataset imagenet -LR 0.033 -netType resnetdpp -poolingType DPP_sym_lite

or

th main.lua -depth 101 -batchSize 85 -nGPU 4 -nThreads 8 -shareGradInput true -data [imagenet-folder] -dataset imagenet -LR 0.033 -netType resnetdpp -poolingType DPP_sym_lite
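Both ImageNet recipes expect `-data` to point to a folder with train and val sub-folders. A small pre-flight check such as the Python sketch below can catch a wrong path before a long multi-GPU run starts. It assumes the fb.resnet.torch convention of one sub-folder per class inside each split; the function name `check_imagenet_layout` is hypothetical, and the check does not verify the number of classes or the images themselves.

```python
from pathlib import Path


def check_imagenet_layout(root):
    """Return a list of problems; an empty list means the layout looks OK.

    Assumed layout: <root>/train and <root>/val, each containing
    one sub-folder per class.
    """
    problems = []
    root = Path(root)
    for split in ("train", "val"):
        split_dir = root / split
        if not split_dir.is_dir():
            problems.append(f"missing folder: {split_dir}")
            continue
        # Only directories count as class folders; stray files are ignored.
        class_dirs = [p for p in split_dir.iterdir() if p.is_dir()]
        if not class_dirs:
            problems.append(f"no class sub-folders in: {split_dir}")
    return problems
```

For example, `check_imagenet_layout("/path/to/imagenet")` returns an empty list when both splits exist and contain at least one class folder.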

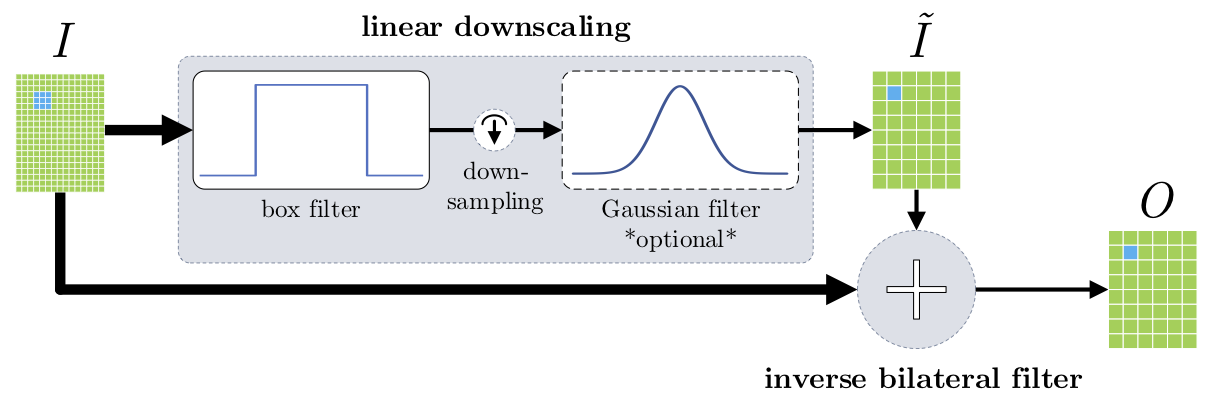

Different implementations of DPP

There are two implementations of DPP available in the visinf package:

- Black-box implementation: For this implementation, we derived closed-form equations for the forward and backward passes of the inverse bilateral pooling component of DPP and implemented them in CUDA. visinf.SpatialInverseBilateralPooling gives access to this implementation, which is very fast and memory efficient and can therefore be used for large-scale experiments such as ImageNet. However, it offers very little insight into the individual elements inside the block, and modifying them is difficult because it requires re-deriving and implementing the gradients w.r.t. the parameters and inputs.

- Implementation based on nngraph: This version is built from a number of Torch primitive blocks connected to each other in a graph, so the internals of the block are easy to understand, examine, and alter if needed. This version is fast but not memory efficient for large image sizes; for CIFAR-sized experiments, the memory overhead is moderate.
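To make the inverse bilateral pooling step more concrete, here is a minimal NumPy sketch of weighted pooling in the spirit of DPP. It is not the visinf implementation, and its details are assumptions to be checked against the paper: each output is a weighted mean over a non-overlapping 2×2 window, with weights α + ρ(x_q − x̃_p), a symmetric reward ρ(d) = (d² + ε²)^(λ/2), and an asymmetric variant that clamps d at zero first. The guidance value x̃_p is plain 2×2 average pooling here, which is not necessarily what either the lite or the full variant computes, and α, λ, ε are fixed scalars rather than trained parameters.

```python
import numpy as np


def inverse_bilateral_pool(x, alpha=1.0, lam=2.0, eps=1e-3, symmetric=True):
    """DPP-style weighted pooling over non-overlapping 2x2 windows (sketch).

    x: 2-D array (H, W) with even H and W.
    Returns an (H/2, W/2) array of weighted window means.
    """
    h, w = x.shape
    if h % 2 or w % 2:
        raise ValueError("this sketch expects even height and width")
    # Group pixels into 2x2 windows: shape (H/2, W/2, 4).
    windows = (x.reshape(h // 2, 2, w // 2, 2)
                .transpose(0, 2, 1, 3)
                .reshape(h // 2, w // 2, 4))
    # Guidance value per window: plain average pooling (an assumption).
    guide = windows.mean(axis=-1, keepdims=True)
    diff = windows - guide
    if not symmetric:
        # Asymmetric reward: only values above the guidance get extra weight.
        diff = np.maximum(diff, 0.0)
    reward = (diff ** 2 + eps ** 2) ** (lam / 2.0)
    weights = alpha + reward
    # Normalized weighted sum within each window.
    return (weights * windows).sum(axis=-1) / weights.sum(axis=-1)
```

With λ = 0 every weight equals α + 1, so the sketch reduces to average pooling; larger λ shifts weight toward values far from the window mean. For x = [[0, 0], [0, 4]] and the defaults, the symmetric version returns about 2.5, between average pooling (1) and max pooling (4).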

Contact

If you have further questions or would like to discuss the work, please write an email to [email protected]