tyshiwo / Drrn_cvpr17

Code for our CVPR'17 paper "Image Super-Resolution via Deep Recursive Residual Network"


DRRN

[Paper][Project]

Citation

If you find DRRN useful in your research, please consider citing:

@inproceedings{Tai-DRRN-2017,
  title={Image Super-Resolution via Deep Recursive Residual Network},
  author={Tai, Ying and Yang, Jian and Liu, Xiaoming},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  year={2017}
}

Other implementations

[DRRN-tensorflow] by LoSealL

[DRRN-pytorch] by yun_yang

[DRRN-pytorch] by yiyang7

Implement adjustable gradient clipping

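Modify sgd_solver.cpp in your_caffe_root/src/caffe/solvers/: in the function ClipGradients(), replace the original definition of clip_gradients with the following lines, which divide the clipping threshold by the current learning rate:

Dtype rate = GetLearningRate();
const Dtype clip_gradients = this->param_.clip_gradients()/rate;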
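To show what the modified ClipGradients() ends up doing, here is a minimal standalone C++ sketch (not Caffe code). Stock Caffe rescales all gradients when their global L2 norm exceeds clip_gradients; with the change above, the threshold becomes clip_gradients / rate. The function name `ClipGradientsAdjustable` and the flat `std::vector<double>` gradient layout are assumptions for illustration; Caffe itself iterates over the net's learnable parameter blobs.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical standalone version of adjustable gradient clipping.
// grads: all gradients flattened into one vector (illustrative assumption).
// clip_gradients: the solver's clipping value (theta).
// learning_rate: the current learning rate (gamma).
// Returns the scale factor applied to the gradients (1.0 if unchanged).
double ClipGradientsAdjustable(std::vector<double>& grads,
                               double clip_gradients,
                               double learning_rate) {
  assert(learning_rate > 0.0);
  // As in Caffe, a negative clipping value disables clipping.
  if (clip_gradients < 0.0) return 1.0;
  // The DRRN change: the threshold is theta / gamma instead of theta.
  const double threshold = clip_gradients / learning_rate;
  double sumsq = 0.0;
  for (double g : grads) sumsq += g * g;
  const double l2norm = std::sqrt(sumsq);
  if (l2norm <= threshold) return 1.0;
  // Rescale every gradient so that the global L2 norm equals the threshold.
  const double scale = threshold / l2norm;
  for (double& g : grads) g *= scale;
  return scale;
}
```

Since SGD multiplies the clipped gradient by the learning rate, the norm of each update is at most rate * (clip_gradients / rate) = clip_gradients. A small learning rate therefore permits large gradients, while the step size itself remains bounded.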

Training

- Prepare the training/validation data with the scripts generate_trainingset_x234 and generate_testingset_x234 in the "data" folder. The "Train_291" folder contains the 291 training images, and the "Set5" folder contains a popular benchmark dataset.

- We release two DRRN architectures, DRRN_B1U9_20C128 and DRRN_B1U25_52C128, in the "caffe_files" folder. Train either one, e.g., by running ./train_DRRN_B1U9_20C128.sh.
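The data scripts are MATLAB, and this README does not state their parameters. As a rough illustration of what super-resolution training-data scripts typically do, the C++ sketch below crops an image size to a multiple of the scale factor (the "x234" suffix presumably refers to scale factors 2, 3 and 4) and lists the top-left corners of overlapping training patches. `ModCrop` and `PatchCorners` are hypothetical names, and the 31x31 patch size with stride 21 used in the check below is an assumed setting, not one read from the scripts.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical helper: shrink a dimension to the largest multiple of scale,
// so that downscaling by scale yields an integer size.
int ModCrop(int size, int scale) {
  assert(size > 0 && scale > 0);
  return size - size % scale;
}

// Hypothetical helper: top-left (row, col) corners of all
// patch_size x patch_size windows taken with the given stride,
// keeping every window fully inside a height x width image.
std::vector<std::pair<int, int>> PatchCorners(int height, int width,
                                              int patch_size, int stride) {
  assert(patch_size > 0 && stride > 0);
  std::vector<std::pair<int, int>> corners;
  for (int r = 0; r + patch_size <= height; r += stride) {
    for (int c = 0; c + patch_size <= width; c += stride) {
      corners.emplace_back(r, c);
    }
  }
  return corners;
}
```

For example, a 73x52 image with 31x31 patches at stride 21 yields patch rows starting at 0, 21 and 42 and columns at 0 and 21, for 6 patches. In VDSR-style pipelines, the low-resolution input for each patch comes from bicubic down- and up-sampling of the cropped image. This sketch leaves that step out.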
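The architecture names can be checked against the DRRN depth formula d = (1 + 2U) * B + 1, where B is the number of recursive blocks and U is the number of residual units per block. The C++ sketch below reads each name as DRRN_B<blocks>U<units>_<depth>C<filters>. This naming interpretation, along with the names `DrrnArch`, `ParseDrrnName` and `DrrnDepth`, is our assumption for illustration. The depths it computes do match the names: 20 for B1U9 and 52 for B1U25.

```cpp
#include <cassert>
#include <cstdio>

// Hypothetical description of an architecture name, assuming the pattern
// DRRN_B<blocks>U<units>_<depth>C<channels>.
struct DrrnArch {
  int blocks = 0;    // B: number of recursive blocks
  int units = 0;     // U: residual units per recursive block
  int depth = 0;     // number of conv layers stated in the name
  int channels = 0;  // filters per conv layer
};

// Returns true if all four numbers were parsed from the name.
bool ParseDrrnName(const char* name, DrrnArch* arch) {
  return std::sscanf(name, "DRRN_B%dU%d_%dC%d", &arch->blocks, &arch->units,
                     &arch->depth, &arch->channels) == 4;
}

// d = (1 + 2U) * B + 1: each recursive block has one entry conv plus two
// convs per residual unit, and one final conv reconstructs the residual.
int DrrnDepth(int blocks, int units) {
  assert(blocks > 0 && units > 0);
  return (1 + 2 * units) * blocks + 1;
}
```

In the paper's design, the residual units within a recursive block share weights. Growing U from 9 to 25 therefore deepens the network from 20 to 52 layers without adding convolution parameters.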

Test

- Testing is done in MATLAB, so first compile the MATLAB wrapper with make matcaffe.

- We release two pretrained models, DRRN_B1U9_20C128 and DRRN_B1U25_52C128, in the "model" folder. Test either one on the Set5 benchmark, e.g., by running ./test/DRRN_B1U9_20C128/test_DRRN_B1U9. The results, including the reconstructed images and their PSNR/SSIM/IFC scores, are stored in the "results" folder.
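PSNR is the simplest of the reported metrics. Below is a minimal C++ sketch of it, assuming 8-bit images (peak value 255) stored row-major with a single channel. Super-resolution evaluations commonly compute PSNR on the luminance channel and ignore a thin border, and the `shave` parameter models that border. Whether the MATLAB test scripts here do exactly this is an assumption, and `Psnr` is a hypothetical name. SSIM and IFC are not covered.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical helper: PSNR in dB between a reference and an estimated
// single-channel image (values in [0, 255], row-major), ignoring a border
// of `shave` pixels on every side.
double Psnr(const std::vector<double>& ref, const std::vector<double>& est,
            int height, int width, int shave) {
  assert(ref.size() == est.size());
  assert(ref.size() == static_cast<std::size_t>(height) * width);
  assert(shave >= 0 && 2 * shave < height && 2 * shave < width);
  double sse = 0.0;  // sum of squared errors over the kept region
  long count = 0;    // number of pixels in the kept region
  for (int r = shave; r < height - shave; ++r) {
    for (int c = shave; c < width - shave; ++c) {
      const double d = ref[r * width + c] - est[r * width + c];
      sse += d * d;
      ++count;
    }
  }
  const double mse = sse / count;
  // Identical images have zero error and thus infinite PSNR.
  if (mse == 0.0) return std::numeric_limits<double>::infinity();
  return 10.0 * std::log10(255.0 * 255.0 / mse);
}
```

For example, two images that differ by 10 at every pixel have MSE 100, giving a PSNR of 10 * log10(65025 / 100), or about 28.13 dB.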

Benchmark results

Quantitative results

PSNR/SSIM

IFC

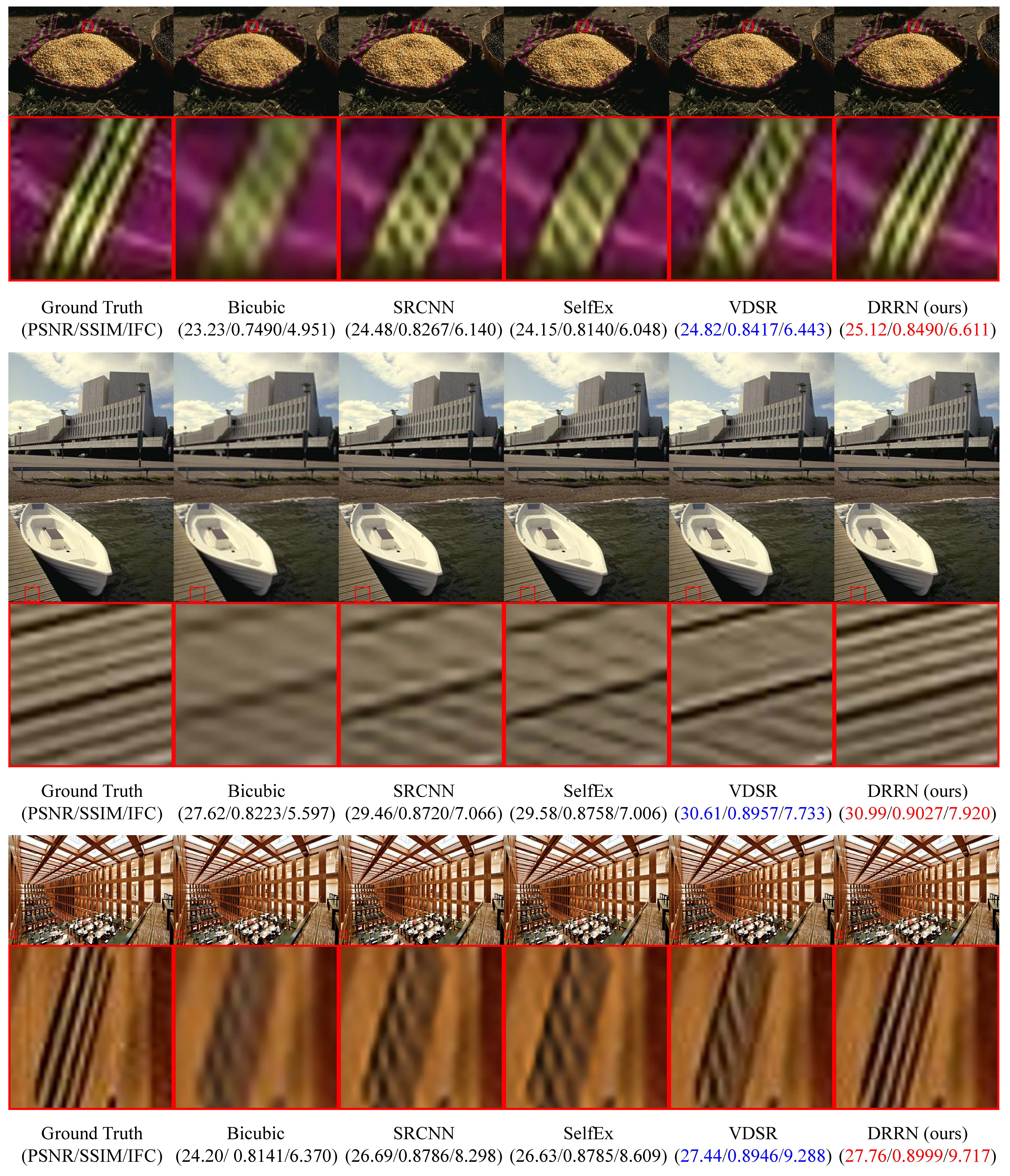

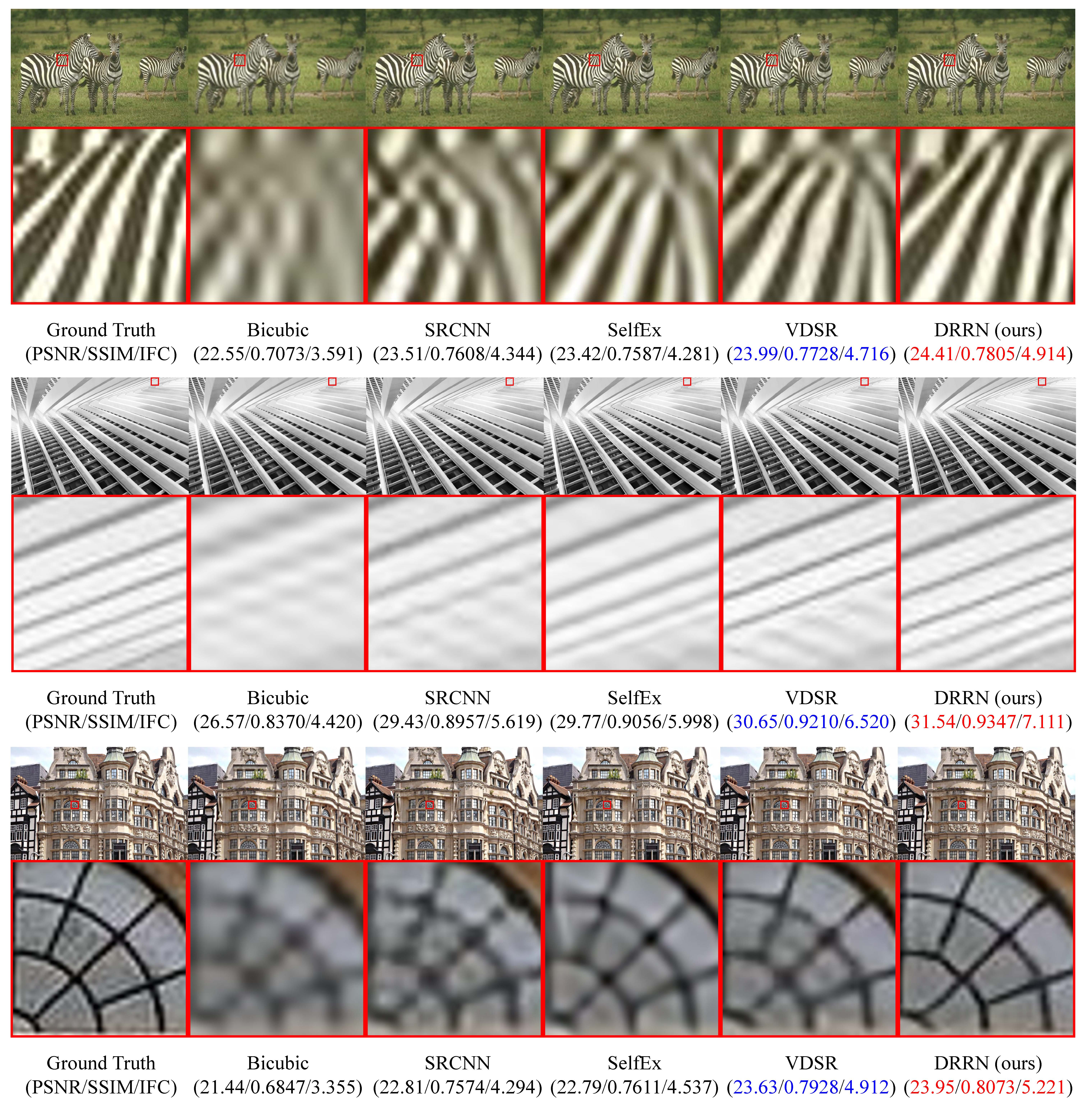

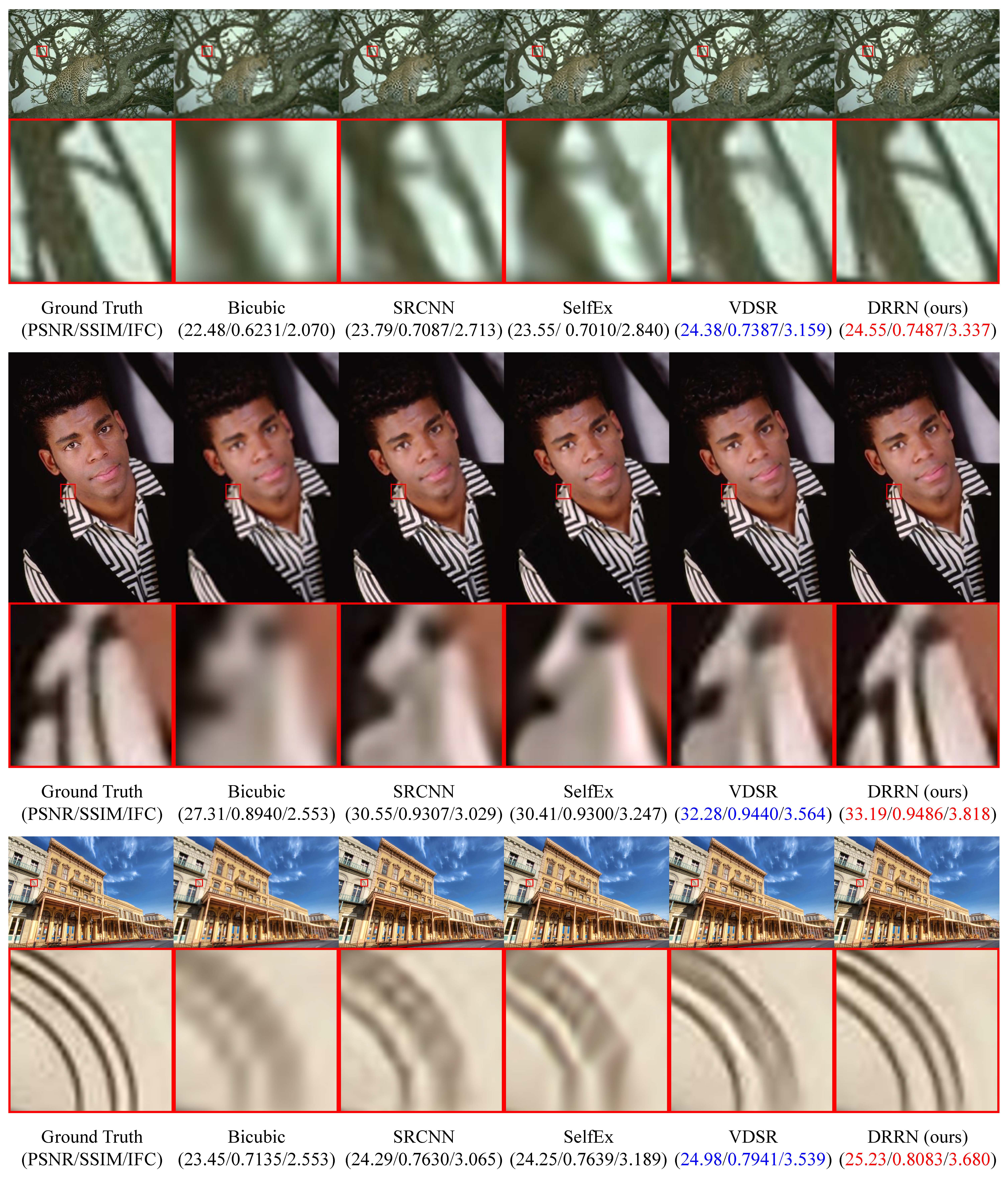

Qualitative results

Scale factor x2

Scale factor x3

Scale factor x4
