yxgeee / FD-GAN


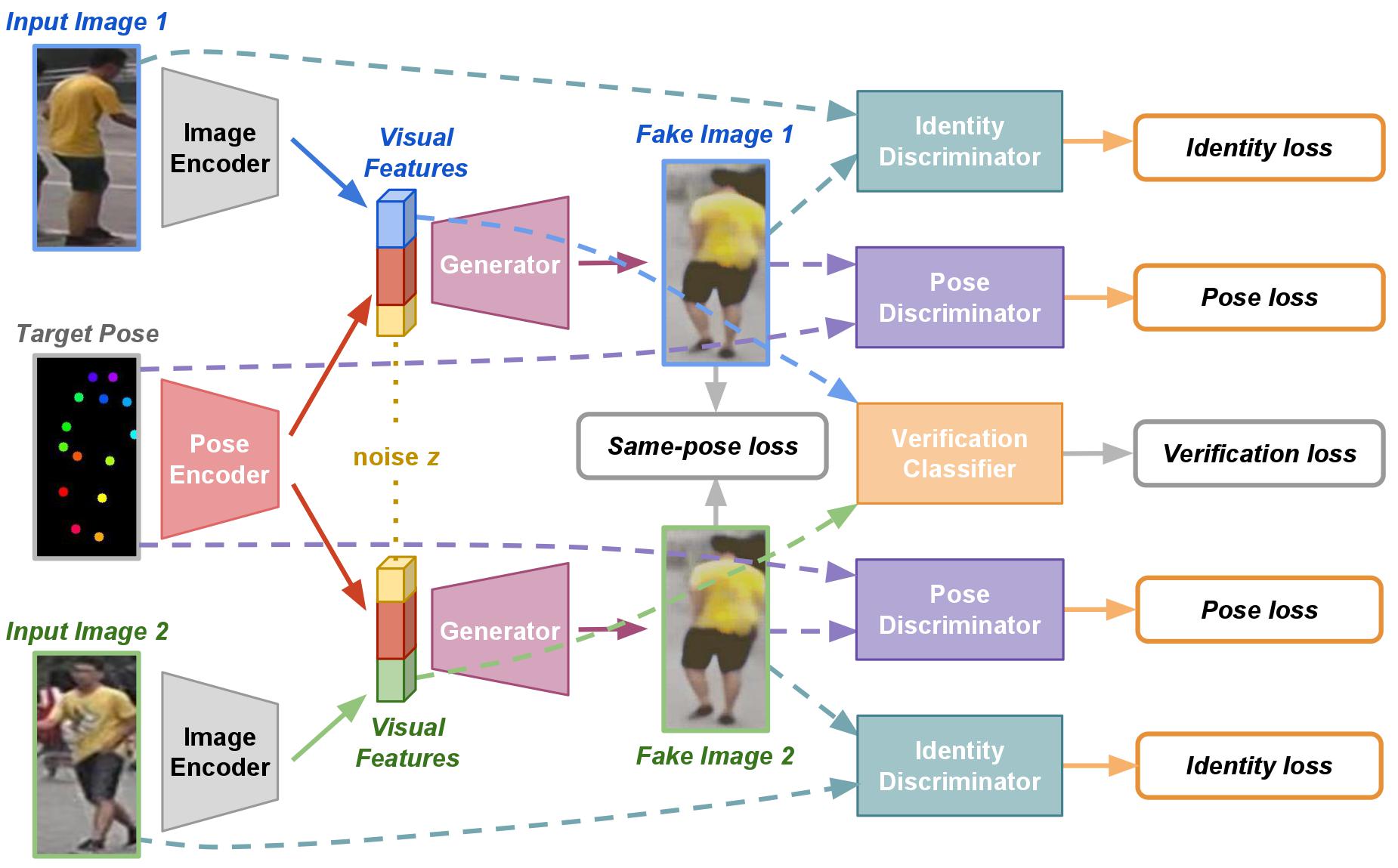

FD-GAN: Pose-guided Feature Distilling GAN for Robust Person Re-identification

Yixiao Ge*, Zhuowan Li*, Haiyu Zhao, Guojun Yin, Shuai Yi, Xiaogang Wang, and Hongsheng Li

Neural Information Processing Systems (NIPS), 2018 (* equal contribution)

PyTorch implementation of our NIPS 2018 work. With the proposed Siamese structure, we are able to learn identity-related and pose-unrelated representations.

News

- Baidu Pan links of datasets and pretrained models have been updated.

Prerequisites

- Python 3

- PyTorch (we run the code under version 0.3.1; lower versions may also work)

Getting Started

Installation

- Install the dependencies:

pip install scipy pillow torchvision scikit-learn h5py dominate visdom

- Clone this repo:

git clone https://github.com/yxgeee/FD-GAN

cd FD-GAN/
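To confirm that the packages installed above are importable before training, a small check like the following can help. It is a minimal sketch, not part of the repo: the helper name missing_packages is hypothetical, and the table maps each pip name to the module name actually imported (for example, pillow is imported as PIL and scikit-learn as sklearn).

```python
import importlib.util

# pip package name -> top-level module name used in `import` statements.
# Hypothetical helper table; torch is included because PyTorch is a prerequisite.
REQUIRED = {
    "torch": "torch",
    "scipy": "scipy",
    "pillow": "PIL",
    "torchvision": "torchvision",
    "scikit-learn": "sklearn",
    "h5py": "h5py",
    "dominate": "dominate",
    "visdom": "visdom",
}


def missing_packages(required=REQUIRED):
    """Return the pip names of packages whose modules cannot be located."""
    return [pip_name for pip_name, module in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages:", " ".join(missing))
    else:
        print("All dependencies found.")
```

Any name it reports can be passed straight to pip install.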

Datasets

We conduct experiments on the Market1501, DukeMTMC-reID, and CUHK03 datasets. Training requires pose landmarks for each dataset, so we generate the pose files with Realtime Multi-Person Pose Estimation. The raw datasets have been preprocessed with the code in open-reid. Download the prepared datasets with the following steps:

- Create directories for datasets:

mkdir datasets

cd datasets/

- Download the datasets through the links below and unzip them in the same root path.

Market1501: [Google Drive] [Baidu Pan]

DukeMTMC-reID: [Google Drive] [Baidu Pan]

CUHK03: [Google Drive] [Baidu Pan]
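If you prefer to script the extraction step, the sketch below unzips every archive placed directly in datasets/ into that same directory, which matches "unzip them in the same root path". It assumes the downloads are .zip files; the archive names are not given here, so the function simply globs for *.zip, and unzip_all is a hypothetical helper name.

```python
import zipfile
from pathlib import Path


def unzip_all(root="datasets"):
    """Extract every *.zip archive found directly under `root` into `root`.

    Returns the file names of the archives that were extracted.
    """
    root = Path(root)
    extracted = []
    for archive in sorted(root.glob("*.zip")):
        # Extract into the shared root so all datasets sit side by side.
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(root)
        extracted.append(archive.name)
    return extracted


if __name__ == "__main__":
    print(unzip_all("datasets"))
```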

Usage

As described in the paper, training our proposed framework consists of three stages.

Stage I: reID baseline pretraining

We use a Siamese baseline structure based on ResNet-50. You can train the model with the following command:

python baseline.py -b 256 -j 4 -d market1501 -a resnet50 --combine-trainval \
--lr 0.01 --epochs 100 --step-size 40 --eval-step 5 \
--logs-dir /path/to/save/checkpoints/

You can train it on specified GPUs by setting CUDA_VISIBLE_DEVICES, and change the dataset name [market1501|dukemtmc|cuhk03] after -d to train models on different datasets.
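The GPU and dataset choices above can also be driven from Python, which is convenient when looping over all three datasets. This is a sketch that only rebuilds the Stage I command shown above; the helper names baseline_command and run_on_gpus and the example log path are hypothetical. Setting CUDA_VISIBLE_DEVICES in the child environment is equivalent to prefixing the shell command with CUDA_VISIBLE_DEVICES=0,1.

```python
import os
import subprocess
import sys

# Dataset names accepted after -d, as listed in this README.
DATASETS = ("market1501", "dukemtmc", "cuhk03")


def baseline_command(dataset, logs_dir):
    """Build the Stage I training command for one dataset."""
    if dataset not in DATASETS:
        raise ValueError(f"dataset must be one of {DATASETS}, got {dataset!r}")
    return [
        sys.executable, "baseline.py", "-b", "256", "-j", "4",
        "-d", dataset, "-a", "resnet50", "--combine-trainval",
        "--lr", "0.01", "--epochs", "100", "--step-size", "40",
        "--eval-step", "5", "--logs-dir", logs_dir,
    ]


def run_on_gpus(cmd, gpus):
    """Run `cmd` with only the listed GPU ids visible, e.g. gpus="0,1"."""
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=gpus)
    return subprocess.run(cmd, env=env, check=True)


# Example (not executed here):
# run_on_gpus(baseline_command("dukemtmc", "logs/duke_baseline"), "0,1,2,3")
```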

Alternatively, you can download the pretrained baseline models directly from the links below:

- Market1501_baseline_model: [Google Drive] [Baidu Pan]

- DukeMTMC_baseline_model: [Google Drive] [Baidu Pan]

- CUHK03_baseline_model: [Google Drive] [Baidu Pan]

and test them with the following command:

python baseline.py -b 256 -d market1501 -a resnet50 --evaluate --resume /path/of/model_best.pth.tar
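Person re-ID models are usually reported with CMC rank-k accuracy and mean average precision (mAP), both computed by ranking gallery features by distance to each query feature. The sketch below illustrates those two quantities for a single query; it is a simplified illustration rather than the evaluation code used by baseline.py (which builds on open-reid), and it ignores the same-camera filtering that standard Market1501-style protocols apply. mAP is the mean of the per-query AP values.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rank_gallery(query_feat, gallery_feats):
    """Return gallery indices sorted from nearest to farthest."""
    return sorted(range(len(gallery_feats)),
                  key=lambda i: euclidean(query_feat, gallery_feats[i]))


def rank1_and_ap(query_feat, query_id, gallery_feats, gallery_ids):
    """Rank-1 hit (True/False) and average precision for one query.

    Assumes a non-empty gallery; camera ids are ignored in this sketch.
    """
    order = rank_gallery(query_feat, gallery_feats)
    matches = [gallery_ids[i] == query_id for i in order]
    hits, precisions = 0, []
    for rank, is_match in enumerate(matches, start=1):
        if is_match:
            hits += 1
            precisions.append(hits / rank)  # precision at each correct match
    ap = sum(precisions) / len(precisions) if precisions else 0.0
    return matches[0], ap
```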

Stage II: FD-GAN pretraining

We first pretrain FD-GAN with the image encoder (E in the original paper and net_E in the code) fixed. You can train the model with the following command:

python train.py --display-port 6006 --display-id 1 \
--stage 1 -d market1501 --name /directory/name/of/saving/checkpoints/ \
--pose-aug gauss -b 256 -j 4 --niter 50 --niter-decay 50 --lr 0.001 --save-step 10 \
--lambda-recon 100.0 --lambda-veri 0.0 --lambda-sp 10.0 --smooth-label \
--netE-pretrain /path/of/model_best.pth.tar
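The --lambda-recon, --lambda-veri and --lambda-sp flags weight the reconstruction, verification and same-pose loss terms. Assuming they enter a plain weighted sum added to the adversarial terms (a simplification; see options.py and the paper for the exact objective), the sketch below shows why --lambda-veri 0.0 in Stage II removes the verification term, consistent with the encoder being fixed, while the Stage III setting of 10.0 brings it back. The function total_loss and the loss values are hypothetical.

```python
# Weights taken from the Stage II and Stage III commands in this README.
STAGE_II = {"recon": 100.0, "veri": 0.0, "sp": 10.0}
STAGE_III = {"recon": 100.0, "veri": 10.0, "sp": 10.0}


def total_loss(adv_loss, losses, lambdas):
    """Hypothetical combination: adversarial term + sum of lambda_k * loss_k."""
    return adv_loss + sum(lambdas[k] * losses[k] for k in lambdas)


# Made-up per-term loss values, for illustration only.
example = {"recon": 0.02, "veri": 0.5, "sp": 0.1}
stage2 = total_loss(1.0, example, STAGE_II)   # verification term contributes 0
stage3 = total_loss(1.0, example, STAGE_III)  # verification term now weighted by 10
```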

You can train it on specified GPUs by setting CUDA_VISIBLE_DEVICES. The main arguments are:

- --display-port: display port of visdom, e.g., you can visualize the results at localhost:6006.

- --display-id: set to 0 to disable visdom.

- --stage: set to 1 for Stage II and to 2 for Stage III.

- --pose-aug: choose from [no|erase|gauss] to apply augmentation to the pose maps.

- --smooth-label: whether to smooth the labels of the GAN loss.
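One common form of label smoothing for GAN discriminators is one-sided smoothing, where the target for real samples is lowered from 1.0 to a value such as 0.9 while fake targets stay at 0.0. The sketch below illustrates that idea with binary cross-entropy; whether --smooth-label uses this exact scheme, and the 0.9 value, are assumptions, so check the repo source for the actual behavior. The helpers bce and gan_target are hypothetical.

```python
import math


def bce(prob, target, eps=1e-12):
    """Binary cross-entropy for a single predicted probability."""
    prob = min(max(prob, eps), 1.0 - eps)  # avoid log(0)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def gan_target(is_real, smooth_label=False, real_value=0.9):
    """Discriminator target; assumed one-sided smoothing when enabled."""
    if not is_real:
        return 0.0
    return real_value if smooth_label else 1.0
```

With smoothing enabled, an overconfident prediction of 0.99 on a real sample incurs a larger loss than without smoothing, which discourages the discriminator from saturating.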

Other arguments are documented in options.py. Alternatively, you can directly download the Stage II models:

- Market1501_stageII_model: [Google Drive] [Baidu Pan]

- DukeMTMC_stageII_model: [Google Drive] [Baidu Pan]

- CUHK03_stageII_model: [Google Drive] [Baidu Pan]

Each directory contains four models, one for each network (net_E, net_G, net_Di and net_Dp).

Notice:

If you use visdom for visualization by setting --display-id 1, you need to start the visdom server in a separate terminal with python -m visdom.server -port=6006 before running the main program; the -port value must match --display-port.
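A mismatch between the visdom server port and --display-port is easy to miss, so a launcher script can build both from a single value and check them. The sketch below is hypothetical (the helpers visdom_command, start_visdom and check_ports_match are not part of the repo); it only assembles the server command from the note above and compares it with the training arguments.

```python
import subprocess
import sys


def visdom_command(port):
    """Command that starts the visdom server on `port`."""
    return [sys.executable, "-m", "visdom.server", f"-port={port}"]


def start_visdom(port):
    """Start the visdom server in the background; call .terminate() when done."""
    return subprocess.Popen(visdom_command(port))


def check_ports_match(server_cmd, train_args):
    """True if the server's -port value equals train.py's --display-port value."""
    server_port = next(a.split("=", 1)[1] for a in server_cmd
                       if a.startswith("-port="))
    display_port = train_args[train_args.index("--display-port") + 1]
    return server_port == display_port
```

Usage: check_ports_match(visdom_command(6006), ["--display-port", "6006", "--display-id", "1"]) returns True, after which start_visdom(6006) can be launched before train.py.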

Stage III: Global finetuning

Finetune the whole framework by optimizing all parts. You can train the model with the following command:

python train.py --display-port 6006 --display-id 1 \
--stage 2 -d market1501 --name /directory/name/of/saving/checkpoints/ \
--pose-aug gauss -b 256 -j 4 --niter 25 --niter-decay 25 --lr 0.0001 --save-step 10 --eval-step 5 \
--lambda-recon 100.0 --lambda-veri 10.0 --lambda-sp 10.0 --smooth-label \
--netE-pretrain /path/of/100_net_E.pth --netG-pretrain /path/of/100_net_G.pth \
--netDi-pretrain /path/of/100_net_Di.pth --netDp-pretrain /path/of/100_net_Dp.pth
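The four --net*-pretrain flags each load one of the Stage II networks. The 100_ prefix presumably corresponds to the last Stage II epoch (--niter 50 plus --niter-decay 50). The sketch below builds these arguments from a single checkpoint directory; the helper pretrain_flags and the directory name are hypothetical, and the file naming <epoch>_net_<name>.pth is assumed from the command above.

```python
from pathlib import Path

# Stage II network names and the train.py flags that load them.
NETS = {"E": "--netE-pretrain", "G": "--netG-pretrain",
        "Di": "--netDi-pretrain", "Dp": "--netDp-pretrain"}


def pretrain_flags(ckpt_dir, epoch=100):
    """Build the --net*-pretrain arguments for Stage III.

    Assumes checkpoints are named <epoch>_net_<name>.pth inside ckpt_dir.
    """
    args = []
    for name, flag in NETS.items():
        path = Path(ckpt_dir) / f"{epoch}_net_{name}.pth"
        args += [flag, str(path)]
    return args
```

The returned list can be appended to the rest of the Stage III arguments when launching train.py from a script.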

You can train it on specified GPUs by setting CUDA_VISIBLE_DEVICES.

We trained this model with a batch size of 256. If your hardware cannot accommodate this, you may decrease the batch size, although performance may drop.

Alternatively, you can directly download our final models:

- Market1501_stageIII_model: [Google Drive] [Baidu Pan]

- DukeMTMC_stageIII_model: [Google Drive] [Baidu Pan]

- CUHK03_stageIII_model: [Google Drive] [Baidu Pan]

Test best_net_E.pth in the same way as described in Stage I.

TODO

- scripts for generating pose landmarks.

- generating specified images.

Citation

Please cite our paper if you find the code useful for your research.

@inproceedings{ge2018fd,
title={FD-GAN: Pose-guided Feature Distilling GAN for Robust Person Re-identification},
author={Ge, Yixiao and Li, Zhuowan and Zhao, Haiyu and Yin, Guojun and Yi, Shuai and Wang, Xiaogang and Li, Hongsheng},
booktitle={Advances in Neural Information Processing Systems},
pages={1229--1240},
year={2018}
}

Acknowledgements

Our code is inspired by pytorch-CycleGAN-and-pix2pix and open-reid.