zhongyuchen / Few Shot Text Classification

Licence: apache-2.0

Few-shot binary text classification with Induction Networks and Word2Vec weights initialization

Stars: ✭ 32

Programming Languages

python

139335 projects - #7 most used programming language

Projects that are alternatives of or similar to Few Shot Text Classification

Shallowlearn

An experiment about re-implementing supervised learning models based on shallow neural network approaches (e.g. fastText) with some additional exclusive features and nice API. Written in Python and fully compatible with Scikit-learn.

Stars: ✭ 196 (+512.5%)

Mutual labels: text-classification, word2vec

text-classification-cn

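Chinese text classification in practice, based on the Sogou News corpus, using traditional machine learning methods as well as pretrained models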

Stars: ✭ 81 (+153.13%)

Mutual labels: text-classification, word2vec

Meta Learning Bert

Meta learning with BERT as a learner

Stars: ✭ 52 (+62.5%)

Mutual labels: meta-learning, text-classification

Ml Projects

ML based projects such as Spam Classification, Time Series Analysis, Text Classification using Random Forest, Deep Learning, Bayesian, Xgboost in Python

Stars: ✭ 127 (+296.88%)

Mutual labels: text-classification, word2vec

Nlp Projects

word2vec, sentence2vec, machine reading comprehension, dialog system, text classification, pretrained language model (i.e., XLNet, BERT, ELMo, GPT), sequence labeling, information retrieval, information extraction (i.e., entity, relation and event extraction), knowledge graph, text generation, network embedding

Stars: ✭ 360 (+1025%)

Mutual labels: text-classification, word2vec

Text Pairs Relation Classification

About Text Pairs (Sentence Level) Classification (Similarity Modeling) Based on Neural Network.

Stars: ✭ 182 (+468.75%)

Mutual labels: text-classification, word2vec

sarcasm-detection-for-sentiment-analysis

Sarcasm Detection for Sentiment Analysis

Stars: ✭ 21 (-34.37%)

Mutual labels: text-classification, word2vec

MetaLifelongLanguage

Repository containing code for the paper "Meta-Learning with Sparse Experience Replay for Lifelong Language Learning".

Stars: ✭ 21 (-34.37%)

Mutual labels: text-classification, meta-learning

Text rnn attention

RNN+ATTENTION Chinese text classification with embedded Word2vec word vectors

Stars: ✭ 117 (+265.63%)

Mutual labels: text-classification, word2vec

Lightnlp

A deep learning framework for natural language processing based on PyTorch and torchtext.

Stars: ✭ 739 (+2209.38%)

Mutual labels: text-classification, word2vec

Vaaku2Vec

Language Modeling and Text Classification in Malayalam Language using ULMFiT

Stars: ✭ 68 (+112.5%)

Mutual labels: text-classification, word2vec

Product-Categorization-NLP

Multi-Class Text Classification for products based on their description with Machine Learning algorithms and Neural Networks (MLP, CNN, Distilbert).

Stars: ✭ 30 (-6.25%)

Mutual labels: text-classification, word2vec

Nlp chinese corpus

Large-scale Chinese natural language processing corpus (Large Scale Chinese Corpus for NLP)

Stars: ✭ 6,656 (+20700%)

Mutual labels: text-classification, word2vec

Nlp In Practice

Starter code to solve real world text data problems. Includes: Gensim Word2Vec, phrase embeddings, Text Classification with Logistic Regression, word count with pyspark, simple text preprocessing, pre-trained embeddings and more.

Stars: ✭ 790 (+2368.75%)

Mutual labels: text-classification, word2vec

Concise Ipython Notebooks For Deep Learning

Ipython Notebooks for solving problems like classification, segmentation, generation using latest Deep learning algorithms on different publicly available text and image data-sets.

Stars: ✭ 23 (-28.12%)

Mutual labels: text-classification

Eda nlp

Data augmentation for NLP, presented at EMNLP 2019

Stars: ✭ 902 (+2718.75%)

Mutual labels: text-classification

few-shot-text-classification


Reference

This is a PyTorch implementation of the IJCNLP 2019 paper Induction Networks for Few-Shot Text Classification.

Few-shot Classification

- Few-shot classification is a task in which a classifier must be adapted to accommodate new classes not seen in training, given only a few examples of each of these new classes.

- There is a large labeled training set with a set of classes. However, after training, the ultimate goal is to produce classifiers on the test set (a disjoint set of new classes), for which only a small labeled support set will be available.

- If the support set contains K labeled examples for each of the C unique classes, the target few-shot problem is called a C-way K-shot problem.

- Usually, K is too small to train a supervised classification model. Therefore, meta-learning on the training set is necessary to extract transferable knowledge that helps classify the test set more successfully.
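To make the C-way K-shot setup concrete, here is a minimal Python sketch of sampling one episode (a support set plus a query set) from labeled (text, label) pairs. The function name `sample_episode` and the `n_query` parameter are illustrative assumptions, not part of this repository.

```python
import random
from collections import defaultdict


def sample_episode(examples, n_way, k_shot, n_query, rng=random):
    """Sample one C-way K-shot episode from (text, label) pairs.

    Returns (support, query): lists of (text, label) pairs. The support set
    has k_shot examples per class, the query set has n_query examples per
    class, and no example appears in both.
    """
    by_label = defaultdict(list)
    for text, label in examples:
        by_label[label].append(text)
    # Pick the C classes used in this episode.
    classes = rng.sample(sorted(by_label), n_way)
    support, query = [], []
    for label in classes:
        # Draw K + Q distinct examples, then split them into support and query.
        picked = rng.sample(by_label[label], k_shot + n_query)
        support += [(t, label) for t in picked[:k_shot]]
        query += [(t, label) for t in picked[k_shot:]]
    return support, query
```

For ARSC every task is binary, so `n_way=2` and `k_shot=5`; the query size per class is a free choice of the batching scheme.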

Prerequisites

- Install the required packages with:

```
pip install -r requirements.txt
```

Parameters

- All the parameters are defined in `config.ini`.

- `configparser` is used to parse the parameters.
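A minimal sketch of reading typed parameters with Python's standard `configparser`. The section and key names below are hypothetical placeholders; the actual `config.ini` in the repository may use different ones.

```python
import configparser

# Hypothetical config contents; the real config.ini may differ.
EXAMPLE_CONFIG = """
[data]
support = 5
query = 27

[model]
hidden_size = 128
"""

config = configparser.ConfigParser()
# A script would normally call config.read("config.ini") instead.
config.read_string(EXAMPLE_CONFIG)

support = config.getint("data", "support")          # section/option access
hidden_size = config["model"].getint("hidden_size")  # mapping-style access
print(support, hidden_size)  # 5 128
```

`configparser` stores every value as a string, so the typed getters (`getint`, `getfloat`, `getboolean`) do the conversion.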

Dataset: Amazon Review Sentiment Classification (ARSC)

This dataset comes from the NAACL 2018 paper Diverse Few-Shot Text Classification with Multiple Metrics.

- The dataset comprises English reviews for 23 types of products on Amazon.

- For each product domain, there are three different binary classification tasks, giving 23 x 3 = 69 tasks in total.

- 4 x 3 = 12 tasks from 4 test domains (Books, DVD, Electronics and Kitchen) are selected as the test set, and each test task provides only 5 support examples per label. The other 19 domains are train domains.

| Train Tasks | Dev Tasks | Test Tasks |
|---|---|---|
| 19 * 3 = 57 | 4 * 3 = 12 | 4 * 3 = 12 |

- Therefore, a 2-way 5-shot learning model is needed to classify this dataset.
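The task counts in the table follow from simple arithmetic; a small check using only the numbers stated above (the variable names are illustrative):

```python
# Domain and task counts for ARSC as described above.
TASKS_PER_DOMAIN = 3
TOTAL_DOMAINS = 23
TEST_DOMAINS = ["Books", "DVD", "Electronics", "Kitchen"]

train_domains = TOTAL_DOMAINS - len(TEST_DOMAINS)     # 19
total_tasks = TOTAL_DOMAINS * TASKS_PER_DOMAIN        # 69
train_tasks = train_domains * TASKS_PER_DOMAIN        # 57
test_tasks = len(TEST_DOMAINS) * TASKS_PER_DOMAIN     # 12 (dev uses the same 12 tasks)

# Each test task is binary with 5 support examples per label: 2-way 5-shot.
N_WAY, K_SHOT = 2, 5
print(total_tasks, train_tasks, test_tasks, N_WAY * K_SHOT)  # 69 57 12 10
```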

Download

- Download Gorov/DiverseFewShot_Amazon

- The text files are in the `Amazon_few_shot` folder.

Process

```
python data.py
```

- Data for train, dev and test:
  - Train data: `*.train` files in the train domains.
  - Dev data: `*.train` files in the test domains as support data and `*.dev` files in the test domains as query data.
  - Test data: `*.train` files in the test domains as support data and `*.test` files in the test domains as query data.

- Train data:
  - Pre-process all the text data.
  - Extract the vocabulary from the train data. The vocabulary has a size of 35913, with index 0 as `<pad>` and index 1 as `<unk>`.
  - Index all the texts with the vocabulary.

- Training batch composition: 5 negative support examples + 5 positive support examples + 27 negative query examples + 27 positive query examples. In each batch, the examples are randomly divided into support data and query data. In this 2-way 5-shot problem, "2-way" refers to the number of labels (negative/positive) and "5-shot" refers to the number of support examples per label.

- Dev batch composition: 5 negative support examples + 5 positive support examples + 54 query examples. There are 183 batches in total.

- Test batch composition: 5 negative support examples + 5 positive support examples + 54 query examples. There are 181 batches in total.

- In every case, the batch size is 64 (10 support + 54 query examples).
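A minimal sketch of the vocabulary and training-batch steps above. Whitespace tokenization, the most-frequent-first token order, the `max_len` padding length and the label encoding (0 = negative, 1 = positive) are assumptions for illustration; `data.py` may do these differently.

```python
import random
from collections import Counter

PAD, UNK = "<pad>", "<unk>"


def build_vocab(train_texts):
    """Map tokens to ids, reserving 0 for <pad> and 1 for <unk>."""
    counts = Counter(tok for text in train_texts for tok in text.split())
    vocab = {PAD: 0, UNK: 1}
    for tok, _ in counts.most_common():
        vocab[tok] = len(vocab)
    return vocab


def index_text(text, vocab, max_len):
    """Convert a text to a fixed-length list of ids (truncate, then pad)."""
    ids = [vocab.get(tok, vocab[UNK]) for tok in text.split()][:max_len]
    return ids + [vocab[PAD]] * (max_len - len(ids))


def training_batch(neg, pos, k_shot=5, q_per_class=27, rng=random):
    """Compose one 64-example training batch: 5+5 support, 27+27 query.

    Examples of each class are sampled at random, so which examples become
    support data and which become query data changes from batch to batch.
    """
    neg_pick = rng.sample(neg, k_shot + q_per_class)
    pos_pick = rng.sample(pos, k_shot + q_per_class)
    support = neg_pick[:k_shot] + pos_pick[:k_shot]
    support_labels = [0] * k_shot + [1] * k_shot
    query = neg_pick[k_shot:] + pos_pick[k_shot:]
    query_labels = [0] * q_per_class + [1] * q_per_class
    return support, support_labels, query, query_labels
```

Dev and test batches would keep the same 10 support examples layout but use 54 query examples drawn from the `*.dev` or `*.test` files.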

Word2Vec

```
python word2vec.py
```

- Training is hard without initializing the embedding weights from Word2Vec, so Word2Vec initialization is necessary.

- Train a Word2Vec model on the texts in the train data (95584 texts in total).

- Get the weights of the Word2Vec model.

- Load the weights into the encoder module.
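One way to turn trained word vectors into an embedding matrix aligned with the vocabulary, sketched with NumPy. Initializing rows for tokens missing from Word2Vec (including `<unk>`) with small random values and keeping `<pad>` at zero are assumptions, not necessarily what `word2vec.py` does.

```python
import numpy as np


def embedding_matrix(vocab, word_vectors, dim, seed=0):
    """Build a (len(vocab), dim) matrix to initialize the embedding layer.

    vocab: dict token -> id, with "<pad>" present (id 0 in this project).
    word_vectors: any mapping supporting `tok in wv` and `wv[tok]`, e.g. a
    gensim KeyedVectors (`Word2Vec(...).wv`) or a plain dict of arrays.
    """
    rng = np.random.default_rng(seed)
    # Tokens without a Word2Vec vector (including <unk>) get small random vectors.
    weights = rng.normal(scale=0.1, size=(len(vocab), dim)).astype(np.float32)
    weights[vocab["<pad>"]] = 0.0  # padding stays a zero vector
    for tok, idx in vocab.items():
        if tok in word_vectors:
            weights[idx] = word_vectors[tok]  # copy the pretrained vector
    return weights
```

With PyTorch, the resulting array could then be loaded with `torch.nn.Embedding.from_pretrained(torch.from_numpy(weights), freeze=False, padding_idx=0)`, so the pretrained vectors are fine-tuned along with the rest of the encoder.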

Model

- Encoder Module: bidirectional recurrent neural network with self-attention.

- Induction Module: dynamic routing induction algorithm.

- Relation Module: measures the correlation between each query-class pair and outputs the relation scores.

- See the paper cited in the Reference section above for details.
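A NumPy sketch of the induction and relation modules, following our reading of the Induction Networks paper: support encodings are linearly transformed and squashed, dynamic routing repeats softmax coupling, a weighted sum, squashing and an agreement update, and the relation module is a neural tensor layer with ReLU followed by a sigmoid. The weight shapes, the number of routing iterations and all function names are assumptions; the encoder is omitted, so any (K, d) array can stand in for its outputs.

```python
import numpy as np


def squash(v, axis=-1, eps=1e-9):
    """Capsule squash: keeps the direction of v and maps its norm into [0, 1)."""
    sq_norm = np.sum(v * v, axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * v / np.sqrt(sq_norm + eps)


def induce_class_vector(support, W, b, iterations=3):
    """Dynamic routing over the K support encodings (K, d) of one class.

    Returns one class vector of shape (d,).
    """
    e_hat = squash(support @ W.T + b)      # transformed sample vectors, (K, d)
    logits = np.zeros(len(support))        # routing logits, start at 0
    for _ in range(iterations):
        coupling = np.exp(logits - logits.max())
        coupling /= coupling.sum()         # softmax over the K support samples
        c = squash(coupling @ e_hat)       # weighted sum, then squash
        logits = logits + e_hat @ c        # agreement between samples and class
    return c


def relation_scores(class_vecs, queries, M, W_r, b_r):
    """Neural tensor layer + sigmoid, scoring every (query, class) pair.

    class_vecs: (C, d), queries: (Q, d), M: (h, d, d), W_r: (h,), b_r: float.
    Returns scores of shape (Q, C) in [0, 1].
    """
    # v[q, c, k] = class_c^T M[k] query_q, followed by ReLU
    v = np.maximum(np.einsum("cd,kde,qe->qck", class_vecs, M, queries), 0.0)
    return 1.0 / (1.0 + np.exp(-(v @ W_r + b_r)))
```

In a 2-way episode, `induce_class_vector` would run once per class on its 5 support encodings, and each query would be assigned the class with the highest relation score.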

Train, Dev and Test

```
export CUDA_VISIBLE_DEVICES=1
python main.py
```

- Training Strategy: episode-based meta training

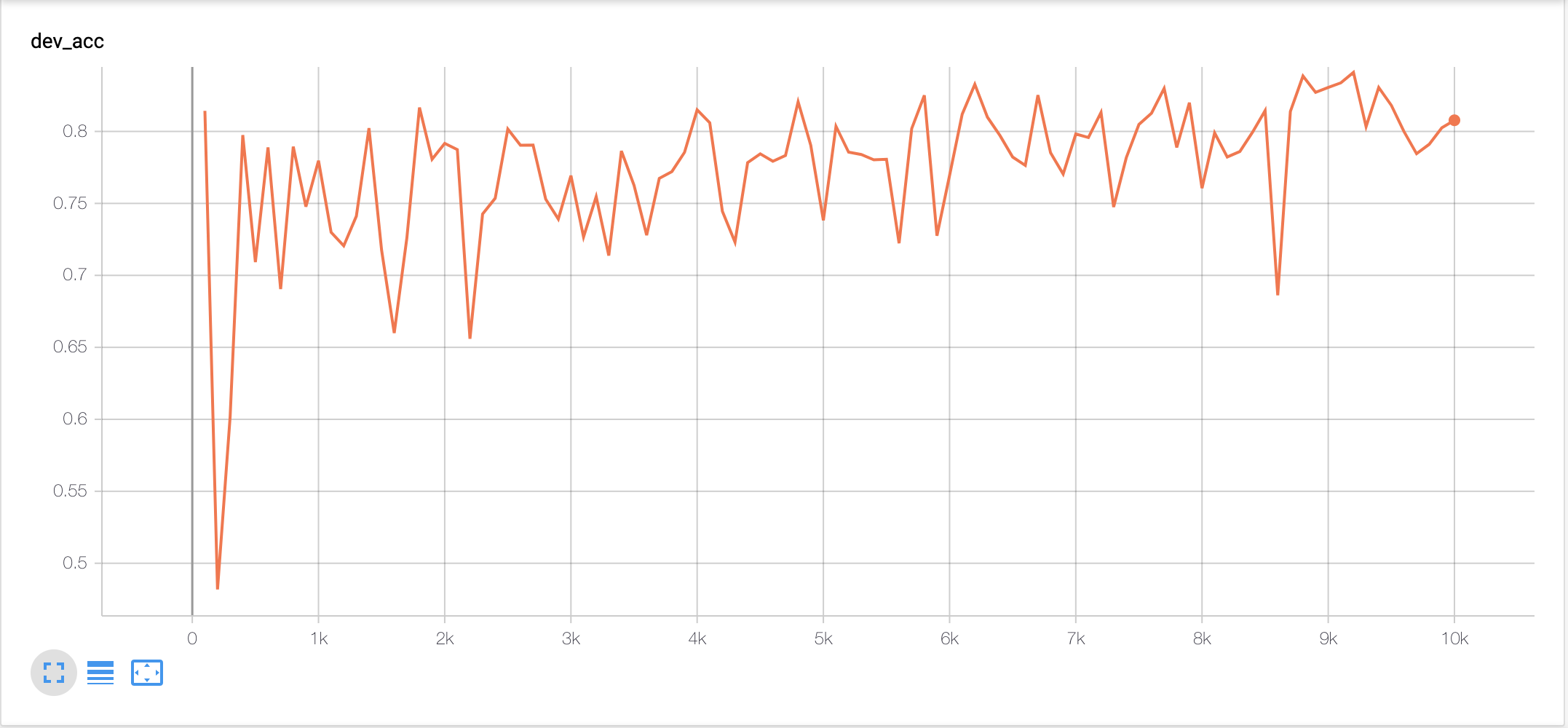

- Evaluate on the dev set during training and record the dev accuracy.

- Pick the checkpoint with the highest dev accuracy as the best model and evaluate it on the test set.

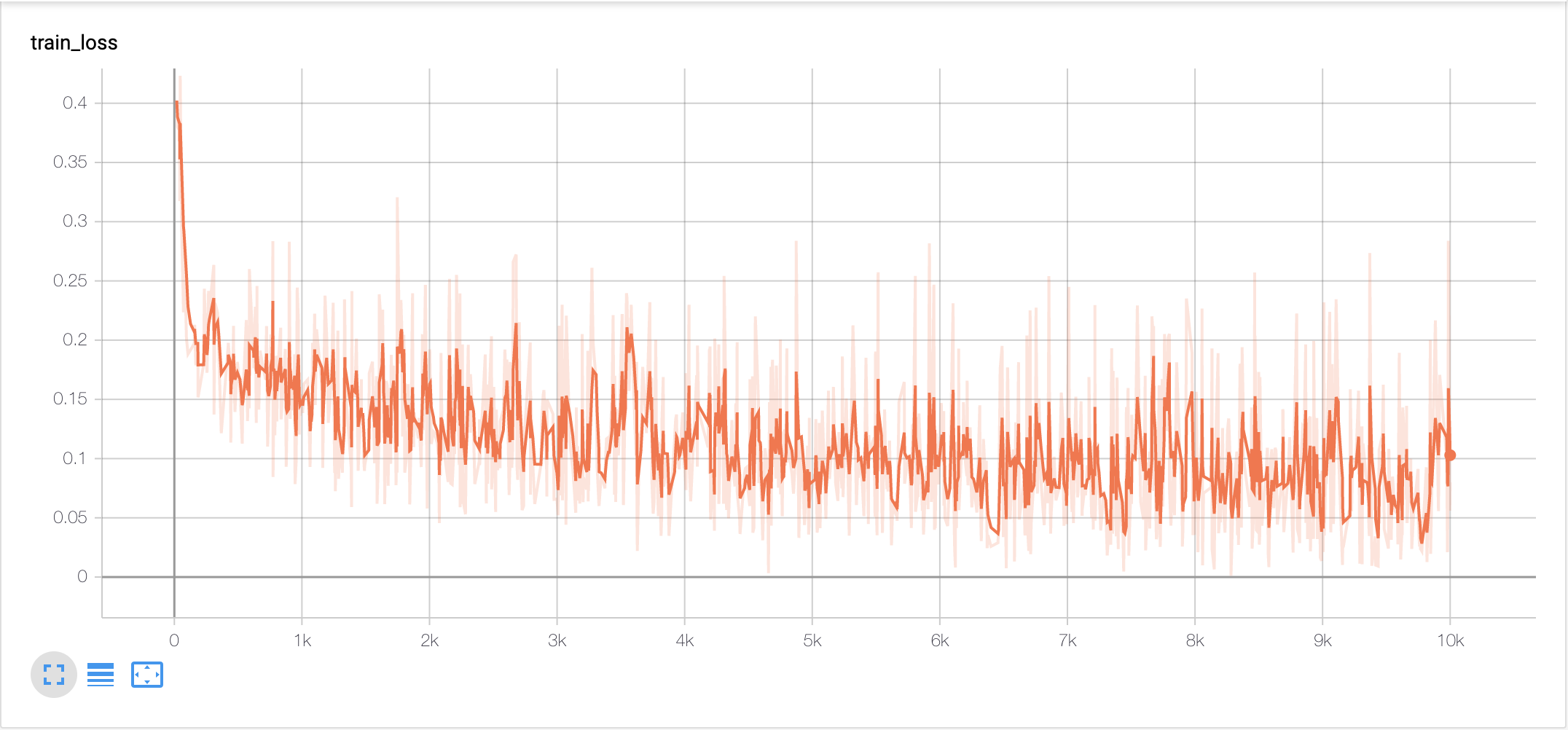

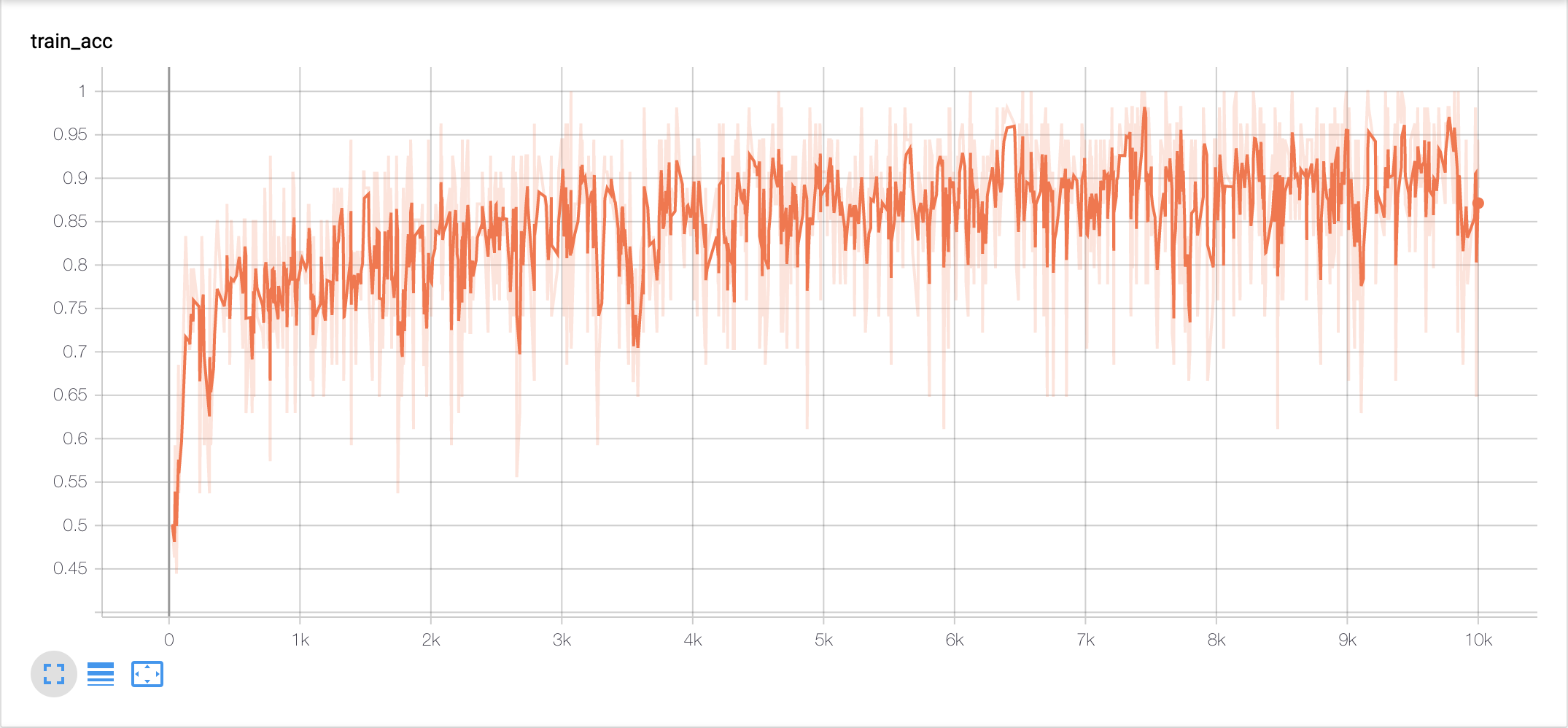
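Episode-based training with dev-based checkpoint selection can be sketched as a plain loop. `train_episode` and `evaluate_dev` are hypothetical callables standing in for the real code in `main.py`; a PyTorch model would typically save a copy of `model.state_dict()` rather than deep-copying an arbitrary object.

```python
import copy


def train_with_dev_selection(model_state, train_episode, evaluate_dev,
                             episodes, dev_every):
    """Episode-based training that keeps the checkpoint with the best dev accuracy.

    train_episode(state, episode) -> new state  (one meta-training episode)
    evaluate_dev(state) -> accuracy over all dev batches
    """
    best_acc, best_episode, best_state = -1.0, None, None
    history = []
    state = model_state
    for episode in range(1, episodes + 1):
        state = train_episode(state, episode)
        if episode % dev_every == 0:
            acc = evaluate_dev(state)
            history.append((episode, acc))       # record dev accuracy
            if acc > best_acc:                   # new best checkpoint
                best_acc, best_episode = acc, episode
                best_state = copy.deepcopy(state)
    return best_state, best_episode, best_acc, history
```

Only the returned `best_state` is evaluated on the test batches, which keeps the test set out of model selection.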

Result on ARSC

- tensorboard

```
tensorboard --logdir=log/
```

- Results:

Figures: Train Loss, Train Accuracy.

| Dev Accuracy (best, at episode 9200) | Test Accuracy (at episode 9200) | Test Accuracy (paper) |
|---|---|---|
| 0.8410 | 0.8452 | 0.8563 |

Author

Zhongyu Chen
