mykolaharmash / Hyntax

Hyntax

Straightforward HTML parser for JavaScript. Live Demo.

- Simple. API is straightforward, output is clear.

- Forgiving. Like a browser, it parses invalid HTML instead of rejecting it.

- Supports streaming. Can process HTML while it's still being loaded.

- No dependencies.

Usage

npm install hyntax

    const { tokenize, constructTree } = require('hyntax')
    const util = require('util')

    const inputHTML = `
    <html>
      <body>
        <input type="text" placeholder="Don't type">
        <button>Don't press</button>
      </body>
    </html>
    `

    const { tokens } = tokenize(inputHTML)
    const { ast } = constructTree(tokens)

    console.log(JSON.stringify(tokens, null, 2))
    console.log(util.inspect(ast, { showHidden: false, depth: null }))

TypeScript Typings

Hyntax is written in JavaScript but ships with integrated TypeScript typings to help you navigate its data structures. There is also a Types Reference that covers the most common types.

Streaming

Use the StreamTokenizer and StreamTreeConstructor classes to parse HTML chunk by chunk while it is still being loaded from the network or read from disk.

    const { StreamTokenizer, StreamTreeConstructor } = require('hyntax')
    const http = require('http')
    const util = require('util')

    http.get('http://info.cern.ch', (res) => {
      const streamTokenizer = new StreamTokenizer()
      const streamTreeConstructor = new StreamTreeConstructor()

      let resultTokens = []
      let resultAst

      res.pipe(streamTokenizer).pipe(streamTreeConstructor)

      streamTokenizer
        .on('data', (tokens) => {
          resultTokens = resultTokens.concat(tokens)
        })
        .on('end', () => {
          console.log(JSON.stringify(resultTokens, null, 2))
        })

      streamTreeConstructor
        .on('data', (ast) => {
          resultAst = ast
        })
        .on('end', () => {
          console.log(util.inspect(resultAst, { showHidden: false, depth: null }))
        })
    }).on('error', (err) => {
      throw err
    })

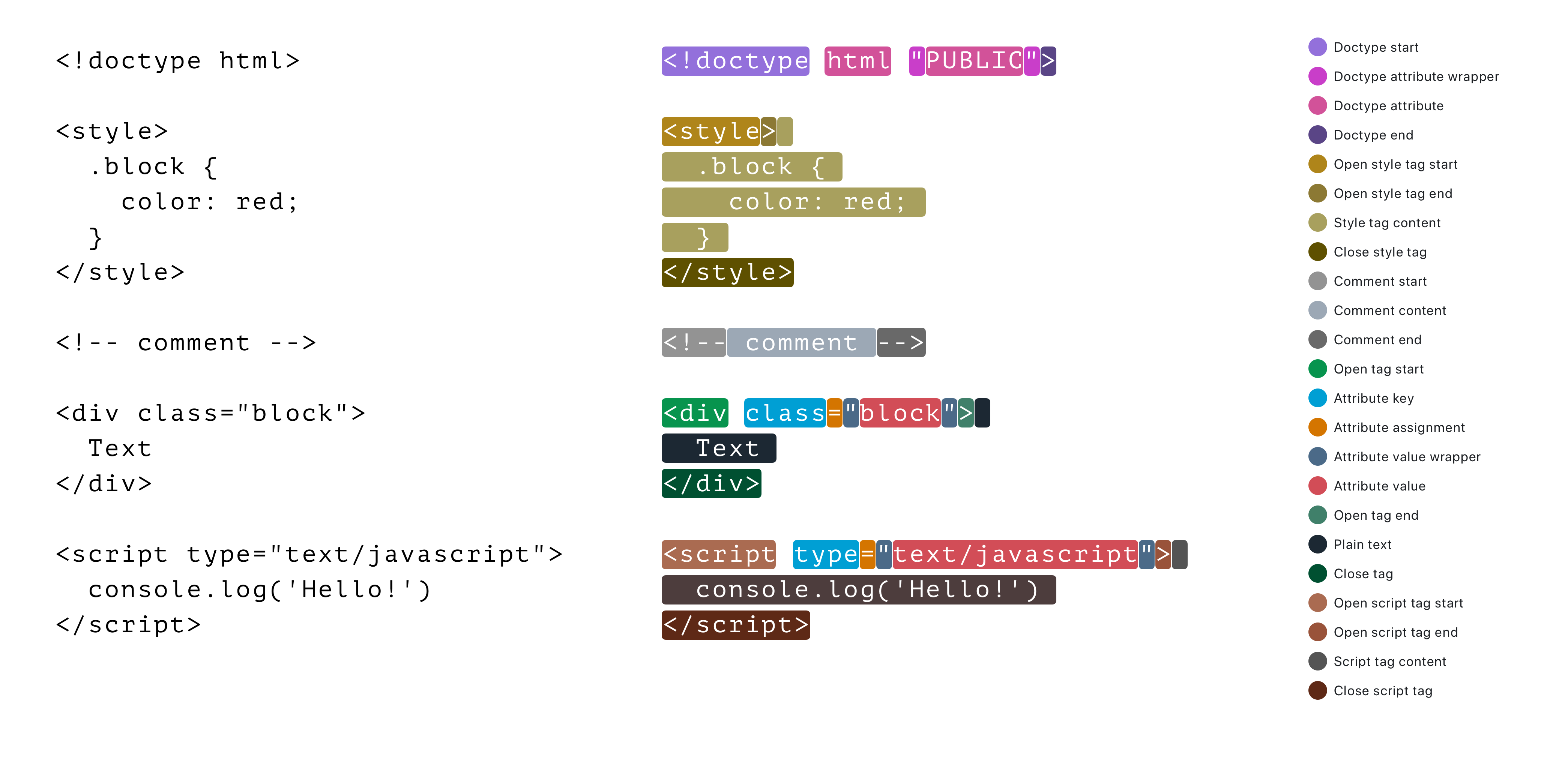

Tokens

Hyntax extracts the kinds of tokens listed under Tokenizer.TokenTypes.AnyTokenType from an HTML string.

Each token conforms to the Tokenizer.Token interface.

AST Format

The resulting syntax tree always has one top-level Document node, with optional child nodes nested within it.

    {
      nodeType: TreeConstructor.NodeTypes.Document,
      content: {
        children: [
          {
            nodeType: TreeConstructor.NodeTypes.AnyNodeType,
            content: {…}
          },
          {
            nodeType: TreeConstructor.NodeTypes.AnyNodeType,
            content: {…}
          }
        ]
      }
    }

The content of each node is specific to the node's type. All of them are described in the AST Node Types reference.
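As a rough illustration of this shape, the sketch below walks a tree by following content.children and counts nodes per nodeType. The sample tree is hand-written, and its nodeType strings ('document', 'tag', 'text') are placeholders assumed for the example, not values confirmed by this document. countNodeTypes is a hypothetical helper, not part of Hyntax.

```javascript
// Hypothetical helper: counts nodes per nodeType by following content.children.
function countNodeTypes (node, counts = {}) {
  counts[node.nodeType] = (counts[node.nodeType] || 0) + 1

  // Not every node carries children, so fall back to an empty list.
  const children = (node.content && node.content.children) || []
  for (const child of children) {
    countNodeTypes(child, counts)
  }

  return counts
}

// Hand-written sample; the nodeType strings are assumed placeholders.
const sampleAst = {
  nodeType: 'document',
  content: {
    children: [
      {
        nodeType: 'tag',
        content: {
          name: 'body',
          children: [
            { nodeType: 'text', content: {} },
            { nodeType: 'tag', content: { name: 'button' } }
          ]
        }
      }
    ]
  }
}

console.log(countNodeTypes(sampleAst))
```

With a real tree, the same function could be called on the ast value returned by constructTree.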

API Reference

Tokenizer

Hyntax has its tokenizer as a separate module. You can use generated tokens on their own or pass them further to a tree constructor to build an AST.

Interface

tokenize(html: string): Tokenizer.Result

Arguments

- html
  HTML string to process.
  Required.
  Type: string.

Returns Tokenizer.Result

Tree Constructor

Once you have an array of tokens, you can pass it to the tree constructor to build an AST.

Interface

constructTree(tokens: Tokenizer.AnyToken[]): TreeConstructor.Result

Arguments

- tokens
  Array of tokens received from the tokenizer.
  Required.
  Type: Tokenizer.AnyToken[]

Returns TreeConstructor.Result

Types Reference

Tokenizer.Result

    interface Result {
      state: Tokenizer.State
      tokens: Tokenizer.AnyToken[]
    }

- state
  The current state of the tokenizer. It can be persisted and passed to the next tokenizer call if the input is coming in chunks.

- tokens
  Array of resulting tokens.
  Type: Tokenizer.AnyToken[]
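The idea of carrying state between calls can be sketched as follows. This is only an illustration under assumptions: it assumes the tokenizer accepts the previous state as a second argument, which the Interface section above does not show, so the tokenizer is passed in as a parameter and a stand-in (fakeTokenize) is used to keep the sketch runnable without Hyntax. tokenizeInChunks and fakeTokenize are hypothetical names.

```javascript
// Hypothetical helper. `tokenizeFn` is assumed to accept (chunk, previousState)
// and to return { state, tokens }, mirroring Tokenizer.Result.
function tokenizeInChunks (tokenizeFn, chunks) {
  let state
  let tokens = []

  for (const chunk of chunks) {
    const result = tokenizeFn(chunk, state)
    state = result.state // persist the state for the next call
    tokens = tokens.concat(result.tokens)
  }

  return { state, tokens }
}

// Stand-in tokenizer, not Hyntax: it emits one fake token per chunk
// and counts how many times it was called.
function fakeTokenize (chunk, state = { calls: 0 }) {
  return {
    state: { calls: state.calls + 1 },
    tokens: [{ type: 'fake', content: chunk }]
  }
}

const chunked = tokenizeInChunks(fakeTokenize, ['<bo', 'dy>'])
console.log(chunked.state.calls, chunked.tokens.length)
```

Check the TypeScript typings for the actual parameters before relying on this pattern with the real tokenize function.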

TreeConstructor.Result

    interface Result {
      state: State
      ast: AST
    }

- state
  The current state of the tree constructor. It can be persisted and passed to the next tree constructor call when tokens arrive in chunks.

- ast
  Resulting AST.
  Type: TreeConstructor.AST

Tokenizer.Token

Generic Token. Other interfaces use it to create specific Token types.

    interface Token<T extends TokenTypes.AnyTokenType> {
      type: T
      content: string
      startPosition: number
      endPosition: number
    }

- type
  One of the Token types.

- content
  Piece of original HTML string which was recognized as a token.

- startPosition
  Index of a character in the input HTML string where the token starts.

- endPosition
  Index of a character in the input HTML string where the token ends.
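Because startPosition is a character index into the input string, it can be turned into a line and column, for example when reporting where a token was found. The sketch below is a minimal illustration. indexToLineColumn is a hypothetical helper, and the token is hand-written in the shape of Tokenizer.Token with a placeholder type string.

```javascript
// Hypothetical helper: converts a character index into a 1-based line and column.
function indexToLineColumn (html, index) {
  const before = html.slice(0, index)
  const line = before.split('\n').length
  // lastIndexOf returns -1 on the first line, which yields a 1-based column.
  const column = index - before.lastIndexOf('\n')

  return { line, column }
}

const html = '<html>\n<body>\n</body>\n</html>'

// Hand-written token; the type string is a placeholder and endPosition is not used here.
const token = { type: 'placeholder', content: '<body', startPosition: 7, endPosition: 11 }

console.log(indexToLineColumn(html, token.startPosition))
```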

Tokenizer.TokenTypes.AnyTokenType

Shortcut type for all possible token types.

    type AnyTokenType =
      | Text
      | OpenTagStart
      | AttributeKey
      | AttributeAssigment
      | AttributeValueWrapperStart
      | AttributeValue
      | AttributeValueWrapperEnd
      | OpenTagEnd
      | CloseTag
      | OpenTagStartScript
      | ScriptTagContent
      | OpenTagEndScript
      | CloseTagScript
      | OpenTagStartStyle
      | StyleTagContent
      | OpenTagEndStyle
      | CloseTagStyle
      | DoctypeStart
      | DoctypeEnd
      | DoctypeAttributeWrapperStart
      | DoctypeAttribute
      | DoctypeAttributeWrapperEnd
      | CommentStart
      | CommentContent
      | CommentEnd

Tokenizer.AnyToken

Shortcut to reference any possible token.

type AnyToken = Token<TokenTypes.AnyTokenType>

TreeConstructor.AST

An alias for DocumentNode. The AST always has one top-level DocumentNode. See AST Node Types.

type AST = TreeConstructor.DocumentNode

AST Node Types

There are 7 possible types of Node. Each type has its own content shape.

    type DocumentNode = Node<NodeTypes.Document, NodeContents.Document>
    type DoctypeNode = Node<NodeTypes.Doctype, NodeContents.Doctype>
    type TextNode = Node<NodeTypes.Text, NodeContents.Text>
    type TagNode = Node<NodeTypes.Tag, NodeContents.Tag>
    type CommentNode = Node<NodeTypes.Comment, NodeContents.Comment>
    type ScriptNode = Node<NodeTypes.Script, NodeContents.Script>
    type StyleNode = Node<NodeTypes.Style, NodeContents.Style>

Interfaces for each content type are listed under Node Content Types.

TreeConstructor.Node

Generic Node. Other interfaces use it to create specific Nodes by providing the type of the Node and the type of the content inside it.

    interface Node<T extends NodeTypes.AnyNodeType, C extends NodeContents.AnyNodeContent> {
      nodeType: T
      content: C
    }

TreeConstructor.NodeTypes.AnyNodeType

Shortcut type of all possible Node types.

    type AnyNodeType =
      | Document
      | Doctype
      | Tag
      | Text
      | Comment
      | Script
      | Style

Node Content Types

TreeConstructor.NodeContents.AnyNodeContent

Shortcut type of all possible types of content inside a Node.

    type AnyNodeContent =
      | Document
      | Doctype
      | Text
      | Tag
      | Comment
      | Script
      | Style

TreeConstructor.NodeContents.Document

    interface Document {
      children: AnyNode[]
    }

TreeConstructor.NodeContents.Doctype

    interface Doctype {
      start: Tokenizer.Token<Tokenizer.TokenTypes.DoctypeStart>
      attributes?: DoctypeAttribute[]
      end: Tokenizer.Token<Tokenizer.TokenTypes.DoctypeEnd>
    }

TreeConstructor.NodeContents.Text

    interface Text {
      value: Tokenizer.Token<Tokenizer.TokenTypes.Text>
    }
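To show how a Text node's value token might be read, here is a minimal sketch that concatenates the content of all text nodes in a tree. collectText is a hypothetical helper. The text node type is passed in as a parameter because this sketch does not assume the runtime value of TreeConstructor.NodeTypes.Text, and the sample tree is hand-written with placeholder nodeType strings.

```javascript
// Hypothetical helper: joins the content of every text node found in the tree.
// `textNodeType` is supplied by the caller; its real value is not assumed here.
function collectText (node, textNodeType) {
  if (node.nodeType === textNodeType) {
    // NodeContents.Text holds a single token in `value`.
    return node.content.value.content
  }

  const children = (node.content && node.content.children) || []
  return children.map((child) => collectText(child, textNodeType)).join('')
}

// Hand-written sample; nodeType strings are assumed placeholders.
const sampleTree = {
  nodeType: 'document',
  content: {
    children: [
      {
        nodeType: 'tag',
        content: {
          name: 'button',
          children: [
            { nodeType: 'text', content: { value: { content: "Don't press" } } }
          ]
        }
      }
    ]
  }
}

console.log(collectText(sampleTree, 'text'))
```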

TreeConstructor.NodeContents.Tag

    interface Tag {
      name: string
      selfClosing: boolean
      openStart: Tokenizer.Token<Tokenizer.TokenTypes.OpenTagStart>
      attributes?: TagAttribute[]
      openEnd: Tokenizer.Token<Tokenizer.TokenTypes.OpenTagEnd>
      children?: AnyNode[]
      close?: Tokenizer.Token<Tokenizer.TokenTypes.CloseTag>
    }

TreeConstructor.NodeContents.Comment

    interface Comment {
      start: Tokenizer.Token<Tokenizer.TokenTypes.CommentStart>
      value: Tokenizer.Token<Tokenizer.TokenTypes.CommentContent>
      end: Tokenizer.Token<Tokenizer.TokenTypes.CommentEnd>
    }

TreeConstructor.NodeContents.Script

    interface Script {
      openStart: Tokenizer.Token<Tokenizer.TokenTypes.OpenTagStartScript>
      attributes?: TagAttribute[]
      openEnd: Tokenizer.Token<Tokenizer.TokenTypes.OpenTagEndScript>
      value: Tokenizer.Token<Tokenizer.TokenTypes.ScriptTagContent>
      close: Tokenizer.Token<Tokenizer.TokenTypes.CloseTagScript>
    }

TreeConstructor.NodeContents.Style

    interface Style {
      openStart: Tokenizer.Token<Tokenizer.TokenTypes.OpenTagStartStyle>
      attributes?: TagAttribute[]
      openEnd: Tokenizer.Token<Tokenizer.TokenTypes.OpenTagEndStyle>
      value: Tokenizer.Token<Tokenizer.TokenTypes.StyleTagContent>
      close: Tokenizer.Token<Tokenizer.TokenTypes.CloseTagStyle>
    }

TreeConstructor.DoctypeAttribute

    interface DoctypeAttribute {
      startWrapper?: Tokenizer.Token<Tokenizer.TokenTypes.DoctypeAttributeWrapperStart>
      value: Tokenizer.Token<Tokenizer.TokenTypes.DoctypeAttribute>
      endWrapper?: Tokenizer.Token<Tokenizer.TokenTypes.DoctypeAttributeWrapperEnd>
    }

TreeConstructor.TagAttribute

    interface TagAttribute {
      key?: Tokenizer.Token<Tokenizer.TokenTypes.AttributeKey>
      startWrapper?: Tokenizer.Token<Tokenizer.TokenTypes.AttributeValueWrapperStart>
      value?: Tokenizer.Token<Tokenizer.TokenTypes.AttributeValue>
      endWrapper?: Tokenizer.Token<Tokenizer.TokenTypes.AttributeValueWrapperEnd>
    }
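Since both key and value are optional in TagAttribute, code that reads attributes needs to handle their absence. The sketch below is one possible way to flatten a TagAttribute[] into a plain object. attributesToObject is a hypothetical helper, and the sample data is hand-written with only the content field of each token filled in.

```javascript
// Hypothetical helper: maps attribute keys to values using the tokens' content.
function attributesToObject (attributes = []) {
  const result = {}

  for (const attribute of attributes) {
    // Skip entries without a key, since key is optional in TagAttribute.
    if (!attribute.key) continue

    // An attribute without a value is stored as `true` (a choice made by this sketch).
    result[attribute.key.content] = attribute.value ? attribute.value.content : true
  }

  return result
}

// Hand-written sample shaped like TagAttribute[]; only `content` is filled in.
const sampleAttributes = [
  { key: { content: 'type' }, value: { content: 'text' } },
  { key: { content: 'disabled' } }
]

console.log(attributesToObject(sampleAttributes))
```

The default parameter covers nodes where attributes is missing, which the typings allow for Tag, Script, and Style content.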