Rethinking the Value of Labels for Improving Class-Imbalanced Learning

This repository contains the implementation code for the paper:

Rethinking the Value of Labels for Improving Class-Imbalanced Learning

Yuzhe Yang and Zhi Xu

34th Conference on Neural Information Processing Systems (NeurIPS), 2020

[Website] [arXiv] [Paper] [Slides] [Video]

If you find this code or idea useful, please consider citing our work:

@inproceedings{yang2020rethinking,
  title={Rethinking the Value of Labels for Improving Class-Imbalanced Learning},
  author={Yang, Yuzhe and Xu, Zhi},
  booktitle={Conference on Neural Information Processing Systems (NeurIPS)},
  year={2020}
}

Overview

In this work, we show theoretically and empirically that both semi-supervised learning (using unlabeled data) and self-supervised pre-training (first pre-training the model with self-supervision) can substantially improve performance on imbalanced (long-tailed) datasets, regardless of the degree of imbalance in the labeled/unlabeled data and of the base training technique.

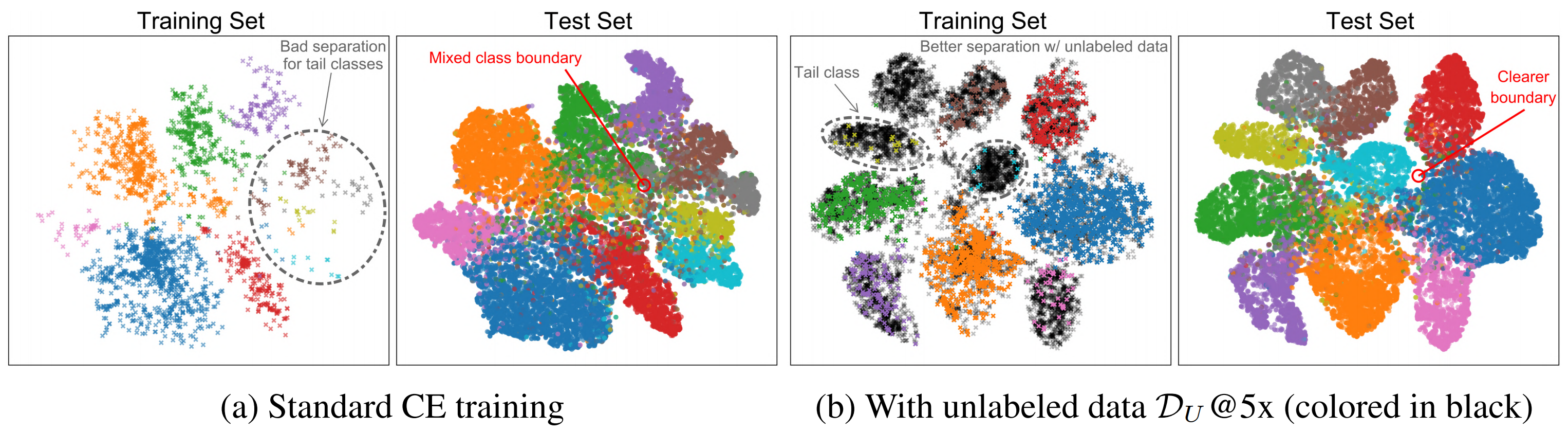

Semi-Supervised Imbalanced Learning:

Using unlabeled data helps to shape clearer class boundaries and results in better class separation, especially for the tail classes.

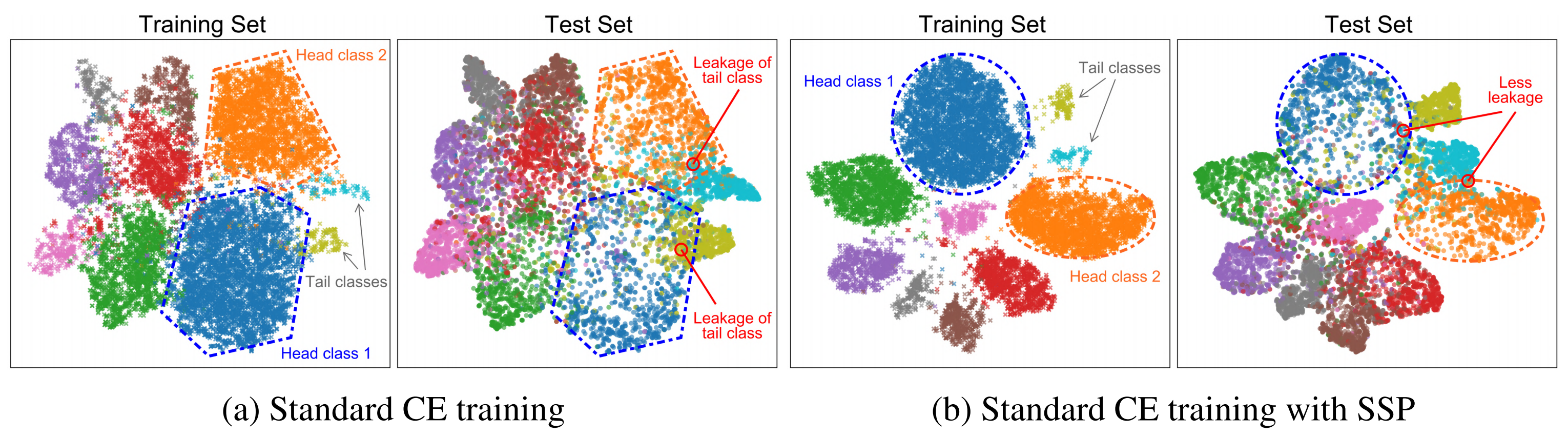

Self-Supervised Imbalanced Learning:

Self-supervised pre-training (SSP) helps mitigate tail-class leakage during testing, which results in better learned boundaries and representations.

Installation

Prerequisites

- Download the CIFAR & SVHN datasets and place them in your `data_path`. The original data will be converted by `imbalance_cifar.py` and `imbalance_svhn.py`.

- Download the ImageNet & iNaturalist 2018 datasets and place them in your `data_path`. The long-tailed versions will be created using the train/val splits (.txt files) in the corresponding subfolders under `imagenet_inat/data/`.

- Change `data_root` in `imagenet_inat/main.py` accordingly for ImageNet-LT & iNaturalist 2018.

Dependencies

- PyTorch (>= 1.2, tested on 1.4)

- yaml

- scikit-learn

- TensorboardX

Code Overview

Main Files

- `train_semi.py`: train model with extra unlabeled data, on CIFAR-LT / SVHN-LT

- `train.py`: train model with (or without) SSP, on CIFAR-LT / SVHN-LT

- `imagenet_inat/main.py`: train model with (or without) SSP, on ImageNet-LT / iNaturalist 2018

- `pretrain_rot.py` & `pretrain_moco.py`: self-supervised pre-training using Rotation prediction or MoCo

Main Arguments

- `--dataset`: name of chosen long-tailed dataset

- `--imb_factor`: imbalance factor (inverse value of imbalance ratio \rho in the paper)

- `--imb_factor_unlabel`: imbalance factor for unlabeled data (inverse value of unlabel imbalance ratio \rho_U)

- `--pretrained_model`: path to self-supervised pre-trained models

- `--resume`: path to resume checkpoint (also for evaluation)
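As a rough illustration of how `--imb_factor` relates to \rho, the sketch below converts an imbalance ratio into the factor and derives per-class sample counts. It assumes an exponential decay profile between the head and tail classes, which is a common way to build long-tailed CIFAR variants. The helper names and the 5000-images-per-class starting point are illustrative assumptions and are not taken from imbalance_cifar.py.

```python
def imb_factor_from_rho(rho):
    """Convert an imbalance ratio rho (head size / tail size) to --imb_factor."""
    return 1.0 / rho


def class_counts(num_classes, max_per_class, imb_factor):
    """Per-class sample counts under an assumed exponential decay profile.

    Class 0 keeps max_per_class samples; the last class keeps roughly
    max_per_class * imb_factor samples.
    """
    if num_classes < 2:
        return [max_per_class] * num_classes
    counts = []
    for cls_idx in range(num_classes):
        frac = cls_idx / (num_classes - 1)
        counts.append(int(max_per_class * imb_factor ** frac))
    return counts


if __name__ == "__main__":
    factor = imb_factor_from_rho(100)  # rho = 100 corresponds to --imb_factor 0.01
    print(factor, class_counts(10, 5000, factor))
```

Under this assumed profile, the ratio between the largest and smallest class is \rho, which is why a larger \rho means a smaller `--imb_factor`.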

Getting Started

Semi-Supervised Imbalanced Learning

Unlabeled data sourcing

CIFAR-10-LT: CIFAR-10 unlabeled data is prepared following this repo using the 80M TinyImages. In short, a data sourcing model is trained to distinguish the CIFAR-10 classes and a "non-CIFAR" class. For each class, images are then ranked by prediction confidence, and unlabeled (imbalanced) datasets are constructed accordingly. Use the following link to download the prepared unlabeled data, and place it in your data_path:

SVHN-LT: Since the SVHN dataset itself contains an extra split with 531.1K additional (labeled) samples, these are used directly to simulate the unlabeled dataset.

Note that the class imbalance in unlabeled data is also considered, which is controlled by --imb_factor_unlabel (\rho_U in the paper). See imbalance_cifar.py and imbalance_svhn.py for details.
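For the CIFAR-10 case, the confidence-based ranking can be sketched as follows. This is a minimal pure-Python illustration, assuming each candidate image already has a probability vector over the CIFAR-10 classes plus one extra "non-CIFAR" class (index 10 by default here). The function name, the data layout, and the per-class budget are hypothetical and are not taken from the actual data sourcing code.

```python
def select_unlabeled(probs, per_class_budget, non_cifar_idx=10):
    """Pick the most confident candidates for each in-distribution class.

    probs: list of probability vectors, one per candidate image.
    per_class_budget: number of images to keep for each class; an
        imbalanced budget yields an imbalanced unlabeled set.
    Returns a dict mapping class index -> list of selected candidate indices.
    """
    by_class = {c: [] for c in range(len(per_class_budget))}
    for idx, p in enumerate(probs):
        pred = max(range(len(p)), key=p.__getitem__)
        if pred == non_cifar_idx:
            continue  # discard images predicted as "non-CIFAR"
        by_class[pred].append((p[pred], idx))

    selected = {}
    for c, scored in by_class.items():
        scored.sort(reverse=True)  # highest confidence first
        selected[c] = [idx for _, idx in scored[:per_class_budget[c]]]
    return selected
```

Passing a budget derived from \rho_U (for example, class counts that decay from head to tail) is one way such a selection could produce an imbalanced unlabeled set.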

Semi-supervised learning with pseudo-labeling

To perform pseudo-labeling (self-training), a base classifier is first trained on the original imbalanced dataset. With the trained base classifier, pseudo-labels can be generated using

python gen_pseudolabels.py --resume <ckpt-path> --data_dir <data_path> --output_dir <output_path> --output_filename <save_name>
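Conceptually, the pseudo-label for each unlabeled image is the class that the base classifier scores highest. The sketch below shows that step on plain Python lists. It is an illustration only; the function names and the input layout are assumptions rather than the interface of gen_pseudolabels.py.

```python
def pseudo_labels(scores):
    """Return the arg-max class index for each row of classifier scores."""
    labels = []
    for row in scores:
        labels.append(max(range(len(row)), key=row.__getitem__))
    return labels


def class_histogram(labels, num_classes):
    """Count pseudo-labels per class to inspect the resulting imbalance."""
    hist = [0] * num_classes
    for y in labels:
        hist[y] += 1
    return hist
```

Inspecting the histogram is a quick way to see how skewed the pseudo-labeled set is before training on it.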

We provide generated pseudo-label files for CIFAR-10-LT & SVHN-LT with \rho=50, using base models trained with the standard cross-entropy (CE) loss:

- Generated pseudo labels for CIFAR-10-LT with \rho=50

- Generated pseudo labels for SVHN-LT with \rho=50

To train with unlabeled data, for example, on CIFAR-10-LT with \rho=50 and \rho_U=50

python train_semi.py --dataset cifar10 --imb_factor 0.02 --imb_factor_unlabel 0.02

Self-Supervised Imbalanced Learning

Self-supervised pre-training (SSP)

To perform Rotation SSP on CIFAR-10-LT with \rho=100

python pretrain_rot.py --dataset cifar10 --imb_factor 0.01
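Rotation prediction is a pretext task in which each image is rotated by 0, 90, 180, or 270 degrees and the network is trained to predict which rotation was applied, so no class labels are needed. A minimal sketch of building such a set of views is shown below, using nested lists as stand-in images. The helper names are hypothetical and this is not the code in pretrain_rot.py.

```python
def rot90(image):
    """Rotate a 2-D image (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def rotation_batch(image):
    """Return the four rotated views and their rotation labels (0..3)."""
    views, labels = [], []
    current = [list(row) for row in image]
    for k in range(4):
        views.append(current)
        labels.append(k)  # label k means a rotation of k * 90 degrees
        current = rot90(current)
    return views, labels
```

The rotation labels serve as the training targets during pre-training, and the learned weights can then initialize the network for the supervised stage.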

To perform MoCo SSP on ImageNet-LT

python pretrain_moco.py --dataset imagenet --data <data_path>

Network training with SSP models

Train on CIFAR-10-LT with \rho=100

python train.py --dataset cifar10 --imb_factor 0.01 --pretrained_model <path_to_ssp_model>

Train on ImageNet-LT / iNaturalist 2018

python -m imagenet_inat.main --cfg <path_to_ssp_config> --model_dir <path_to_ssp_model>

Results and Models

All related data and checkpoints can be found via this link. Individual results and checkpoints are detailed as follows.

Semi-Supervised Imbalanced Learning

CIFAR-10-LT

| Model | Top-1 Error | Download |
|---|---|---|
| CE + D_U@5x (\rho=50 and \rho_U=1) | 16.79 | ResNet-32 |
| CE + D_U@5x (\rho=50 and \rho_U=25) | 16.88 | ResNet-32 |
| CE + D_U@5x (\rho=50 and \rho_U=50) | 18.36 | ResNet-32 |
| CE + D_U@5x (\rho=50 and \rho_U=100) | 19.94 | ResNet-32 |

SVHN-LT

| Model | Top-1 Error | Download |
|---|---|---|
| CE + D_U@5x (\rho=50 and \rho_U=1) | 13.07 | ResNet-32 |
| CE + D_U@5x (\rho=50 and \rho_U=25) | 13.36 | ResNet-32 |
| CE + D_U@5x (\rho=50 and \rho_U=50) | 13.16 | ResNet-32 |
| CE + D_U@5x (\rho=50 and \rho_U=100) | 14.54 | ResNet-32 |

Test a pretrained checkpoint

python train_semi.py --dataset cifar10 --resume <ckpt-path> -e

Self-Supervised Imbalanced Learning

CIFAR-10-LT

- Self-supervised pre-trained models (Rotation)

  | Dataset Setting | \rho=100 | \rho=50 | \rho=10 |
  |---|---|---|---|
  | Download | ResNet-32 | ResNet-32 | ResNet-32 |

- Final models (200 epochs)

  | Model | \rho | Top-1 Error | Download |
  |---|---|---|---|
  | CE(Uniform) + SSP | 10 | 12.28 | ResNet-32 |
  | CE(Uniform) + SSP | 50 | 21.80 | ResNet-32 |
  | CE(Uniform) + SSP | 100 | 26.50 | ResNet-32 |
  | CE(Balanced) + SSP | 10 | 11.57 | ResNet-32 |
  | CE(Balanced) + SSP | 50 | 19.60 | ResNet-32 |
  | CE(Balanced) + SSP | 100 | 23.47 | ResNet-32 |

CIFAR-100-LT

- Self-supervised pre-trained models (Rotation)

  | Dataset Setting | \rho=100 | \rho=50 | \rho=10 |
  |---|---|---|---|
  | Download | ResNet-32 | ResNet-32 | ResNet-32 |

- Final models (200 epochs)

  | Model | \rho | Top-1 Error | Download |
  |---|---|---|---|
  | CE(Uniform) + SSP | 10 | 42.93 | ResNet-32 |
  | CE(Uniform) + SSP | 50 | 54.96 | ResNet-32 |
  | CE(Uniform) + SSP | 100 | 59.60 | ResNet-32 |
  | CE(Balanced) + SSP | 10 | 41.94 | ResNet-32 |
  | CE(Balanced) + SSP | 50 | 52.91 | ResNet-32 |
  | CE(Balanced) + SSP | 100 | 56.94 | ResNet-32 |

ImageNet-LT

- Self-supervised pre-trained models (MoCo)

  [ResNet-50]

- Final models (90 epochs)

  | Model | Top-1 Error | Download |
  |---|---|---|
  | CE(Uniform) + SSP | 54.4 | ResNet-50 |
  | CE(Balanced) + SSP | 52.4 | ResNet-50 |
  | cRT + SSP | 48.7 | ResNet-50 |

iNaturalist 2018

- Self-supervised pre-trained models (MoCo)

  [ResNet-50]

- Final models (90 epochs)

  | Model | Top-1 Error | Download |
  |---|---|---|
  | CE(Uniform) + SSP | 35.6 | ResNet-50 |
  | CE(Balanced) + SSP | 34.1 | ResNet-50 |
  | cRT + SSP | 31.9 | ResNet-50 |

Test a pretrained checkpoint

# test on CIFAR-10 / CIFAR-100

python train.py --dataset cifar10 --resume <ckpt-path> -e

# test on ImageNet-LT / iNaturalist 2018

python -m imagenet_inat.main --cfg <path_to_ssp_config> --model_dir <path_to_model> --test

Acknowledgements

This code is partly based on the open-source implementations from the following sources: OpenLongTailRecognition, classifier-balancing, LDAM-DRW, MoCo, and semisup-adv.

Contact

If you have any questions, feel free to contact us by email or through GitHub issues. Enjoy!