zhouhaoyi / Informer2020

Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting (AAAI'21 Best Paper)

This is the original PyTorch implementation of Informer from the following paper:

Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting. Special thanks to Jieqi Peng@cookieminions for building this repo.

🚩 News (Feb 22, 2021): We provide Colab examples for easy use.

🚩 News (Feb 8, 2021): Our Informer paper has received the AAAI'21 Best Paper Award! We will continue this line of research and keep updating this repo. Please star this repo and cite our paper if you find our work helpful.

Figure 1. The architecture of Informer.

ProbSparse Attention

The self-attention scores form a long-tail distribution: the "active" queries produce the few dominant "head" scores, while the "lazy" queries lie in the "tail" area. We designed ProbSparse Attention to select the "active" queries rather than the "lazy" ones. It keeps only the Top-u queries, ranked by a measurement derived from each query's attention probability distribution, and in this way forms a sparse Transformer.
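Below is a minimal NumPy sketch of the selection step. It was written for this README and is not taken from the repo. It ranks queries with a max-mean approximation of query sparsity, which is the largest scaled dot-product score minus the mean score over a sample of keys. It then runs full softmax attention for the Top-u queries. Each lazy query gets the mean of V, which is what a uniform attention distribution would produce.

Several details are assumptions made for illustration:
- the function name `probsparse_attention`;
- the sampling factor `c`;
- a single key sample shared by all queries.

The sketch has no masking, batching, or multi-head logic. The repo's own implementation may differ in all of these details.

```python
import numpy as np


def probsparse_attention(Q, K, V, c=5, rng=None):
    """Illustrative single-head ProbSparse self-attention (no masking).

    Q: (L_Q, d), K: (L_K, d), V: (L_K, d_v). `c` is a hypothetical sampling factor.
    Returns the (L_Q, d_v) output and the indices of the selected "active" queries.
    """
    rng = np.random.default_rng(0) if rng is None else rng
    L_Q, d = Q.shape
    L_K = K.shape[0]

    # Number of active queries: u = ceil(c * ln L_Q), capped at L_Q.
    u = min(L_Q, int(np.ceil(c * np.log(L_Q))))

    # Estimate each query's sparsity on a random subset of keys only
    # (assumption: one subset shared by all queries, for simplicity).
    n_sample = min(L_K, int(np.ceil(c * np.log(L_K))))
    idx = rng.choice(L_K, size=n_sample, replace=False)
    sampled = Q @ K[idx].T / np.sqrt(d)  # (L_Q, n_sample)

    # Max-mean measurement: near-uniform ("lazy") queries score close to zero.
    M = sampled.max(axis=1) - sampled.mean(axis=1)
    top = np.argsort(-M)[:u]

    # Lazy queries fall back to the mean of V (the uniform-attention output).
    out = np.tile(V.mean(axis=0), (L_Q, 1))

    # Active queries get full, numerically stable softmax attention over all keys.
    s = Q[top] @ K.T / np.sqrt(d)
    s = s - s.max(axis=1, keepdims=True)
    w = np.exp(s)
    w /= w.sum(axis=1, keepdims=True)
    out[top] = w @ V
    return out, top
```

With a length-96 input and c=5, only about 23 of the 96 query rows run full attention. This is the source of the savings over dense self-attention.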

Why not use Top-u keys? The output of a self-attention layer is a re-representation of its input. It is formulated as a weighted combination of the values, with weights given by the dot-product scores. Keeping the top queries with the full set of keys yields a complete re-representation of the leading components in the input. This is equivalent to selecting the "head" scores among all dot-product pairs. If we chose Top-u keys instead, the attention would only preserve the trivial sum of values within the "long-tail" scores and would wreck the re-representation of the leading components.
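Standard attention notation makes this argument concrete. Here $k(q_i, k_j) = \exp(q_i k_j^\top / \sqrt{d})$, and the $i$-th output row is a probability-weighted combination of the values:

$$
\mathcal{A}(q_i, K, V) = \sum_{j=1}^{L_K} \frac{k(q_i, k_j)}{\sum_{l=1}^{L_K} k(q_i, k_l)}\, v_j = \mathbb{E}_{p(k_j \mid q_i)}\left[v_j\right]
$$

Selecting query $q_i$ keeps its whole row of weights $p(k_j \mid q_i)$, so its dominant "head" terms survive. A lazy query has a row close to uniform, $p(k_j \mid q_i) \approx 1/L_K$. Its output is then close to $\frac{1}{L_K}\sum_{j=1}^{L_K} v_j$, the trivial mean of the values, so dropping it loses little.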

Figure 2. An illustration of ProbSparse Attention.

Requirements

- Python 3.6

- matplotlib == 3.1.1

- numpy == 1.19.4

- pandas == 0.25.1

- scikit_learn == 0.21.3

- torch == 1.4.0

Dependencies can be installed using the following command:

pip install -r requirements.txt

Data

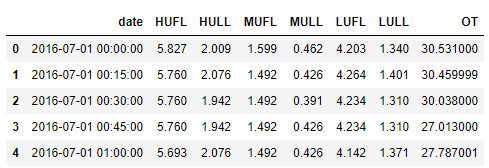

The ETT dataset used in the paper can be downloaded from the ETDataset repo.

Put the required data files in the data/ETT/ folder. Figure 3 shows a demo slice of the ETT data. Note that in this implementation the input of each dataset is zero-mean normalized.
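The sketch below illustrates zero-mean normalization as per-column standardization. The class name `ZeroMeanScaler` is hypothetical. Fitting the statistics on the training portion only is an assumption made here to avoid leaking test-set statistics. The class mirrors the `fit`/`transform`/`inverse_transform` interface of scikit-learn's `StandardScaler`, which is in the requirements list. The repo's own data loader may compute the statistics differently.

```python
import numpy as np


class ZeroMeanScaler:
    """Hypothetical helper: per-column zero-mean, unit-variance scaling."""

    def fit(self, x):
        # x: (n_timesteps, n_features); statistics are computed per column.
        self.mean = x.mean(axis=0)
        self.std = x.std(axis=0)
        self.std[self.std == 0] = 1.0  # avoid division by zero on constant columns
        return self

    def transform(self, x):
        return (x - self.mean) / self.std

    def inverse_transform(self, x):
        # Maps model outputs back to the original scale of the data.
        return x * self.std + self.mean
```

Predictions made in the normalized space can be mapped back with `inverse_transform` before plotting them against the raw series.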

Figure 3. An example of the ETT data.

Usage

Colab Examples: We provide Google Colab notebooks to help you reproduce and customize our repo. They cover experiments (training and testing), prediction, visualization, and custom data.

Commands for training and testing the model with ProbSparse self-attention on the ETTh1, ETTh2, and ETTm1 datasets, respectively:

# ETTh1

python -u main_informer.py --model informer --data ETTh1 --attn prob --freq h

# ETTh2

python -u main_informer.py --model informer --data ETTh2 --attn prob --freq h

# ETTm1

python -u main_informer.py --model informer --data ETTm1 --attn prob --freq t

For more information on the parameters, please refer to main_informer.py.
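The three commands above differ only in --data and --freq. --freq is h for the hourly ETTh datasets and, presumably, t (minute-level) for ETTm1. The small launcher below makes that explicit. The names `RUNS`, `build_command`, and `run_all` are hypothetical, and the launcher uses only the flags already shown in this README. By default it only prints the commands; pass `dry_run=False` to actually execute main_informer.py from the repo root.

```python
import shlex
import subprocess
import sys

# Hypothetical mapping from dataset name to the --freq value used above.
RUNS = {"ETTh1": "h", "ETTh2": "h", "ETTm1": "t"}


def build_command(data, freq):
    # Same flags as the README commands; only --data and --freq vary.
    return [sys.executable, "-u", "main_informer.py",
            "--model", "informer", "--data", data,
            "--attn", "prob", "--freq", freq]


def run_all(dry_run=True):
    cmds = [build_command(data, freq) for data, freq in RUNS.items()]
    for cmd in cmds:
        # shlex.quote keeps this compatible with Python 3.6 (no shlex.join).
        print(" ".join(shlex.quote(part) for part in cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
    return cmds
```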

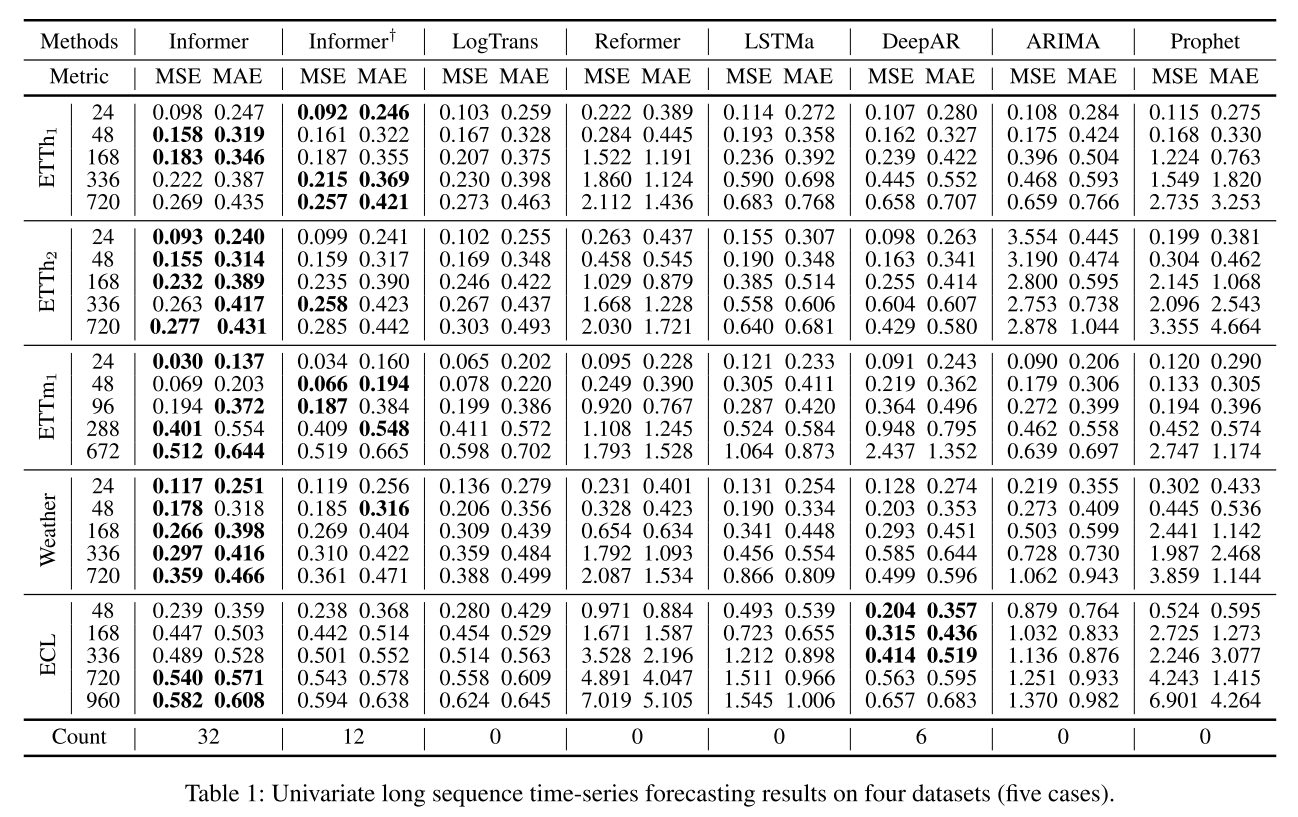

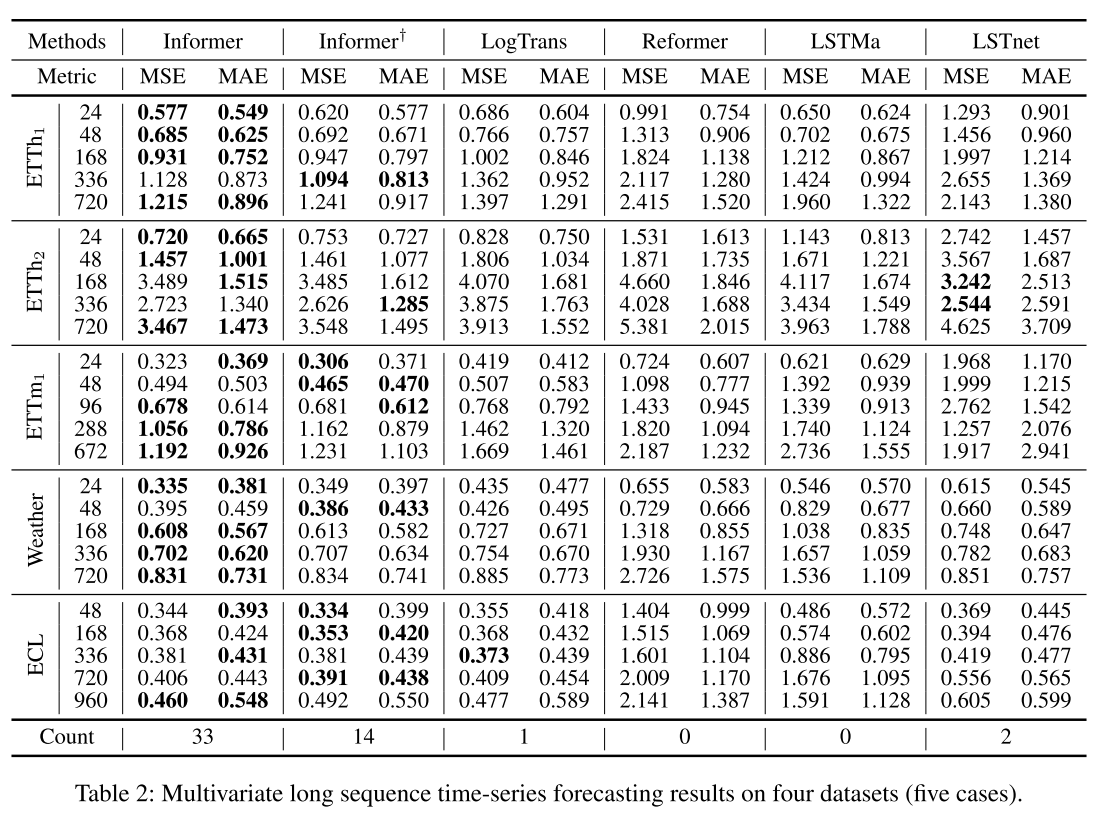

Results

Figure 4. Univariate forecasting results.

Figure 5. Multivariate forecasting results.

FAQ

If you run into an error such as RuntimeError: The size of tensor a (98) must match the size of tensor b (96) at non-singleton dimension 1, check your torch version. Alternatively, modify the Conv1d in TokenEmbedding in models/embed.py, because the behavior of the circular padding mode in Conv1d changed across torch versions.
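The mismatch follows from the output-length arithmetic of a stride-1 convolution: L_out = L + 2p - k + 1. With an assumed kernel size k = 3 and a sequence length of 96, padding p = 1 per side keeps the length at 96. Padding p = 2 per side gives 98, which matches the error message.

One version-independent option is to apply circular padding explicitly and then run the convolution with no padding. The NumPy sketch below shows this, and the function name `circular_conv1d` is hypothetical. In PyTorch the analogous pattern would be torch.nn.functional.pad with mode='circular' followed by a Conv1d with padding=0. Check that this works with your installed torch version before relying on it.

```python
import numpy as np


def circular_conv1d(x, w, pad):
    """Hypothetical helper: circular padding, then a 'valid' 1D cross-correlation.

    x: (C_in, L) input, w: (C_out, C_in, k) kernel, pad: positions added per side.
    Computes the same sliding-window product that Conv1d does (stride 1, no bias).
    """
    # mode="wrap" pads the start with the tail of the sequence and vice versa.
    xp = np.pad(x, ((0, 0), (pad, pad)), mode="wrap")
    c_out, c_in, k = w.shape
    L_out = xp.shape[1] - k + 1  # equals L + 2 * pad - k + 1
    out = np.empty((c_out, L_out))
    for t in range(L_out):
        # Sum over input channels and kernel taps for every output channel.
        out[:, t] = np.tensordot(w, xp[:, t:t + k], axes=([1, 2], [0, 1]))
    return out
```

For example, an input of shape (7, 96) with a (16, 7, 3) kernel yields a length-96 output with pad=1 and a length-98 output with pad=2.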

Citation

If you find this repository useful in your research, please consider citing the following paper:

@inproceedings{haoyietal-informer-2021,
  author    = {Haoyi Zhou and
               Shanghang Zhang and
               Jieqi Peng and
               Shuai Zhang and
               Jianxin Li and
               Hui Xiong and
               Wancai Zhang},
  title     = {Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting},
  booktitle = {The Thirty-Fifth {AAAI} Conference on Artificial Intelligence, {AAAI} 2021},
  pages     = {online},
  publisher = {{AAAI} Press},
  year      = {2021},
}

Contact

If you have any questions, feel free to contact Haoyi Zhou by email or through GitHub issues. Pull requests are very welcome!

Acknowledgements

We thank the Beijing Advanced Innovation Center for Big Data and Brain Computing (BDBC) for providing the computing infrastructure.

Finally, thank you all for your interest in this work!