
ipython-spark-docker

This repo provides Docker containers to run:

- Spark master and worker(s) for running Spark in standalone mode on dedicated hosts

- Mesos-enhanced containers for Mesos-mastered Spark jobs

- IPython web interface for interacting with Spark or Mesos master via PySpark

Please see the accompanying blog posts for the technical details and motivation behind this project:

- Using Docker to Build an IPython-driven Spark Deployment

- Triplewide Trailer, Part 1: Looking to Hitch IPython, Spark, and Mesos to Docker

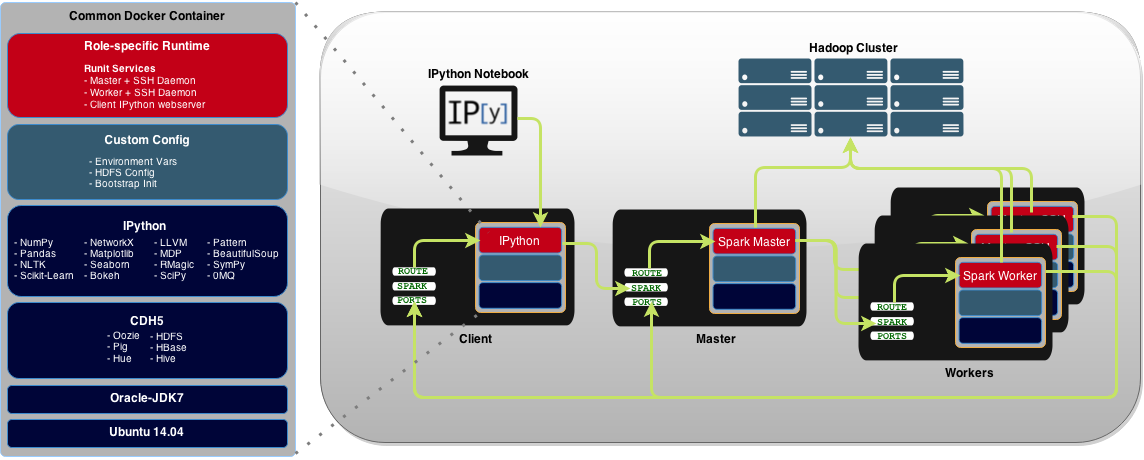

Architecture

Docker containers provide a portable and repeatable method for deploying the cluster:

CDH5 Tools and Libraries

| HDFS | HBase | Hive | Oozie | Pig | Hue |
|---|---|---|---|---|---|

Python Packages and Modules

| Pattern | NLTK | Pandas | NumPy | SciPy | SymPy | Seaborn |
|---|---|---|---|---|---|---|
| Cython | Numba | Biopython | Rmagic | 0MQ | Matplotlib | Scikit-Learn |
| Statsmodels | Beautiful Soup | NetworkX | LLVM | Bokeh | Vincent | MDP |

Usage

Option 1. Mesos-mastered Spark Jobs

- Install Mesos with Docker Containerizer and Docker Images: Install a Mesos cluster configured to use the Docker containerizer, which enables the Mesos slaves to execute Spark tasks within a Docker container.

A. End-to-end Installation: The script `mesos/1-setup-mesos-cluster.sh` uses the Python library Fabric to install and configure a cluster according to How To Configure a Production-Ready Mesosphere Cluster on Ubuntu 14.04. After installation, it also pulls the Docker images that will execute Spark tasks. To use it:

- Update the IP addresses of the Mesos nodes in `mesos/fabfile.py`. Find the instances to change with:

```shell
grep 'ip-address' mesos/fabfile.py
```

- Install and configure the cluster:

```shell
./mesos/1-setup-mesos-cluster.sh
```

Optional: run `./1-build.sh` if you prefer to build the Docker images from scratch instead of having the script pull them from Docker Hub.

B. Manual Installation: Follow the general steps in `mesos/1-setup-mesos-cluster.sh` to install manually:

- Install Mesosphere on masters

- Install Mesos on slaves

- Configure ZooKeeper on all nodes

- Configure and start masters

- Configure and start slaves

- Load Docker images:

```shell
docker pull lab41/spark-mesos-dockerworker-ipython
docker pull lab41/spark-mesos-mesosworker-ipython
```
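On a cluster with several slaves, the image-loading step can be looped over hosts. The following is a minimal sketch, not part of the repo: it assumes passwordless SSH from your workstation to each slave and that the remote user is allowed to run `docker`. `pull_images_on_hosts` is a hypothetical helper, and the hostnames in the example are placeholders.

```shell
#!/bin/sh
# Sketch: pull the Mesos worker images on each slave over SSH.
# Assumptions: passwordless SSH to every host; the remote user may run docker.
MESOS_IMAGES="lab41/spark-mesos-dockerworker-ipython lab41/spark-mesos-mesosworker-ipython"

pull_images_on_hosts() {
  if [ "$#" -eq 0 ]; then
    echo "usage: pull_images_on_hosts HOST..." >&2
    return 2
  fi
  rc=0
  for host in "$@"; do
    for image in $MESOS_IMAGES; do
      echo "[$host] docker pull $image"
      # -n keeps ssh from reading the loop's stdin
      if ! ssh -n "$host" docker pull "$image"; then
        echo "[$host] failed to pull $image" >&2
        rc=1
      fi
    done
  done
  return "$rc"
}

# Example (hypothetical hostnames):
# pull_images_on_hosts mesos-slave-1.example.com mesos-slave-2.example.com
```

Failures are reported per host and the loop keeps going, so one unreachable slave does not stop the others from being updated; the function still returns non-zero at the end.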

- Run the client container on a client host (replace `username-for-sparkjobs` and `mesos-master-fqdn` below):

*Note: the client container creates `username-for-sparkjobs` when started, which lets you submit Spark jobs as a specific user and/or deploy different IPython servers for different users.*

```shell
./5-run-spark-mesos-dockerworker-ipython.sh username-for-sparkjobs mesos://mesos-master-fqdn:5050
```
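The client script takes a username and a `mesos://HOST:PORT` master URL. A small pre-flight check can catch typos before the container starts. This is a hypothetical helper, not part of the repo; the username rule it enforces (lowercase letters, digits, `_` and `-`, starting with a letter or `_`, at most 32 characters) is an assumption modeled on common Linux account-name conventions, and the URL check only accepts the `mesos://HOST:PORT` form used above.

```shell
#!/bin/sh
# Hypothetical pre-flight check for ./5-run-spark-mesos-dockerworker-ipython.sh arguments.

valid_username() {
  # Assumed rule: starts with a lowercase letter or underscore,
  # followed by up to 31 lowercase letters, digits, underscores or hyphens.
  printf '%s\n' "$1" | grep -Eq '^[a-z_][a-z0-9_-]{0,31}$'
}

valid_mesos_url() {
  # Accepts only mesos://HOST:PORT, e.g. mesos://mesos-master-fqdn:5050
  printf '%s\n' "$1" | grep -Eq '^mesos://[A-Za-z0-9.-]+:[0-9]+$'
}

check_client_args() {
  if [ "$#" -ne 2 ]; then
    echo "usage: check_client_args USERNAME mesos://HOST:PORT" >&2
    return 2
  fi
  valid_username "$1" || { echo "invalid username: $1" >&2; return 1; }
  valid_mesos_url "$2" || { echo "invalid Mesos master URL: $2" >&2; return 1; }
}

# Example:
# check_client_args alice mesos://mesos-master-fqdn:5050 &&
#   ./5-run-spark-mesos-dockerworker-ipython.sh alice mesos://mesos-master-fqdn:5050
```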

Option 2. Spark Standalone Mode

Installation and Deployment: build each Docker image and run each one on a separate dedicated host.

Prerequisites

- Deploy a Hadoop/HDFS cluster. Spark uses a cluster to distribute analysis of data pulled from multiple sources, including the Hadoop Distributed File System (HDFS). The ephemeral nature of Docker containers makes them ill-suited for persisting long-term data in a cluster. Instead of attempting to store data within the Docker containers' HDFS nodes or mounting host volumes, it is recommended that you point this cluster at an external Hadoop deployment. Cloudera provides complete resources for installing and configuring its distribution (CDH) of Hadoop. This repo has been tested using CDH5.

Build and configure hosts

- Install Docker v1.5+, jq JSON processor, and iptables. For example, on an Ubuntu host:

```shell
./0-prepare-host.sh
```
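To confirm that a host meets these requirements, a check like the following may help. It is a minimal sketch, not part of the repo: `missing_tools`, `version_ge` and `check_host` are hypothetical helpers, and reading the version with `docker version --format '{{.Server.Version}}'` assumes a Docker client recent enough to support `--format` on `docker version`. Note that `iptables` usually lives in an `sbin` directory that may not be on a non-root user's `PATH`.

```shell
#!/bin/sh
# Sketch: verify host prerequisites (Docker v1.5+, jq, iptables).

# Print each command name from the arguments that is not found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Succeed if dotted version $1 >= $2, comparing numeric fields left to right;
# missing fields count as 0, and suffixes such as "-ce" are ignored by awk's number conversion.
version_ge() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    na = split(a, x, "."); nb = split(b, y, ".")
    n = (na > nb) ? na : nb
    for (i = 1; i <= n; i++) {
      xi = (i <= na) ? x[i] + 0 : 0
      yi = (i <= nb) ? y[i] + 0 : 0
      if (xi > yi) exit 0
      if (xi < yi) exit 1
    }
    exit 0
  }'
}

check_host() {
  missing=$(missing_tools docker jq iptables)
  if [ -n "$missing" ]; then
    echo "missing tools:" $missing >&2
    return 1
  fi
  # Assumes `docker version` supports --format on this host.
  ver=$(docker version --format '{{.Server.Version}}' 2>/dev/null) || ver=""
  if [ -z "$ver" ] || ! version_ge "$ver" 1.5; then
    echo "need Docker v1.5+, found: ${ver:-unknown}" >&2
    return 1
  fi
  echo "host prerequisites OK (Docker $ver)"
}
```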

- Update the Hadoop configuration files in `runtime/cdh5/<hadoop|hive>/<multiple-files>` with the correct hostnames for your Hadoop cluster. Use `grep FIXME -R .` to find hostnames to change.
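After editing, it can help to confirm that no placeholders remain before building images. The hypothetical helper below wraps the same kind of `grep` search the instructions use (`grep FIXME -R .` here, `grep 'ip-address' mesos/fabfile.py` for the Mesos fabfile) and fails if any match is left. It assumes the marker text disappears once a real hostname or address has been filled in.

```shell
#!/bin/sh
# Hypothetical helper: fail if a placeholder pattern is still present.
# Usage: check_placeholders PATTERN PATH...
check_placeholders() {
  if [ "$#" -lt 2 ]; then
    echo "usage: check_placeholders PATTERN PATH..." >&2
    return 2
  fi
  pattern=$1
  shift
  # -R recurses into directories; -n prints the line number of each remaining match.
  rc=0
  grep -Rn -- "$pattern" "$@" || rc=$?
  case $rc in
    0) echo "placeholders matching '$pattern' remain (see above)" >&2; return 1 ;;
    1) echo "no '$pattern' placeholders found"; return 0 ;;
    *) echo "grep failed with status $rc" >&2; return 2 ;;
  esac
}

# Examples:
# check_placeholders FIXME runtime/cdh5
# check_placeholders 'ip-address' mesos/fabfile.py
```

Distinguishing grep's status 1 (no match) from status 2 (error, such as a mistyped path) keeps a missing directory from being reported as "clean".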

- Generate a new SSH keypair (`dockerfiles/base/lab41/spark-base/config/ssh/id_rsa` and `dockerfiles/base/lab41/spark-base/config/ssh/id_rsa.pub`), adding the public key to `dockerfiles/base/lab41/spark-base/config/ssh/authorized_keys`.
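One way to do this is with OpenSSH's `ssh-keygen`. The sketch below is an example under stated assumptions, not the repo's own procedure: it deletes the existing `id_rsa`/`id_rsa.pub` so `ssh-keygen` does not stop to ask about overwriting, creates a 4096-bit RSA key with an empty passphrase (assumed so the containers can use it non-interactively), and overwrites `authorized_keys` so that only the new key is trusted. `regenerate_ssh_keys` is a hypothetical helper; use `cat ... >>` instead of `cp` if you need to keep other entries in `authorized_keys`.

```shell
#!/bin/sh
# Sketch: regenerate the SSH keypair baked into the spark-base image.
# Assumptions: OpenSSH ssh-keygen is installed; an empty passphrase is acceptable;
# authorized_keys should contain only the new public key.
SSH_DIR=${SSH_DIR:-dockerfiles/base/lab41/spark-base/config/ssh}

regenerate_ssh_keys() {
  dir=$1
  [ -d "$dir" ] || { echo "no such directory: $dir" >&2; return 1; }
  # Remove the old pair so ssh-keygen does not prompt before overwriting.
  rm -f "$dir/id_rsa" "$dir/id_rsa.pub"
  ssh-keygen -q -t rsa -b 4096 -N '' -f "$dir/id_rsa" || return 1
  # Replace (rather than append) so the previously shipped key is no longer trusted.
  cp "$dir/id_rsa.pub" "$dir/authorized_keys"
  chmod 600 "$dir/id_rsa" "$dir/authorized_keys"
}

# Example, run from the repository root:
# regenerate_ssh_keys "$SSH_DIR"
```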

- (optional) Update the `SPARK_WORKER_CONFIG` environment variable for Spark-specific options such as executor cores. Update the variable via a shell `export` command or by updating `dockerfiles/standalone/lab41/spark-client-ipython/config/service/ipython/run`.

- (optional) Comment out any unwanted Python packages in the base image's Dockerfile, `dockerfiles/base/lab41/python-datatools/Dockerfile`.
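To drop a package, you can search the Dockerfile for its name and prefix the matching line with `#`. The helpers below are a hypothetical sketch: they assume each package you want to remove sits on its own line, and they do not parse Dockerfile syntax, so review the result by hand. Be especially careful inside a multi-line `RUN` instruction continued with backslashes, where commenting out the final line of the chain can leave a dangling continuation.

```shell
#!/bin/sh
# Hypothetical helpers for trimming packages from a Dockerfile.
DOCKERFILE=${DOCKERFILE:-dockerfiles/base/lab41/python-datatools/Dockerfile}

# Print "LINE:TEXT" for uncommented lines mentioning a package (case-insensitive, whole word).
find_package_lines() {
  pkg=$1
  file=$2
  grep -n -i -w -- "$pkg" "$file" | grep -v '^[0-9]*:[[:space:]]*#'
}

# Prefix line number $2 of file $1 with "# ", rewriting in place to keep file permissions.
comment_out_line() {
  file=$1
  line=$2
  case $line in
    ''|*[!0-9]*) echo "line must be a number: $line" >&2; return 2 ;;
  esac
  tmp=$(mktemp) || return 1
  if sed "${line}s/^/# /" "$file" > "$tmp"; then
    cat "$tmp" > "$file"
  else
    rm -f "$tmp"
    return 1
  fi
  rm -f "$tmp"
}

# Example:
# find_package_lines seaborn "$DOCKERFILE"
# comment_out_line "$DOCKERFILE" <line-number-from-above>
```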

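- Get Docker images, either by pulling them from Docker Hub:

```shell
docker pull lab41/spark-master
docker pull lab41/spark-worker
docker pull lab41/spark-client-ipython
```

or by building them from scratch:

```shell
./1-build.sh
```

Deploy cluster nodes

Run each script on its own dedicated host, replacing `spark-master-fqdn` with the fully qualified hostname of your Spark master:

```shell
# On the Spark master host
./2-run-spark-master.sh

# On each Spark worker host
./3-run-spark-worker.sh spark://spark-master-fqdn:7077

# On the IPython/PySpark client host
./4-run-spark-client-ipython.sh spark://spark-master-fqdn:7077
```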
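Because the workers and the client both need the master's URL, a small dry-run helper can keep the three commands consistent. This is a hypothetical convenience, not part of the repo: `spark_master_url` builds `spark://HOST:PORT` (defaulting to port 7077, as in the commands above) and `deployment_plan` prints which command to run on each role's host. It only prints; it does not connect to any host.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print the per-host commands for a standalone deployment.

spark_master_url() {
  host=$1
  port=${2:-7077}
  if [ -z "$host" ]; then
    echo "usage: spark_master_url HOST [PORT]" >&2
    return 2
  fi
  case $port in
    ''|*[!0-9]*) echo "port must be numeric: $port" >&2; return 2 ;;
  esac
  printf 'spark://%s:%s\n' "$host" "$port"
}

deployment_plan() {
  url=$(spark_master_url "$@") || return
  printf '%s\n' \
    "master:  ./2-run-spark-master.sh" \
    "workers: ./3-run-spark-worker.sh $url" \
    "client:  ./4-run-spark-client-ipython.sh $url"
}

# Example:
# deployment_plan spark-master-fqdn
```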