xwhan / Knowledge Aware Reader

PyTorch implementation of the ACL 2019 paper "Improving Question Answering over Incomplete KBs with Knowledge-Aware Reader"

Code for the ACL 2019 paper:

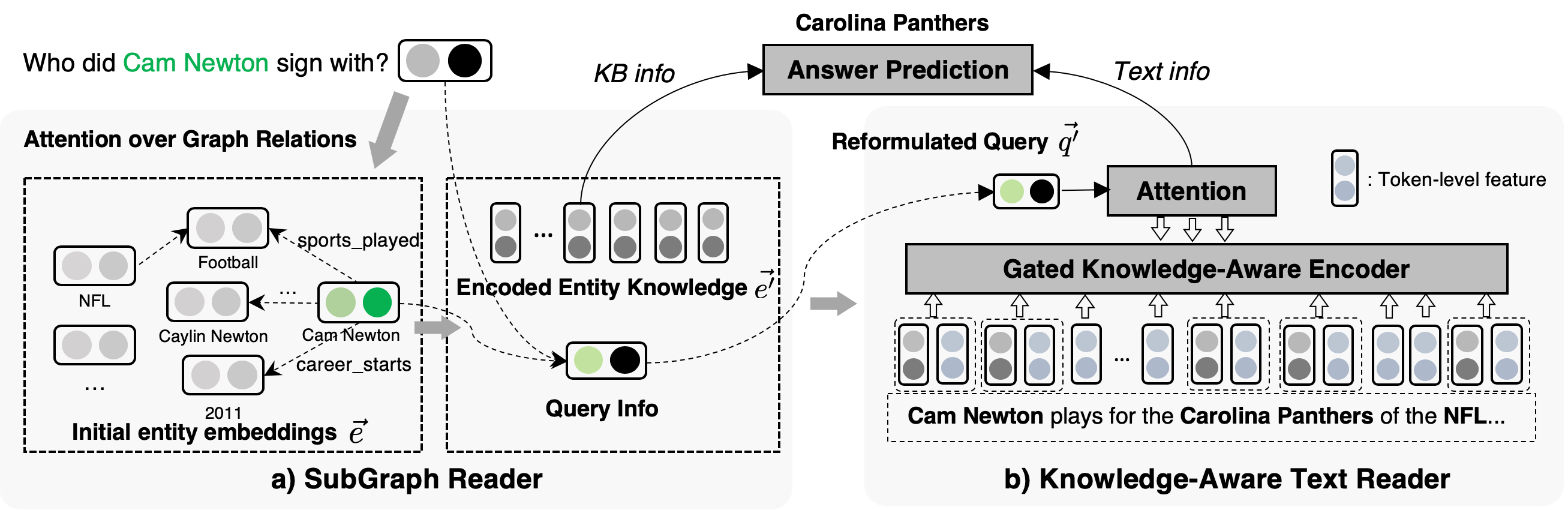

Improving Question Answering over Incomplete KBs with Knowledge-Aware Reader

Paper link: https://arxiv.org/abs/1905.07098

Model Overview:

Requirements

- PyTorch 1.0.1
- tensorboardX
- tqdm
- gluonnlp
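Before training, it can help to confirm that the packages listed above are importable. The following is a minimal sketch, not part of the repository: the helper name `find_missing` is hypothetical, and the import names are assumptions based on the requirements list (PyTorch is imported as `torch`). It does not check versions.

```python
import importlib.util

# Import names assumed from the requirements list; PyTorch is imported as `torch`.
REQUIRED_MODULES = ["torch", "tensorboardX", "tqdm", "gluonnlp"]


def find_missing(modules):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages are importable.")
```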

Prepare data

mkdir datasets && cd datasets && wget https://sites.cs.ucsb.edu/~xwhan/datasets/webqsp.tar.gz && tar -xzvf webqsp.tar.gz && cd ..
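On systems without `wget` or `tar`, the same preparation step can be approximated in Python. This is a rough sketch under the assumption that the archive URL above is still reachable; the function names `extract_archive` and `prepare_data` are illustrative and do not exist in the repository.

```python
import tarfile
import urllib.request
from pathlib import Path

# URL taken from the shell command above.
DATA_URL = "https://sites.cs.ucsb.edu/~xwhan/datasets/webqsp.tar.gz"


def extract_archive(archive_path, target_dir):
    """Unpack a .tar.gz archive into target_dir and return that directory."""
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as tar:
        tar.extractall(target)
    return target


def prepare_data(url=DATA_URL, target_dir="datasets"):
    """Download the archive into target_dir (if absent) and unpack it there."""
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    archive_path = target / "webqsp.tar.gz"
    if not archive_path.exists():
        urllib.request.urlretrieve(url, archive_path)
    return extract_archive(archive_path, target)
```

Calling `prepare_data()` from the repository root should leave the data under `datasets/webqsp/`, the location the training commands expect.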

Full KB setting

CUDA_VISIBLE_DEVICES=0 python train.py --model_id KAReader_full_kb --max_num_neighbors 50 --label_smooth 0.1 --data_folder datasets/webqsp/full/

Incomplete KB setting

Note: Hits@1 should match or slightly exceed the number reported in the paper. Further tuning of the threshold should yield a better F1 score.

30% KB

CUDA_VISIBLE_DEVICES=0 python train.py --model_id KAReader_kb_03 --max_num_neighbors 50 --use_doc --data_folder datasets/webqsp/kb_03/ --eps 0.05

10% KB

CUDA_VISIBLE_DEVICES=0 python train.py --model_id KAReader_kb_01 --max_num_neighbors 50 --use_doc --data_folder datasets/webqsp/kb_01/ --eps 0.05

50% KB

CUDA_VISIBLE_DEVICES=0 python train.py --model_id KAReader_kb_05 --num_layer 1 --max_num_neighbors 100 --use_doc --data_folder datasets/webqsp/kb_05/ --eps 0.05 --seed 3 --hidden_drop 0.05
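The note on Hits@1 and F1 above can be made concrete with a small sketch of how the two metrics relate to a score threshold. This is an illustration under stated assumptions, not the repository's evaluation code: it assumes each question comes with a dictionary of candidate-entity scores and a set of gold answers, and the function names are hypothetical. Hits@1 depends only on the top-scoring candidate, while F1 depends on which candidates clear the threshold, which is why tuning the threshold affects F1 but not Hits@1.

```python
def hits_at_1(scores, gold):
    """Return 1.0 if the highest-scoring candidate is a gold answer, else 0.0."""
    if not scores:
        return 0.0
    best = max(scores, key=scores.get)
    return 1.0 if best in gold else 0.0


def f1_at_threshold(scores, gold, threshold):
    """F1 of the candidates whose score is strictly above the threshold."""
    predicted = {cand for cand, score in scores.items() if score > threshold}
    correct = len(predicted & gold)
    if correct == 0:
        return 0.0
    precision = correct / len(predicted)
    recall = correct / len(gold)
    return 2 * precision * recall / (precision + recall)


def best_threshold(examples, thresholds):
    """Pick the threshold with the highest mean F1 over (scores, gold) pairs."""
    def mean_f1(threshold):
        total = sum(f1_at_threshold(s, g, threshold) for s, g in examples)
        return total / len(examples)
    return max(thresholds, key=mean_f1)


if __name__ == "__main__":
    # Toy data, invented purely for illustration.
    scores = {"a": 0.6, "b": 0.3, "c": 0.1}
    gold = {"a", "b"}
    print(hits_at_1(scores, gold))
    print(best_threshold([(scores, gold)], [0.05, 0.2, 0.5]))
```

In practice the threshold would be selected on a held-out development set rather than on the test questions.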

Citation

@inproceedings{xiong-etal-2019-improving,
    title = "Improving Question Answering over Incomplete {KB}s with Knowledge-Aware Reader",
    author = "Xiong, Wenhan and
      Yu, Mo and
      Chang, Shiyu and
      Guo, Xiaoxiao and
      Wang, William Yang",
    booktitle = "Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics",
    month = jul,
    year = "2019",
    address = "Florence, Italy",
    publisher = "Association for Computational Linguistics",
    url = "https://www.aclweb.org/anthology/P19-1417",
    doi = "10.18653/v1/P19-1417",
    pages = "4258--4264",
}
