LatticeNet

Project Page | Video | Paper

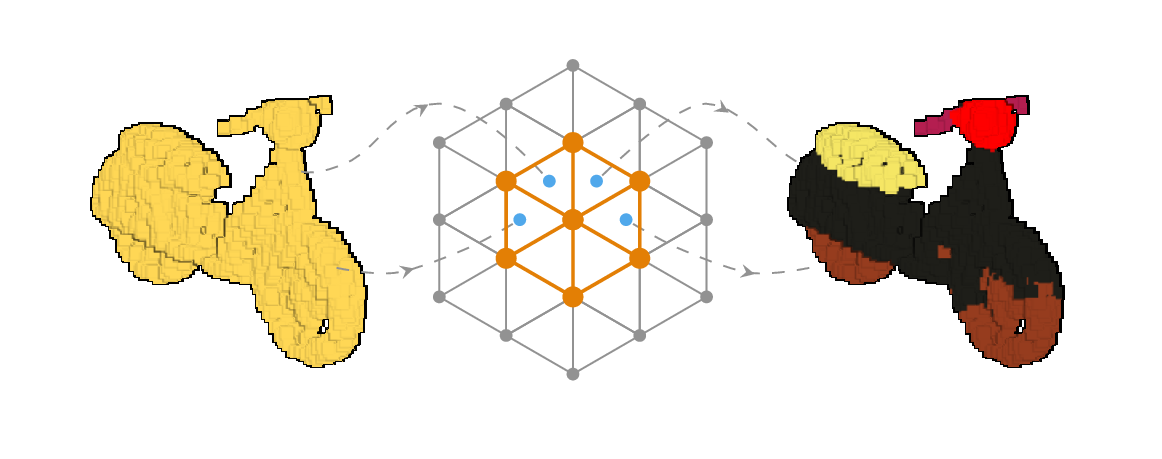

LatticeNet: Fast Point Cloud Segmentation Using Permutohedral Lattices

Radu Alexandru Rosu¹, Peer Schütt¹, Jan Quenzel¹, Sven Behnke¹

¹University of Bonn, Autonomous Intelligent Systems

This is the official PyTorch implementation of LatticeNet: Fast Point Cloud Segmentation Using Permutohedral Lattices.

LatticeNet can process raw point clouds for semantic segmentation (or any other per-point prediction task). The implementation is written in CUDA and PyTorch. There is no CPU implementation yet.
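LatticeNet builds on the permutohedral lattice named in the title: each point is embedded in the lattice and its features are spread over the vertices of the simplex that encloses it. The sketch below illustrates that idea in plain Python, following the classic splat and slice steps of Adams, Baek and Davis (2010) for high-dimensional filtering. It is not the repository's CUDA kernel: it omits the per-dimension scale factors, stores the lattice in a Python dict, and the names `enclosing_simplex`, `splat` and `slice_lattice` are hypothetical.

```python
import math
from collections import defaultdict


def enclosing_simplex(p):
    """Return [(vertex, weight), ...] for the d+1 lattice vertices enclosing point p.

    Follows the splatting step of Adams, Baek and Davis (2010) but skips the
    per-dimension scale factors, so the lattice spacing is left unnormalized.
    Vertices are (d+1)-tuples of ints on the hyperplane sum(x) == 0.
    """
    d = len(p)
    # Elevate p onto the hyperplane {x in R^(d+1) : sum(x) == 0}.
    elevated = [0.0] * (d + 1)
    sm = 0.0
    for i in range(d, 0, -1):
        elevated[i] = sm - i * p[i - 1]
        sm += p[i - 1]
    elevated[0] = sm

    # Round each coordinate to the nearest multiple of d+1 (a remainder-0 point).
    rem0 = []
    for e in elevated:
        up = math.ceil(e / (d + 1)) * (d + 1)
        down = math.floor(e / (d + 1)) * (d + 1)
        rem0.append(up if up - e < e - down else down)
    total = sum(rem0) // (d + 1)

    # rank[i] counts the coordinates whose residual is larger than coordinate i's.
    rank = [0] * (d + 1)
    for i in range(d + 1):
        for j in range(i + 1, d + 1):
            if elevated[i] - rem0[i] < elevated[j] - rem0[j]:
                rank[i] += 1
            else:
                rank[j] += 1

    # Rounding may leave the hyperplane (total != 0); shift coordinates back onto it.
    for i in range(d + 1):
        if total > 0:
            if rank[i] >= d + 1 - total:
                rem0[i] -= d + 1
                rank[i] += total - (d + 1)
            else:
                rank[i] += total
        elif total < 0:
            if rank[i] < -total:
                rem0[i] += d + 1
                rank[i] += (d + 1) + total
            else:
                rank[i] += total

    # Barycentric weights of the elevated point inside its simplex.
    bary = [0.0] * (d + 2)
    for i in range(d + 1):
        v = (elevated[i] - rem0[i]) / (d + 1)
        bary[d - rank[i]] += v
        bary[d - rank[i] + 1] -= v
    bary[0] += 1.0 + bary[d + 1]

    # Vertex r is rem0 plus the canonical offset for remainder r.
    return [
        (tuple(rem0[i] + (r if rank[i] <= d - r else r - (d + 1)) for i in range(d + 1)), bary[r])
        for r in range(d + 1)
    ]


def splat(points, values):
    """Accumulate [weight * value, weight] on every lattice vertex a point touches."""
    lattice = defaultdict(lambda: [0.0, 0.0])
    for p, val in zip(points, values):
        for vertex, w in enclosing_simplex(p):
            lattice[vertex][0] += w * val
            lattice[vertex][1] += w
    return lattice


def slice_lattice(lattice, points):
    """Interpolate lattice values back to points, normalized by the splatted weight."""
    out = []
    for p in points:
        num = den = 0.0
        for vertex, w in enclosing_simplex(p):
            acc, weight = lattice.get(vertex, (0.0, 0.0))
            num += w * acc
            den += w * weight
        out.append(num / den if den > 0 else 0.0)
    return out
```

Because `splat` accumulates both the weighted value and the weight itself, `slice_lattice` returns a normalized weighted average; splatting a constant value and slicing at the same points gives that constant back. Only lattice vertices actually touched by points are stored, which is what keeps the representation sparse for point clouds.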

Getting started

Install

The easiest way to install LatticeNet is with the included Dockerfile.

You will need Docker>=19.03 and the NVIDIA drivers installed.

Afterwards, you can build the docker image which contains all the LatticeNet dependencies using:

$ git clone --recursive https://github.com/RaduAlexandru/lattice_net

$ cd lattice_net/docker

$ ./build.sh lattice_img #this will take some time because some packages need to be built from source

$ ./run.sh lattice_img

Inside the container, clone and build EasyPBR, DataLoaders and LatticeNet:

$ git clone --recursive https://github.com/RaduAlexandru/easy_pbr

$ cd easy_pbr && make && cd ..

$ git clone --recursive https://github.com/RaduAlexandru/data_loaders

$ cd data_loaders && make && cd ..

$ git clone --recursive https://github.com/RaduAlexandru/lattice_net

$ cd lattice_net && make && cd ..
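Since there is no CPU implementation, it can be worth confirming that the container actually sees a GPU before building or training. The check below is a sketch that assumes the image provides PyTorch and, when the container is started with GPU access, the `nvidia-smi` tool; `check_cuda_environment` is a hypothetical helper, not part of LatticeNet.

```python
import importlib.util
import shutil


def check_cuda_environment():
    """Report whether the NVIDIA driver tool and a CUDA-enabled PyTorch are visible."""
    report = {
        "nvidia_smi_on_path": shutil.which("nvidia-smi") is not None,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
        "device_name": None,
    }
    if report["torch_installed"]:
        import torch  # imported lazily so the check also runs where PyTorch is missing

        report["cuda_available"] = bool(torch.cuda.is_available())
        if report["cuda_available"]:
            report["device_name"] = torch.cuda.get_device_name(0)
    return report


if __name__ == "__main__":
    for key, value in check_cuda_environment().items():
        print(f"{key}: {value}")
```

If `cuda_available` comes back false inside the container, the usual suspects are the host drivers or the container not having been given GPU access.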

Data

LatticeNet uses point clouds for training. The data is loaded with the DataLoaders package and interfaced using EasyPBR. Here we show how to train on the ShapeNet dataset.

While inside the Docker container (after running ./run.sh lattice_img), download and unzip the ShapeNet dataset:

$ bash ./lattice_net/data/shapenet_part_seg/download_shapenet.sh
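LatticeNet itself reads the data through the DataLoaders package, so the following is only a way to inspect raw files by hand. It assumes a layout like that of the original ShapeNet part-segmentation benchmark, where each shape has a `.pts` file with one `x y z` line per point and a matching `.seg` file with one integer part label per line. The files fetched by download_shapenet.sh may be organized differently, and `load_part_seg_sample` is a hypothetical helper.

```python
from pathlib import Path


def load_part_seg_sample(pts_path, seg_path):
    """Load one point cloud and its per-point part labels (hypothetical file layout).

    Assumes pts_path has one "x y z" line per point and seg_path one integer
    label per line, in the same order.
    """
    points = []
    for line in Path(pts_path).read_text().splitlines():
        fields = line.split()
        if fields:  # skip blank lines
            points.append(tuple(float(v) for v in fields[:3]))
    labels = [int(token) for token in Path(seg_path).read_text().split()]
    if len(points) != len(labels):
        raise ValueError(f"{len(points)} points but {len(labels)} labels")
    return points, labels
```

Checking that every point has exactly one label is the property a per-point segmentation task relies on, which is why the loader refuses mismatched files instead of truncating them.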

Usage

Train

LatticeNet uses config files to specify the dataset, the training parameters, the model architecture and various visualization options.

The config file used to train on the ShapeNet dataset is "lattice_net/config/ln_train_shapenet_example.cfg".

By default, the training script reads this config file and starts training:

$ ./lattice_net/latticenet_py/ln_train.py

Configuration options

Several configuration options are worth inspecting and modifying. We use ln_train_shapenet_example.cfg as an example.

core: hdpi: false #can be turned on and off to accommodate high-DPI displays. If the text and fonts in the visualizer are too big, set this option to false

train: with_viewer: false #setting this to true starts a visualizer that displays the currently segmented point cloud and its difference from the ground truth
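To flip these switches from a script rather than an editor, a small text substitution is enough. This sketch assumes only that each option appears as `key: value`, optionally followed by a `#` comment, as in the two options above; it does not parse the config format, and `set_cfg_option` is a hypothetical helper.

```python
import re


def set_cfg_option(cfg_text, key, value):
    """Return cfg_text with every `key: <old>` assignment changed to `key: value`.

    Keeps the text before the value (indentation, section prefix) and any
    trailing `#` comment. Raises KeyError if the option is not present.
    """
    # \b stops "hdpi" from matching inside a longer word such as "xhdpi".
    pattern = re.compile(rf"(\b{re.escape(key)}\s*:\s*)[^\s#]+")
    new_text, count = pattern.subn(lambda m: m.group(1) + value, cfg_text)
    if count == 0:
        raise KeyError(f"option {key!r} not found")
    return new_text
```

For example, reading "lattice_net/config/ln_train_shapenet_example.cfg", passing its contents through `set_cfg_option(text, "with_viewer", "true")` and writing the result back enables the viewer for the next training run.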

If training is performed with the viewer enabled, you should see something like this:

Citation

@inproceedings{rosu2020latticenet,
  title={LatticeNet: Fast point cloud segmentation using permutohedral lattices},
  author={Rosu, Radu Alexandru and Sch{\"u}tt, Peer and Quenzel, Jan and Behnke, Sven},
  booktitle={Proc. of Robotics: Science and Systems (RSS)},
  year={2020}
}