alphadl / Lookahead.pytorch

License: MIT

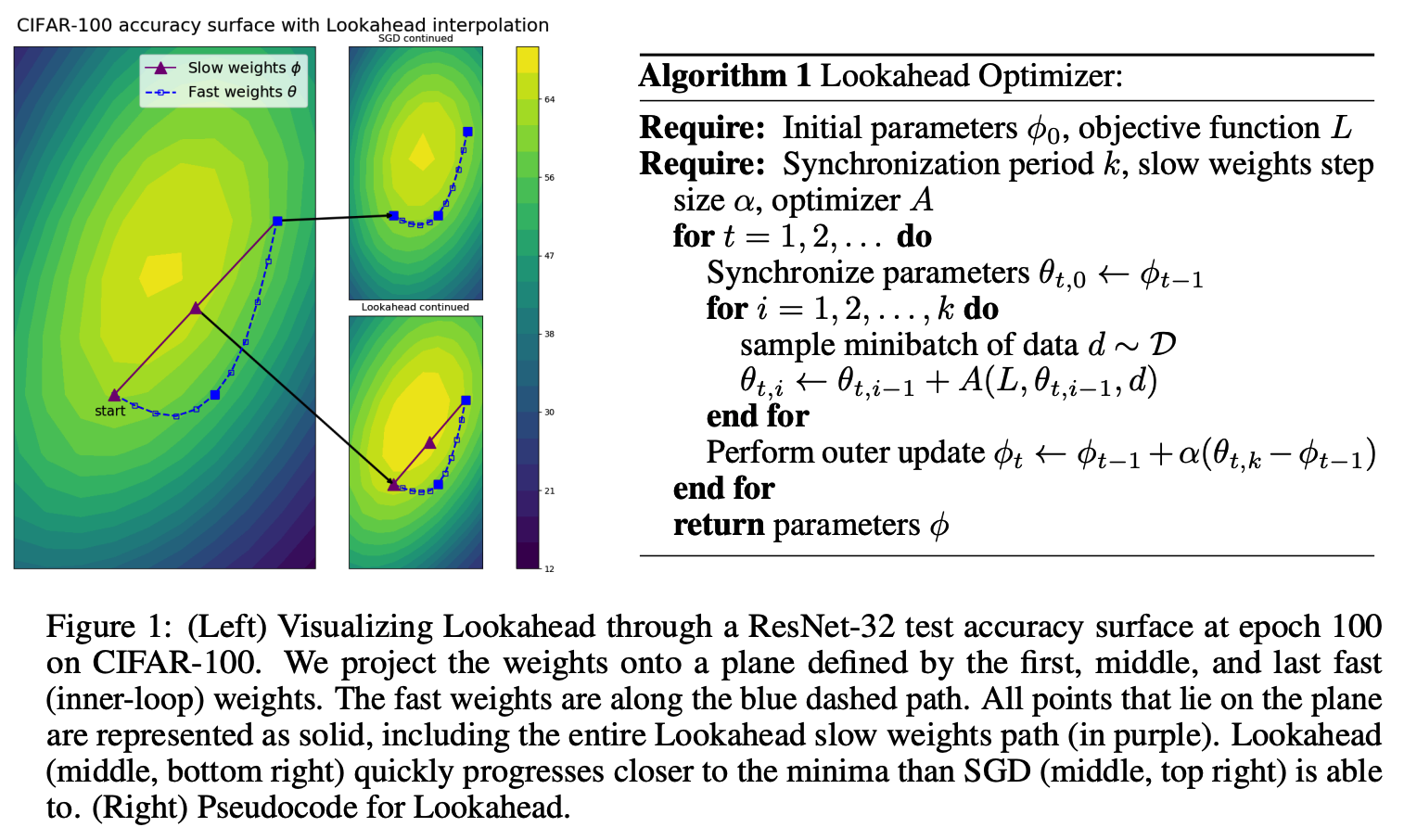

Lookahead optimizer ("Lookahead Optimizer: k steps forward, 1 step back") for PyTorch

Stars: ✭ 279

Programming Languages

python


Projects that are alternatives of or similar to Lookahead.pytorch

portfolio-optimizer

A library for portfolio optimization algorithms with python interface.

Stars: ✭ 19 (-93.19%)

Mutual labels: optimizer

sam.pytorch

A PyTorch implementation of Sharpness-Aware Minimization for Efficiently Improving Generalization

Stars: ✭ 96 (-65.59%)

Mutual labels: optimizer

Post-Tweaks

A post-installation batch script for Windows

Stars: ✭ 136 (-51.25%)

Mutual labels: optimizer

lookahead tensorflow

Lookahead optimizer ("Lookahead Optimizer: k steps forward, 1 step back") for tensorflow

Stars: ✭ 25 (-91.04%)

Mutual labels: optimizer

falcon

A WordPress cleanup and performance optimization plugin.

Stars: ✭ 17 (-93.91%)

Mutual labels: optimizer

Cleaner

The only storage saving app that actually works! :D

Stars: ✭ 27 (-90.32%)

Mutual labels: optimizer

simplu3D

A library to generate buildings from local urban regulations.

Stars: ✭ 18 (-93.55%)

Mutual labels: optimizer

postcss-clean

PostCss plugin to minify your CSS with clean-css

Stars: ✭ 41 (-85.3%)

Mutual labels: optimizer

rethinking-bnn-optimization

Implementation for the paper "Latent Weights Do Not Exist: Rethinking Binarized Neural Network Optimization"

Stars: ✭ 62 (-77.78%)

Mutual labels: optimizer

AdaBound-tensorflow

An optimizer that trains as fast as Adam and as good as SGD in Tensorflow

Stars: ✭ 44 (-84.23%)

Mutual labels: optimizer

goga

Go evolutionary algorithm is a computer library for developing evolutionary and genetic algorithms to solve optimisation problems with (or not) many constraints and many objectives. Also, a goal is to handle mixed-type representations (reals and integers).

Stars: ✭ 39 (-86.02%)

Mutual labels: optimizer

adamwr

Implements https://arxiv.org/abs/1711.05101 AdamW optimizer, cosine learning rate scheduler and "Cyclical Learning Rates for Training Neural Networks" https://arxiv.org/abs/1506.01186 for PyTorch framework

Stars: ✭ 130 (-53.41%)

Mutual labels: optimizer

EAGO.jl

A development environment for robust and global optimization

Stars: ✭ 106 (-62.01%)

Mutual labels: optimizer

Windows11-Optimization

Community repository to improve the security and performance of Windows 10 and Windows 11 with tweaks, commands, scripts, registry keys, configuration, tutorials and more

Stars: ✭ 17 (-93.91%)

Mutual labels: optimizer

pigosat

Go (golang) bindings for Picosat, the satisfiability solver

Stars: ✭ 15 (-94.62%)

Mutual labels: optimizer

Lookahead optimizer for PyTorch

PyTorch implementation of "Lookahead Optimizer: k steps forward, 1 step back"

Usage:

base_opt = torch.optim.Adam(model.parameters(), lr=1e-3, betas=(0.9, 0.999)) # Any optimizer

lookahead = Lookahead(base_opt, k=5, alpha=0.5) # Initialize Lookahead

lookahead.zero_grad()

loss_function(model(input), target).backward() # Self-defined loss function

lookahead.step()
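To make the roles of `k` and `alpha` more concrete, here is a minimal pure-Python sketch of the "k steps forward, 1 step back" update rule. It is not this repository's implementation: the function name `lookahead_minimize`, the `grad_fn` callback, and the use of plain gradient descent as the inner optimizer are all assumptions made for illustration.

```python
def lookahead_minimize(grad_fn, x0, lr=0.1, k=5, alpha=0.5, outer_steps=20):
    """Illustrative Lookahead loop around plain gradient descent.

    grad_fn: hypothetical callback returning the gradient (a list) at a point.
    x0:      initial weights as a list of floats.
    """
    slow = list(x0)  # slow weights
    for _ in range(outer_steps):
        fast = list(slow)  # fast weights restart from the slow weights
        # k steps forward: run the inner optimizer on the fast weights
        for _ in range(k):
            grads = grad_fn(fast)
            fast = [w - lr * g for w, g in zip(fast, grads)]
        # 1 step back: move the slow weights a fraction alpha toward the fast weights
        slow = [s + alpha * (f - s) for s, f in zip(slow, fast)]
    return slow


def quadratic_grad(x):
    # Gradient of f(x) = sum(x_i ** 2), used only as a toy objective
    return [2.0 * xi for xi in x]


if __name__ == "__main__":
    print(lookahead_minimize(quadratic_grad, [3.0, -2.0]))
```

With `alpha=1.0` the slow weights simply copy the fast weights, so the loop reduces to the inner optimizer alone; smaller values of `alpha` pull each synchronization back toward the previous slow weights.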

