LSTM Pose Machines

This repo includes the source code of the paper: "LSTM Pose Machines" (CVPR'18) by Yue Luo, Jimmy Ren, Zhouxia Wang, Wenxiu Sun, Jinshan Pan, Jianbo Liu, Jiahao Pang, Liang Lin.

Contact: Yue Luo ([email protected])

Before Everything

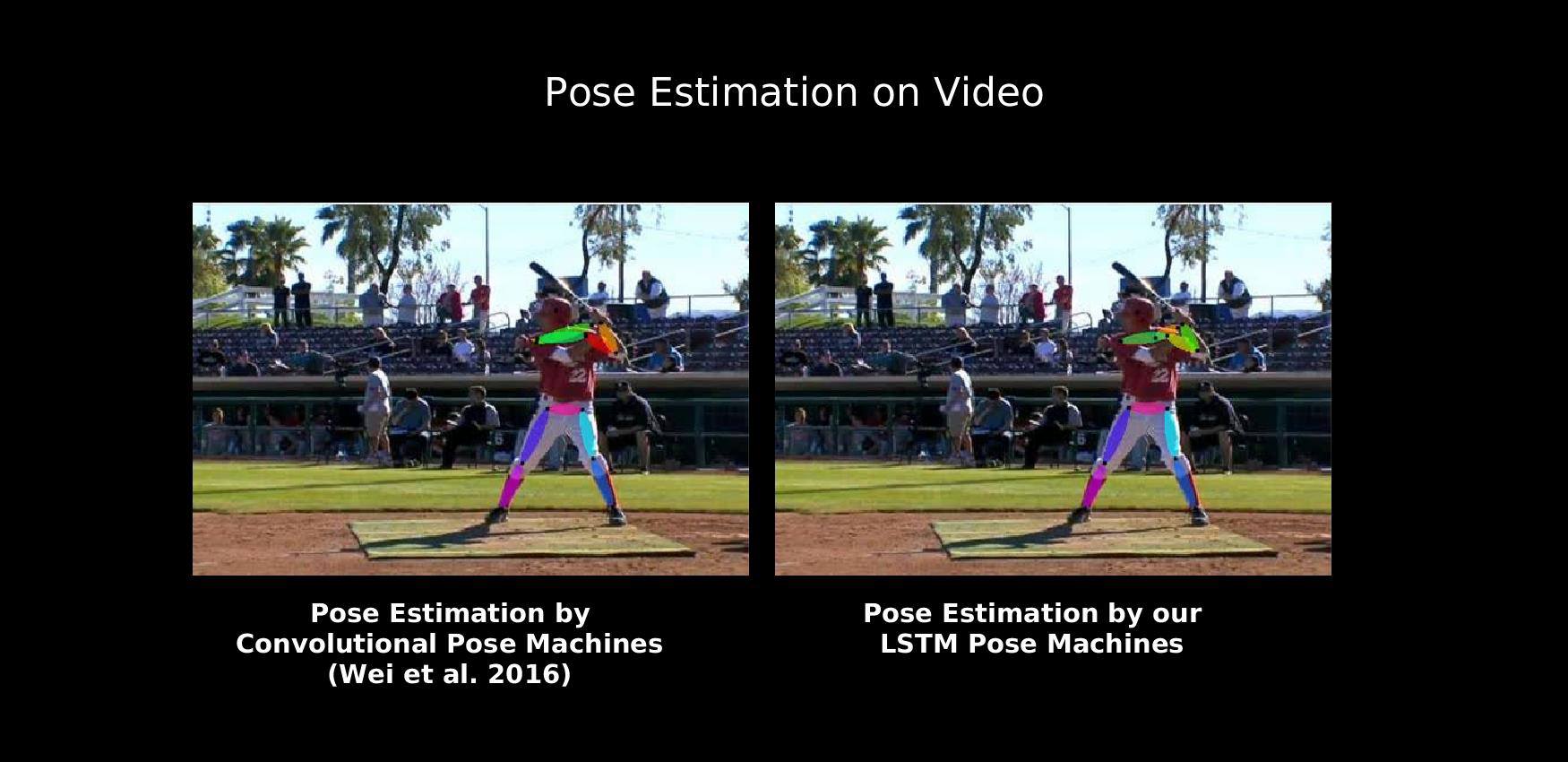

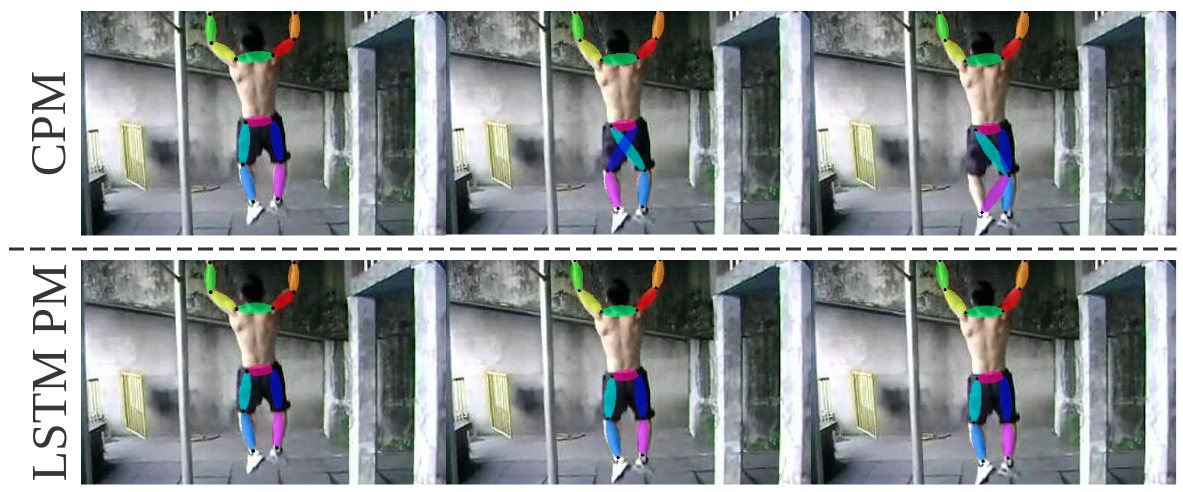

Click the images above to watch our results on video-based pose estimation. The first compares our method with the state-of-the-art single image pose estimation method "Convolutional Pose Machines (CPMs)" on videos. The second shows our LSTM Pose Machines on video pose estimation.

Prerequisites

The code is tested on 64-bit Linux (Ubuntu 14.04 LTS). You should also install MATLAB (R2015a) and OpenCV (at least 2.4.8). We have tested our code on a GTX Titan X with CUDA 8.0 and cuDNN v5. Please install all these prerequisites before running our code.
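The prerequisites above can be checked before building. The following is only a sketch: the `pkg-config` module name `opencv`, the `bin/matlab` path under the default install directory, and the `MATLAB_BIN` override variable are assumptions about a typical Ubuntu setup, not something this repository defines. It also relies on GNU `sort -V` for version comparison.

```shell
# True when version $1 is at least version $2 (relies on GNU sort -V).
version_at_least() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Report which prerequisites look present. Paths and package names are
# assumptions; MATLAB_BIN is a hypothetical override for the MATLAB binary.
check_prereqs() {
  matlab_bin=${MATLAB_BIN:-/usr/local/MATLAB/R2015a/bin/matlab}
  status=0

  if [ -x "$matlab_bin" ]; then
    echo "MATLAB: found at $matlab_bin"
  else
    echo "MATLAB: not found at $matlab_bin"; status=1
  fi

  if command -v nvcc >/dev/null 2>&1; then
    echo "CUDA: nvcc found"
  else
    echo "CUDA: nvcc not on PATH"; status=1
  fi

  # OpenCV 2.4.x usually registers itself with pkg-config as "opencv".
  opencv_version=$(pkg-config --modversion opencv 2>/dev/null || true)
  if [ -n "$opencv_version" ] && version_at_least "$opencv_version" 2.4.8; then
    echo "OpenCV: $opencv_version"
  else
    echo "OpenCV: missing or older than 2.4.8"; status=1
  fi

  return "$status"
}
```

Calling `check_prereqs` prints one line per prerequisite and returns non-zero if any of them appears to be missing.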

Installation

- Get the code.

      git clone https://github.com/lawy623/LSTM_Pose_Machines.git
      cd LSTM_Pose_Machines

- Build the code. Please follow the Caffe instructions to install all necessary packages and build it.

      cd caffe/
      # Modify Makefile.config according to your Caffe installation. Remember to enable CUDA and cuDNN.
      make -j8
      make matcaffe

- Prepare data. We write all data and labels into `.mat` files.

  - Go to the directory `dataset/` and run `get_data.sh` to download the PENN and JHMDB datasets.
  - To create the `.mat` files, go to the directories `dataset/PENN` and `dataset/JHMDB` and run the MATLAB scripts `PENN_PreData.m` and `JHMDB_PreData.m` respectively. Preparing the data takes some time.

Training

- As described in our paper, we first trained a "single image model" based on the repository Convolutional Pose Machines (CPMs). You can download this model at [Google Drive|Baidu Pan]. Put it in `training/prototxt/preModel` after downloading it. If you prefer to train it yourself, we also provide the prototxts in `training/prototxt/preModel`; you can train the model with them using the code released by CPMs. This single image model is trained on the Leeds Sports Pose (LSP) dataset and the MPII dataset.

- To train our LSTM Pose Machines on video datasets, go to `training/` and run `video_train_JHMDB.m` or `video_train_PENN.m`. You can also run the MATLAB scripts from a terminal in the directory `training/` with the following commands. By default, MATLAB is assumed to be installed under `/usr/local/MATLAB/R2015a`. If your MATLAB is installed elsewhere, modify `train_LSTM.sh` before running the scripts from a terminal. Note that to train our LSTM Pose Machines on the sub-JHMDB datasets, you must modify line 10 of `video_train_JHMDB.m` and set the correct subset ID before you run this script.

    ## To run the training MATLAB scripts from a terminal
    sh prototxt/PENN/LSTM_5/train_LSTM.sh   # To train on the PENN dataset

    ## Or
    sh prototxt/sub-JHMDB/LSTM_5_Sub1/train_LSTM.sh   # To train on sub-JHMDB subset 1, first change `line 10` of `video_train_JHMDB.m` to `modelID = 1`.
    sh prototxt/sub-JHMDB/LSTM_5_Sub2/train_LSTM.sh   # To train on sub-JHMDB subset 2, first change `line 10` of `video_train_JHMDB.m` to `modelID = 2`.
    sh prototxt/sub-JHMDB/LSTM_5_Sub3/train_LSTM.sh   # To train on sub-JHMDB subset 3, first change `line 10` of `video_train_JHMDB.m` to `modelID = 3`.
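Editing line 10 by hand before each sub-JHMDB run is easy to forget, so the step can be scripted. The helper below is a hypothetical sketch, not part of this repository: `set_matlab_assignment` is an invented name, and it assumes the target line already has the form `modelID = <n>;`. It refuses to touch the file if the line does not assign the named variable.

```shell
# Rewrite one line of a MATLAB script to "<var> = <value>;".
# Usage: set_matlab_assignment <file> <line number> <variable> <value>
set_matlab_assignment() {
  file=$1; line_no=$2; var=$3; value=$4

  [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }

  # Refuse to edit when the target line does not already assign the variable.
  if ! sed -n "${line_no}p" "$file" | grep -q "^[[:space:]]*${var}[[:space:]]*="; then
    echo "line $line_no of $file does not assign $var" >&2
    return 1
  fi

  tmp=$(mktemp) || return 1
  # Replace only the requested line; copy every other line unchanged.
  awk -v n="$line_no" -v var="$var" -v val="$value" \
    'NR == n { print var " = " val ";"; next } { print }' "$file" > "$tmp" &&
    cat "$tmp" > "$file"   # write back in place so file permissions are kept
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

For example, from `training/`, running `set_matlab_assignment video_train_JHMDB.m 10 modelID 2` and then `sh prototxt/sub-JHMDB/LSTM_5_Sub2/train_LSTM.sh` would select and train subset 2. The same helper could plausibly be pointed at line 15 of `benchmark.m` to set `benchmark_modelID` for testing, under the same assumption about the line's form.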

Testing

- Download our trained models from [Google Drive|Baidu Pan]. Put these models in `model/PENN/` and `model/sub-JHMDB/` respectively.

- Go to the directory `testing/`. Specify the model you want to test by modifying line 15 of `benchmark.m` and setting the correct `benchmark_modelID`. Then run `test_LSTM.sh`, which runs the MATLAB test script to get our evaluation results. Before running this script, look in `test_LSTM.sh` and modify the MATLAB bin location and the `-logfile` name.

- Predicted results will be saved in `testing/predicts/`. You can explore the results by plotting the predicted locations on images.

- The predicted accuracies for the two datasets are reported in the following order:

PENN dataset:

| Head | R_Shoulder | L_Shoulder | R_Elbow | L_Elbow | R_Wrist | L_Wrist | R_Hip | L_Hip | R_Knee | L_Knee | R_Ankle | L_Ankle | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 98.90% | 98.50% | 98.60% | 96.60% | 96.60% | 96.60% | 96.50% | 98.20% | 98.20% | 97.90% | 98.50% | 97.30% | 97.70% | 97.73% |

sub-JHMDB dataset:

| Neck | Belly | Head | R_Shoulder | L_Shoulder | R_Hip | L_Hip | R_Elbow | L_Elbow | R_Knee | L_Knee | R_Wrist | L_Wrist | R_Ankle | L_Ankle | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 99.20% | 98.97% | 98.27% | 96.67% | 96.13% | 98.83% | 98.63% | 90.17% | 89.10% | 96.40% | 94.80% | 85.93% | 86.17% | 91.90% | 89.90% | 94.09% |

To reproduce the numbers reported in our paper, remove the joints that the paper does not list, compute the averages, and reorder the accuracies to match the paper.
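The post-processing described above could be sketched with a few small shell functions. This is an illustration under assumptions: it reads "calculate average" as averaging the left and right values of each joint, and the list of joints to keep and their order must be supplied by you from the paper's tables, since neither is stated here. The function names are hypothetical.

```shell
# Turn a header line and a value line into "joint<TAB>accuracy" rows.
# The "||" separator token is skipped and the trailing "%" is stripped.
pair_accuracies() {
  printf '%s\n%s\n' "$1" "$2" | awk '
    NR == 1 { n = split($0, name, " "); next }
    {
      for (i = 1; i <= n; i++) {
        if (name[i] == "||") continue
        v = $i; sub(/%$/, "", v)
        printf "%s\t%s\n", name[i], v
      }
    }'
}

# Average rows that differ only by an "L_" or "R_" prefix (assumed meaning
# of "calculate average"); rows without a prefix pass through unchanged.
merge_left_right() {
  awk -F'\t' '
    {
      key = $1; sub(/^[LR]_/, "", key)
      if (!(key in sum)) order[++n] = key
      sum[key] += $2; cnt[key]++
    }
    END {
      for (i = 1; i <= n; i++)
        printf "%s\t%.2f\n", order[i], sum[order[i]] / cnt[order[i]]
    }'
}

# Keep only the joints named in "$1" (space-separated), in that order.
select_joints() {
  awk -F'\t' -v want="$1" '
    { acc[$1] = $2 }
    END {
      n = split(want, w, " ")
      for (i = 1; i <= n; i++)
        if (w[i] in acc) printf "%s\t%s\n", w[i], acc[w[i]]
    }'
}
```

The three steps are meant to be chained, for example `pair_accuracies "$header" "$values" | merge_left_right | select_joints "Head Shoulder Elbow"`, where `$header` and `$values` hold one of the result rows above and the joint list is only a placeholder.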

Visualization

- We provide sample visualization code in `testing/visualization/`. Run `visualization.m` to view our predicted results on the PENN dataset. Make sure you have already run the testing script for PENN before visualizing the results.

Citation

Please cite our paper if you find it useful for your work:

    @inproceedings{Luo2018LSTMPose,
      title={LSTM Pose Machines},
      author={Yue Luo and Jimmy Ren and Zhouxia Wang and Wenxiu Sun and Jinshan Pan and Jianbo Liu and Jiahao Pang and Liang Lin},
      booktitle={CVPR},
      year={2018},
    }