lichengunc / Mattnet

Labels

Projects that are alternatives of or similar to Mattnet

PyTorch Implementation of MAttNet

Introduction

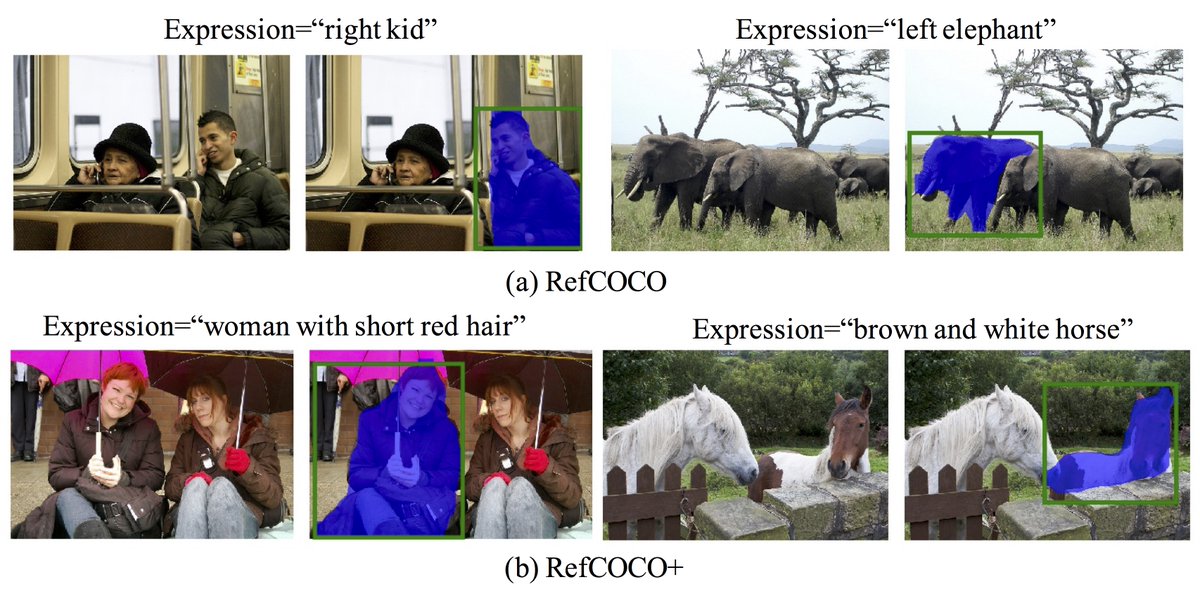

This repository is Pytorch implementation of MAttNet: Modular Attention Network for Referring Expression Comprehension in CVPR 2018. Refering Expressions are natural language utterances that indicate particular objects within a scene, e.g., "the woman in red sweater", "the man on the right", etc. For robots or other intelligent agents communicating with people in the world, the ability to accurately comprehend such expressions will be a necessary component for natural interactions. In this project, we address referring expression comprehension: localizing an image region described by a natural language expression. Check our paper and online demo for more details. Examples are shown as follows:

Prerequisites

- Python 2.7

- Pytorch 0.2 (may not work with 1.0 or higher)

- CUDA 8.0

Installation

- Clone the MAttNet repository

git clone --recursive https://github.com/lichengunc/MAttNet

- Prepare the submodules and associated data

-

Mask R-CNN: Follow the instructions of my mask-faster-rcnn repo, preparing everything needed for

pyutils/mask-faster-rcnn. You could usecv/mrcn_detection.ipynbto test if you've get Mask R-CNN ready. -

REFER API and data: Use the download links of REFER and go to the foloder running

make. Followdata/README.mdto prepare images and refcoco/refcoco+/refcocog annotations. -

refer-parser2: Follow the instructions of refer-parser2 to extract the parsed expressions using Vicente's R1-R7 attributes. Note this sub-module is only used if you want to train the models by yourself.

Training

- Prepare the training and evaluation data by running

tools/prepro.py:

python tools/prepro.py --dataset refcoco --splitBy unc

- Extract features using Mask R-CNN, where the

head_featsare used in subject module training andann_featsis used in relationship module training.

CUDA_VISIBLE_DEVICES=gpu_id python tools/extract_mrcn_head_feats.py --dataset refcoco --splitBy unc

CUDA_VISIBLE_DEVICES=gpu_id python tools/extract_mrcn_ann_feats.py --dataset refcoco --splitBy unc

- Detect objects/masks and extract features (only needed if you want to evaluate the automatic comprehension). We empirically set the confidence threshold of Mask R-CNN as 0.65.

CUDA_VISIBLE_DEVICES=gpu_id python tools/run_detect.py --dataset refcoco --splitBy unc --conf_thresh 0.65

CUDA_VISIBLE_DEVICES=gpu_id python tools/run_detect_to_mask.py --dataset refcoco --splitBy unc

CUDA_VISIBLE_DEVICES=gpu_id python tools/extract_mrcn_det_feats.py --dataset refcoco --splitBy unc

- Train MAttNet with ground-truth annotation:

./experiments/scripts/train_mattnet.sh GPU_ID refcoco unc

During training, you may want to use cv/inpect_cv.ipynb to check the training/validation curves and do cross validation.

Evaluation

Evaluate MAttNet with ground-truth annotation:

./experiments/scripts/eval_easy.sh GPUID refcoco unc

If you detected/extracted the Mask R-CNN results already (step 3 above), now you can evaluate the automatic comprehension accuracy using Mask R-CNN detection and segmentation:

./experiments/scripts/eval_dets.sh GPU_ID refcoco unc

./experiments/scripts/eval_masks.sh GPU_ID refcoco unc

Pre-trained Models

In order to get the results in our paper, please follow Training Step 1-3 for data and feature preparation then run Evaluation Step 1.

We provide the pre-trained models for RefCOCO, RefCOCO+ and RefCOCOg. Download and put them under ./output folder.

- RefCOCO: Pre-trained model (56M)

| Localization (gt-box) | Localization (Mask R-CNN) | Segmentation (Mask R-CNN) | ||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

|

- RefCOCO+: Pre-trained model (56M)

| Localization (gt-box) | Localization (Mask R-CNN) | Segmentation (Mask R-CNN) | ||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

|

- RefCOCOg: Pre-trained model (58M)

| Localization (gt-box) | Localization (Mask R-CNN) | Segmentation (Mask R-CNN) | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

|

Pre-computed detections/masks

We provide the detected boxes/masks for those who are interested in automatic comprehension. This was done using Training Step 3. Note our Mask R-CNN is trained on COCO’s training images, excluding those in RefCOCO, RefCOCO+, and RefCOCOg’s validation+testing. That said it is unfair to use the other off-the-shelf detectors trained on whole COCO set for this task.

Demo

Run cv/example_demo.ipynb for demo example.

You can also check our Online Demo.

Citation

@inproceedings{yu2018mattnet,

title={MAttNet: Modular Attention Network for Referring Expression Comprehension},

author={Yu, Licheng and Lin, Zhe and Shen, Xiaohui and Yang, Jimei and Lu, Xin and Bansal, Mohit and Berg, Tamara L},

booktitle={CVPR},

year={2018}

}

License

MAttNet is released under the MIT License (refer to the LICENSE file for details).

A few notes

I'd like to share several thoughts after working on Referring Expressions for 3 years (since 2015):

-

Model Improvement: I'm satisfied with this model architecture but still feel the context information is not fully exploited. We tried the context of visual comparison in our ECCV2016. It worked well but relied too much on the detector. That's why I removed the appearance difference in this paper. (Location comparison still remains as it's too important.) I'm looking forward to seeing more robust and interesting context proposed in the future. Another direction is the end-to-end multi-task training. Current model loses some concepts after going through Mask R-CNN. For example, Mask R-CNN can perfectly detect (big)

sports ballin an image but MAttNet can no longer recognize it. The reason is we are training the two models seperately and our RefCOCO dataset do not have ball-related expressions. -

Borrowing External Concepts: Current datasets (RefCOCO, RefCOCO+, RefCOCOg) have bias toward

personcategory. Around half of the expressions are related to person. However, in real life people may also be interested in referring other common objects (cup, bottle, book) or even stuff (sky, tree or building). As RefCOCO already provides common referring expression structure, the (only) piece left is getting the universal objects/stuff concepts, which could be borrowed from external datasets/tasks. -

Referring Expression Generation (REG): Surprisingly few paper works on referring expression generation task so far! Dialogue is important. Referring to things is always the first step for computer-to-human interaction. (I don't think people would love to use a passive computer or robot which cannot talk.) In our CVPR2017, we actually collected more testing expressions for better REG evaluation. (Check REFER2 for the data. The only difference with REFER is it contains more testing expressions on RefCOCO and RefCOCO+.) While we achieved the SOA results in the paper, there should be plentiful space for further improvement. Our speaker model can only utter "boring" and "safe" expressions, thus cannot well specify every object in an image. GAN or a Modular Speaker might be effective weapons as future work.

-

Data Collection: Larger Referring Expressions dataset is apparently the most straight-forward way to improve the performance of any model. You might have two questions: 1) What data should we collect? 2) How do we collect the dataset? A larger Referring Expression dataset covering the whole MS COCO is expected (of course). This will also make end-to-end learning possible in the future. Task-specific dataset is also interesting. Since ReferIt Game, there have been several datasets in different domains, e.g., video, dialogue and spoken language. Note you may be careful about the problem setting. Randomly fitting referring expressions into a task (just for paper publication) is boring. As for the collection method, I prefer the way used in our ealy work ReferIt Game. The collected expressions might be slightly short (compared with image captioning datasets), but that is how we refer things naturally in daily life.

Authorship

This project is maintained by Licheng Yu.