MILVLG / Mcan Vqa


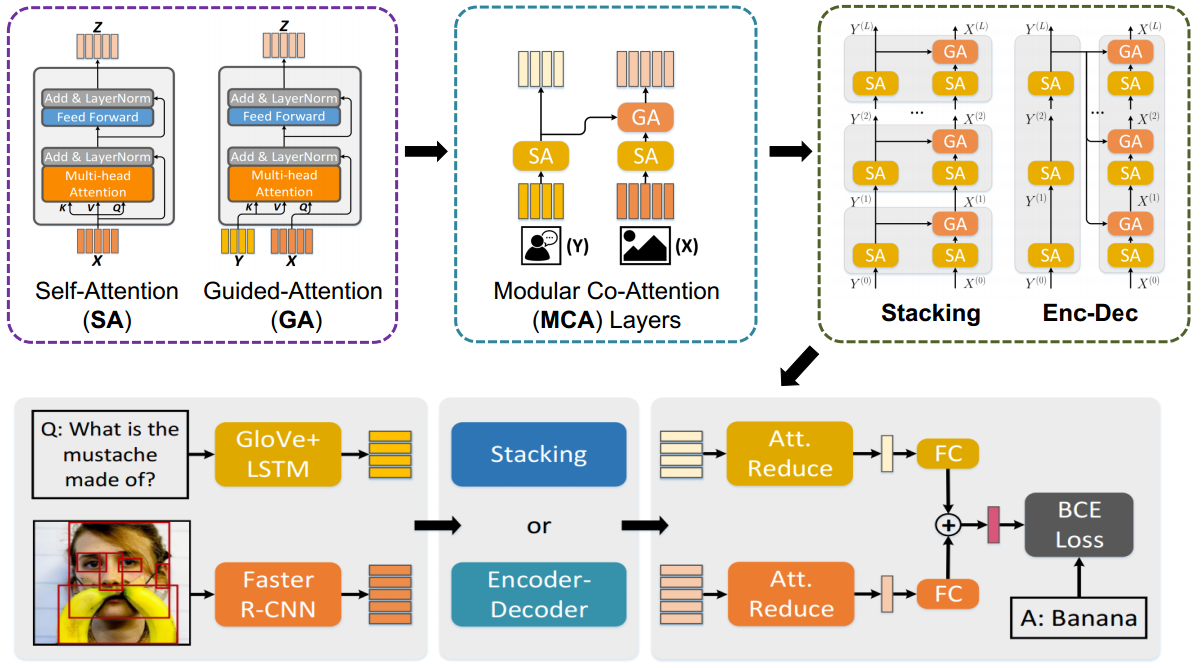

Deep Modular Co-Attention Networks (MCAN)

This repository contains the PyTorch implementation of MCAN for VQA, which won the VQA Challenge 2019. With an ensemble of 27 models, we achieved overall accuracies of 75.23% and 75.26% on the test-std and test-challenge splits, respectively. See our slides for details.

Using the widely adopted bottom-up-attention visual features, a single MCAN model achieves 70.70% (small model) and 70.93% (large model) overall accuracy on the test-dev split of the VQA-v2 dataset, significantly outperforming existing state-of-the-art methods. Please check our paper for details.

Updates

July 10, 2019

- A PyTorch implementation of MCAN, along with several other state-of-the-art models for VQA/GQA/CLEVR, is maintained in our other project, OpenVQA.

June 13, 2019

- Pure PyTorch implementation of the MCAN model with a deep encoder-decoder strategy.

- Self-contained documentation from scratch.

- A model zoo with pre-trained MCAN-small and MCAN-large models on the VQA-v2 dataset.

- Multi-GPU training and gradient accumulation.


Prerequisites

Software and Hardware Requirements

You will need a machine with at least 1 GPU (>= 8GB), 20GB of memory and 50GB of free disk space. We strongly recommend using an SSD to guarantee high-speed I/O.

You should first install some necessary packages:

- Install Python >= 3.5.
- Install PyTorch >= 0.4.1 with CUDA (PyTorch 1.x is also supported).
- Install SpaCy and initialize the GloVe vectors as follows:

$ pip install -r requirements.txt
$ wget https://github.com/explosion/spacy-models/releases/download/en_vectors_web_lg-2.1.0/en_vectors_web_lg-2.1.0.tar.gz -O en_vectors_web_lg-2.1.0.tar.gz
$ pip install en_vectors_web_lg-2.1.0.tar.gz

Setup

The image features are extracted using the bottom-up-attention strategy, with each image represented as a dynamic number (from 10 to 100) of 2048-D features. We store the features for each image in a .npz file. You can prepare the visual features yourself or download the extracted features from OneDrive or BaiduYun. The download contains three files, train2014.tar.gz, val2014.tar.gz, and test2015.tar.gz, corresponding to the features of the train/val/test images for VQA-v2, respectively. Place them as follows:

|-- datasets
    |-- coco_extract
    |   |-- train2014.tar.gz
    |   |-- val2014.tar.gz
    |   |-- test2015.tar.gz
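Because each image has a variable number of regions (10 to 100), the features usually have to be padded to a fixed length before images can be batched together. The sketch below zero-pads an (N, 2048) feature matrix to 100 rows with NumPy and derives a padding mask from the all-zero rows. The pad size of 100 follows the upper bound stated above; the function names are hypothetical, and the sketch does not read the .npz files themselves, whose internal key names are not documented here.

```python
import numpy as np

FEAT_DIM = 2048   # dimensionality of each bottom-up-attention region feature
MAX_BOXES = 100   # upper bound on regions per image, as stated above


def pad_features(feats: np.ndarray, max_boxes: int = MAX_BOXES) -> np.ndarray:
    """Zero-pad an (N, FEAT_DIM) array to (max_boxes, FEAT_DIM), truncating if N > max_boxes."""
    if feats.ndim != 2 or feats.shape[1] != FEAT_DIM:
        raise ValueError(f"expected shape (N, {FEAT_DIM}), got {feats.shape}")
    feats = feats[:max_boxes]
    padded = np.zeros((max_boxes, FEAT_DIM), dtype=feats.dtype)
    padded[: feats.shape[0]] = feats
    return padded


def padding_mask(padded: np.ndarray) -> np.ndarray:
    """True for all-zero rows, i.e. positions introduced by padding."""
    return np.abs(padded).sum(axis=1) == 0


# Example: an image with 36 detected regions.
feats = np.ones((36, FEAT_DIM), dtype=np.float32)
padded = pad_features(feats)
mask = padding_mask(padded)
print(padded.shape, int(mask.sum()))  # (100, 2048) 64
```

Deriving the mask from zero rows keeps the stored files compact, at the cost of treating any genuine all-zero feature vector as padding.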

In addition, we use the VQA samples from the Visual Genome dataset to augment the training set. Following existing strategies, we preprocess these samples with two rules:

- Select the QA pairs whose corresponding images appear in the MSCOCO train and val splits.

- Select the QA pairs whose answer appears in the processed answer list (answers that occur more than 8 times among all VQA-v2 answers).
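The two rules above can be sketched as a filter over QA pairs. The record layout (a dict with hypothetical keys coco_image_id and answer), the helper names, and the absence of any answer normalization are assumptions for illustration; this is not the preprocessing code used to produce VG_questions.json and VG_annotations.json.

```python
from collections import Counter


def build_answer_list(vqa_answers, min_count=8):
    """Keep answers that occur more than `min_count` times among the VQA-v2 answers."""
    counts = Counter(vqa_answers)
    return {ans for ans, c in counts.items() if c > min_count}


def filter_vg_pairs(vg_pairs, coco_trainval_image_ids, answer_list):
    """Apply both filtering rules to Visual Genome QA pairs.

    Each pair is assumed (hypothetically) to be a dict with 'coco_image_id'
    (None if the VG image has no MSCOCO counterpart) and 'answer'.
    """
    kept = []
    for pair in vg_pairs:
        # Rule 1: the image must appear in the MSCOCO train or val split.
        if pair["coco_image_id"] not in coco_trainval_image_ids:
            continue
        # Rule 2: the answer must be in the processed answer list.
        if pair["answer"] not in answer_list:
            continue
        kept.append(pair)
    return kept


answers = ["yes"] * 9 + ["no"] * 8 + ["red"]
answer_list = build_answer_list(answers)   # only "yes" occurs more than 8 times
pairs = [
    {"coco_image_id": 1, "answer": "yes"},     # kept
    {"coco_image_id": 2, "answer": "no"},      # dropped by rule 2
    {"coco_image_id": None, "answer": "yes"},  # dropped by rule 1
]
kept = filter_vg_pairs(pairs, {1, 2}, answer_list)
print(len(kept))  # 1
```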

For convenience, we provide our processed VG questions and annotations files; you can download them from OneDrive or BaiduYun and place them as follows:

|-- datasets
    |-- vqa
    |   |-- VG_questions.json
    |   |-- VG_annotations.json

After that, run the following script to set up all the configurations needed for the experiments:

$ sh setup.sh

Running the script will:

- Download the QA files for VQA-v2.

- Unzip the bottom-up features.

Finally, the datasets folder will have the following structure:

|-- datasets
    |-- coco_extract
    |   |-- train2014
    |   |   |-- COCO_train2014_...jpg.npz
    |   |   |-- ...
    |   |-- val2014
    |   |   |-- COCO_val2014_...jpg.npz
    |   |   |-- ...
    |   |-- test2015
    |   |   |-- COCO_test2015_...jpg.npz
    |   |   |-- ...
    |-- vqa
    |   |-- v2_OpenEnded_mscoco_train2014_questions.json
    |   |-- v2_OpenEnded_mscoco_val2014_questions.json
    |   |-- v2_OpenEnded_mscoco_test2015_questions.json
    |   |-- v2_OpenEnded_mscoco_test-dev2015_questions.json
    |   |-- v2_mscoco_train2014_annotations.json
    |   |-- v2_mscoco_val2014_annotations.json
    |   |-- VG_questions.json
    |   |-- VG_annotations.json
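Before training, it can help to confirm that the layout above is complete. The helper below is a hypothetical sanity check, not part of the repository; it assumes the datasets folder sits in the directory you run it from and only checks the paths listed in the tree, plus a count of .npz files per feature directory.

```python
import os

# Directories and files expected after running setup.sh, taken from the tree above.
EXPECTED_DIRS = [
    "datasets/coco_extract/train2014",
    "datasets/coco_extract/val2014",
    "datasets/coco_extract/test2015",
]
EXPECTED_FILES = [
    "datasets/vqa/v2_OpenEnded_mscoco_train2014_questions.json",
    "datasets/vqa/v2_OpenEnded_mscoco_val2014_questions.json",
    "datasets/vqa/v2_OpenEnded_mscoco_test2015_questions.json",
    "datasets/vqa/v2_OpenEnded_mscoco_test-dev2015_questions.json",
    "datasets/vqa/v2_mscoco_train2014_annotations.json",
    "datasets/vqa/v2_mscoco_val2014_annotations.json",
    "datasets/vqa/VG_questions.json",
    "datasets/vqa/VG_annotations.json",
]


def missing_paths(root: str = ".") -> list:
    """Return every expected directory or file that does not exist under `root`."""
    missing = [p for p in EXPECTED_DIRS if not os.path.isdir(os.path.join(root, p))]
    missing += [p for p in EXPECTED_FILES if not os.path.isfile(os.path.join(root, p))]
    return missing


def count_features(root: str = ".") -> dict:
    """Count the .npz feature files in each image-feature directory (0 if absent)."""
    counts = {}
    for d in EXPECTED_DIRS:
        path = os.path.join(root, d)
        if os.path.isdir(path):
            counts[d] = sum(1 for f in os.listdir(path) if f.endswith(".npz"))
        else:
            counts[d] = 0
    return counts


if __name__ == "__main__":
    problems = missing_paths()
    if problems:
        print("Missing:", *problems, sep="\n  ")
    else:
        print("Dataset layout looks complete:", count_features())
```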

Training

The following script will start training with the default hyperparameters:

$ python3 run.py --RUN='train'

All checkpoint files will be saved to:

ckpts/ckpt_<VERSION>/epoch<EPOCH_NUMBER>.pkl

and the training log file will be placed at:

results/log/log_run_<VERSION>.txt
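The naming convention above can be expressed as two small helpers, for example to locate the checkpoint that a later --CKPT_V/--CKPT_E pair refers to. These helpers are hypothetical and not part of the repository; they only reproduce the path patterns shown above, and the mapping of --CKPT_V to <VERSION> is an assumption based on the pretrained-model folders (ckpt_small/epoch13.pkl).

```python
import os


def ckpt_path(version: str, epoch: int, root: str = "ckpts") -> str:
    """Checkpoint path following ckpts/ckpt_<VERSION>/epoch<EPOCH_NUMBER>.pkl."""
    return os.path.join(root, f"ckpt_{version}", f"epoch{epoch}.pkl")


def log_path(version: str, root: str = os.path.join("results", "log")) -> str:
    """Training log path following results/log/log_run_<VERSION>.txt."""
    return os.path.join(root, f"log_run_{version}.txt")


# Output shown for POSIX path separators.
print(ckpt_path("small", 13))  # ckpts/ckpt_small/epoch13.pkl
print(log_path("small"))       # results/log/log_run_small.txt
```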

You can also add the following optional arguments:

- --VERSION=str, e.g. --VERSION='small_model', to assign a name to your model.
- --GPU=str, e.g. --GPU='2', to train the model on the specified GPU device.
- --NW=int, e.g. --NW=8, to accelerate I/O with more data-loading workers.
- --MODEL={'small', 'large'} (Warning: the large model consumes more GPU memory; Multi-GPU Training and Gradient Accumulation can help if you want to train it with limited GPU memory).
- --SPLIT={'train', 'train+val', 'train+val+vg'} to combine the training datasets as you want. The default training split is 'train+val+vg'. Setting --SPLIT='train' will trigger the evaluation script to compute the validation score automatically after every epoch.
- --RESUME=True to resume training from saved checkpoint parameters. In this case, you should also specify the checkpoint version --CKPT_V=str and the epoch number to resume from, --CKPT_E=int.
- --MAX_EPOCH=int to stop training at a specified epoch number.
- --PRELOAD=True to pre-load all the image features into memory during initialization (Warning: this needs an extra 25~30GB of memory and about 30 minutes of loading time from an HDD).
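The '+'-joined values accepted by --SPLIT can be read as a list of sources to concatenate. The sketch below illustrates that idea with a hypothetical mapping from split names to the question files in the dataset tree; it is not the repository's configuration code. It also encodes why per-epoch validation only applies to --SPLIT='train': once val is part of the training data, it can no longer serve as a held-out set.

```python
# Hypothetical mapping from split names to question files (paths from the
# dataset tree in the Setup section); not the repository's actual config.
SPLIT_FILES = {
    "train": "datasets/vqa/v2_OpenEnded_mscoco_train2014_questions.json",
    "val": "datasets/vqa/v2_OpenEnded_mscoco_val2014_questions.json",
    "vg": "datasets/vqa/VG_questions.json",
}


def resolve_split(split: str) -> list:
    """Turn a '+'-joined split string such as 'train+val+vg' into question files."""
    names = [name.strip() for name in split.split("+")]
    unknown = [name for name in names if name not in SPLIT_FILES]
    if unknown:
        raise ValueError(f"unknown split(s): {unknown}")
    return [SPLIT_FILES[name] for name in names]


def validates_every_epoch(split: str) -> bool:
    """Per-epoch validation only makes sense when val data is not used for training."""
    return split == "train"


for s in ["train", "train+val", "train+val+vg"]:
    print(s, len(resolve_split(s)), validates_every_epoch(s))
```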

Multi-GPU Training and Gradient Accumulation

We recommend using a GPU with at least 8GB of memory. If you don't have such a device, we provide two workarounds:

- Multi-GPU Training:

  If you want to accelerate training or train the model on devices with limited GPU memory, you can use more than one GPU:

  Add --GPU='0, 1, 2, 3...'. The batch size on each GPU will be adjusted to BATCH_SIZE/#GPUs automatically.

- Gradient Accumulation:

  If you only have a single GPU with less than 8GB of memory, you can use gradient accumulation during training instead:

  Add --ACCU=n. This makes the optimizer accumulate gradients over n small batches and then update the model weights at once. Note that BATCH_SIZE must be divisible by n for this mode to run correctly.
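Why gradient accumulation gives the same update as one large batch can be shown without any framework. The sketch below uses a toy scalar linear model with mean squared error (an assumption chosen for illustration, not the MCAN loss): splitting a batch into ACCU equal sub-batches and summing each sub-batch's mean gradient scaled by 1/ACCU reproduces the full-batch gradient, which is also why BATCH_SIZE must be divisible by ACCU. In PyTorch the same pattern is usually written as calling backward() on each sub-batch loss divided by n, then a single optimizer.step() and optimizer.zero_grad(); this is the general technique, not necessarily the exact code in this repository.

```python
def grad_mse(w, xs, ys):
    """Gradient w.r.t. w of the mean squared error of y_hat = w * x over a batch."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w, xs, ys, accu):
    """Accumulate gradients over `accu` equal sub-batches of the full batch."""
    batch_size = len(xs)
    if batch_size % accu != 0:
        raise ValueError("BATCH_SIZE must be divisible by ACCU")
    sub = batch_size // accu
    total = 0.0
    for i in range(accu):
        lo, hi = i * sub, (i + 1) * sub
        # Scale by 1/accu so the summed gradient equals the full-batch mean gradient.
        total += grad_mse(w, xs[lo:hi], ys[lo:hi]) / accu
    return total


def sgd_step(w, xs, ys, lr=0.01, accu=1):
    """One weight update, applied only after all sub-batch gradients are accumulated."""
    return w - lr * accumulated_grad(w, xs, ys, accu)


xs = [float(i) for i in range(8)]   # BATCH_SIZE = 8
ys = [2.0 * x + 1.0 for x in xs]
full = grad_mse(0.5, xs, ys)
accum = accumulated_grad(0.5, xs, ys, accu=4)
print(abs(full - accum) < 1e-9)  # True
```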

Validation and Testing

Warning: If you trained the model with the --MODEL argument or with multi-GPU training, the same settings must also be used for evaluation.

Offline Evaluation

Offline evaluation only supports the VQA 2.0 val split. To evaluate on the VQA 2.0 test-dev or test-std split, see Online Evaluation.

There are two ways to start:

(Recommended)

$ python3 run.py --RUN='val' --CKPT_V=str --CKPT_E=int

or use the absolute path instead:

$ python3 run.py --RUN='val' --CKPT_PATH=str
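The val score reported by offline evaluation is, presumably, the standard VQA accuracy: a predicted answer counts as fully correct if at least 3 human annotators gave it, and the official metric averages min(1, matches/3) over the 10 leave-one-annotator-out subsets. The sketch below is a simplified reimplementation for illustration, not the repository's or the official evaluation code; it omits the answer normalization (punctuation, articles, number words) that the official script applies, so its scores may differ slightly.

```python
from typing import Dict, List, Sequence


def vqa_accuracy(pred: str, gt_answers: Sequence[str]) -> float:
    """Accuracy of one predicted answer against the human answers (10 in VQA-v2).

    For each annotator, compare against the remaining annotators and score
    min(1, matches / 3); the final score is the average over annotators.
    """
    gts = list(gt_answers)
    scores = []
    for i in range(len(gts)):
        others = gts[:i] + gts[i + 1:]
        matches = sum(1 for a in others if a == pred)
        scores.append(min(1.0, matches / 3.0))
    return sum(scores) / len(scores)


def overall_accuracy(preds: Dict[int, str], gts: Dict[int, List[str]]) -> float:
    """Mean per-question accuracy in percent, keyed by question_id."""
    return 100.0 * sum(vqa_accuracy(preds[q], gts[q]) for q in gts) / len(gts)


gts = {1: ["red"] * 3 + ["blue"] * 7, 2: ["yes"] * 10}
preds = {1: "red", 2: "yes"}
print(round(overall_accuracy(preds, gts), 2))  # 95.0
```

Note that an answer given by exactly 3 of 10 annotators scores 0.9 rather than 1.0 under this averaging.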

Online Evaluation

Evaluation on both the VQA 2.0 test-dev and test-std splits is run as follows:

$ python3 run.py --RUN='test' --CKPT_V=str --CKPT_E=int

Result files are stored in results/result_test/result_run_<'PATH+random number' or 'VERSION+EPOCH'>.json

You can upload the resulting JSON file to EvalAI to obtain the scores on the test-dev and test-std splits.
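Before uploading, a quick structural check can catch malformed files. This sketch assumes the result file follows the standard VQA submission format, a JSON list of {"question_id": int, "answer": str} objects; the helper names are hypothetical and the check does not verify that every test question is covered.

```python
import json
from typing import Any


def validate_results(results: Any) -> int:
    """Check a loaded result list and return the number of answered questions."""
    if not isinstance(results, list):
        raise ValueError("expected a JSON list of results")
    seen = set()
    for i, item in enumerate(results):
        if not isinstance(item, dict) or "question_id" not in item or "answer" not in item:
            raise ValueError(f"entry {i} lacks 'question_id' or 'answer'")
        if not isinstance(item["question_id"], int) or not isinstance(item["answer"], str):
            raise ValueError(f"entry {i} has a wrongly typed field")
        if item["question_id"] in seen:
            raise ValueError(f"duplicate question_id {item['question_id']}")
        seen.add(item["question_id"])
    return len(seen)


def validate_result_file(path: str) -> int:
    """Load a result JSON file (e.g. from results/result_test/) and validate it."""
    with open(path) as f:
        return validate_results(json.load(f))


sample = [{"question_id": 1, "answer": "yes"}, {"question_id": 2, "answer": "2"}]
print(validate_results(sample))  # 2
```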

Pretrained models

We provide two pretrained models, namely the small model and the large model. The small model corresponds to the one described in our paper, with slightly higher performance (its overall accuracy on the test-dev split is 70.63% in our paper) due to different PyTorch versions. The large model uses a 2x larger HIDDEN_SIZE=1024 compared to the small model's HIDDEN_SIZE=512.

The accuracies of the two models on the test-dev split are reported as follows:

| Model | Overall (%) | Yes/No (%) | Number (%) | Other (%) |
|---|---|---|---|---|
| Small | 70.70 | 86.91 | 53.42 | 60.75 |
| Large | 70.93 | 87.39 | 52.78 | 60.98 |

These two models can be downloaded from OneDrive or BaiduYun; unzip them and place them in the following folders:

|-- ckpts
    |-- ckpt_small
    |   |-- epoch13.pkl
    |-- ckpt_large
    |   |-- epoch13.pkl

Set --CKPT_V={'small', 'large'} --CKPT_E=13 for testing or resuming training; details can be found in Training and Validation and Testing.

Citation

If this repository is helpful for your research, we'd really appreciate it if you could cite the following paper:

@inProceedings{yu2019mcan,
  author = {Yu, Zhou and Yu, Jun and Cui, Yuhao and Tao, Dacheng and Tian, Qi},
  title = {Deep Modular Co-Attention Networks for Visual Question Answering},
  booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  pages = {6281--6290},
  year = {2019}
}