mlajtos / Moniel


⚠️ Notice

L1: Tensor Studio - a more practical continuation of the ideas presented in Moniel.

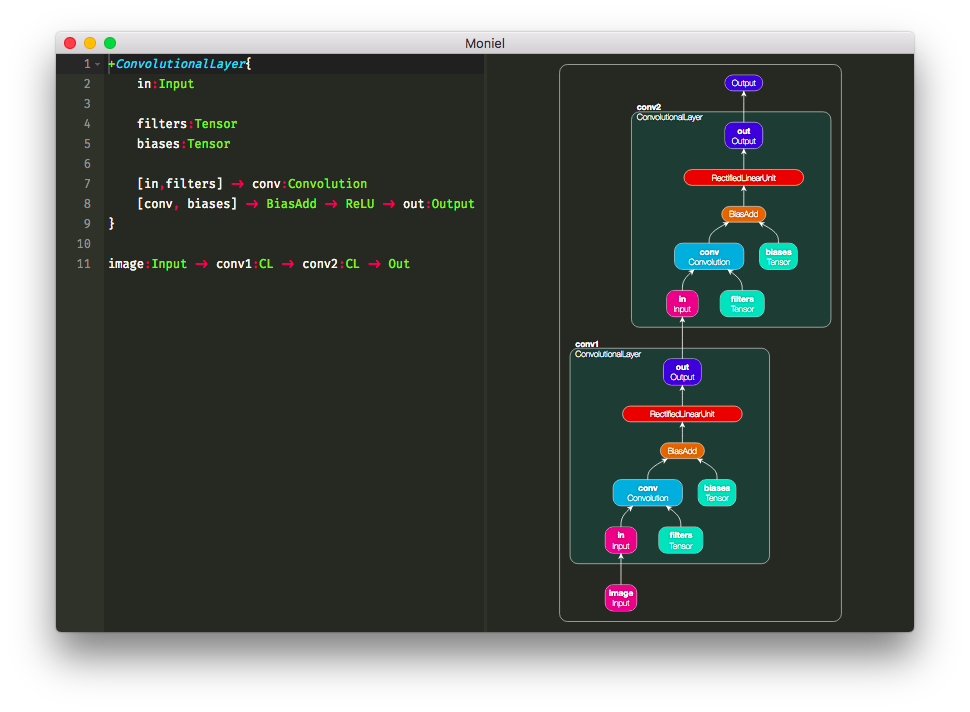

Moniel: Notation for Computational Graphs

Human-friendly declarative dataflow notation for computational graphs. See video.

Pre-built packages

macOS

Moniel.dmg (77MB)

Setup for other platforms

$ git clone https://github.com/mlajtos/moniel.git

$ cd moniel

$ npm install

$ npm start

Quick Introduction

Moniel is one of many attempts at creating a notation for deep learning models leveraging graph thinking. Instead of defining computation as a list of formulas, we define the model as a declarative dataflow graph. It is not a programming language, just a convenient notation. (Which will be executable. Wanna help?)

Note: Proper syntax highlighting is not available here on GitHub. Use the application for the best experience.

Let's start with nothing, i.e. comments:

// This is line comment.

/*

This is block

comment.

*/

A node can be created by stating its type:

Sigmoid

You don't have to write the full name of a type. Use whatever abbreviation suits you! These are all equivalent:

LocalResponseNormalization // canonical, but too long

LocRespNorm // weird, but why not?

LRN // cryptic for beginners, enough for others
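How an abbreviation gets matched to a full type name is not spelled out here. One plausible rule, sketched below in Python purely as an assumption, is case-sensitive subsequence matching: an abbreviation fits a type if its characters appear in the type name in the same order. The `KNOWN_TYPES` list and the `resolve` helper are hypothetical and not taken from Moniel.

```python
# Hypothetical list of canonical type names (not taken from Moniel's source).
KNOWN_TYPES = [
    "LocalResponseNormalization",
    "Sigmoid",
    "MaxPooling",
    "BatchNormalization",
]

def is_subsequence(abbrev, name):
    """True if the characters of abbrev occur in name in the same order."""
    remaining = iter(name)
    # `ch in remaining` consumes the iterator up to and including the match.
    return all(ch in remaining for ch in abbrev)

def resolve(abbrev, known_types=KNOWN_TYPES):
    """Return every known type the abbreviation could stand for."""
    return [t for t in known_types if is_subsequence(abbrev, t)]

print(resolve("LRN"))
print(resolve("LocRespNorm"))
print(resolve("Sigm"))
```

An abbreviation that fits more than one type would be ambiguous under this rule, so a real resolver needs some tie-breaking; that part is left out of the sketch.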

Nodes connect to other nodes with an arrow:

Sigmoid -> MaxPooling

A chain can be of any length:

LRN -> Sigm -> BatchNorm -> ReLU -> Tanh -> MP -> Conv -> BN -> ELU

Also, there can be multiple chains:

ReLU -> BN

LRN -> Conv -> MP

Sigm -> Tanh
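To make the graph reading concrete, here is a minimal Python sketch that turns arrow chains into a list of edges. It is only an illustration: `chain_edges` is a made-up helper, and the sketch works on the labels as written without assigning unique identities to nodes.

```python
def chain_edges(chain):
    """Split 'A -> B -> C' into [('A', 'B'), ('B', 'C')]."""
    nodes = [part.strip() for part in chain.split("->")]
    return list(zip(nodes, nodes[1:]))

source = """
ReLU -> BN
LRN -> Conv -> MP
Sigm -> Tanh
"""

# Each non-empty line is an independent chain; collect all of their edges.
edges = []
for line in source.splitlines():
    if line.strip():
        edges.extend(chain_edges(line))

for src, dst in edges:
    print(src, "->", dst)
```

A chain of n nodes contributes n - 1 edges, so the three chains above yield four edges in total.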

Nodes can have identifiers:

conv:Convolution

Identifiers let you refer to nodes that are used more than once:

// inefficient declaration of matrix-matrix multiplication

matrix1:Tensor

matrix2:Tensor

mm:MatrixMultiplication

matrix1 -> mm

matrix2 -> mm

However, this can be rewritten without identifiers using a list:

[Tensor,Tensor] -> MatMul

Lists let you easily declare multiple connections:

// Maximum of 3 random numbers

[Random,Random,Random] -> Maximum

List-to-list connections are sometimes really handy:

// Range of 3 random numbers

[Rand,Rand,Rand] -> [Max,Min] -> Sub -> Abs
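The comment suggests that a list-to-list arrow connects every node on the left to every node on the right, so both `Max` and `Min` see all three random numbers. Assuming that all-to-all reading, the edges can be sketched in Python as below; the `connect` helper and the `#1`, `#2`, `#3` suffixes are illustrative only.

```python
from itertools import product

def connect(sources, targets):
    """Connect every source to every target (assumed all-to-all semantics)."""
    return list(product(sources, targets))

# Suffixes keep the three anonymous Rand nodes apart; they are illustrative only.
rands = ["Rand#1", "Rand#2", "Rand#3"]
edges = (
    connect(rands, ["Max", "Min"])
    + connect(["Max", "Min"], ["Sub"])
    + connect(["Sub"], ["Abs"])
)

def value_range(xs):
    """Numeric counterpart of the graph: |max(xs) - min(xs)|."""
    return abs(max(xs) - min(xs))

print(len(edges))
print(value_range([3, 7, 5]))
```

Under this reading the one-line declaration stands for nine edges: six into `Max` and `Min`, two into `Sub`, and one into `Abs`.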

Nodes can take named attributes that modify their behavior:

Fill(shape = 10x10x10, value = 1.0)

Attribute names can also be shortened:

Ones(s=10x10x10)
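As a rough Python analogue of what these attributes mean, the sketch below parses a shape literal, expands a shortened attribute name, and builds the filled tensor as nested lists. Matching attribute names by prefix is an assumption, and all three helper names are made up for illustration.

```python
def parse_shape(literal):
    """Turn a shape literal such as '10x10x10' into a tuple of ints."""
    return tuple(int(dim) for dim in literal.split("x"))

def expand_attribute(short, full_names):
    """Resolve a shortened attribute name by prefix; must match exactly one name."""
    matches = [name for name in full_names if name.startswith(short)]
    if len(matches) != 1:
        raise ValueError(f"{short!r} matches {matches}, expected exactly one name")
    return matches[0]

def fill(shape, value):
    """Build a nested list of the given shape with every element set to value."""
    if not shape:
        return value
    return [fill(shape[1:], value) for _ in range(shape[0])]

shape = parse_shape("10x10x10")
tensor = fill(shape, 1.0)
print(shape, expand_attribute("s", ["shape", "value"]))
```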

Defining large graphs without proper structuring is unmanageable. Metanodes can help:

layer:{

RandomNormal(shape=784x1000) -> weights:Variable

weights -> dp:DotProduct -> act:ReLU

}

Tensor -> layer/dp // feed input into the DotProduct of the "layer" metanode

layer/act -> Softmax // feed output of the "layer" metanode into another node
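Paths like `layer/dp` behave much like paths in a nested namespace. A minimal Python sketch of that idea, assuming a metanode can be modelled as a dictionary from identifiers to nodes (the `graph` structure and `lookup` helper are hypothetical):

```python
# The "layer" metanode from above as a nested dict: identifier -> node type.
graph = {
    "layer": {
        "weights": "Variable",
        "dp": "DotProduct",
        "act": "ReLU",
    }
}

def lookup(scope, path):
    """Follow a slash-separated path such as 'layer/dp' through nested scopes."""
    node = scope
    for part in path.split("/"):
        node = node[part]  # raises KeyError if the identifier is unknown
    return node

print(lookup(graph, "layer/dp"))
print(lookup(graph, "layer/act"))
```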

Metanodes are more powerful when they define a proper Input-Output boundary:

layer1:{

RandomNormal(shape=784x1000) -> weights:Variable

[in:Input,weights] -> DotProduct -> ReLU -> out:Output

}

layer2:{

RandomNormal(shape=1000x10) -> weights:Variable

[in:Input,weights] -> DotProduct -> ReLU -> out:Output

}

// connect metanodes directly

layer1 -> layer2
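Chaining the two layers only works because the weight shapes line up: the 784x1000 weights produce 1000 features, which is what the 1000x10 weights expect as input. The Python sketch below checks that arithmetic; `output_width` is a made-up helper, and nothing here implies that Moniel itself performs such a check.

```python
def output_width(input_width, weight_shapes):
    """Walk a stack of (rows, cols) weight shapes and return the final width."""
    width = input_width
    for rows, cols in weight_shapes:
        if rows != width:
            raise ValueError(f"expected {width} rows, got weights of shape {rows}x{cols}")
        width = cols  # a dot product with rows x cols weights yields cols features
    return width

# layer1 uses 784x1000 weights, layer2 uses 1000x10 weights.
print(output_width(784, [(784, 1000), (1000, 10)]))
```

Swapping the two layers would fail the check, because 784 input features cannot be multiplied with 1000x10 weights.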

Alternatively, you can use inline metanodes:

In -> layer:{[In,Tensor] -> Conv -> Out} -> Out

You can also leave out the name:

In -> {[In,Tensor] -> Conv -> Out} -> Out

If metanodes have identical structure, we can create a reusable metanode and use it as a normal node:

+ReusableLayer(shape = 1x1){

RandN(shape = shape) -> w:Var

[in:In,w] -> DP -> RLU -> out:Out

}

RL(s = 784x1000) -> RL(s = 1000x10)
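A reusable metanode acts much like a function that stamps out a fresh copy of a subgraph each time it is used. The Python sketch below illustrates that idea under assumptions: the `reusable_layer` function, the instance names `rl1` and `rl2`, and the slash-prefixed node names are all hypothetical.

```python
def reusable_layer(name, shape=(1, 1)):
    """Instantiate one copy of the layer; returns (nodes, edges) with scoped names."""
    p = name + "/"
    nodes = {
        p + "RandN": {"shape": shape},
        p + "w": {}, p + "in": {}, p + "DP": {}, p + "RLU": {}, p + "out": {},
    }
    edges = [
        (p + "RandN", p + "w"),   # RandN(shape = shape) -> w:Var
        (p + "in", p + "DP"),     # [in:In,w] -> DP
        (p + "w", p + "DP"),
        (p + "DP", p + "RLU"),    # DP -> RLU -> out:Out
        (p + "RLU", p + "out"),
    ]
    return nodes, edges

nodes1, edges1 = reusable_layer("rl1", shape=(784, 1000))
nodes2, edges2 = reusable_layer("rl2", shape=(1000, 10))

# Chaining the instances joins the output of the first to the input of the second.
edges = edges1 + edges2 + [("rl1/out", "rl2/in")]
print(len(edges))
```

Prefixing node names with the instance name keeps the two copies separate, so each instance gets its own weights.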

Similar projects and Inspiration

- Piotr Migdał: Simple Diagrams of Convoluted Neural Networks – great summary on visualizing ML architectures

- Lobe (video) – "Build, train, and ship custom deep learning models using a simple visual interface."

- Serrano – "A graph computation framework with Accelerate and Metal support."

- Subgraphs – "Subgraphs is a visual IDE for developing computational graphs."

- 💀Machine – "Machine is a machine learning IDE."

- PyTorch – "Tensors and Dynamic neural networks in Python with strong GPU acceleration."

- Sonnet – "Sonnet is a library built on top of TensorFlow for building complex neural networks."

- TensorGraph – "TensorGraph is a framework for building any imaginable models based on TensorFlow"

- nngraph – "graphical computation for nn library in Torch"

- DNNGraph – "a deep neural network model generation DSL in Haskell"

- NNVM – "Intermediate Computational Graph Representation for Deep Learning Systems"

- DeepRosetta – "An universal deep learning models conversor"

- TensorBuilder – "a functional fluent immutable API based on the Builder Pattern"

- Keras – "minimalist, highly modular neural networks library"

- PrettyTensor – "a high level builder API"

- TF-Slim – "a lightweight library for defining, training and evaluating models"

- TFLearn – "modular and transparent deep learning library"

- Caffe – "deep learning framework made with expression, speed, and modularity in mind"