Multiverse

This repository contains the code and models for the following CVPR'20 paper:

The Garden of Forking Paths: Towards Multi-Future Trajectory Prediction

Junwei Liang, Lu Jiang, Kevin Murphy, Ting Yu, Alexander Hauptmann

You can find more information at our Project Page and the blog.

The SimAug (ECCV'20) project is here.

If you find this code useful in your research, please cite:

@inproceedings{liang2020garden,
  title={The Garden of Forking Paths: Towards Multi-Future Trajectory Prediction},
  author={Liang, Junwei and Jiang, Lu and Murphy, Kevin and Yu, Ting and Hauptmann, Alexander},
  booktitle={The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month={June},
  year={2020}
}

@inproceedings{liang2020simaug,
  title={SimAug: Learning Robust Representations from Simulation for Trajectory Prediction},
  author={Liang, Junwei and Jiang, Lu and Hauptmann, Alexander},
  booktitle={Proceedings of the European Conference on Computer Vision (ECCV)},
  month={August},
  year={2020}
}

Introduction

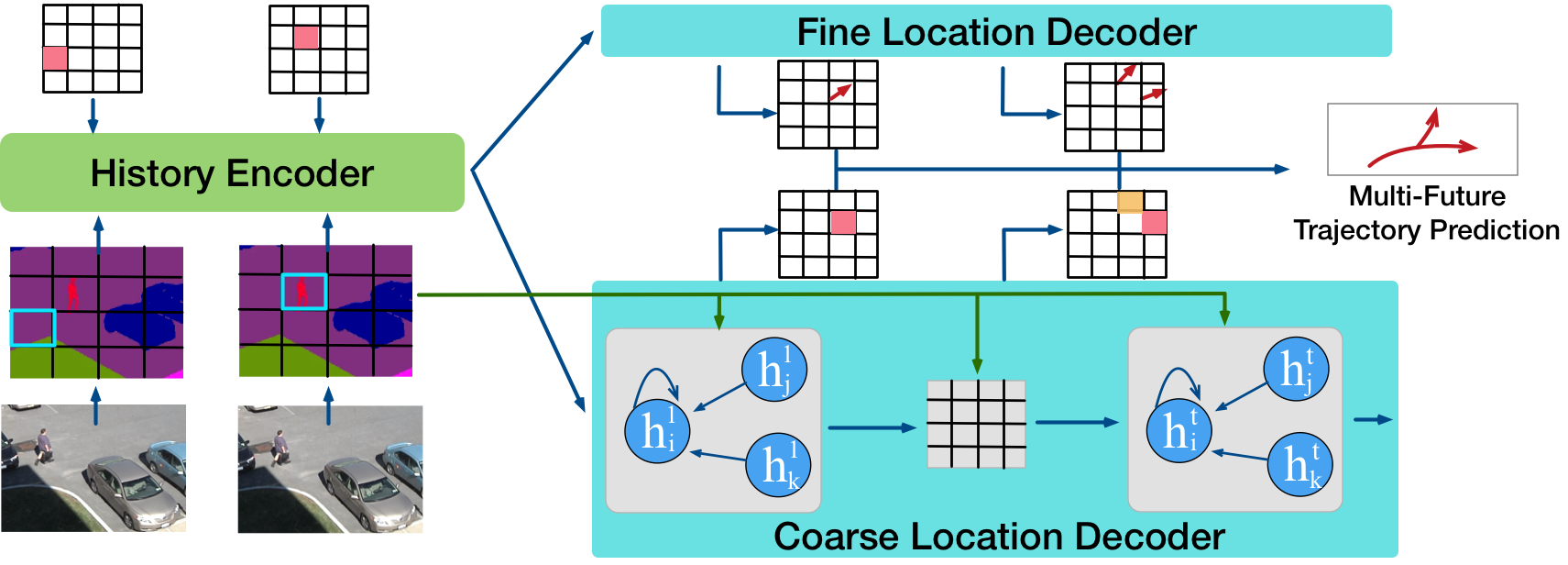

This paper proposes the first multi-future pedestrian trajectory prediction dataset and a multi-future prediction method called Multiverse.

This paper studies the problem of predicting the distribution over multiple possible future paths of people as they move through various visual scenes. We make two main contributions. The first is a new dataset called the Forking Paths Dataset. It is created in a realistic 3D simulator from real-world trajectory data, which human annotators then extrapolate to reach different latent goals. This provides the first benchmark for quantitative evaluation of models that predict multi-future trajectories.

The Forking Paths Dataset

- Current dataset version: v1

- Download links: Google Drive (sha256sum) / Baidu Pan (extraction code: tpd7)

- The dataset includes 3000 1920x1080 videos (750 human-annotated trajectory samples in 4 camera views) with bounding boxes and scene semantic segmentation ground truth. More notes and instructions about the dataset can be found here.

- Instructions for adding more human annotations, editing the scenes, recreating scenarios from real-world videos, or simply playing with the 3D simulator can be found here.

- Instructions for semi-automatically recreating real-world videos' scenarios in 3D simulation using homography matrices can be found here.
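As a rough illustration of the homography step mentioned above, the sketch below maps 2D points through a 3x3 homography matrix. It is not taken from this repository: the function name `apply_homography`, the matrix `H_EXAMPLE`, and the pixel-to-ground-plane direction are all assumptions made for the example. Use the matrices and conventions described in the linked instructions for real data.

```python
def apply_homography(h, x, y):
    """Map the point (x, y) through a 3x3 homography h (nested row-major lists)."""
    xh = h[0][0] * x + h[0][1] * y + h[0][2]
    yh = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this homography")
    # Divide by the homogeneous coordinate to get back to 2D.
    return xh / w, yh / w


# Hypothetical matrix (an assumption, not a dataset value): scales pixel
# coordinates by 0.05 and shifts the origin.
H_EXAMPLE = [
    [0.05, 0.0, -10.0],
    [0.0, 0.05, -5.0],
    [0.0, 0.0, 1.0],
]

# A short trajectory in pixel coordinates of a 1920x1080 frame.
trajectory_px = [(960.0, 540.0), (980.0, 560.0)]
trajectory_plane = [apply_homography(H_EXAMPLE, x, y) for x, y in trajectory_px]
```

Each trajectory point is transformed independently, so the same function can be applied to every frame of an annotated path.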

The Multiverse Model

Our second contribution is a new model that generates multiple plausible future trajectories. Its novel design elements are multi-scale location encodings and convolutional RNNs over graphs. We refer to our model as Multiverse.
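To make the idea of a multi-scale location encoding more concrete, here is a minimal sketch of one plausible reading: a location is expressed as a grid-cell index plus an offset from that cell's center, computed at several grid resolutions. This is not the repository's implementation. The function names, the 1920x1080 frame size default, and the grid sizes in `scales` are assumptions chosen for illustration.

```python
def encode_location(x, y, frame_w, frame_h, grid_w, grid_h):
    """Return (cell_index, (dx, dy)) for a point on a grid_w x grid_h grid.

    cell_index is the row-major index of the cell containing the point;
    (dx, dy) is the point's offset from that cell's center, in pixels.
    """
    # Clamp so points on or beyond the frame border still fall in a valid cell.
    col = max(0, min(int(x * grid_w / frame_w), grid_w - 1))
    row = max(0, min(int(y * grid_h / frame_h), grid_h - 1))
    center_x = (col + 0.5) * frame_w / grid_w
    center_y = (row + 0.5) * frame_h / grid_h
    return row * grid_w + col, (x - center_x, y - center_y)


def encode_multi_scale(x, y, frame_w=1920, frame_h=1080,
                       scales=((36, 18), (18, 9))):
    """Encode one location at every grid scale (the scales are assumed values)."""
    return [encode_location(x, y, frame_w, frame_h, gw, gh) for gw, gh in scales]


# One pixel location encoded at a fine and a coarse grid.
encodings = encode_multi_scale(100.0, 100.0)
```

The coarse grid gives a rough position that is easy to classify, while the finer grid and the per-cell offsets recover precision.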

Dependencies

- Python 2/3; TensorFlow-GPU >= 1.15.0

Pretrained Models

You can download the pretrained models by running the following script:

bash scripts/download_single_models.sh

Testing and Visualization

Instructions for testing pretrained models can be found here.

Training new models

Instructions for training new models can be found here.

Acknowledgments

The Forking Paths Dataset was created using the CARLA Simulator and Unreal Engine 4.