ronggong / Musical Onset Efficient


An efficient deep learning model for musical onset detection

This repo contains code and supplementary information for the paper:

Towards an efficient deep learning model for musical onset detection

The code aims to:

- reproduce the experimental results in this work.

- help retrain the onset detection models mentioned in this work for your own dataset.

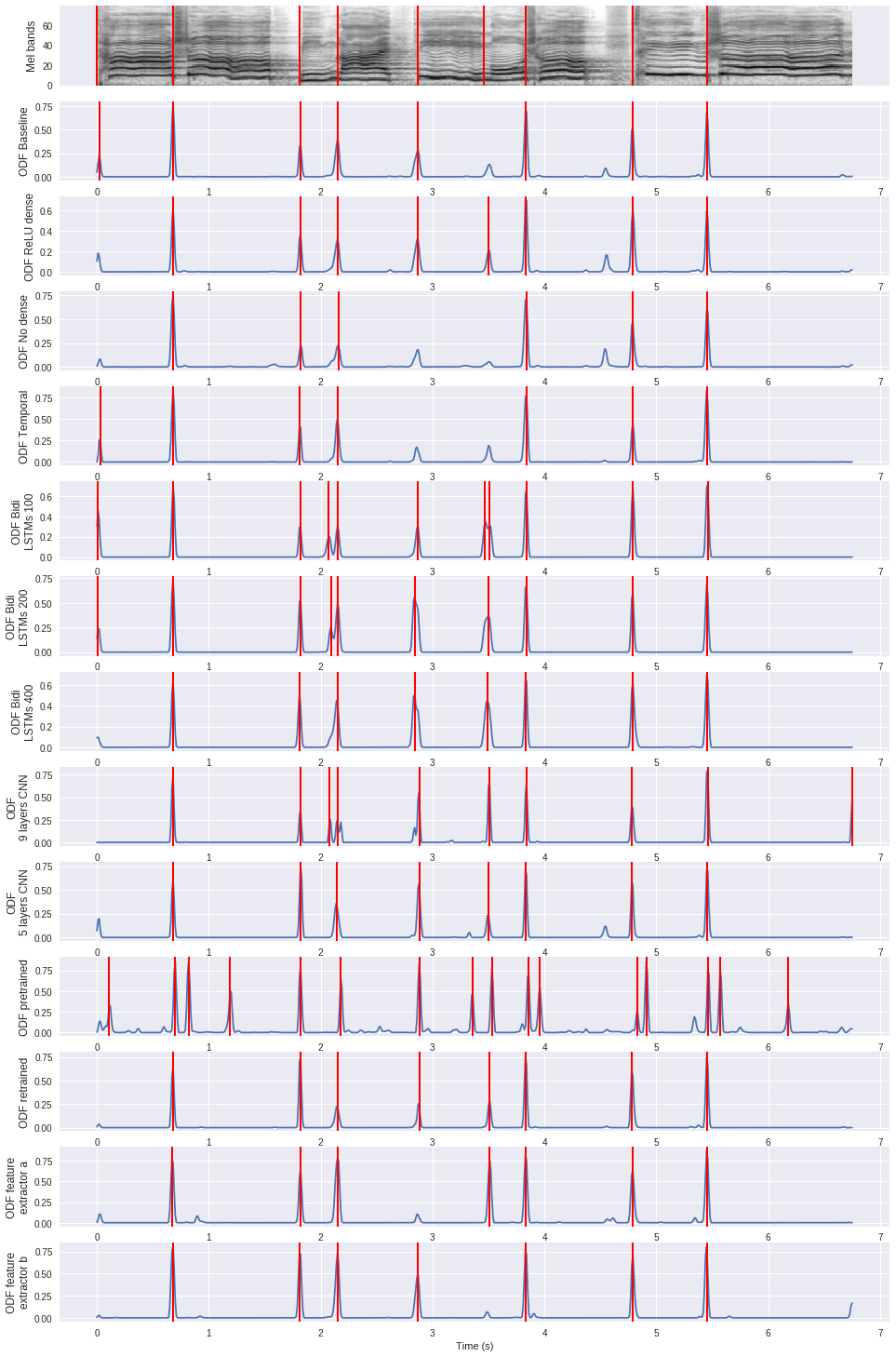

Below is a plot of the onset detection functions evaluated in the paper.

- Red lines in the Mel bands plot are the ground-truth syllable onset positions;

- red lines in the other plots are the onset positions detected by the peak-picking onset selection method.
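To illustrate what peak-picking onset selection does, here is a minimal sketch that thresholds an onset detection function (ODF) and keeps local maxima separated by a minimum distance. It is a simplified stand-in, not madmom's peak-picking implementation used in the paper. The frame rate (100 fps), threshold and minimum distance are illustrative assumptions, and `pick_peaks` is a hypothetical helper.

```python
from typing import List


def pick_peaks(odf: List[float], threshold: float, fps: float = 100.0,
               min_distance_s: float = 0.03) -> List[float]:
    """Return onset times (seconds) at local maxima of `odf` above `threshold`.

    Simplified peak picking (assumed parameters): a frame is a peak if it is
    >= both neighbours and exceeds the threshold; a peak within
    `min_distance_s` of the previously accepted peak is dropped.
    """
    min_gap = int(round(min_distance_s * fps))  # minimum distance in frames
    onsets: List[float] = []
    last_frame = -min_gap - 1
    for i, value in enumerate(odf):
        left = odf[i - 1] if i > 0 else float("-inf")
        right = odf[i + 1] if i + 1 < len(odf) else float("-inf")
        is_local_max = value >= left and value >= right
        if value > threshold and is_local_max and i - last_frame > min_gap:
            onsets.append(i / fps)  # frame index -> time in seconds
            last_frame = i
    return onsets


# A toy ODF with two clear peaks at frames 3 and 10.
odf = [0.0, 0.1, 0.4, 0.9, 0.3, 0.1, 0.0, 0.2, 0.5, 0.7, 0.95, 0.2]
print(pick_peaks(odf, threshold=0.5))  # [0.03, 0.1]
```

In practice the threshold matters a lot; the result files described in B.2 record the best threshold searched on the holdout test set.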

For an interactive code demo that generates this plot and lets you explore our work, please check our Jupyter notebook. You should be able to "open with" Google Colaboratory in your Google Drive, then "open in playground" to execute it block by block. The code of the demo is in the colab_demo branch.

Contents

- A.1 Install dependencies

- A.2 Reproduce the experiment results with pretrained models

- A.3 General code for training data extraction

- A.4 Specific code for jingju and Böck datasets training data extraction

- A.5 Train the models using the training data

- B.1 Pretrained models

- B.2 Full results (precision, recall, F1)

- B.3 Statistical significance calculation data

- B.4 Loss curves (section 5.1 in the paper)

A. Code usage

A.1 Install dependencies

We suggest installing the dependencies in a virtualenv:

pip install -r requirements.txt

A.2 Reproduce the experiment results with pretrained models

- Download dataset: jingju; the Böck dataset is available on request (please send an email).

- Change `nacta_dataset_root_path` and `nacta2017_dataset_root_path` in `./src/file_path_jingju_shared.py` to your local jingju dataset path.

- Change `bock_dataset_root_path` in `./src/file_path_bock.py` to your local Böck dataset path.

- Download the pretrained models and put them into the `./pretrained_models` folder.

- Execute the command below to reproduce the jingju or Böck dataset results:

python reproduce_experiment_results.py -d <string> -a <string>

- `-d`: dataset. It can be `jingju` or `bock`.

- `-a`: architecture. It can be chosen from `baseline`, `relu_dense`, `no_dense`, `temporal`, `bidi_lstms_100`, `bidi_lstms_200`, `bidi_lstms_400`, `9_layers_cnn`, `5_layers_cnn`, `pretrained`, `retrained`, `feature_extractor_a`, `feature_extractor_b`. Please read the paper to decide which experiment result you want to reproduce.
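If you want to reproduce every architecture for one dataset in one go, a small wrapper can loop over the `-a` values listed above. This is a convenience sketch, not part of the repository: `build_commands` and `run_all` are hypothetical helpers, it assumes you run it from the repository root where `reproduce_experiment_results.py` lives, and some architecture/dataset combinations may not be meaningful (check the paper).

```python
import subprocess
import sys
from typing import List

# Architecture names as listed for the -a option of reproduce_experiment_results.py.
ARCHITECTURES = [
    "baseline", "relu_dense", "no_dense", "temporal",
    "bidi_lstms_100", "bidi_lstms_200", "bidi_lstms_400",
    "9_layers_cnn", "5_layers_cnn", "pretrained", "retrained",
    "feature_extractor_a", "feature_extractor_b",
]


def build_commands(dataset: str) -> List[List[str]]:
    """Build one reproduce_experiment_results.py command per architecture."""
    if dataset not in ("jingju", "bock"):
        raise ValueError("dataset must be 'jingju' or 'bock'")
    return [[sys.executable, "reproduce_experiment_results.py", "-d", dataset, "-a", arch]
            for arch in ARCHITECTURES]


def run_all(dataset: str) -> None:
    """Run the commands one after another; stop at the first failing command."""
    for cmd in build_commands(dataset):
        print("Running:", " ".join(cmd))
        subprocess.run(cmd, check=True)
```

For example, `run_all("jingju")` runs the 13 jingju experiments sequentially. Running them one at a time keeps memory usage predictable.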

A.3 General code for training data extraction

If you want to extract the features, labels and sample weights for your own dataset:

- We assume that your training set audio and annotations are stored in the folders `path_audio` and `path_annotation`.

- Your annotations should conform to either the jingju or the Böck annotation format. Jingju annotations are stored in Praat TextGrid files. In our jingju TextGrid annotations, two tiers are parsed: `line` and `dianSilence`. The former contains musical line (phrase) level onsets, and the latter contains syllable level onsets. We assume that you also annotated your audio files in this hierarchical format, with `tier_parent` and `tier_child` corresponding to `line` and `dianSilence`. The Böck dataset is annotated at each onset time; you can check the Böck dataset's annotations in this link.

- Run the command below to extract training data for your dataset:

python ./trainingSetFeatureExtraction/training_data_collection_general.py --audio <path_audio> --annotation <path_annotation> --output <path_output> --annotation_type <string, jingju or bock> --phrase <bool> --tier_parent <string e.g. line> --tier_child <string e.g. dianSilence>

- `--audio`: the audio files path. Audio needs to be in 44.1 kHz .wav format.

- `--annotation`: the annotation path.

- `--output_path`: where to store the output training data.

- `--annotation_type`: `jingju` or `bock`, the type of annotation provided to the algorithm.

- `--phrase`: whether to extract the features at file level (one feature file per audio file). If false, you will get a single feature file for the entire input folder.

- `--tier_parent`: the parent tier, e.g. `line`; only needed for the jingju annotation type.

- `--tier_child`: the child tier, e.g. `dianSilence`; only needed for the jingju annotation type.
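To make the parent/child tier relation concrete, the sketch below groups syllable onsets (child tier) under the phrase (parent tier) that contains them. It assumes the tiers have already been read from the TextGrid into lists of `(start, end, label)` tuples, for example with a TextGrid parsing library. `group_child_onsets` is a hypothetical helper, and the repository's own parsing code may differ, for instance in how empty intervals are treated.

```python
from typing import Dict, List, Tuple

Interval = Tuple[float, float, str]  # (start time in s, end time in s, label)


def group_child_onsets(parent_tier: List[Interval],
                       child_tier: List[Interval]) -> List[Dict]:
    """Group child-tier onsets (e.g. dianSilence syllables) under the
    parent-tier interval (e.g. a `line` phrase) that contains them.

    Intervals with an empty label are treated as unannotated and skipped
    (an assumption). Onset times are returned relative to the phrase start.
    """
    phrases = []
    for p_start, p_end, p_label in parent_tier:
        if not p_label.strip():
            continue
        onsets = [c_start - p_start
                  for c_start, c_end, c_label in child_tier
                  if c_label.strip() and p_start <= c_start and c_end <= p_end]
        phrases.append({"label": p_label, "start": p_start, "end": p_end,
                        "onsets": onsets})
    return phrases


# Toy tiers: one annotated phrase containing three labelled syllables.
line = [(0.0, 1.0, ""), (1.0, 4.0, "phrase1"), (4.0, 5.0, "")]
syllables = [(1.0, 1.5, "a"), (1.5, 2.0, ""), (2.0, 3.5, "b"), (3.5, 4.0, "c")]
print(group_child_onsets(line, syllables))
```

Working per phrase like this is what makes the phrase-level (`--phrase`) extraction possible: each phrase becomes an independent training sequence.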

A.4 Specific code for jingju and Böck datasets training data extraction

If you want to extract the features, labels and sample weights for the jingju and Böck datasets, we provide ready-to-run scripts for this purpose. Note that these scripts are memory-inefficient: they heavily slowed down my computer after the extraction finished. I haven't found a solution to this problem; if you do, please kindly send me an email to tell me how. Thank you.

- Download dataset: jingju; the Böck dataset is available on request (please send an email).

- Change `nacta_dataset_root_path` and `nacta2017_dataset_root_path` in `./src/file_path_jingju_shared.py` to your local jingju dataset path.

- Change `bock_dataset_root_path` in `./src/file_path_bock.py` to your local Böck dataset path.

- Change `feature_data_path` in `./src/file_path_shared.py` to your local output path.

- Execute the commands below to extract training data for the jingju or Böck dataset:

python ./training_set_feature_extraction/training_data_collection_jingju.py --phrase <bool>

python ./training_set_feature_extraction/training_data_collection_bock.py

- `--phrase`: whether to extract the features at file level. If false, you will get a single feature file for the entire input folder. The Böck dataset can only be processed at phrase level.
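The extraction scripts produce frame-wise features, labels and sample weights. As an illustration of the label and weight part only, here is a sketch that converts onset times into frame-wise binary targets and marks the frames adjacent to each onset as positives with a reduced weight, a common scheme in CNN onset detection. The 10 ms hop size and the neighbour weight of 0.25 are assumptions, not values taken from this README; check the paper and `./training_set_feature_extraction` for the actual scheme. `read_onset_file` assumes a Böck-style annotation file with one onset time in seconds per line. Both functions are hypothetical helpers.

```python
from typing import List, Tuple


def read_onset_file(path: str) -> List[float]:
    """Read onset times in seconds, assuming one time per line.
    Blank lines are skipped; only the first column of each line is used."""
    with open(path, encoding="utf-8") as f:
        return [float(line.split()[0]) for line in f if line.strip()]


def frame_labels_and_weights(onsets: List[float], n_frames: int,
                             hop_s: float = 0.01,
                             neighbour_weight: float = 0.25
                             ) -> Tuple[List[int], List[float]]:
    """Frame-wise labels (1 = onset) and sample weights.

    The frame nearest to each onset gets label 1 and weight 1.0; its two
    direct neighbours also get label 1 but only `neighbour_weight`, unless
    they are already positive. All other frames keep label 0 and weight 1.0.
    """
    labels = [0] * n_frames
    weights = [1.0] * n_frames
    for t in onsets:
        centre = int(round(t / hop_s))
        if not 0 <= centre < n_frames:
            continue  # onset lies outside the analysed audio
        for offset in (-1, 1):
            j = centre + offset
            if 0 <= j < n_frames and labels[j] == 0:
                labels[j] = 1
                weights[j] = neighbour_weight
        labels[centre] = 1
        weights[centre] = 1.0  # the onset frame itself always gets full weight
    return labels, weights


labels, weights = frame_labels_and_weights([0.05], n_frames=10)
print(labels)   # [0, 0, 0, 0, 1, 1, 1, 0, 0, 0]
print(weights)  # [1.0, 1.0, 1.0, 1.0, 0.25, 1.0, 0.25, 1.0, 1.0, 1.0]
```

Down-weighting the neighbours softens the penalty for predictions that are off by one frame, which is hard to avoid given annotation imprecision.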

A.5 Train the models using the training data

The scripts below train the models on the training data you extracted in step A.4.

- Extract the jingju or Böck training data by following step A.4.

- Execute the commands below to train the models.

python ./training_scripts/jingju_train.py -a <string, architecture> --path_input <string> --path_output <string> --path_pretrained <string, optional>

python ./training_scripts/bock_train.py -a <string, architecture> --path_input <string> --path_output <string> --path_cv <string> --path_annotation <string> --path_pretrained <string, optional>

- `-a`: the architecture. It can be chosen from `baseline`, `relu_dense`, `no_dense`, `temporal`, `bidi_lstms_100`, `bidi_lstms_200`, `bidi_lstms_400`, `9_layers_cnn`, `5_layers_cnn`, `retrained`, `feature_extractor_a`, `feature_extractor_b`. The `pretrained` model does not need to be trained explicitly because it is the `5_layers_cnn` model trained on the other dataset.

- `--path_input`: the training data path.

- `--path_output`: the model output path.

- `--path_pretrained`: the pretrained model path for the transfer learning experiments.

- `--path_cv`: the path of the 8-fold cross-validation split files; only used for the Böck dataset.

- `--path_annotation`: the annotation path; only used for the Böck dataset.
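For the Böck dataset, `--path_cv` points to the 8-fold cross-validation split files. The sketch below shows how such fold lists are typically turned into train/test splits: fold k is held out for testing and the other seven folds are used for training. It assumes each fold file lists one audio file name per line; `read_fold_file`, `train_test_split_for_fold` and the example file names are hypothetical, and `bock_train.py` may organize this differently (e.g. by also holding out a validation set from the training folds).

```python
from typing import List, Tuple


def read_fold_file(path: str) -> List[str]:
    """Read one file name per line (assumed format), ignoring blank lines."""
    with open(path, encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]


def train_test_split_for_fold(folds: List[List[str]], test_fold: int
                              ) -> Tuple[List[str], List[str]]:
    """Hold out folds[test_fold] for testing; concatenate the others for training."""
    if not 0 <= test_fold < len(folds):
        raise IndexError("test_fold out of range")
    test = list(folds[test_fold])
    train = [name for k, fold in enumerate(folds) if k != test_fold for name in fold]
    return train, test


# Toy example: 8 folds with two hypothetical file names each.
folds = [[f"file_{k}_{i}" for i in range(2)] for k in range(8)]
train, test = train_test_split_for_fold(folds, test_fold=2)
print(len(train), test)  # 14 ['file_2_0', 'file_2_1']
```

Repeating this for `test_fold` 0 to 7 yields the 8 per-fold results used in B.3.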

B. Supplementary information

B.1 Pretrained models

These models have been pretrained on the jingju and Böck datasets. You can put them into the `./pretrained_models` folder to reproduce the experiment results.

B.2 Full results (precision, recall, F1)

In the jingju folder, you will find two result files for each model. Files with the suffix `_peakPickingMadmom` contain the results of the peak-picking onset selection method, and those with the suffix `_viterbi_nolabel` contain the score-informed HMM results. In each file, only the first 5 rows are related to the paper; the others are computed using other evaluation metrics.

`_peakPickingMadmom` first 5 rows format:

| Row | Content |
|---|---|
| 1 | onset selection method |
| 2 | best threshold searched on the holdout test set |
| 3 | Precision |
| 4 | Recall |
| 5 | F1-measure |

`_viterbi_nolabel` first 5 rows format:

| Row | Content |
|---|---|
| 1 | onset selection method |
| 2 | whether we evaluate the label of each onset (no in our case) |
| 3 | Precision |
| 4 | Recall |
| 5 | F1-measure |

In the Böck folder, there is only one file for each model, and its format is:

| Row | Content |
|---|---|
| 1 | best threshold searched on the holdout test set |
| 2 | Recall, Precision, F1-measure |
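The precision, recall and F1-measure in these files are onset-level scores: a detected onset counts as a true positive if it falls within a tolerance window around a not-yet-matched ground-truth onset, and F1 = 2PR / (P + R). A minimal sketch of that computation follows. It is not the repository's evaluation code; the greedy one-to-one matching and the 25 ms default window are assumptions for illustration, and `evaluate_onsets` is a hypothetical helper.

```python
from typing import Dict, List


def evaluate_onsets(detected: List[float], reference: List[float],
                    window: float = 0.025) -> Dict[str, float]:
    """Onset precision, recall and F1 with a +/- `window` (seconds) tolerance.

    Each reference onset can be matched by at most one detection (greedy,
    in time order), so duplicate detections count as false positives.
    """
    detected = sorted(detected)
    reference = sorted(reference)
    matched = [False] * len(reference)
    tp = 0
    for d in detected:
        for j, r in enumerate(reference):
            if not matched[j] and abs(d - r) <= window:
                matched[j] = True
                tp += 1
                break
    fp = len(detected) - tp
    fn = len(reference) - tp
    precision = tp / (tp + fp) if detected else 0.0
    recall = tp / (tp + fn) if reference else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall > 0 else 0.0)
    return {"precision": precision, "recall": recall, "f1": f1}


# 2 true positives, 2 false positives (one duplicate, one spurious), 1 miss.
scores = evaluate_onsets([0.10, 0.52, 0.53, 1.40], [0.11, 0.50, 1.00])
print(scores)
```

Here precision is 2/4, recall is 2/3 and F1 is 4/7; the duplicate detection at 0.53 s is penalized even though it lies close to a true onset.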

B.3 Statistical significance calculation data

The files in this link contain:

- the jingju dataset evaluation results of 5 training runs;

- the Böck dataset evaluation results of 8 folds.

You can download these files and put them into the `./statistical_significance/data` folder. We also provide code for the data parsing and p-value calculation; see `ttest_experiment.py` and `ttest_experiment_transfer.py` for details.
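The provided `ttest_experiment.py` scripts do the actual parsing and testing. As an illustration of the statistic involved, here is a paired t statistic for two models evaluated on the same splits (e.g. the 8 Böck folds). Whether the scripts use a paired or an independent-samples test is not stated in this README, and `paired_t_statistic` is a hypothetical helper that uses only the standard library.

```python
import math
import statistics
from typing import List, Tuple


def paired_t_statistic(a: List[float], b: List[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for matched samples a and b,
    e.g. the F1-measures of two models on the same cross-validation folds."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long samples with at least 2 values")
    diffs = [x - y for x, y in zip(a, b)]
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


t, df = paired_t_statistic([3, 5, 4, 6], [1, 2, 3, 4])
print(t, df)
```

With SciPy installed, `scipy.stats.ttest_rel(a, b)` returns the same statistic together with a two-sided p-value; for runs that are not paired (such as independent training runs), `scipy.stats.ttest_ind` is the corresponding independent-samples test.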

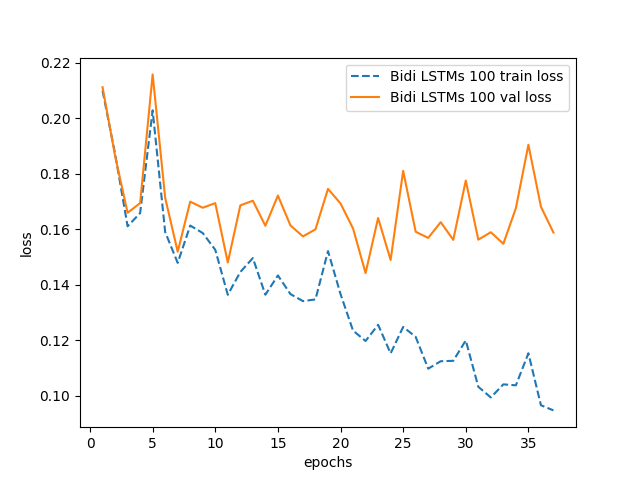

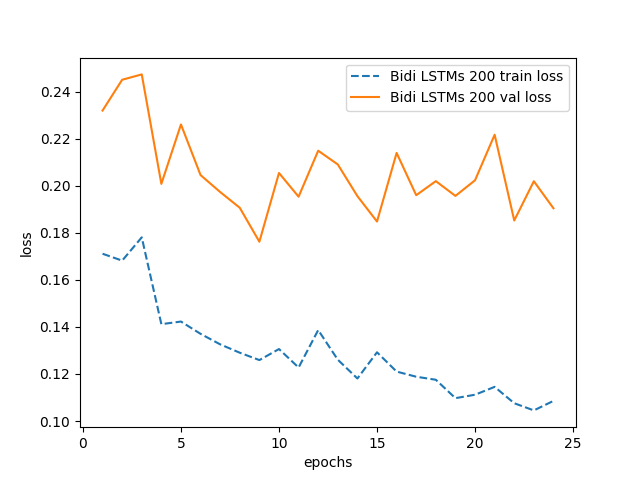

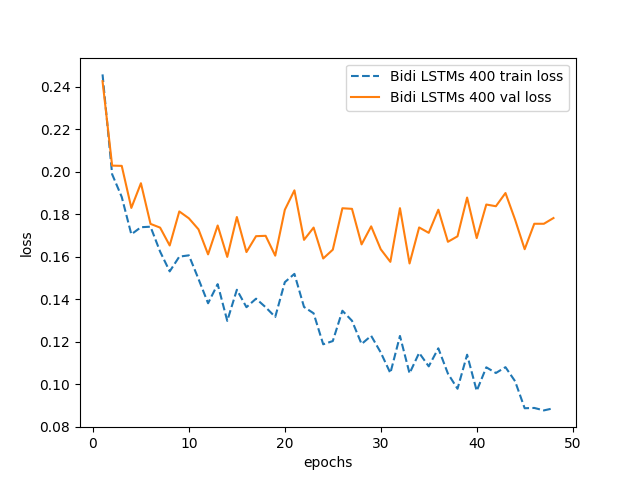

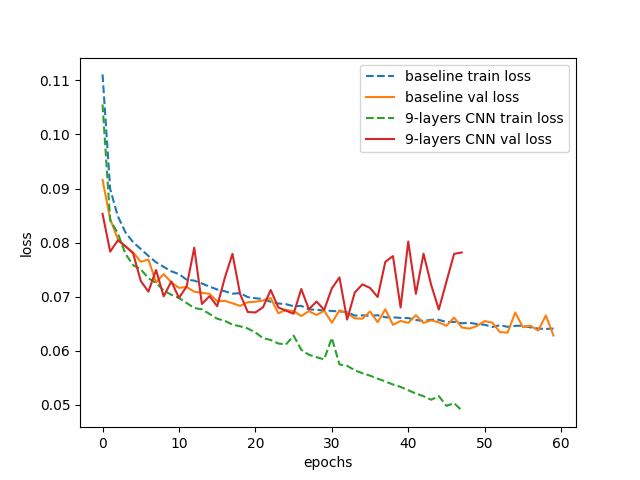

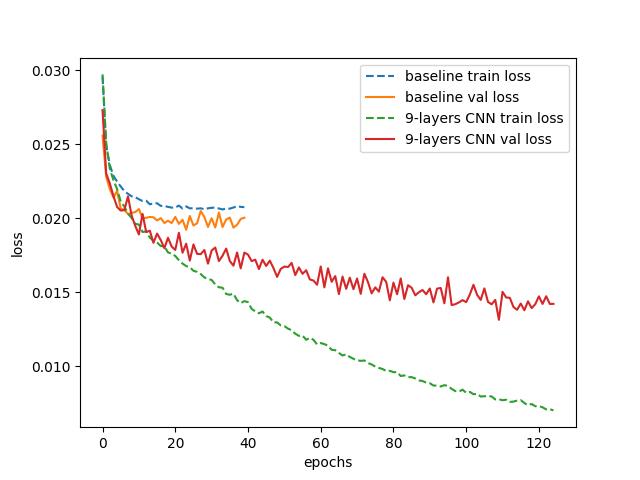

B.4 Loss curves (section 5.1 in the paper)

These loss curves aim to show the overfitting of Bidi LSTMs 100 and 200 models for Böck dataset and 9-layers CNN for both datasets.

Böck dataset Bidi LSTMs 100 losses (3rd fold)

Böck dataset Bidi LSTMs 200 losses (4th fold)

Böck dataset Bidi LSTMs 400 losses (1st fold)

Böck dataset baseline and 9-layers CNN losses (2nd model)

Jingju dataset baseline and 9-layers CNN losses (2nd model)

License

Code

This program is free software: you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with this program. If not, see http://www.gnu.org/licenses/.

Dataset, pretrained models and any other data used in this work (except the Böck dataset)

Creative Commons Attribution-NonCommercial 4.0